NVIDIA and Microsoft today unveiled product integrations designed to advance full-stack NVIDIA AI development on Microsoft platforms and applications.

At Microsoft Ignite, Microsoft announced the launch of the first cloud private preview of the Azure ND GB200 V6 VM series, based on the NVIDIA Blackwell platform. The Azure ND GB200 v6 will be a new AI-optimized virtual machine (VM) series and combines the NVIDIA GB200 NVL72 rack design with NVIDIA Quantum InfiniBand networking.

In addition, Microsoft revealed that Azure Container Apps now supports NVIDIA GPUs, enabling simplified and scalable AI deployment. Plus, the NVIDIA AI platform on Azure includes new reference workflows for industrial AI and an NVIDIA Omniverse Blueprint for creating immersive, AI-powered visuals.

At Ignite, NVIDIA also announced multimodal small language models (SLMs) for RTX AI PCs and workstations, enhancing digital human interactions and virtual assistants with greater realism.

NVIDIA Blackwell Powers Next-Gen AI on Microsoft Azure

Microsoft’s new Azure ND GB200 V6 VM series will harness the powerful performance of NVIDIA GB200 Grace Blackwell Superchips, coupled with advanced NVIDIA Quantum InfiniBand networking. This offering is optimized for large-scale deep learning workloads to accelerate breakthroughs in natural language processing, computer vision and more.

The Blackwell-based VM series complements previously announced Azure AI clusters with ND H200 V5 VMs, which provide increased high-bandwidth memory for improved AI inferencing. The ND H200 V5 VMs are already being used by OpenAI to enhance ChatGPT.

Azure Container Apps Enables Serverless AI Inference With NVIDIA Accelerated Computing

Serverless computing provides AI application developers increased agility to rapidly deploy, scale and iterate on applications without worrying about underlying infrastructure. This enables them to focus on optimizing models and improving functionality while minimizing operational overhead.

The Azure Container Apps serverless containers platform simplifies deploying and managing microservices-based applications by abstracting away the underlying infrastructure.

Azure Container Apps now supports NVIDIA-accelerated workloads with serverless GPUs, allowing developers to use the power of accelerated computing for real-time AI inference applications in a flexible, consumption-based, serverless environment. This capability simplifies AI deployments at scale while improving resource efficiency and application performance without the burden of infrastructure management.

Serverless GPUs allow development teams to focus more on innovation and less on infrastructure management. With per-second billing and scale-to-zero capabilities, customers pay only for the compute they use, helping ensure resource utilization is both economical and efficient. NVIDIA is also working with Microsoft to bring NVIDIA NIM microservices to serverless NVIDIA GPUs in Azure to optimize AI model performance.

NVIDIA Unveils Omniverse Reference Workflows for Advanced 3D Applications

NVIDIA announced reference workflows that help developers to build 3D simulation and digital twin applications on NVIDIA Omniverse and Universal Scene Description (OpenUSD) — accelerating industrial AI and advancing AI-driven creativity.

A reference workflow for 3D remote monitoring of industrial operations is coming soon to enable developers to connect physically accurate 3D models of industrial systems to real-time data from Azure IoT Operations and Power BI.

These two Microsoft services integrate with applications built on NVIDIA Omniverse and OpenUSD to provide solutions for industrial IoT use cases. This helps remote operations teams accelerate decision-making and optimize processes in production facilities.

The Omniverse Blueprint for precise visual generative AI enables developers to create applications that let nontechnical teams generate AI-enhanced visuals while preserving brand assets. The blueprint supports models like SDXL and Shutterstock Generative 3D to streamline the creation of on-brand, AI-generated images.

Leading creative groups, including Accenture Song, Collective, GRIP, Monks and WPP, have adopted this NVIDIA Omniverse Blueprint to personalize and customize imagery across markets.

Accelerating Gen AI for Windows With RTX AI PCs

NVIDIA’s collaboration with Microsoft extends to bringing AI capabilities to personal computing devices.

At Ignite, NVIDIA announced its new multimodal SLM, NVIDIA Nemovision-4B Instruct, for understanding visual imagery in the real world and on screen. It’s coming soon to RTX AI PCs and workstations and will pave the way for more sophisticated and lifelike digital human interactions.

Plus, updates to NVIDIA TensorRT Model Optimizer (ModelOpt) offer Windows developers a path to optimize a model for ONNX Runtime deployment. TensorRT ModelOpt enables developers to create AI models for PCs that are faster and more accurate when accelerated by RTX GPUs. This enables large models to fit within the constraints of PC environments, while making it easy for developers to deploy across the PC ecosystem with ONNX runtimes.

RTX AI-enabled PCs and workstations offer enhanced productivity tools, creative applications and immersive experiences powered by local AI processing.

Full-Stack Collaboration for AI Development

NVIDIA’s extensive ecosystem of partners and developers brings a wealth of AI and high-performance computing options to the Azure platform.

SoftServe, a global IT consulting and digital services provider, today announced the availability of SoftServe Gen AI Industrial Assistant, based on the NVIDIA AI Blueprint for multimodal PDF data extraction, on the Azure marketplace. The assistant addresses critical challenges in manufacturing by using AI to enhance equipment maintenance and improve worker productivity.

At Ignite, AT&T will showcase how it’s using NVIDIA AI and Azure to enhance operational efficiency, boost employee productivity and drive business growth through retrieval-augmented generation and autonomous assistants and agents.

Learn more about NVIDIA and Microsoft’s collaboration and sessions at Ignite.

See notice regarding software product information.

]]>Cloud-native technologies have become crucial for developers to create and implement scalable applications in dynamic cloud environments.

This week at KubeCon + CloudNativeCon North America 2024, one of the most-attended conferences focused on open-source technologies, Chris Lamb, vice president of computing software platforms at NVIDIA, delivered a keynote outlining the benefits of open source for developers and enterprises alike — and NVIDIA offered nearly 20 interactive sessions with engineers and experts.

The Cloud Native Computing Foundation (CNCF), part of the Linux Foundation and host of KubeCon, is at the forefront of championing a robust ecosystem to foster collaboration among industry leaders, developers and end users.

As a member of CNCF since 2018, NVIDIA is working across the developer community to contribute to and sustain cloud-native open-source projects. Our open-source software and more than 750 NVIDIA-led open-source projects help democratize access to tools that accelerate AI development and innovation.

Empowering Cloud-Native Ecosystems

NVIDIA has benefited from the many open-source projects under CNCF and has made contributions to dozens of them over the past decade. These actions help developers as they build applications and microservice architectures aligned with managing AI and machine learning workloads.

Kubernetes, the cornerstone of cloud-native computing, is undergoing a transformation to meet the challenges of AI and machine learning workloads. As organizations increasingly adopt large language models and other AI technologies, robust infrastructure becomes paramount.

NVIDIA has been working closely with the Kubernetes community to address these challenges. This includes:

- Work on dynamic resource allocation (DRA) that allows for more flexible and nuanced resource management. This is crucial for AI workloads, which often require specialized hardware. NVIDIA engineers played a key role in designing and implementing this feature.

- Leading efforts in KubeVirt, an open-source project extending Kubernetes to manage virtual machines alongside containers. This provides a unified, cloud-native approach to managing hybrid infrastructure.

- Development of NVIDIA GPU Operator, which automates the lifecycle management of NVIDIA GPUs in Kubernetes clusters. This software simplifies the deployment and configuration of GPU drivers, runtime and monitoring tools, allowing organizations to focus on building AI applications rather than managing infrastructure.

The company’s open-source efforts extend beyond Kubernetes to other CNCF projects:

- NVIDIA is a key contributor to Kubeflow, a comprehensive toolkit that makes it easier for data scientists and engineers to build and manage ML systems on Kubernetes. Kubeflow reduces the complexity of infrastructure management and allows users to focus on developing and improving ML models.

- NVIDIA has contributed to the development of CNAO, which manages the lifecycle of host networks in Kubernetes clusters.

- NVIDIA has also added to Node Health Check, which provides virtual machine high availability.

And NVIDIA has assisted with projects that address the observability, performance and other critical areas of cloud-native computing, such as:

- Prometheus: Enhancing monitoring and alerting capabilities

- Envoy: Improving distributed proxy performance

- OpenTelemetry: Advancing observability in complex, distributed systems

- Argo: Facilitating Kubernetes-native workflows and application management

Community Engagement

NVIDIA engages the cloud-native ecosystem by participating in CNCF events and activities, including:

- Collaboration with cloud service providers to help them onboard new workloads.

- Participation in CNCF’s special interest groups and working groups on AI discussions.

- Participation in industry events such as KubeCon + CloudNativeCon, where it shares insights on GPU acceleration for AI workloads.

- Work with CNCF-adjacent projects in the Linux Foundation as well as many partners.

This translates into extended benefits for developers, such as improved efficiency in managing AI and ML workloads; enhanced scalability and performance of cloud-native applications; better resource utilization, which can lead to cost savings; and simplified deployment and management of complex AI infrastructures.

As AI and machine learning continue to transform industries, NVIDIA is helping advance cloud-native technologies to support compute-intensive workloads. This includes facilitating the migration of legacy applications and supporting the development of new ones.

These contributions to the open-source community help developers harness the full potential of AI technologies and strengthen Kubernetes and other CNCF projects as the tools of choice for AI compute workloads.

Check out NVIDIA’s keynote at KubeCon + CloudNativeCon North America 2024 delivered by Chris Lamb, where he discusses the importance of CNCF projects in building and delivering AI in the cloud and NVIDIA’s contributions to the community to push the AI revolution forward.

]]>Consulting giants including Accenture, Deloitte, EY Strategy and Consulting Co., Ltd. (or EY Japan), FPT, Kyndryl and Tata Consultancy Services Japan (TCS Japan) are working with NVIDIA to establish innovation centers in Japan to accelerate the nation’s goal of embracing enterprise AI and physical AI across its industrial landscape.

The centers will use NVIDIA AI Enterprise software, local language models and NVIDIA NIM microservices to help clients in Japan advance the development and deployment of AI agents tailored to their industries’ respective needs, boosting productivity with a digital workforce.

Using the NVIDIA Omniverse platform, Japanese firms can develop digital twins and simulate complex physical AI systems, driving innovation in manufacturing, robotics and other sectors.

Like many nations, Japan is navigating complex social and demographic challenges, which is leading to a smaller workforce as older generations retire. Leaning into its manufacturing and robotics leadership, the country is seeking opportunities to solve these challenges using AI.

The Japanese government in April published a paper on its aims to become “the world’s most AI-friendly country.” AI adoption is strong and growing, as IDC reports that the Japanese AI systems market reached approximately $5.9 billion this year, with a year-on-year growth rate of 31.2%.1

The consulting giants’ initiatives and activities include:

- Accenture has established the Accenture NVIDIA Business Group and will provide solutions and services incorporating a Japanese large language model (LLM), which uses NVIDIA NIM and NVIDIA NeMo, as a Japan-specific offering. In addition, Accenture will deploy agentic AI solutions based on Accenture AI Refinery to all industries in Japan, accelerating total enterprise reinvention for its clients. In the future, Accenture plans to build new services using NVIDIA AI Enterprise and Omniverse at Accenture Innovation Hub Tokyo.

- Deloitte is establishing its AI Experience Center in Tokyo, which will serve as an executive briefing center to showcase generative AI solutions built on NVIDIA technology. This facility builds on the Deloitte Japan NVIDIA Practice announced in June and will allow clients to experience firsthand how AI can revolutionize their operations. The center will also offer NVIDIA AI and Omniverse Blueprints to help enterprises in Japan adopt agentic AI effectively.

- EY Strategy and Consulting Co., Ltd (EY Japan) is developing a multitude of digital transformation (DX) solutions in Japan across diverse industries including finance, retail, media and manufacturing. The new EY Japan DX offerings will be built with NVIDIA AI Enterprise to serve the country’s growing demand for digital twins, 3D applications, multimodal AI and generative AI.

- FPT is launching FPT AI Factory in Japan with NVIDIA Hopper GPUs and NVIDIA AI Enterprise software to support the country’s AI transformation by using business data in a secure, sovereign environment. FPT is integrating the NVIDIA NeMo framework with FPT AI Studio for building, pretraining and fine-tuning generative AI models, including FPT’s multi-language LLM, named Saola. In addition, to provide end-to-end AI integration services, FPT plans to train over 1,000 software engineers and consultants domestically in Japan, and over 7,000 globally by 2026.

- IT infrastructure services provider Kyndryl has launched a dedicated AI private cloud in Japan. Built in collaboration with Dell Technologies using the Dell AI Factory with NVIDIA, this new AI private cloud will provide a controlled, secure and sovereign location for customers to develop, test and plan implementation of AI on the end-to-end NVIDIA AI platform, including NVIDIA accelerated computing and networking, as well as the NVIDIA AI Enterprise software.

- TCS Japan will begin offering its TCS global AI offerings built on the full NVIDIA AI stack in the automotive and manufacturing industries. These solutions will be hosted in its showcase centers at TCS Japan’s Azabudai office in Tokyo.

Located in the Tokyo and Kansai metropolitan areas, these new consulting centers offer hands-on experience with NVIDIA’s latest technologies and expert guidance — helping accelerate AI transformation, solve complex social challenges and support the nation’s economic growth.

To learn more, watch the NVIDIA AI Summit Japan fireside chat with NVIDIA founder and CEO Jensen Huang.

Editor’s note: IDC figures are sourced to IDC, 2024 Domestic AI System Market Forecast Announced, April 2024. The IDC forecast amount was converted to USD by NVIDIA, while the CAGR (31.2%) was calculated based on JPY.

]]>Artificial intelligence will be the driving force behind India’s digital transformation, fueling innovation, economic growth, and global leadership, NVIDIA founder and CEO Jensen Huang said Thursday at NVIDIA’s AI Summit in Mumbai.

Addressing a crowd of entrepreneurs, developers, academics and business leaders, Huang positioned AI as the cornerstone of the country’s future.

India has an “amazing natural resource” in its IT and computer science expertise, Huang said, noting the vast potential waiting to be unlocked.

To capitalize on this country’s talent and India’s immense data resources, the country’s leading cloud infrastructure providers are rapidly accelerating their data center capacity. NVIDIA is playing a key role, with NVIDIA GPU deployments expected to grow nearly 10x by year’s end, creating the backbone for an AI-driven economy.

Together with NVIDIA, these companies are at the cutting edge of a shift Huang compared to the seismic change in computing introduced by IBM’s System 360 in 1964, calling it the most profound platform shift since then.

“This industry, the computing industry, is going to become the intelligence industry,” Huang said, pointing to India’s unique strengths to lead this industry, thanks to its enormous amounts of data and large population.

With this rapid expansion in infrastructure, AI factories will play a critical role in India’s future, serving as the backbone of the nation’s AI-driven growth.

“It makes complete sense that India should manufacture its own AI,” Huang said. “You should not export data to import intelligence,” he added, noting the importance of India building its own AI infrastructure.

Huang identified three areas where AI will transform industries: sovereign AI, where nations use their own data to drive innovation; agentic AI, which automates knowledge-based work; and physical AI, which applies AI to industrial tasks through robotics and autonomous systems. India, Huang noted, is uniquely positioned to lead in all three areas.

India’s startups are already harnessing NVIDIA technology to drive innovation across industries and are positioning themselves as global players, bringing the country’s AI solutions to the world.

Meanwhile, India’s robotics ecosystem is adopting NVIDIA Isaac and Omniverse to power the next generation of physical AI, revolutionizing industries like manufacturing and logistics with advanced automation.

Huang’s also keynote featured a surprise appearance by actor and producer Akshay Kumar.

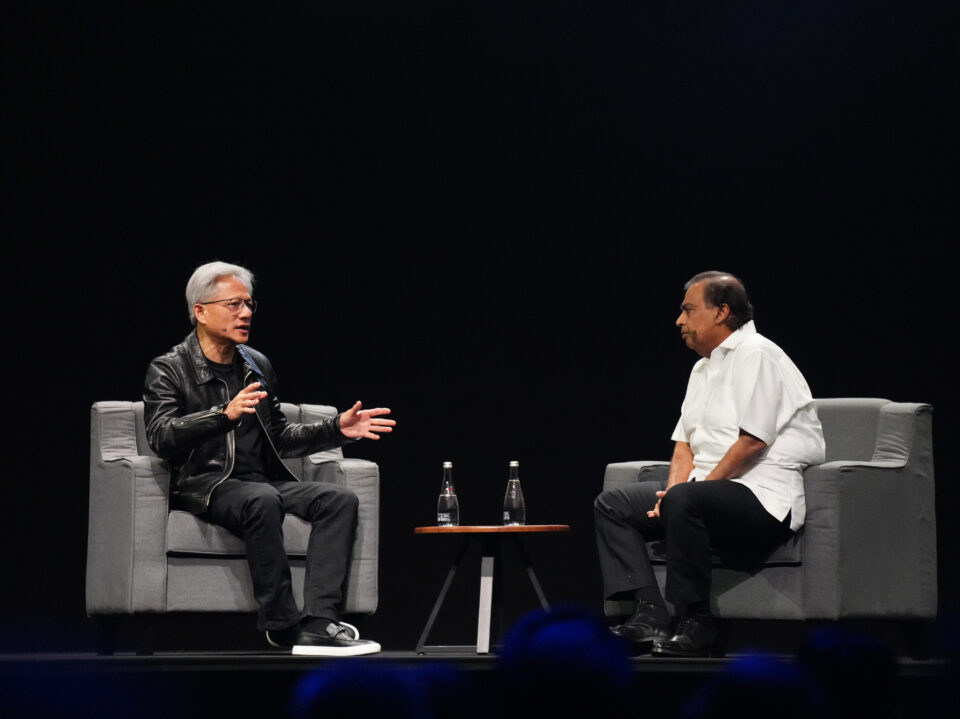

Following Huang’s remarks, the focus shifted to a fireside chat between Huang and Reliance Industries Chairman Mukesh Ambani, where the two leaders explored how AI will shape the future of Indian industries, particularly in sectors like energy, telecommunications and manufacturing.

Ambani emphasized that AI is central to this continued growth. Reliance, in partnership with NVIDIA, is building AI factories to automate industrial tasks and transform processes in sectors like energy and manufacturing.

Both men discussed their companies’ joint efforts to pioneer AI infrastructure in India.

Ambani underscored the role of AI in public sector services, explaining how India’s data combined with AI is already transforming governance and service delivery.

Huang added that AI promises to democratize technology.

“The ability to program AI is something that everyone can do … if AI could be put into the hands of every citizen, it would elevate and put into the hands of everyone this incredible capability,” he said.

Huang emphasized NVIDIA’s role in preparing India’s workforce for an AI-driven future.

NVIDIA is partnering with India’s IT giants such as Infosys, TCS, Tech Mahindra and Wipro to upskill nearly half a million developers, ensuring India leads the AI revolution with a highly trained workforce.

“India’s technical talent is unmatched,” Huang said.

Ambani echoed these sentiments, stressing that “India will be one of the biggest intelligence markets,” pointing to the nation’s youthful, technically talented population.

A Vision for India’s AI-Driven Future

As the session drew to a close, Huang and Ambani reflected on their vision for India’s AI-driven future.

With its vast talent pool, burgeoning tech ecosystem and immense data resources, the country, they agreed, has the potential to contribute globally in sectors such as energy, healthcare, finance and manufacturing.

“This cannot be done by any one company, any one individual, but we all have to work together to bring this intelligence age safely to the world so that we can create a more equal world, a more prosperous world,” Ambani said.

Huang echoed the sentiment, adding: “Let’s make it a promise today that we will work together so that India can take advantage of the intelligence revolution that’s ahead of us.”

]]>India’s leading cloud infrastructure providers and server manufacturers are ramping up accelerated data center capacity. By year’s end, they’ll have boosted NVIDIA GPU deployment in the country by nearly 10x compared to 18 months ago.

Tens of thousands of NVIDIA Hopper GPUs will be added to build AI factories — large-scale data centers for producing AI — that support India’s large businesses, startups and research centers running AI workloads in the cloud and on premises. This will cumulatively provide nearly 180 exaflops of compute to power innovation in healthcare, financial services and digital content creation.

Announced today at the NVIDIA AI Summit, taking place in Mumbai through Oct. 25, this buildout of accelerated computing technology is led by data center provider Yotta Data Services, global digital ecosystem enabler Tata Communications, cloud service provider E2E Networks and original equipment manufacturer Netweb.

Their systems will enable developers to harness domestic data center resources powerful enough to fuel a new wave of large language models, complex scientific visualizations and industrial digital twins that could propel India to the forefront of AI-accelerated innovation.

Yotta Brings AI Systems and Services to Shakti Cloud

Yotta Data Services is providing Indian businesses, government departments and researchers access to managed cloud services through its Shakti Cloud platform to boost generative AI adoption and AI education.

Powered by thousands of NVIDIA Hopper GPUs, these computing resources are complemented by NVIDIA AI Enterprise, an end-to-end, cloud-native software platform that accelerates data science pipelines and streamlines development and deployment of production-grade copilots and other generative AI applications.

With NVIDIA AI Enterprise, Yotta customers can access NVIDIA NIM, a collection of microservices for optimized AI inference, and NVIDIA NIM Agent Blueprints, a set of customizable reference architectures for generative AI applications. This will allow them to rapidly adopt optimized, state-of-the-art AI for applications including biomolecular generation, virtual avatar creation and language generation.

“The future of AI is about speed, flexibility and scalability, which is why Yotta’s Shakti Cloud platform is designed to eliminate the common barriers that organizations across industries face in AI adoption,” said Sunil Gupta, cofounder, CEO and managing director of Yotta. “Shakti Cloud brings together high-performance GPUs, optimized storage and a services layer that simplifies AI development from model training to deployment, so organizations can quickly scale their AI efforts, streamline operations and push the boundaries of what AI can accomplish.”

Yotta’s customers include Sarvam AI, which is building AI models that support major Indian languages; Innoplexus, which is developing an AI-powered life sciences platform for drug discovery; and Zoho Corporation, which is creating language models for enterprise customers.

Tata Supports Enterprise AI Innovation Across Industries

Tata Communications is initiating a large-scale deployment of NVIDIA Hopper architecture GPUs to power its public cloud infrastructure and support a wide range of AI applications. The company also plans to expand its offerings next year to include NVIDIA Blackwell GPUs.

In addition to providing accelerated hardware, Tata Communications will enable customers to run NVIDIA AI Enterprise, including NVIDIA NIM and NIM Agent Blueprints, and NVIDIA Omniverse, a software platform and operating system that developers use to build physical AI and robotic system simulation applications.

“By combining NVIDIA’s accelerated computing infrastructure with Tata Communications’ AI Studio and global network, we’re creating a future-ready platform that will enable AI transformation across industries,” said A.S. Lakshminarayanan, managing director and CEO of Tata Communications. “Access to these resources will make AI more accessible to innovators in fields including manufacturing, healthcare, retail, banking and financial services.”

E2E Expands Cloud Infrastructure for AI Innovation

E2E Networks supports enterprises in India, the Middle East, the Asia-Pacific region and the U.S with GPU-powered cloud servers.

It offers customers access to clusters featuring NVIDIA Hopper GPUs interconnected with NVIDIA Quantum-2 InfiniBand networking to help meet the demand for high-compute tasks including simulations, foundation model training and real-time AI inference.

“This infrastructure expansion helps ensure Indian businesses have access to high-performance, scalable infrastructure to develop custom AI models,” said Tarun Dua, cofounder and managing director of E2E Networks. “NVIDIA Hopper GPUs will be a powerful driver of innovation in large language models and large vision models for our users.”

E2E’s clients include AI4Bharat, a research lab at the Indian Institute of Technology Madras developing open-source AI applications for Indian languages — as well as members of the NVIDIA Inception startup program such as disease detection company Qure.ai, text-to-video generative AI company Invideo AI and intelligent voice agent company Assisto.

Netweb Servers Advance Sovereign AI Initiatives

Netweb is expanding its range of Tyrone AI systems based on NVIDIA MGX, a modular reference architecture to accelerate enterprise data center workloads.

Offered for both on-premises and off-premises cloud infrastructure, the new servers feature NVIDIA GH200 Grace Hopper Superchips, delivering the computational power to support large hyperscalers, research centers, enterprises and supercomputing centers in India and across Asia.

“Through Netweb’s decade-long collaboration with NVIDIA, we’ve shown that world-class computing infrastructure can be developed in India,” said Sanjay Lodha, chairman and managing director of Netweb. “Our next-generation systems will help the country’s businesses and researchers build and deploy more complex AI applications trained on proprietary datasets.”

Netweb also offers customers Tryone Skylus cloud instances that include the company’s full software stack, alongside the NVIDIA AI Enterprise and NVIDIA Omniverse software platforms, to develop large-scale agentic AI and physical AI.

NVIDIA’s roadmap features new platforms set to arrive on a one-year rhythm. By harnessing these advancements in AI computing and networking, infrastructure providers and manufacturers in India and beyond will be able to further scale the capabilities of AI development to power larger, multimodal models, optimize inference performance and train the next generation of AI applications.

Learn more about India’s AI adoption in the fireside chat between NVIDIA founder and CEO Jensen Huang and Mukesh Ambani, chairman and managing director of Reliance Industries, at the NVIDIA AI Summit.

]]>NVIDIA is expanding its collaboration with Microsoft to support global AI startups across industries — with an initial focus on healthcare and life sciences companies.

Announced today at the HLTH healthcare innovation conference, the initiative connects the startup ecosystem by bringing together the NVIDIA Inception global program for cutting-edge startups and Microsoft for Startups to broaden innovators’ access to accelerated computing by providing cloud credits, software for AI development and the support of technical and business experts.

The first phase will focus on high-potential digital health and life sciences companies that are part of both programs. Future phases will focus on startups in other industries.

Microsoft for Startups will provide each company with $150,000 of Microsoft Azure credits to access leading AI models, up to $200,000 worth of Microsoft business tools, and priority access to its Pegasus Program for go-to-market support.

NVIDIA Inception will provide 10,000 ai.nvidia.com inference credits to run GPU-optimized AI models through NVIDIA-managed serverless APIs; preferred pricing on NVIDIA AI Enterprise, which includes the full suite of NVIDIA Clara healthcare and life sciences computing platforms, software and services; early access to new NVIDIA healthcare offerings; and opportunities to connect with investors through the Inception VC Alliance and with industry partners through the Inception Alliance for Healthcare.

Both companies will provide the selected startups with dedicated technical support and hands-on workshops to develop digital health applications with the NVIDIA technology stack on Azure.

Supporting Startups at Every Stage

Hundreds of companies are already part of both NVIDIA Inception and Microsoft for Startups, using the combination of accelerated computing infrastructure and cutting-edge AI to advance their work.

Artisight, for example, is a smart hospital startup using AI to improve operational efficiency, documentation and care coordination in order to reduce the administrative burden on clinical staff and improve the patient experience. Its smart hospital network includes over 2,000 cameras and microphones at Northwestern Medicine, in Chicago, and over 200 other hospitals.

The company uses speech recognition models that can automate patient check-in with voice-enabled kiosks and computer vision models that can alert nurses when a patient is at risk of falling. Its products use software including NVIDIA Riva for conversational AI, NVIDIA DeepStream for vision AI and NVIDIA Triton Inference server to simplify AI inference in production.

“Access to the latest AI technologies is critical to developing smart hospital solutions that are reliable enough to be deployed in real-world clinical settings,” said Andrew Gostine, founder and CEO of Artisight. “The support of NVIDIA Inception and Microsoft for Startups has enabled our company to scale our products to help top U.S. hospitals care for thousands of patients.”

Another company, Pangaea Data, is helping healthcare organizations and pharmaceutical companies identify patients who remain undertreated or untreated despite available intelligence in their existing medical records. The company’s PALLUX platform supports clinicians at the point of care by finding more patients for screening and treatment. Deployed with NVIDIA GPUs on Azure’s HIPAA-compliant, secure cloud environment, PALLUX uses the NVIDIA FLARE federated learning framework to preserve patient privacy while driving improvement in health outcomes.

PALLUX helped one healthcare provider find 6x more cancer patients with cachexia — a condition characterized by loss of weight and muscle mass — for treatment and clinical trials. Pangaea Data’s platform achieved 90% accuracy and was deployed on the provider’s existing infrastructure within 12 weeks.

“By building our platform on a trusted cloud environment, we’re offering healthcare providers and pharmaceutical companies a solution to uncover insights from existing health records and realize the true promise of precision medicine and preventative healthcare,” said Pangaea Data CEO Vibhor Gupta. “Microsoft and NVIDIA have supported our work with powerful virtual machines and AI software, enabling us to focus on advancing our platform, rather than infrastructure management.”

Other startups participating in both programs and using NVIDIA GPUs on Azure include:

- Artificial, a lab orchestration startup that enables researchers to digitize end-to-end scientific workflows with AI tools that optimize scheduling, automate data entry tasks and guide scientists in real time using virtual assistants. The company is exploring the use of NVIDIA BioNeMo, an AI platform for drug discovery.

- BeeKeeperAI, which enables secure computing on sensitive data, including regulated data that can’t be anonymized or de-identified. Its EscrowAI platform integrates trusted execution environments with confidential computing and other privacy-enhancing technologies — including NVIDIA H100 Tensor Core GPUs — to meet data protection requirements and protect data sovereignty, individual privacy and intellectual property.

- Niramai, a startup that has developed an AI-powered medical device for early breast cancer detection. Its Thermalytix solution is a low-cost, portable screening tool that has been used to help screen over 250,000 women in 18 countries.

Building on a Trove of Healthcare Resources

Microsoft earlier this year announced a collaboration with NVIDIA to boost healthcare and life sciences organizations with generative AI, accelerated computing and the cloud.

Aimed at supporting projects in clinical research, drug discovery, medical imaging and precision medicine, this collaboration brought together Microsoft Azure with NVIDIA DGX Cloud, an end-to-end, scalable AI platform for developers.

It also provides users of NVIDIA DGX Cloud on Azure access to NVIDIA Clara, including domain-specific resources such as NVIDIA BioNeMo, a generative AI platform for drug discovery; NVIDIA MONAI, a suite of enterprise-grade AI for medical imaging; and NVIDIA Parabricks, a software suite designed to accelerate processing of sequencing data for genomics applications.

Join the Microsoft for Startups Founders Hub and the NVIDIA Inception program.

]]>AI can help solve some of the world’s biggest challenges — whether climate change, cancer or national security — U.S. Secretary of Energy Jennifer Granholm emphasized today during her remarks at the AI for Science, Energy and Security session at the NVIDIA AI Summit, in Washington, D.C.

Granholm went on to highlight the pivotal role AI is playing in tackling major national challenges, from energy innovation to bolstering national security.

“We need to use AI for both offense and defense — offense to solve these big problems and defense to make sure the bad guys are not using AI for nefarious purposes,” she said.

Granholm, who calls the Department of Energy “America’s Solutions Department,” highlighted the agency’s focus on solving the world’s biggest problems.

“Yes, climate change, obviously, but a whole slew of other problems, too … quantum computing and all sorts of next-generation technologies,” she said, pointing out that AI is a driving force behind many of these advances.

“AI can really help to solve some of those huge problems — whether climate change, cancer or national security,” she said. “The possibilities of AI for good are awesome, awesome.”

Following Granholm’s 15-minute address, a panel of experts from government, academia and industry took the stage to further discuss how AI accelerates advancements in scientific discovery, national security and energy innovation.

“AI is going to be transformative to our mission space.… We’re going to see these big step changes in capabilities,” said Helena Fu, director of the Office of Critical and Emerging Technologies at the Department of Energy, underscoring AI’s potential in safeguarding critical infrastructure and addressing cyber threats.

During her remarks, Granholm also stressed that AI’s increasing energy demands must be met responsibly.

“We are going to see about a 15% increase in power demand on our electric grid as a result of the data centers that we want to be located in the United States,” she explained.

However, the DOE is taking steps to meet this demand with clean energy.

“This year, in 2024, the United States will have added 30 Hoover Dams’ worth of clean power to our electric grid,” Granholm announced, emphasizing that the clean energy revolution is well underway.

AI’s Impact on Scientific Discovery and National Security

The discussion then shifted to how AI is revolutionizing scientific research and national security.

Tanya Das, director of the Energy Program at the Bipartisan Policy Center, pointed out that “AI can accelerate every stage of the innovation pipeline in the energy sector … starting from scientific discovery at the very beginning … going through to deployment and permitting.”

Das also highlighted the growing interest in Congress to support AI innovations, adding, “Congress is paying attention to this issue, and, I think, very motivated to take action on updating what the national vision is for artificial intelligence.”

Fu reiterated the department’s comprehensive approach, stating, “We cross from open science through national security, and we do this at scale.… Whether they be around energy security, resilience, climate change or the national security challenges that we’re seeing every day emerging.”

She also touched on the DOE’s future goals: “Our scientific systems will need access to AI systems,” Fu said, emphasizing the need to bridge both scientific reasoning and the new kinds of models we’ll need to develop for AI.

Collaboration Across Sectors: Government, Academia and Industry

Karthik Duraisamy, director of the Michigan Institute for Computational Discovery and Engineering at the University of Michigan, highlighted the power of collaboration in advancing scientific research through AI.

“Think about the scientific endeavor as 5% creativity and innovation and 95% intense labor. AI amplifies that 5% by a bit, and then significantly accelerates the 95% part,” Duraisamy explained. “That is going to completely transform science.”

Duraisamy further elaborated on the role AI could play as a persistent collaborator, envisioning a future where AI can work alongside scientists over weeks, months and years, generating new ideas and following through on complex projects.

“Instead of replacing graduate students, I think graduate students can be smarter than the professors on day one,” he said, emphasizing the potential for AI to support long-term research and innovation.

Learn more about how this week’s AI Summit highlights how AI is shaping the future across industries and how NVIDIA’s solutions are laying the groundwork for continued innovation.

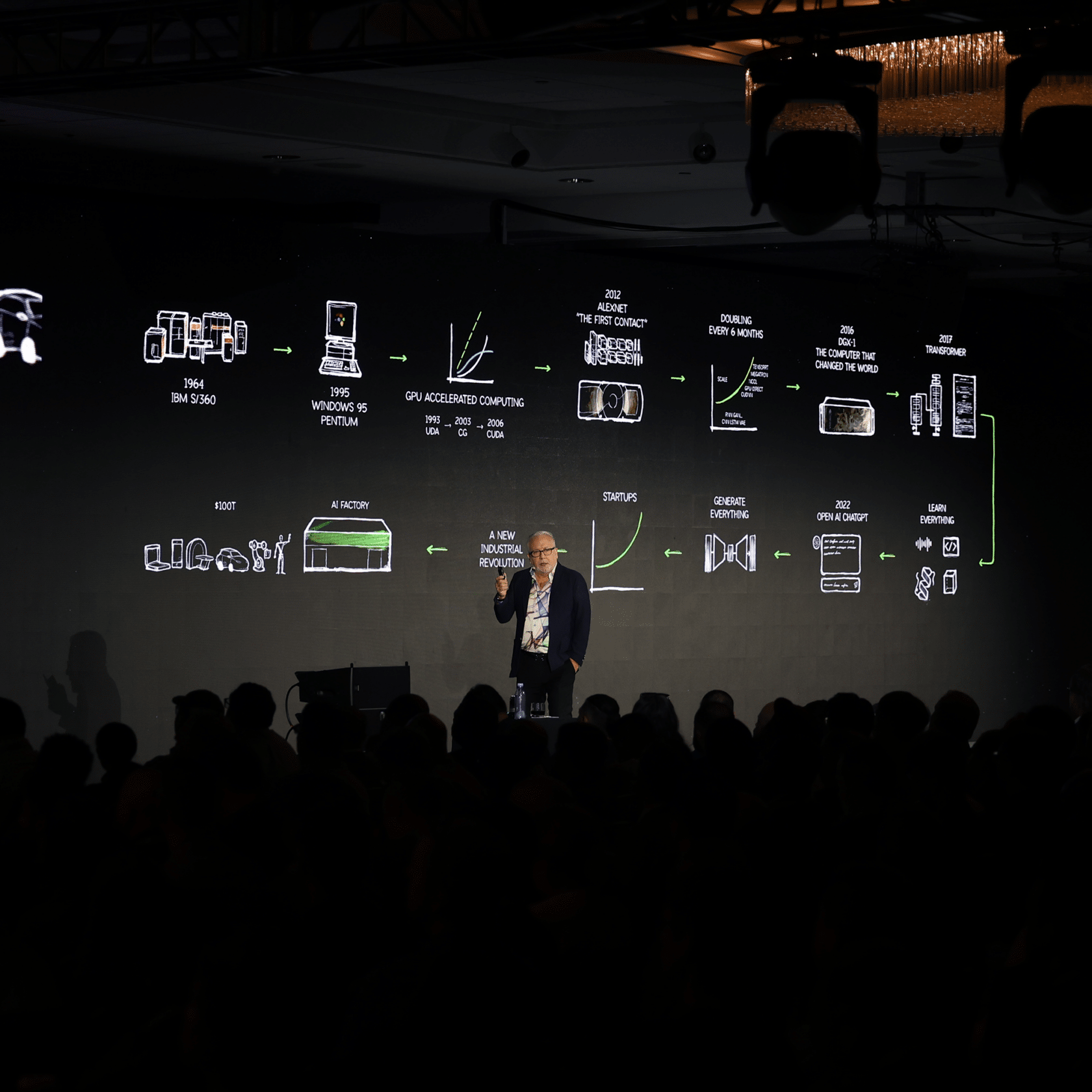

]]>Accelerated computing is sustainable computing, Bob Pette, NVIDIA’s vice president and general manager of enterprise platforms, explained in a keynote at the NVIDIA AI Summit on Tuesday in Washington, D.C.

NVIDIA’s accelerated computing isn’t just efficient. It’s critical to the next wave of industrial, scientific and healthcare transformations.

“We are in the dawn of a new industrial revolution,” Pette told an audience of policymakers, press, developers and entrepreneurs gathered for the event. “I’m just here to tell you that we’re designing our systems with not just performance in mind, but with energy efficiency in mind.”

NVIDIA’s Blackwell platform has achieved groundbreaking energy efficiency in AI computing, reducing energy consumption by up to 2,000x over the past decade for training models like GPT-4.

NVIDIA accelerated computing is cutting energy use for token generation — the output from AI models — by 100,000x, underscoring the value of accelerated computing for sustainability amid the rapid adoption of AI worldwide.

“These AI factories produce product. Those products are tokens, tokens are intelligence, and Intelligence is money,” Pette said. That’s what “will revolutionize every industry on this planet.”

NVIDIA’s CUDA libraries, which have been fundamental in enabling breakthroughs across industries, now power over 4,000 accelerated applications, Pette explained.

NVIDIA’s CUDA libraries, which have been fundamental in enabling breakthroughs across industries, now power over 4,000 accelerated applications, Pette explained.

“CUDA enables acceleration…. It also turns out to be one of the most impressive ways to reduce energy consumption,” Pette said.

These libraries are central to the company’s energy-efficient AI innovations driving significant performance gains while minimizing power consumption.

Pette also detailed how NVIDIA’s AI software helps organizations deploy AI solutions quickly and efficiently, enabling businesses to innovate faster and solve complex problems across sectors.

Pette discussed the concept of agentic AI, which goes beyond traditional AI by enabling intelligent agents to perceive, reason and act autonomously.

Agentic AI is capable of ”reasoning, of learning, and taking action,” Pette said. It’s transforming industries like manufacturing, customer service, and healthcare,” Pette said.

These AI agents are transforming industries by automating complex tasks and accelerating innovation in sectors like manufacturing, customer service and healthcare, he explained.

He also described how AI agents empower businesses to drive innovation in healthcare, manufacturing, scientific research and climate modeling.

With agentic AI, “you can do in minutes what used to take days,” Pette said.

NVIDIA, in collaboration with its partners, is tackling some of the world’s greatest challenges, including improving diagnostics and healthcare delivery, advancing climate modeling efforts and even helping find signs of life beyond our planet.

NVIDIA is collaborating with SETI to conduct real-time AI searches for fast radio bursts from distant galaxies, helping continue the exploration of space, Pette said.

Pette emphasized that NVIDIA is unlocking a $10 trillion opportunity in healthcare.

Through AI, NVIDIA is accelerating innovations in diagnostics, drug discovery and medical imaging, helping transform patient care worldwide.

Solutions like the NVIDIA Clara medical imaging platform are revolutionizing diagnostics, Parabricks is enabling breakthroughs in genomics research and the MONAI AI framework is advancing medical imaging capabilities.

Pette highlighted partnerships with leading institutions, including Carnegie Mellon University and the University of Pittsburgh, fostering AI innovation and development.

Pette also described how NVIDIA’s collaboration with federal agencies illustrates the importance of public-private partnerships in advancing AI-driven solutions in healthcare, climate modeling and national security.

Pette also announced that a new NVIDIA NIM Agent Blueprint supports cybersecurity advancements, enabling industries to safeguard critical infrastructure with AI-driven solutions.

In cybersecurity, Pette highlighted the NVIDIA NIM Agent Blueprint, a powerful tool enabling organizations to safeguard critical infrastructure through real-time threat detection and analysis.

This blueprint reduces threat response times from days to seconds, representing a significant leap forward in protecting industries.

“Agentic systems can access tools and reason through full lines of thought to provide instant one-click assessments,” Pette said. “This boosts productivity by allowing security analysts to focus on the most critical tasks while AI handles the heavy lifting of analysis, delivering fast and actionable insights.

NVIDIA’s accelerated computing solutions are advancing climate research by enabling more accurate and faster climate modeling. This technology is helping scientists tackle some of the most urgent environmental challenges, from monitoring global temperatures to predicting natural disasters.

Pette described how the NVIDIA Earth 2 platform enables climate experts to import data from multiple sources, fusing them together for analysis using Nvidia Omniverse. “NVIDIA Earth 2 brings together the power of simulation AI and visualization to empower the climate, tech ecosystem,” Pette said.

NVIDIA’s Greg Estes on Building the AI Workforce of the Future

Following Pette’s keynote, Greg Estes, NVIDIA’s vice president of corporate marketing and developer programs, underscored the company’s dedication to workforce training through initiatives like the NVIDIA AI Tech Community.

And through its Deep Learning Institute, NVIDIA has already trained more than 600,000 people worldwide, equipping the next generation with the critical skills to navigate and lead in the AI-driven future.

Exploring AI’s Role in Cybersecurity and Sustainability

Throughout the week, industry leaders are exploring AI’s role in solving critical issues in fields like cybersecurity and sustainability.

Upcoming sessions will feature U.S. Secretary of Energy Jennifer Granholm, who will discuss how AI is advancing energy innovation and scientific discovery.

Other speakers will address AI’s role in climate monitoring and environmental management, further showcasing the technology’s ability to address global sustainability challenges.

Learn more about how this week’s AI Summit highlights how AI is shaping the future across industries and how NVIDIA’s solutions are laying the groundwork for continued innovation.

Enterprises are looking for increasingly powerful compute to support their AI workloads and accelerate data processing. The efficiency gained can translate to better returns for their investments in AI training and fine-tuning, and improved user experiences for AI inference.

At the Oracle CloudWorld conference today, Oracle Cloud Infrastructure (OCI) announced the first zettascale OCI Supercluster, accelerated by the NVIDIA Blackwell platform, to help enterprises train and deploy next-generation AI models using more than 100,000 of NVIDIA’s latest-generation GPUs.

OCI Superclusters allow customers to choose from a wide range of NVIDIA GPUs and deploy them anywhere: on premises, public cloud and sovereign cloud. Set for availability in the first half of next year, the Blackwell-based systems can scale up to 131,072 Blackwell GPUs with NVIDIA ConnectX-7 NICs for RoCEv2 or NVIDIA Quantum-2 InfiniBand networking to deliver an astounding 2.4 zettaflops of peak AI compute to the cloud. (Read the press release to learn more about OCI Superclusters.)

At the show, Oracle also previewed NVIDIA GB200 NVL72 liquid-cooled bare-metal instances to help power generative AI applications. The instances are capable of large-scale training with Quantum-2 InfiniBand and real-time inference of trillion-parameter models within the expanded 72-GPU NVIDIA NVLink domain, which can act as a single, massive GPU.

This year, OCI will offer NVIDIA HGX H200 — connecting eight NVIDIA H200 Tensor Core GPUs in a single bare-metal instance via NVLink and NVLink Switch, and scaling to 65,536 H200 GPUs with NVIDIA ConnectX-7 NICs over RoCEv2 cluster networking. The instance is available to order for customers looking to deliver real-time inference at scale and accelerate their training workloads. (Read a blog on OCI Superclusters with NVIDIA B200, GB200 and H200 GPUs.)

OCI also announced general availability of NVIDIA L40S GPU-accelerated instances for midrange AI workloads, NVIDIA Omniverse and visualization. (Read a blog on OCI Superclusters with NVIDIA L40S GPUs.)

For single-node to multi-rack solutions, Oracle’s edge offerings provide scalable AI at the edge accelerated by NVIDIA GPUs, even in disconnected and remote locations. For example, smaller-scale deployments with Oracle’s Roving Edge Device v2 will now support up to three NVIDIA L4 Tensor Core GPUs.

Companies are using NVIDIA-powered OCI Superclusters to drive AI innovation. Foundation model startup Reka, for example, is using the clusters to develop advanced multimodal AI models to develop enterprise agents.

“Reka’s multimodal AI models, built with OCI and NVIDIA technology, empower next-generation enterprise agents that can read, see, hear and speak to make sense of our complex world,” said Dani Yogatama, cofounder and CEO of Reka. “With NVIDIA GPU-accelerated infrastructure, we can handle very large models and extensive contexts with ease, all while enabling dense and sparse training to scale efficiently at cluster levels.”

NVIDIA received the 2024 Oracle Technology Solution Partner Award in Innovation for its full-stack approach to innovation.

Accelerating Generative AI Oracle Database Workloads

Oracle Autonomous Database is gaining NVIDIA GPU support for Oracle Machine Learning notebooks to allow customers to accelerate their data processing workloads on Oracle Autonomous Database.

At Oracle CloudWorld, NVIDIA and Oracle are partnering to demonstrate three capabilities that show how the NVIDIA accelerated computing platform could be used today or in the future to accelerate key components of generative AI retrieval-augmented generation pipelines.

The first will showcase how NVIDIA GPUs can be used to accelerate bulk vector embeddings directly from within Oracle Autonomous Database Serverless to efficiently bring enterprise data closer to AI. These vectors can be searched using Oracle Database 23ai’s AI Vector Search.

The second demonstration will showcase a proof-of-concept prototype that uses NVIDIA GPUs, NVIDIA cuVS and an Oracle-developed offload framework to accelerate vector graph index generation, which significantly reduces the time needed to build indexes for efficient vector searches.

The third demonstration illustrates how NVIDIA NIM, a set of easy-to-use inference microservices, can boost generative AI performance for text generation and translation use cases across a range of model sizes and concurrency levels.

Together, these new Oracle Database capabilities and demonstrations highlight how NVIDIA GPUs can be used to help enterprises bring generative AI to their structured and unstructured data housed in or managed by an Oracle Database.

Sovereign AI Worldwide

NVIDIA and Oracle are collaborating to deliver sovereign AI infrastructure worldwide, helping address the data residency needs of governments and enterprises.

Brazil-based startup Wide Labs trained and deployed Amazonia IA, one of the first large language models for Brazilian Portuguese, using NVIDIA H100 Tensor Core GPUs and the NVIDIA NeMo framework in OCI’s Brazilian data centers to help ensure data sovereignty.

“Developing a sovereign LLM allows us to offer clients a service that processes their data within Brazilian borders, giving Amazônia a unique market position,” said Nelson Leoni, CEO of Wide Labs. “Using the NVIDIA NeMo framework, we successfully trained Amazônia IA.”

In Japan, Nomura Research Institute, a leading global provider of consulting services and system solutions, is using OCI’s Alloy infrastructure with NVIDIA GPUs to enhance its financial AI platform with LLMs operating in accordance with financial regulations and data sovereignty requirements.

Communication and collaboration company Zoom will be using NVIDIA GPUs in OCI’s Saudi Arabian data centers to help support compliance with local data requirements.

And geospatial modeling company RSS-Hydro is demonstrating how its flood mapping platform — built on the NVIDIA Omniverse platform and powered by L40S GPUs on OCI — can use digital twins to simulate flood impacts in Japan’s Kumamoto region, helping mitigate the impact of climate change.

These customers are among numerous nations and organizations building and deploying domestic AI applications powered by NVIDIA and OCI, driving economic resilience through sovereign AI infrastructure.

Enterprise-Ready AI With NVIDIA and Oracle

Enterprises can accelerate task automation on OCI by deploying NVIDIA software such as NIM microservices and NVIDIA cuOpt with OCI’s scalable cloud solutions. These solutions enable enterprises to quickly adopt generative AI and build agentic workflows for complex tasks like code generation and route optimization.

NVIDIA cuOpt, NIM, RAPIDS and more are included in the NVIDIA AI Enterprise software platform, available on the Oracle Cloud Marketplace.

Learn More at Oracle CloudWorld

Join NVIDIA at Oracle CloudWorld 2024 to learn how the companies’ collaboration is bringing AI and accelerated data processing to the world’s organizations.

Register to the event to watch sessions, see demos and join Oracle and NVIDIA for the solution keynote, “Unlock AI Performance with NVIDIA’s Accelerated Computing Platform” (SOL3866), on Wednesday, Sept. 11, in Las Vegas.

]]>One of the world’s largest AI communities — comprising 4 million developers on the Hugging Face platform — is gaining easy access to NVIDIA-accelerated inference on some of the most popular AI models.

New inference-as-a-service capabilities will enable developers to rapidly deploy leading large language models such as the Llama 3 family and Mistral AI models with optimization from NVIDIA NIM microservices running on NVIDIA DGX Cloud.

Announced today at the SIGGRAPH conference, the service will help developers quickly prototype with open-source AI models hosted on the Hugging Face Hub and deploy them in production. Enterprise Hub users can tap serverless inference for increased flexibility, minimal infrastructure overhead and optimized performance with NVIDIA NIM.

The inference service complements Train on DGX Cloud, an AI training service already available on Hugging Face.

Developers facing a growing number of open-source models can benefit from a hub where they can easily compare options. These training and inference tools give Hugging Face developers new ways to experiment with, test and deploy cutting-edge models on NVIDIA-accelerated infrastructure. They’re made easily accessible using the “Train” and “Deploy” drop-down menus on Hugging Face model cards, letting users get started with just a few clicks.

Get started with inference-as-a-service powered by NVIDIA NIM.

Beyond a Token Gesture — NVIDIA NIM Brings Big Benefits

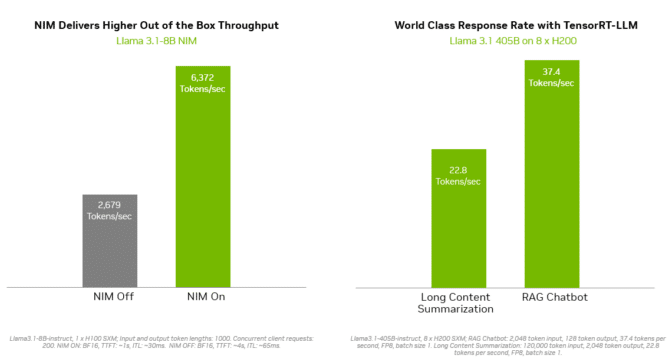

NVIDIA NIM is a collection of AI microservices — including NVIDIA AI foundation models and open-source community models — optimized for inference using industry-standard application programming interfaces, or APIs.

NIM offers users higher efficiency in processing tokens — the units of data used and generated by a language model. The optimized microservices also improve the efficiency of the underlying NVIDIA DGX Cloud infrastructure, which can increase the speed of critical AI applications.

This means developers see faster, more robust results from an AI model accessed as a NIM compared with other versions of the model. The 70-billion-parameter version of Llama 3, for example, delivers up to 5x higher throughput when accessed as a NIM compared with off-the-shelf deployment on NVIDIA H100 Tensor Core GPU-powered systems.

Near-Instant Access to DGX Cloud Provides Accessible AI Acceleration

The NVIDIA DGX Cloud platform is purpose-built for generative AI, offering developers easy access to reliable accelerated computing infrastructure that can help them bring production-ready applications to market faster.

The platform provides scalable GPU resources that support every step of AI development, from prototype to production, without requiring developers to make long-term AI infrastructure commitments.

Hugging Face inference-as-a-service on NVIDIA DGX Cloud powered by NIM microservices offers easy access to compute resources that are optimized for AI deployment, enabling users to experiment with the latest AI models in an enterprise-grade environment.

More on NVIDIA NIM at SIGGRAPH

At SIGGRAPH, NVIDIA also introduced generative AI models and NIM microservices for the OpenUSD framework to accelerate developers’ abilities to build highly accurate virtual worlds for the next evolution of AI.

To experience more than 100 NVIDIA NIM microservices with applications across industries, visit ai.nvidia.com.

]]>Businesses seeking to harness the power of AI need customized models tailored to their specific industry needs.

NVIDIA AI Foundry is a service that enables enterprises to use data, accelerated computing and software tools to create and deploy custom models that can supercharge their generative AI initiatives.

Just as TSMC manufactures chips designed by other companies, NVIDIA AI Foundry provides the infrastructure and tools for other companies to develop and customize AI models — using DGX Cloud, foundation models, NVIDIA NeMo software, NVIDIA expertise, as well as ecosystem tools and support.

The key difference is the product: TSMC produces physical semiconductor chips, while NVIDIA AI Foundry helps create custom models. Both enable innovation and connect to a vast ecosystem of tools and partners.

Enterprises can use AI Foundry to customize NVIDIA and open community models, including the new Llama 3.1 collection, as well as NVIDIA Nemotron, CodeGemma by Google DeepMind, CodeLlama, Gemma by Google DeepMind, Mistral, Mixtral, Phi-3, StarCoder2 and others.

Industry Pioneers Drive AI Innovation

Industry leaders Amdocs, Capital One, Getty Images, KT, Hyundai Motor Company, SAP, ServiceNow and Snowflake are among the first using NVIDIA AI Foundry. These pioneers are setting the stage for a new era of AI-driven innovation in enterprise software, technology, communications and media.

“Organizations deploying AI can gain a competitive edge with custom models that incorporate industry and business knowledge,” said Jeremy Barnes, vice president of AI Product at ServiceNow. “ServiceNow is using NVIDIA AI Foundry to fine-tune and deploy models that can integrate easily within customers’ existing workflows.”

The Pillars of NVIDIA AI Foundry

NVIDIA AI Foundry is supported by the key pillars of foundation models, enterprise software, accelerated computing, expert support and a broad partner ecosystem.

Its software includes AI foundation models from NVIDIA and the AI community as well as the complete NVIDIA NeMo software platform for fast-tracking model development.

The computing muscle of NVIDIA AI Foundry is NVIDIA DGX Cloud, a network of accelerated compute resources co-engineered with the world’s leading public clouds — Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure. With DGX Cloud, AI Foundry customers can develop and fine-tune custom generative AI applications with unprecedented ease and efficiency, and scale their AI initiatives as needed without significant upfront investments in hardware. This flexibility is crucial for businesses looking to stay agile in a rapidly changing market.

If an NVIDIA AI Foundry customer needs assistance, NVIDIA AI Enterprise experts are on hand to help. NVIDIA experts can walk customers through each of the steps required to build, fine-tune and deploy their models with proprietary data, ensuring the models tightly align with their business requirements.

NVIDIA AI Foundry customers have access to a global ecosystem of partners that can provide a full range of support. Accenture, Deloitte, Infosys, Tata Consultancy Services and Wipro are among the NVIDIA partners that offer AI Foundry consulting services that encompass design, implementation and management of AI-driven digital transformation projects. Accenture is first to offer its own AI Foundry-based offering for custom model development, the Accenture AI Refinery framework.

Additionally, service delivery partners such as Data Monsters, Quantiphi, Slalom and SoftServe help enterprises navigate the complexities of integrating AI into their existing IT landscapes, ensuring that AI applications are scalable, secure and aligned with business objectives.

Customers can develop NVIDIA AI Foundry models for production using AIOps and MLOps platforms from NVIDIA partners, including ActiveFence, AutoAlign, Cleanlab, DataDog, Dataiku, Dataloop, DataRobot, Deepchecks, Domino Data Lab, Fiddler AI, Giskard, New Relic, Scale, Tumeryk and Weights & Biases.

Customers can output their AI Foundry models as NVIDIA NIM inference microservices — which include the custom model, optimized engines and a standard API — to run on their preferred accelerated infrastructure.

Inferencing solutions like NVIDIA TensorRT-LLM deliver improved efficiency for Llama 3.1 models to minimize latency and maximize throughput. This enables enterprises to generate tokens faster while reducing total cost of running the models in production. Enterprise-grade support and security is provided by the NVIDIA AI Enterprise software suite.

The broad range of deployment options includes NVIDIA-Certified Systems from global server manufacturing partners including Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro, as well as cloud instances from Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure.

Additionally, Together AI, a leading AI acceleration cloud, today announced it will enable its ecosystem of over 100,000 developers and enterprises to use its NVIDIA GPU-accelerated inference stack to deploy Llama 3.1 endpoints and other open models on DGX Cloud.

“Every enterprise running generative AI applications wants a faster user experience, with greater efficiency and lower cost,” said Vipul Ved Prakash, founder and CEO of Together AI. “Now, developers and enterprises using the Together Inference Engine can maximize performance, scalability and security on NVIDIA DGX Cloud.”

NVIDIA NeMo Speeds and Simplifies Custom Model Development

With NVIDIA NeMo integrated into AI Foundry, developers have at their fingertips the tools needed to curate data, customize foundation models and evaluate performance. NeMo technologies include:

- NeMo Curator is a GPU-accelerated data-curation library that improves generative AI model performance by preparing large-scale, high-quality datasets for pretraining and fine-tuning.

- NeMo Customizer is a high-performance, scalable microservice that simplifies fine-tuning and alignment of LLMs for domain-specific use cases.

- NeMo Evaluator provides automatic assessment of generative AI models across academic and custom benchmarks on any accelerated cloud or data center.

- NeMo Guardrails orchestrates dialog management, supporting accuracy, appropriateness and security in smart applications with large language models to provide safeguards for generative AI applications.

Using the NeMo platform in NVIDIA AI Foundry, businesses can create custom AI models that are precisely tailored to their needs. This customization allows for better alignment with strategic objectives, improved accuracy in decision-making and enhanced operational efficiency. For instance, companies can develop models that understand industry-specific jargon, comply with regulatory requirements and integrate seamlessly with existing workflows.

“As a next step of our partnership, SAP plans to use NVIDIA’s NeMo platform to help businesses to accelerate AI-driven productivity powered by SAP Business AI,” said Philipp Herzig, chief AI officer at SAP.

Enterprises can deploy their custom AI models in production with NVIDIA NeMo Retriever NIM inference microservices. These help developers fetch proprietary data to generate knowledgeable responses for their AI applications with retrieval-augmented generation (RAG).

“Safe, trustworthy AI is a non-negotiable for enterprises harnessing generative AI, with retrieval accuracy directly impacting the relevance and quality of generated responses in RAG systems,” said Baris Gultekin, Head of AI, Snowflake. “Snowflake Cortex AI leverages NeMo Retriever, a component of NVIDIA AI Foundry, to further provide enterprises with easy, efficient, and trusted answers using their custom data.”

Custom Models Drive Competitive Advantage

One of the key advantages of NVIDIA AI Foundry is its ability to address the unique challenges faced by enterprises in adopting AI. Generic AI models can fall short of meeting specific business needs and data security requirements. Custom AI models, on the other hand, offer superior flexibility, adaptability and performance, making them ideal for enterprises seeking to gain a competitive edge.

Learn more about how NVIDIA AI Foundry allows enterprises to boost productivity and innovation.

]]>If optimized AI workflows are like a perfectly tuned orchestra — where each component, from hardware infrastructure to software libraries, hits exactly the right note — then the long-standing harmony between NVIDIA and Microsoft is music to developers’ ears.

The latest AI models developed by Microsoft, including the Phi-3 family of small language models, are being optimized to run on NVIDIA GPUs and made available as NVIDIA NIM inference microservices. Other microservices developed by NVIDIA, such as the cuOpt route optimization AI, are regularly added to Microsoft Azure Marketplace as part of the NVIDIA AI Enterprise software platform.

In addition to these AI technologies, NVIDIA and Microsoft are delivering a growing set of optimizations and integrations for developers creating high-performance AI apps for PCs powered by NVIDIA GeForce RTX and NVIDIA RTX GPUs.

Building on the progress shared at NVIDIA GTC, the two companies are furthering this ongoing collaboration at Microsoft Build, an annual developer event, taking place this year in Seattle through May 23.

Accelerating Microsoft’s Phi-3 Models

Microsoft is expanding its family of Phi-3 open small language models, adding small (7-billion-parameter) and medium (14-billion-parameter) models similar to its Phi-3-mini, which has 3.8 billion parameters. It’s also introducing a new 4.2-billion-parameter multimodal model, Phi-3-vision, that supports images and text.

All of these models are GPU-optimized with NVIDIA TensorRT-LLM and available as NVIDIA NIMs, which are accelerated inference microservices with a standard application programming interface (API) that can be deployed anywhere.

APIs for the NIM-powered Phi-3 models are available at ai.nvidia.com and through NVIDIA AI Enterprise on the Azure Marketplace.

NVIDIA cuOpt Now Available on Azure Marketplace

NVIDIA cuOpt, a GPU-accelerated AI microservice for route optimization, is now available in Azure Marketplace via NVIDIA AI Enterprise. cuOpt features massively parallel algorithms that enable real-time logistics management for shipping services, railway systems, warehouses and factories.

The model has set two dozen world records on major routing benchmarks, demonstrating the best accuracy and fastest times. It could save billions of dollars for the logistics and supply chain industries by optimizing vehicle routes, saving travel time and minimizing idle periods.

Through Azure Marketplace, developers can easily integrate the cuOpt microservice with Azure Maps to support teal-time logistics management and other cloud-based workflows, backed by enterprise-grade management tools and security.

Optimizing AI Performance on PCs With NVIDIA RTX

The NVIDIA accelerated computing platform is the backbone of modern AI — helping developers build solutions for over 100 million Windows GeForce RTX-powered PCs and NVIDIA RTX-powered workstations worldwide.

NVIDIA and Microsoft are delivering new optimizations and integrations to Windows developers to accelerate AI in next-generation PC and workstation applications. These include:

- Faster inference performance for large language models via the NVIDIA DirectX driver, the Generative AI ONNX Runtime extension and DirectML. These optimizations, available now in the GeForce Game Ready, NVIDIA Studio and NVIDIA RTX Enterprise Drivers, deliver up to 3x faster performance on NVIDIA and GeForce RTX GPUs.

- Optimized performance on RTX GPUs for AI models like Stable Diffusion and Whisper via WebNN, an API that enables developers to accelerate AI models in web applications using on-device hardware.

- With Windows set to support PyTorch through DirectML, thousands of Hugging Face models will work in Windows natively. NVIDIA and Microsoft are collaborating to scale performance on more than 100 million RTX GPUs.

Join NVIDIA at Microsoft Build

Conference attendees can visit NVIDIA booth FP28 to meet developer experts and experience live demos of NVIDIA NIM, NVIDIA cuOpt, NVIDIA Omniverse and the NVIDIA RTX AI platform. The booth also highlights the NVIDIA MONAI platform for medical imaging workflows and NVIDIA BioNeMo generative AI platform for drug discovery — both available on Azure as part of NVIDIA AI Enterprise.

Attend sessions with NVIDIA speakers to dive into the capabilities of the NVIDIA RTX AI platform on Windows PCs and discover how to deploy generative AI and digital twin tools on Microsoft Azure.

And sign up for the Developer Showcase, taking place Wednesday, to discover how developers are building innovative generative AI using NVIDIA AI software on Azure.

]]>Large language models that power generative AI are seeing intense innovation — models that handle multiple types of data such as text, image and sounds are becoming increasingly common.

However, building and deploying these models remains challenging. Developers need a way to quickly experience and evaluate models to determine the best fit for their use case, and then optimize the model for performance in a way that not only is cost-effective but offers the best performance.

To make it easier for developers to create AI-powered applications with world-class performance, NVIDIA and Google today announced three new collaborations at Google I/O ‘24.

Gemma + NIM

Using TensorRT-LLM, NVIDIA is working with Google to optimize two new models it introduced at the event: Gemma 2 and PaliGemma. These models are built from the same research and technology used to create the Gemini models, and each is focused on a specific area:

- Gemma 2 is the next generation of Gemma models for a broad range of use cases and features a brand new architecture designed for breakthrough performance and efficiency.

- PaliGemma is an open vision language model (VLM) inspired by PaLI-3. Built on open components including the SigLIP vision model and the Gemma language model, PaliGemma is designed for vision-language tasks such as image and short video captioning, visual question answering, understanding text in images, object detection and object segmentation. PaliGemma is designed for class-leading fine-tuning performance on a wide range of vision-language tasks and is also supported by NVIDIA JAX-Toolbox.

Gemma 2 and PaliGemma will be offered with NVIDIA NIM inference microservices, part of the NVIDIA AI Enterprise software platform, which simplifies the deployment of AI models at scale. NIM support for the two new models are available from the API catalog, starting with PaliGemma today; they soon will be released as containers on NVIDIA NGC and GitHub.

Bringing Accelerated Data Analytics to Colab

Google also announced that RAPIDS cuDF, an open-source GPU dataframe library, is now supported by default on Google Colab, one of the most popular developer platforms for data scientists. It now takes just a few seconds for Google Colab’s 10 million monthly users to accelerate pandas-based Python workflows by up to 50x using NVIDIA L4 Tensor Core GPUs, with zero code changes.

With RAPIDS cuDF, developers using Google Colab can speed up exploratory analysis and production data pipelines. While pandas is one of the world’s most popular data processing tools due to its intuitive API, applications often struggle as their data sizes grow. With even 5-10GB of data, many simple operations can take minutes to finish on a CPU, slowing down exploratory analysis and production data pipelines.

RAPIDS cuDF is designed to solve this problem by seamlessly accelerating pandas code on GPUs where applicable, and falling back to CPU-pandas where not. With RAPIDS cuDF available by default on Colab, all developers everywhere can leverage accelerated data analytics.

Taking AI on the Road

By employing AI PCs using NVIDIA RTX graphics, Google and NVIDIA also announced a Firebase Genkit collaboration that enables app developers to easily integrate generative AI models, like the new family of Gemma models, into their web and mobile applications to deliver custom content, provide semantic search and answer questions. Developers can start work streams using local RTX GPUs before moving their work seamlessly to Google Cloud infrastructure.

To make this even easier, developers can build apps with Genkit using JavaScript, a programming language mobile developers commonly use to build their apps.

The Innovation Beat Goes On

NVIDIA and Google Cloud are collaborating in multiple domains to propel AI forward. From the upcoming Grace Blackwell-powered DGX Cloud platform and JAX framework support, to bringing the NVIDIA NeMo framework to Google Kubernetes Engine, the companies’ full-stack partnership expands the possibilities of what customers can do with AI using NVIDIA technologies on Google Cloud.

]]>Following an announcement by Japan’s Ministry of Economy, Trade and Industry, NVIDIA will play a central role in developing the nation’s generative AI infrastructure as Japan seeks to capitalize on the technology’s economic potential and further develop its workforce.

NVIDIA is collaborating with key digital infrastructure providers, including GMO Internet Group, Highreso, KDDI Corporation, RUTILEA, SAKURA internet Inc. and SoftBank Corp., which the ministry has certified to spearhead the development of cloud infrastructure crucial for AI applications.

Over the last two months, the ministry announced plans to allocate $740 million, approximately ¥114.6 billion, to assist six local firms in this initiative. Following on from last year, this is a significant effort by the Japanese government to subsidize AI computing resources, by expanding the number of companies involved.

With this move, Japan becomes the latest nation to embrace the concept of sovereign AI, aiming to fortify its local startups, enterprises and research efforts with advanced AI technologies.

Across the globe, nations are building up domestic computing capacity through various models. Some procure and operate sovereign AI clouds with state-owned telecommunications providers or utilities. Others are sponsoring local cloud partners to provide a shared AI computing platform for public and private sector use.

Japan’s initiative follows NVIDIA founder and CEO Jensen Huang’s visit last year, where he met with political and business leaders — including Japanese Prime Minister Fumio Kishida — to discuss the future of AI.

During his trip, Huang emphasized that “AI factories” — next-generation data centers designed to handle the most computationally intensive AI tasks — are crucial for turning vast amounts of data into intelligence. “The AI factory will become the bedrock of modern economies across the world,” Huang said during a meeting with the Japanese press in December.

The Japanese government plans to subsidize a significant portion of the costs for building AI supercomputers, which will facilitate AI adoption, enhance workforce skills, support Japanese language model development and bolster resilience against natural disasters and climate change.

Under the country’s Economic Security Promotion Act, the ministry aims to secure a stable supply of local cloud services, reducing the time and cost of developing next-generation AI technologies.

Japan’s technology powerhouses are already moving fast to embrace AI. Last week, SoftBank Corp. announced that it will invest ¥150 billion, approximately $960 million, for its plan to expand the infrastructure needed to develop Japan’s top-class AI, including purchases of NVIDIA accelerated computing.

The news follows Huang’s meetings with leaders in Canada, France, India, Japan, Malaysia, Singapore and Vietnam over the past year, as countries seek to supercharge their regional economies and embrace challenges such as climate change with AI.

]]>Harnessing optimized AI models for healthcare is easier than ever as NVIDIA NIM, a collection of cloud-native microservices, integrates with Amazon Web Services.

NIM, part of the NVIDIA AI Enterprise software platform available on AWS Marketplace, enables developers to access a growing library of AI models through industry-standard application programming interfaces, or APIs. The library includes foundation models for drug discovery, medical imaging and genomics, backed by enterprise-grade security and support.

NIM is now available via Amazon SageMaker — a fully managed service to prepare data and build, train and deploy machine learning models — and AWS ParallelCluster, an open-source tool to deploy and manage high performance computing clusters on AWS. NIMs can also be orchestrated using AWS HealthOmics, a purpose-built service for biological data analysis.

Easy access to NIM will enable the thousands of healthcare and life sciences companies already using AWS to deploy generative AI more quickly, without the complexities of model development and packaging for production. It’ll also help developers build workflows that combine AI models across different modalities, such as amino acid sequences, MRI images and plain-text patient health records.

Presented today at the AWS Life Sciences Leader Symposium in Boston, this initiative extends the availability of NVIDIA Clara accelerated healthcare software and services on AWS — which include fast and easy-to-deploy NIMs from NVIDIA BioNeMo for drug discovery, NVIDIA MONAI for medical imaging workflows and NVIDIA Parabricks for accelerated genomics.

Pharma and Biotech Companies Adopt NVIDIA AI on AWS

BioNeMo is a generative AI platform of foundation models, training frameworks, domain-specific data loaders and optimized training recipes that support the training and fine-tuning of biology and chemistry models on proprietary data. It’s used by more than 100 organizations globally.

Amgen, one of the world’s leading biotechnology companies, has used the BioNeMo framework to train generative models for protein design, and is exploring the potential use of BioNeMo with AWS.