Starting with the release of CUDA in 2006, NVIDIA has driven advancements in AI and accelerated computing — and the most recent TOP500 list of the world’s most powerful supercomputers highlights the culmination of the company’s achievements in the field.

This year, 384 systems on the TOP500 list are powered by NVIDIA technologies. Among the 53 new to the list, 87% — 46 systems — are accelerated. Of those accelerated systems, 85% use NVIDIA Hopper GPUs, driving advancements in areas like climate forecasting, drug discovery and quantum simulation.

Accelerated computing is much more than floating point operations per second (FLOPS). It requires full-stack, application-specific optimization. At SC24 this week, NVIDIA announced the release of cuPyNumeric, an NVIDIA CUDA-X library that enables over 5 million developers to seamlessly scale to powerful computing clusters without modifying their Python code.

NVIDIA also revealed significant updates to the NVIDIA CUDA-Q development platform, which empowers quantum researchers to simulate quantum devices at a scale previously thought computationally impossible.

And, NVIDIA received nearly a dozen HPCwire Readers’ and Editors’ Choice awards across a variety of categories, marking its 20th consecutive year of recognition.

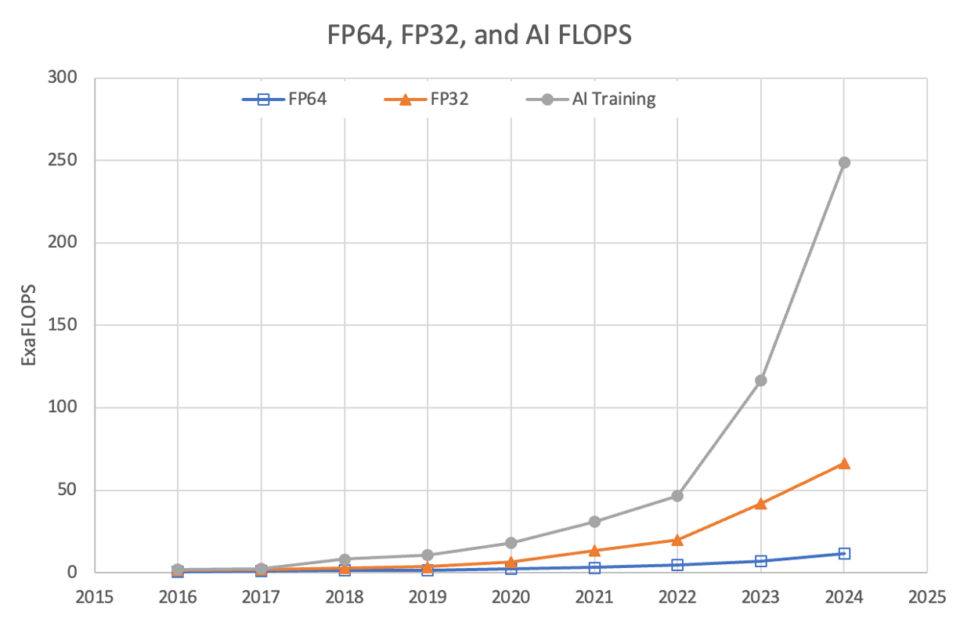

A New Era of Scientific Discovery With Mixed Precision and AI

Mixed-precision floating-point operations and AI have become the tools of choice for researchers grappling with the complexities of modern science. They offer greater speed, efficiency and adaptability than traditional methods, without compromising accuracy.

This shift isn’t just theoretical — it’s already happening. At SC24, two Gordon Bell finalist projects revealed how using AI and mixed precision helped advance genomics and protein design.

In his paper titled “Using Mixed Precision for Genomics,” David Keyes, a professor at King Abdullah University of Science and Technology, used 0.8 exaflops of mixed-precision performance to explore the relationships between genomes and their generalized genotypes, and between those genotypes and the prevalence of the diseases to which they are subject.

Similarly, Arvind Ramanathan, a computational biologist from the Argonne National Laboratory, harnessed 3 exaflops of AI performance on the NVIDIA Grace Hopper-powered Alps system to speed up protein design.

To further advance AI-driven drug discovery and the development of lifesaving therapies, researchers can use NVIDIA BioNeMo, powerful tools designed specifically for pharmaceutical applications. Now in open source, the BioNeMo Framework can accelerate AI model creation, customization and deployment for drug discovery and molecular design.

Across the TOP500, the widespread use of AI and mixed-precision floating-point operations reflects a global shift in computing priorities. A total of 249 exaflops of AI performance are now available to TOP500 systems, supercharging innovations and discoveries across industries.

NVIDIA-accelerated TOP500 systems excel across key metrics like AI and mixed-precision system performance. With over 190 exaflops of AI performance and 17 exaflops of single-precision (FP32) performance, NVIDIA’s accelerated computing platform is the new engine of scientific computing. NVIDIA also delivers 4 exaflops of double-precision (FP64) performance for certain scientific calculations that still require it.
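One common way lower and higher precision can be combined without giving up accuracy is iterative refinement: do the expensive solve in FP32, then correct the answer using residuals computed in FP64. The snippet below is a minimal NumPy sketch of that idea on a small, well-conditioned random system chosen purely for illustration. It is not the method used in either Gordon Bell finalist project, and the matrix size and number of refinement steps are assumptions.

```python
import numpy as np

def mixed_precision_solve(A, b, refinements=3):
    """Solve A x = b with FP32 solves plus FP64 residual corrections."""
    A32 = A.astype(np.float32)
    # Initial solve entirely in single precision.
    x = np.linalg.solve(A32, b.astype(np.float32)).astype(np.float64)
    for _ in range(refinements):
        r = b - A @ x                                     # residual in FP64
        dx = np.linalg.solve(A32, r.astype(np.float32))   # correction in FP32
        x += dx.astype(np.float64)
    return x

rng = np.random.default_rng(0)
n = 200
A = rng.random((n, n)) + n * np.eye(n)   # diagonally dominant, well conditioned
x_true = rng.random(n)
b = A @ x_true

# Baseline: a single FP32 solve with no refinement.
x_fp32 = np.linalg.solve(A.astype(np.float32), b.astype(np.float32)).astype(np.float64)
x_mixed = mixed_precision_solve(A, b)

err_fp32 = np.linalg.norm(x_fp32 - x_true) / np.linalg.norm(x_true)
err_mixed = np.linalg.norm(x_mixed - x_true) / np.linalg.norm(x_true)
print(f"FP32 only          : relative error {err_fp32:.2e}")
print(f"FP32 + FP64 refine : relative error {err_mixed:.2e}")
```

For simplicity the sketch re-solves the FP32 system on every pass. A production solver would typically factor the matrix once in low precision and reuse that factorization for each correction.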

Accelerated Computing Is Sustainable Computing

As the demand for computational capacity grows, so does the need for sustainability.

In the Green500 list of the world’s most energy-efficient supercomputers, systems with NVIDIA accelerated computing hold eight of the top 10 spots. The JEDI system at EuroHPC/FZJ, for example, achieves a staggering 72.7 gigaflops per watt, setting a benchmark for what’s possible when performance and sustainability align.
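To put a gigaflops-per-watt figure in perspective, it can be converted into the power needed to sustain a given compute rate. The short calculation below is a back-of-the-envelope sketch: it takes the 72.7 gigaflops per watt quoted above and asks how much power a hypothetical system would draw to sustain one exaflop at that same efficiency. The one-exaflop target is an assumption for illustration, not a description of JEDI, and real efficiency does not necessarily hold constant as systems grow.

```python
GFLOPS_PER_WATT = 72.7                      # efficiency figure quoted in the text
flops_per_watt = GFLOPS_PER_WATT * 1e9      # convert gigaflops/W to flops/W

def power_megawatts(sustained_flops, flops_per_watt):
    """Power (in MW) needed to sustain a FLOPS rate at a given efficiency."""
    return sustained_flops / flops_per_watt / 1e6

one_exaflop = 1e18                          # hypothetical target rate
mw = power_megawatts(one_exaflop, flops_per_watt)
print(f"{mw:.1f} MW to sustain 1 exaflop at {GFLOPS_PER_WATT} gigaflops per watt")
```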

For climate forecasting, NVIDIA announced at SC24 two new NVIDIA NIM microservices for NVIDIA Earth-2, a digital twin platform for simulating and visualizing weather and climate conditions. The CorrDiff NIM and FourCastNet NIM microservices can accelerate climate change modeling and simulation results by up to 500x.

In a world increasingly conscious of its environmental footprint, NVIDIA’s innovations in accelerated computing balance high performance with energy efficiency to help realize a brighter, more sustainable future.

Supercomputing Community Embraces NVIDIA

The 11 HPCwire Readers’ Choice and Editors’ Choice awards NVIDIA received represent the work of the entire scientific community of engineers, developers, researchers, partners, customers and more.

The awards include:

- Readers’ Choice: Best AI Product or Technology – NVIDIA GH200 Grace Hopper Superchip

- Readers’ Choice: Best HPC Interconnect Product or Technology – NVIDIA Quantum-X800

- Readers’ Choice: Best HPC Server Product or Technology – NVIDIA Grace CPU Superchip

- Readers’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Quantum-X800

- Readers’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Spectrum-X

- Readers’ and Editors’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Blackwell GPU

- Editors’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA CUDA-Q

- Readers’ Choice: Top 5 Vendors to Watch – NVIDIA

- Readers’ Choice: Best HPC Response to Societal Plight – NVIDIA Earth-2

- Editors’ Choice: Best Use of HPC in Energy (one of two named contributors) – Real-time simulation of CO2 plume migration in carbon capture and storage

- Readers’ Choice: Best HPC Collaboration (one of 11 named contributors) – National Artificial Intelligence Research Resource Pilot

Watch the replay of NVIDIA’s special address at SC24 and learn more about the company’s news in the SC24 online press kit.

See notice regarding software product information.

NVIDIA kicked off SC24 in Atlanta with a wave of AI and supercomputing tools set to revolutionize industries like biopharma and climate science.

The announcements, delivered by NVIDIA founder and CEO Jensen Huang and Vice President of Accelerated Computing Ian Buck, are rooted in the company’s deep history in transforming computing.

“Supercomputers are among humanity’s most vital instruments, driving scientific breakthroughs and expanding the frontiers of knowledge,” Huang said. “Twenty-five years after creating the first GPU, we have reinvented computing and sparked a new industrial revolution.”

NVIDIA’s journey in accelerated computing began with CUDA in 2006 and the first GPU for scientific computing, Huang said.

Milestones like Tokyo Tech’s Tsubame supercomputer in 2008, the Oak Ridge National Laboratory’s Titan supercomputer in 2012 and the AI-focused NVIDIA DGX-1 delivered to OpenAI in 2016 highlight NVIDIA’s transformative role in the field.

“Since CUDA’s inception, we’ve driven down the cost of computing by a millionfold,” Huang said. “For some, NVIDIA is a computational microscope, allowing them to see the impossibly small. For others, it’s a telescope exploring the unimaginably distant. And for many, it’s a time machine, letting them do their life’s work within their lifetime.”

At SC24, NVIDIA’s announcements spanned tools for next-generation drug discovery, real-time climate forecasting and quantum simulations.

Central to the company’s advancements are CUDA-X libraries, described by Huang as “the engines of accelerated computing,” which power everything from AI-driven healthcare breakthroughs to quantum circuit simulations.

Huang and Buck highlighted examples of real-world impact, including Nobel Prize-winning breakthroughs in neural networks and protein prediction, powered by NVIDIA technology.

“AI will accelerate scientific discovery, transforming industries and revolutionizing every one of the world’s $100 trillion markets,” Huang said.

CUDA-X Libraries Power New Frontiers

At SC24, NVIDIA announced the new cuPyNumeric library, a GPU-accelerated implementation of NumPy, designed to supercharge applications in data science, machine learning and numerical computing.

With over 400 CUDA-X libraries, including cuDNN for deep learning and cuQuantum for quantum circuit simulations, NVIDIA continues to lead in enhancing computing capabilities across various industries.

Real-Time Digital Twins With Omniverse Blueprint

NVIDIA unveiled the NVIDIA Omniverse Blueprint for real-time computer-aided engineering digital twins, a reference workflow designed to help developers create interactive digital twins for industries like aerospace, automotive, energy and manufacturing.

Built on NVIDIA acceleration libraries, physics-AI frameworks and interactive, physically based rendering, the blueprint accelerates simulations by up to 1,200x, setting a new standard for real-time interactivity.

Early adopters, including Siemens, Altair, Ansys and Cadence, are already using the blueprint to optimize workflows, cut costs and bring products to market faster.

Quantum Leap With CUDA-Q

NVIDIA’s focus on real-time, interactive technologies extends across fields, from engineering to quantum simulations.

In partnership with Google, NVIDIA’s CUDA-Q now powers detailed dynamical simulations of quantum processors, reducing weeks-long calculations to minutes.

Buck explained that with CUDA-Q, developers of all quantum processors can perform larger simulations and explore more scalable qubit designs.

AI Breakthroughs in Drug Discovery and Chemistry

With the open-source release of BioNeMo Framework, NVIDIA is advancing AI-driven drug discovery as researchers gain powerful tools tailored specifically for pharmaceutical applications.

BioNeMo accelerates training by 2x compared to other AI software, enabling faster development of lifesaving therapies.

NVIDIA also unveiled DiffDock 2.0, a breakthrough tool for predicting how drugs bind to target proteins — critical for drug discovery.

Powered by the new cuEquivariance library, DiffDock 2.0 is 6x faster than before, enabling researchers to screen millions of molecules with unprecedented speed and accuracy.

And with the NVIDIA ALCHEMI NIM microservice, NVIDIA introduces generative AI to chemistry, allowing researchers to design and evaluate novel materials with incredible speed.

Scientists start by defining the properties they want — like strength, conductivity, low toxicity or even color, Buck explained.

A generative model suggests thousands of potential candidates with the desired properties. Then the ALCHEMI NIM sorts candidate compounds for stability by solving for their lowest energy states using NVIDIA Warp.

This microservice is a game-changer for materials discovery, helping developers tackle challenges in renewable energy and beyond.

These innovations demonstrate how NVIDIA is harnessing AI to drive breakthroughs in science, transforming industries and enabling faster solutions to global challenges.

Earth-2 NIM Microservices: Redefining Climate Forecasts in Real Time

Buck also announced two new microservices — CorrDiff NIM and FourCastNet NIM — to accelerate climate change modeling and simulation results by up to 500x in the NVIDIA Earth-2 platform.

Earth-2, a digital twin for simulating and visualizing weather and climate conditions, is designed to empower weather technology companies with advanced generative AI-driven capabilities.

These tools deliver higher-resolution and more accurate predictions, enabling the forecasting of extreme weather events with unprecedented speed and energy efficiency.

With natural disasters causing $62 billion in insured losses in the first half of this year — 70% higher than the 10-year average — NVIDIA’s innovations address a growing need for precise, real-time climate forecasting. These tools highlight NVIDIA’s commitment to leveraging AI for societal resilience and climate preparedness.

Expanding Production With Foxconn Collaboration

As demand for AI systems like the Blackwell supercomputer grows, NVIDIA is scaling production through new Foxconn facilities in the U.S., Mexico and Taiwan.

Foxconn is building the production and testing facilities using NVIDIA Omniverse to bring up the factories as fast as possible.

Scaling New Heights With Hopper

NVIDIA also announced the general availability of the NVIDIA H200 NVL, a PCIe GPU based on the NVIDIA Hopper architecture optimized for low-power, air-cooled data centers.

The H200 NVL offers up to 1.7x faster large language model inference and 1.3x more performance on HPC applications, making it ideal for flexible data center configurations.

It supports a variety of AI and HPC workloads, enhancing performance while optimizing existing infrastructure.

And the GB200 Grace Blackwell NVL4 Superchip integrates four NVIDIA NVLink-connected Blackwell GPUs unified with two Grace CPUs over NVLink-C2C, Buck said. It provides up to 2x performance for scientific computing, training and inference applications over the prior generation.

The GB200 NVL4 Superchip will be available in the second half of 2025.

The talk wrapped up with an invitation to attendees to visit NVIDIA’s booth at SC24 to interact with various demos, including James, NVIDIA’s digital human; the world’s first real-time interactive wind tunnel; and the Earth-2 NIM microservices for climate modeling.

Learn more about how NVIDIA’s innovations are shaping the future of science at SC24.

NVIDIA today at SC24 announced two new NVIDIA NIM microservices that can accelerate climate change modeling simulation results by 500x in NVIDIA Earth-2.

Earth-2 is a digital twin platform for simulating and visualizing weather and climate conditions. The new NIM microservices offer climate technology application providers advanced generative AI-driven capabilities to assist in forecasting extreme weather events.

NVIDIA NIM microservices help accelerate the deployment of foundation models while keeping data secure.

Extreme weather incidents are increasing in frequency, raising concerns over disaster safety and preparedness, and possible financial impacts.

Natural disasters were responsible for roughly $62 billion of insured losses during the first half of this year. That’s about 70% more than the 10-year average, according to a report in Bloomberg.

NVIDIA is releasing the CorrDiff NIM and FourCastNet NIM microservices to help weather technology companies more quickly develop higher-resolution and more accurate predictions. The NIM microservices also deliver leading energy efficiency compared with traditional systems.

New CorrDiff NIM Microservices for Higher-Resolution Modeling

NVIDIA CorrDiff is a generative AI model for kilometer-scale super resolution. Its capability to super-resolve typhoons over Taiwan was recently shown at GTC 2024. CorrDiff was trained on the Weather Research and Forecasting (WRF) model’s numerical simulations to generate weather patterns at 12x higher resolution.

High-resolution forecasts that resolve features down to a few kilometers are essential to meteorologists and industries. The insurance and reinsurance industries, for example, rely on detailed weather data for assessing risk profiles. But achieving this level of detail using traditional numerical weather prediction models like WRF or High-Resolution Rapid Refresh is often too costly and time-consuming to be practical.

The CorrDiff NIM microservice is 500x faster and 10,000x more energy-efficient than traditional high-resolution numerical weather prediction using CPUs. Also, CorrDiff is now operating at 300x larger scale. It now performs super-resolution, that is, increasing the resolution of lower-resolution images or videos, for the entire United States, and it predicts precipitation events, including snow, ice and hail, at kilometer-scale resolution.

Enabling Large Sets of Forecasts With New FourCastNet NIM Microservice

Not every use case requires high-resolution forecasts. Some applications benefit more from larger sets of forecasts at coarser resolution.

State-of-the-art numerical models like IFS and GFS are limited to 50 and 20 sets of forecasts, respectively, due to computational constraints.

The FourCastNet NIM microservice, available today, offers global, medium-range coarse forecasts. By using the initial assimilated state from operational weather centers such as the European Centre for Medium-Range Weather Forecasts or the National Oceanic and Atmospheric Administration, providers can generate forecasts for the next two weeks, 5,000x faster than traditional numerical weather models.

This opens new opportunities for climate tech providers to estimate risks related to extreme weather at a different scale, enabling them to predict the likelihood of low-probability events that current computational pipelines overlook.
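Why larger sets of forecasts help with low-probability events can be shown with a little sampling arithmetic: an ensemble of N members cannot report a nonzero probability smaller than 1/N. The snippet below is a toy sketch using synthetic standard-normal draws in place of real forecasts. The 50-member size echoes the IFS figure above, while the 5,000-member size, the threshold and the distribution are all assumptions for illustration, and nothing here calls FourCastNet itself.

```python
import math
import numpy as np

def exceedance_probability(members, threshold):
    """Fraction of ensemble members whose value exceeds the threshold."""
    return float(np.mean(members > threshold))

rng = np.random.default_rng(42)
threshold = 2.5   # hypothetical "extreme" level, in standard deviations

# Analytic tail probability of a standard normal, used only as ground truth.
true_p = 0.5 * math.erfc(threshold / math.sqrt(2))

small = rng.standard_normal(50)      # synthetic 50-member ensemble
large = rng.standard_normal(5000)    # synthetic, hypothetical 5,000-member ensemble

p_small = exceedance_probability(small, threshold)
p_large = exceedance_probability(large, threshold)

print(f"true probability      : {true_p:.4f}")
print(f"50-member estimate    : {p_small:.4f} (smallest nonzero value {1 / 50:.4f})")
print(f"5,000-member estimate : {p_large:.4f} (smallest nonzero value {1 / 5000:.4f})")
```

In this toy setup the event is rarer than 1 in 50, so the small ensemble can only report zero or overshoot, while the larger one has enough members to resolve it.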

Learn more about CorrDiff and FourCastNet NIM microservices on ai.nvidia.com.

Whether they’re looking at nanoscale electron behaviors or starry galaxies colliding millions of light years away, many scientists share a common challenge: they must comb through petabytes of data to extract insights that can advance their fields.

With the NVIDIA cuPyNumeric accelerated computing library, researchers can now take their data-crunching Python code and effortlessly run it on CPU-based laptops and GPU-accelerated workstations, cloud servers or massive supercomputers. The faster they can work through their data, the quicker they can make decisions about promising data points, trends worth investigating and adjustments to their experiments.

To make the leap to accelerated computing, researchers don’t need expertise in computer science. They can simply write code using the familiar NumPy interface or apply cuPyNumeric to existing code, following best practices for performance and scalability.

Once cuPyNumeric is applied, they can run their code on one or thousands of GPUs with zero code changes.

The latest version of cuPyNumeric, now available on Conda and GitHub, offers support for the NVIDIA GH200 Grace Hopper Superchip, automatic resource configuration at run time and improved memory scaling. It also supports HDF5, a popular file format in the scientific community that helps efficiently manage large, complex data.

Researchers at the SLAC National Accelerator Laboratory, Los Alamos National Laboratory, Australian National University, UMass Boston, the Center for Turbulence Research at Stanford University and the National Payments Corporation of India are among those who have integrated cuPyNumeric to achieve significant improvements in their data analysis workflows.

Less Is More: Limitless GPU Scalability Without Code Changes

Python is the most common programming language for data science, machine learning and numerical computing, used by millions of researchers in scientific fields including astronomy, drug discovery, materials science and nuclear physics. Tens of thousands of packages on GitHub depend on the NumPy math and matrix library, which had over 300 million downloads last month. All of these applications could benefit from accelerated computing with cuPyNumeric.

Many of these scientists build programs that use NumPy and run on a single CPU-only node — limiting the throughput of their algorithms to crunch through increasingly large datasets collected by instruments like electron microscopes, particle colliders and radio telescopes.

cuPyNumeric helps researchers keep pace with the growing size and complexity of their datasets by providing a drop-in replacement for NumPy that can scale to thousands of GPUs. cuPyNumeric doesn’t require code changes when scaling from a single GPU to a whole supercomputer. This makes it easy for researchers to run their analyses on accelerated computing systems of any size.
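In practice, “drop-in replacement” means the change is confined to the import line. The snippet below is a minimal sketch of that pattern: it imports cuPyNumeric under the usual NumPy alias and falls back to plain NumPy when the library is not installed, so the same script runs either way. The Jacobi heat-diffusion stencil, grid size and iteration count are illustrative assumptions, not taken from any of the projects described here.

```python
try:
    # cuPyNumeric is imported under the usual NumPy alias.
    import cupynumeric as np
except ImportError:
    # Fallback so the sketch still runs where cuPyNumeric is not installed.
    import numpy as np

def jacobi_step(grid):
    """One Jacobi relaxation step: each interior cell becomes the mean of its four neighbors."""
    new = grid.copy()
    new[1:-1, 1:-1] = 0.25 * (
        grid[:-2, 1:-1] + grid[2:, 1:-1] + grid[1:-1, :-2] + grid[1:-1, 2:]
    )
    return new

grid = np.zeros((64, 64))
grid[0, :] = 100.0            # hot top edge; the other edges stay at 0
for _ in range(200):
    grid = jacobi_step(grid)

print("mean interior temperature:", float(grid[1:-1, 1:-1].mean()))
```

Everything below the import is ordinary array code. How many GPUs or nodes it runs on is decided when the program is launched, not inside the script.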

Solving the Big Data Problem, Accelerating Scientific Discovery

Researchers at SLAC National Accelerator Laboratory, a U.S. Department of Energy lab operated by Stanford University, have found that cuPyNumeric helps them speed up X-ray experiments conducted at the Linac Coherent Light Source.

A SLAC team focused on materials science discovery for semiconductors found that cuPyNumeric accelerated its data analysis application by 6x, decreasing run time from minutes to seconds. This speedup allows the team to run important analyses in parallel when conducting experiments at this highly specialized facility.

By using experiment hours more efficiently, the team anticipates it will be able to discover new material properties, share results and publish work more quickly.

Other institutions using cuPyNumeric include:

- Australian National University, where researchers used cuPyNumeric to scale the Levenberg-Marquardt optimization algorithm to run on multi-GPU systems at the country’s National Computational Infrastructure. While the algorithm can be used for many applications, the researchers’ initial target is large-scale climate and weather models.

- Los Alamos National Laboratory, where researchers are applying cuPyNumeric to accelerate data science, computational science and machine learning algorithms. cuPyNumeric will provide them with additional tools to effectively use the recently launched Venado supercomputer, which features over 2,500 NVIDIA GH200 Grace Hopper Superchips.

- Stanford University’s Center for Turbulence Research, where researchers are developing Python-based computational fluid dynamics solvers that can run at scale on large accelerated computing clusters using cuPyNumeric. These solvers can seamlessly integrate large collections of fluid simulations with popular machine learning libraries like PyTorch, enabling complex applications including online training and reinforcement learning.

- UMass Boston, where a research team is accelerating linear algebra calculations to analyze microscopy videos and determine the energy dissipated by active materials. The team used cuPyNumeric to decompose a matrix of 16 million rows and 4,000 columns.

- National Payments Corporation of India, the organization behind a real-time digital payment system used by around 250 million Indians daily and expanding globally. NPCI uses complex matrix calculations to track transaction paths between payers and payees. With current methods, it takes about 5 hours to process data for a one-week transaction window on CPU systems. A trial showed that applying cuPyNumeric to accelerate the calculations on multi-node NVIDIA DGX systems could speed up matrix multiplication by 50x, enabling NPCI to process larger transaction windows in less than an hour and detect suspected money laundering in near real time.
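The idea of tracking transaction paths with matrix calculations, as in the NPCI example above, can be illustrated with powers of an adjacency matrix: entry (i, j) of the k-th power counts the k-hop routes from account i to account j. The snippet below is a toy sketch in plain NumPy with four made-up accounts. It is an assumption about the general technique, not NPCI’s actual pipeline, and the ledger, account numbering and hop limit are hypothetical.

```python
import numpy as np

# Hypothetical toy ledger: (payer, payee) pairs between four accounts.
transfers = [(0, 1), (1, 2), (0, 2), (2, 3)]
n_accounts = 4

# Adjacency matrix of the transaction graph.
A = np.zeros((n_accounts, n_accounts), dtype=np.int64)
for payer, payee in transfers:
    A[payer, payee] = 1

def paths_up_to(A, max_hops):
    """Count directed paths of length 1..max_hops between every pair of accounts."""
    total = np.zeros_like(A)
    power = np.eye(A.shape[0], dtype=A.dtype)
    for _ in range(max_hops):
        power = power @ A        # power now holds A**k: paths with exactly k hops
        total += power
    return total

paths = paths_up_to(A, max_hops=3)
print(paths[0, 3])   # prints 2: routes 0->2->3 and 0->1->2->3
```

The repeated matrix multiplication is the expensive step on real transaction volumes, which is the kind of operation the 50x figure in the text refers to.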

To learn more about cuPyNumeric, see a live demo in the NVIDIA booth at the Supercomputing 2024 conference in Atlanta, join the theater talk in the expo hall and participate in the cuPyNumeric workshop.

Watch the NVIDIA special address at SC24.

Since its introduction, the NVIDIA Hopper architecture has transformed the AI and high-performance computing (HPC) landscape, helping enterprises, researchers and developers tackle the world’s most complex challenges with higher performance and greater energy efficiency.

During the Supercomputing 2024 conference, NVIDIA announced the availability of the NVIDIA H200 NVL PCIe GPU — the latest addition to the Hopper family. H200 NVL is ideal for organizations with data centers looking for lower-power, air-cooled enterprise rack designs with flexible configurations to deliver acceleration for every AI and HPC workload, regardless of size.

According to a recent survey, roughly 70% of enterprise racks are 20kW and below and use air cooling. This makes PCIe GPUs essential, as they provide granularity of node deployment, whether using one, two, four or eight GPUs — enabling data centers to pack more computing power into smaller spaces. Companies can then use their existing racks and select the number of GPUs that best suits their needs.

Enterprises can use H200 NVL to accelerate AI and HPC applications, while also improving energy efficiency through reduced power consumption. With a 1.5x memory increase and 1.2x bandwidth increase over NVIDIA H100 NVL, companies can use H200 NVL to fine-tune LLMs within a few hours and deliver up to 1.7x faster inference performance. For HPC workloads, performance is boosted up to 1.3x over H100 NVL and 2.5x over the NVIDIA Ampere architecture generation.

Complementing the raw power of the H200 NVL is NVIDIA NVLink technology. The latest generation of NVLink provides GPU-to-GPU communication 7x faster than fifth-generation PCIe — delivering higher performance to meet the needs of HPC, large language model inference and fine-tuning.

The NVIDIA H200 NVL is paired with powerful software tools that enable enterprises to accelerate applications from AI to HPC. It comes with a five-year subscription for NVIDIA AI Enterprise, a cloud-native software platform for the development and deployment of production AI. NVIDIA AI Enterprise includes NVIDIA NIM microservices for the secure, reliable deployment of high-performance AI model inference.

Companies Tapping Into Power of H200 NVL

With H200 NVL, NVIDIA provides enterprises with a full-stack platform to develop and deploy their AI and HPC workloads.

Customers are seeing significant impact for multiple AI and HPC use cases across industries, such as visual AI agents and chatbots for customer service, trading algorithms for finance, medical imaging for improved anomaly detection in healthcare, pattern recognition for manufacturing, and seismic imaging for federal science organizations.

Dropbox is harnessing NVIDIA accelerated computing for its services and infrastructure.

“Dropbox handles large amounts of content, requiring advanced AI and machine learning capabilities,” said Ali Zafar, VP of Infrastructure at Dropbox. “We’re exploring H200 NVL to continually improve our services and bring more value to our customers.”

The University of New Mexico has been using NVIDIA accelerated computing in various research and academic applications.

“As a public research university, our commitment to AI enables the university to be on the forefront of scientific and technological advancements,” said Prof. Patrick Bridges, director of the UNM Center for Advanced Research Computing. “As we shift to H200 NVL, we’ll be able to accelerate a variety of applications, including data science initiatives, bioinformatics and genomics research, physics and astronomy simulations, climate modeling and more.”

H200 NVL Available Across Ecosystem

Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver a wide range of configurations supporting H200 NVL.

Additionally, H200 NVL will be available in platforms from Aivres, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, MSI, Pegatron, QCT, Wistron and Wiwynn.

Some systems are based on the NVIDIA MGX modular architecture, which enables computer makers to quickly and cost-effectively build a vast array of data center infrastructure designs.

Platforms with H200 NVL will be available from NVIDIA’s global systems partners beginning in December. To complement availability from leading global partners, NVIDIA is also developing an Enterprise Reference Architecture for H200 NVL systems.

The reference architecture will incorporate NVIDIA’s expertise and design principles, so partners and customers can design and deploy high-performance AI infrastructure based on H200 NVL at scale. This includes full-stack hardware and software recommendations, with detailed guidance on optimal server, cluster and network configurations. Networking is optimized for the highest performance with the NVIDIA Spectrum-X Ethernet platform.

NVIDIA technologies will be showcased on the showroom floor at SC24, taking place at the Georgia World Congress Center through Nov. 22. To learn more, watch NVIDIA’s special address.

Established 77 years ago, Mitsui & Co stays vibrant by building businesses and ecosystems with new technologies like generative AI and confidential computing.

Digital transformation takes many forms at the Tokyo-based conglomerate with 16 divisions. In one case, it’s an autonomous trucking service, in another it’s a geospatial analysis platform. Mitsui even collaborates with a partner at the leading edge of quantum computing.

One new subsidiary, Xeureka, aims to accelerate R&D in healthcare, where bringing a new drug to market can take more than a decade and cost more than a billion dollars.

“We create businesses using new digital technology like AI and confidential computing,” said Katsuya Ito, a project manager in Mitsui’s digital transformation group. “Most of our work is done in collaboration with tech companies, in this case NVIDIA and Fortanix,” a San Francisco-based security software company.

In Pursuit of Big Data

Though only three years old, Xeureka has already completed a proof of concept addressing one of drug discovery’s biggest problems: getting enough data.

Speeding drug discovery requires powerful AI models built with datasets larger than most pharmaceutical companies have on hand. Until recently, sharing across companies has been unthinkable because data often contains private patient information as well as chemical formulas proprietary to the drug company.

Enter confidential computing, a way of processing data in a protected part of a GPU or CPU that acts like a black box for an organization’s most important secrets.

To ensure their data is kept confidential at all times, banks, government agencies and even advertisers are using the technology that’s backed by a consortium of some of the world’s largest companies.

A Proof of Concept for Privacy

To validate that confidential computing would allow its customers to safely share data, Xeureka created two imaginary companies, each with a thousand drug candidates. Each company’s dataset was used separately to train an AI model to predict the chemicals’ toxicity levels. Then the data was combined to train a similar, but larger AI model.

Xeureka ran its test on NVIDIA H100 Tensor Core GPUs using security management software from Fortanix, one of the first startups to support confidential computing.

The H100 GPUs support a trusted execution environment with hardware-based engines that ensure and validate confidential workloads are protected while in use on the GPU, without compromising performance. The Fortanix software manages data sharing, encryption keys and the overall workflow.

Up to 74% Higher Accuracy

The results were impressive. The larger model’s predictions were 65-74% more accurate, thanks to use of the combined datasets.

The models created by a single company’s data showed instability and bias issues that were not present with the larger model, Ito said.
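The shape of that experiment (train on each company’s data separately, then on the pooled data, and compare) can be mimicked with a toy example. The snippet below is a deliberately simple sketch: a straight-line fit stands in for the AI model, a made-up quadratic stands in for toxicity, and each synthetic “company” only sees one region of chemical space. All names, data and functions are hypothetical, no confidential computing is involved, and the resulting numbers are not comparable to Xeureka’s reported 65-74% improvement.

```python
import numpy as np

rng = np.random.default_rng(7)

def toxicity(x):
    """Hypothetical ground-truth relationship between a descriptor x and toxicity."""
    return x ** 2

def make_dataset(low, high, n=1000):
    """Synthetic 'drug candidates' whose descriptor lies in [low, high)."""
    x = rng.uniform(low, high, n)
    y = toxicity(x) + rng.normal(0.0, 0.05, n)
    return x, y

def fit_linear(x, y):
    """Least-squares straight-line model; returns a prediction function."""
    slope, intercept = np.polyfit(x, y, deg=1)
    return lambda q: slope * q + intercept

def mse(model, x, y):
    """Mean squared error of a model on held-out data."""
    return float(np.mean((model(x) - y) ** 2))

xa, ya = make_dataset(0.0, 1.0)           # company A sees only one region
xb, yb = make_dataset(1.0, 2.0)           # company B sees only another
x_test, y_test = make_dataset(0.0, 2.0)   # held-out candidates from both regions

model_a = fit_linear(xa, ya)
model_b = fit_linear(xb, yb)
model_pooled = fit_linear(np.concatenate([xa, xb]), np.concatenate([ya, yb]))

mse_a = mse(model_a, x_test, y_test)
mse_b = mse(model_b, x_test, y_test)
mse_pooled = mse(model_pooled, x_test, y_test)
for name, value in [("A only", mse_a), ("B only", mse_b), ("pooled", mse_pooled)]:
    print(f"{name:7s} test MSE: {value:.3f}")
```

Each single-company model extrapolates poorly outside the region it was trained on, which is a simplified analogue of the bias Ito describes. The pooled model sees both regions and has lower error across the combined test set.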

“Confidential computing from NVIDIA and Fortanix essentially alleviates the privacy and security concerns while also improving model accuracy, which will prove to be a win-win situation for the entire industry,” said Xeureka’s CTO, Hiroki Makiguchi, in a Fortanix press release.

An AI Supercomputing Ecosystem

Now, Xeureka is exploring broad applications of this technology in drug discovery research, in collaboration with the community behind Tokyo-1, its GPU-accelerated AI supercomputer. Announced in February, Tokyo-1 aims to enhance the efficiency of pharmaceutical companies in Japan and beyond.

Initial projects may include collaborations to predict protein structures, screen ligand-base pairs and accelerate molecular dynamics simulations with trusted services. Tokyo-1 users can harness large language models for chemistry, protein, DNA and RNA data formats through the NVIDIA BioNeMo drug discovery microservices and framework.

It’s part of Mitsui’s broader strategic growth plan to develop software and services for healthcare, such as powering Japan’s $100 billion pharma industry, the world’s third largest following the U.S. and China.

Xeureka’s services will include using AI to quickly screen billions of drug candidates, to predict how useful molecules will bind with proteins and to simulate detailed chemical behaviors.

To learn more, read about NVIDIA Confidential Computing and NVIDIA BioNeMo, an AI platform for drug discovery.

NVIDIA founder and CEO Jensen Huang joined the king of Denmark to launch the country’s largest sovereign AI supercomputer, aimed at breakthroughs in quantum computing, clean energy, biotechnology and other areas serving Danish society and the world.

Denmark’s first AI supercomputer, named Gefion after a goddess in Danish mythology, is an NVIDIA DGX SuperPOD driven by 1,528 NVIDIA H100 Tensor Core GPUs and interconnected using NVIDIA Quantum-2 InfiniBand networking.

Gefion is operated by the Danish Center for AI Innovation (DCAI), a company established with funding from the Novo Nordisk Foundation, the world’s wealthiest charitable foundation, and the Export and Investment Fund of Denmark. The new AI supercomputer was symbolically turned on by King Frederik X of Denmark, Huang and Nadia Carlsten, CEO of DCAI, at an event in Copenhagen.

Huang sat down with Carlsten, a quantum computing industry leader, to discuss the public-private initiative to build one of the world’s fastest AI supercomputers in collaboration with NVIDIA.

The Gefion AI supercomputer comes to Copenhagen to serve industry, startups and academia.

“Gefion is going to be a factory of intelligence. This is a new industry that never existed before. It sits on top of the IT industry. We’re inventing something fundamentally new,” Huang said.

The launch of Gefion is an important milestone for Denmark in establishing its own sovereign AI. Sovereign AI can be achieved when a nation has the capacity to produce artificial intelligence with its own data, workforce, infrastructure and business networks. Having a supercomputer on national soil provides a foundation for countries to use their own infrastructure as they build AI models and applications that reflect their unique culture and language.

“What country can afford not to have this infrastructure, just as every country realizes you have communications, transportation, healthcare, fundamental infrastructures — the fundamental infrastructure of any country surely must be the manufacturer of intelligence,” said Huang. “For Denmark to be one of the handful of countries in the world that has now initiated on this vision is really incredible.”

The new supercomputer is expected to address global challenges with insights into infectious disease, climate change and food security. Gefion is now being prepared for users, and a pilot phase will begin to bring in projects that seek to use AI to accelerate progress, including in such areas as quantum computing, drug discovery and energy efficiency.

“The era of computer-aided drug discovery must be within this decade. I’m hoping that what the computer did to the technology industry, it will do for digital biology,” Huang said.

Supporting Next Generation of Breakthroughs With Gefion

The Danish Meteorological Institute (DMI) is in the pilot and aims to deliver faster and more accurate weather forecasts. It promises to reduce forecast times from hours to minutes while greatly reducing the energy footprint required for these forecasts when compared with traditional methods.

Researchers from the University of Copenhagen are tapping into Gefion to implement and carry out a large-scale distributed simulation of quantum computer circuits. Gefion enables the simulated system to increase from 36 to 40 entangled qubits, which brings it close to what’s known as “quantum supremacy,” or essentially outperforming a traditional computer while using fewer resources.
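To see why four extra qubits is a large step, consider a rough memory estimate. The sketch below assumes a full state-vector simulation that stores one double-precision complex amplitude (16 bytes) per basis state; the article does not say which simulation method or precision the researchers use, so both are assumptions made for illustration.

```python
# Memory needed to hold the full state vector of an n-qubit system.
# Assumption (not from the article): one complex128 amplitude per basis state.
BYTES_PER_AMPLITUDE = 16  # complex128 = two 64-bit floats


def state_vector_bytes(num_qubits: int) -> int:
    """Bytes needed to store all 2**num_qubits amplitudes."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def to_tib(num_bytes: int) -> float:
    """Convert bytes to tebibytes (2**40 bytes)."""
    return num_bytes / 2 ** 40


for n in (36, 40):
    print(f"{n} qubits: {to_tib(state_vector_bytes(n)):.0f} TiB")
# prints "36 qubits: 1 TiB" and "40 qubits: 16 TiB"
```

Under these assumptions, each added qubit doubles the memory, so moving from 36 to 40 qubits multiplies the footprint by 16. That is why simulations of this size are typically distributed across many GPUs.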

The University of Copenhagen and the Technical University of Denmark are working together on a multi-modal genomic foundation model for discoveries in disease mutation analysis and vaccine design. Their model will be used to improve signal detection and the functional understanding of genomes, made possible by the capability to train LLMs on Gefion.

Startup Go Autonomous seeks training time on Gefion to develop an AI model that understands and uses multi-modal input from text, layout and images. Another startup, Teton, is building an AI Care Companion with large video pretraining, using Gefion.

Addressing Global Challenges With Leading Supercomputer

The Gefion supercomputer and ongoing collaborations with NVIDIA will position Denmark, with its renowned research community, to pursue the world’s leading scientific challenges with enormous social impact as well as large-scale projects across industries.

With Gefion, researchers will be able to work with industry experts at NVIDIA to co-develop solutions to complex problems, including research in pharmaceuticals and biotechnology and protein design using the NVIDIA BioNeMo platform.

Scientists will also be collaborating with NVIDIA on fault-tolerant quantum computing using NVIDIA CUDA-Q, the open-source hybrid quantum computing platform.

AI can help solve some of the world’s biggest challenges — whether climate change, cancer or national security — U.S. Secretary of Energy Jennifer Granholm emphasized today during her remarks at the AI for Science, Energy and Security session at the NVIDIA AI Summit, in Washington, D.C.

Granholm went on to highlight the pivotal role AI is playing in tackling major national challenges, from energy innovation to bolstering national security.

“We need to use AI for both offense and defense — offense to solve these big problems and defense to make sure the bad guys are not using AI for nefarious purposes,” she said.

Granholm, who calls the Department of Energy “America’s Solutions Department,” highlighted the agency’s focus on solving the world’s biggest problems.

“Yes, climate change, obviously, but a whole slew of other problems, too … quantum computing and all sorts of next-generation technologies,” she said, pointing out that AI is a driving force behind many of these advances.

“AI can really help to solve some of those huge problems — whether climate change, cancer or national security,” she said. “The possibilities of AI for good are awesome, awesome.”

Following Granholm’s 15-minute address, a panel of experts from government, academia and industry took the stage to further discuss how AI accelerates advancements in scientific discovery, national security and energy innovation.

“AI is going to be transformative to our mission space.… We’re going to see these big step changes in capabilities,” said Helena Fu, director of the Office of Critical and Emerging Technologies at the Department of Energy, underscoring AI’s potential in safeguarding critical infrastructure and addressing cyber threats.

During her remarks, Granholm also stressed that AI’s increasing energy demands must be met responsibly.

“We are going to see about a 15% increase in power demand on our electric grid as a result of the data centers that we want to be located in the United States,” she explained.

However, the DOE is taking steps to meet this demand with clean energy.

“This year, in 2024, the United States will have added 30 Hoover Dams’ worth of clean power to our electric grid,” Granholm announced, emphasizing that the clean energy revolution is well underway.

AI’s Impact on Scientific Discovery and National Security

The discussion then shifted to how AI is revolutionizing scientific research and national security.

Tanya Das, director of the Energy Program at the Bipartisan Policy Center, pointed out that “AI can accelerate every stage of the innovation pipeline in the energy sector … starting from scientific discovery at the very beginning … going through to deployment and permitting.”

Das also highlighted the growing interest in Congress to support AI innovations, adding, “Congress is paying attention to this issue, and, I think, very motivated to take action on updating what the national vision is for artificial intelligence.”

Fu reiterated the department’s comprehensive approach, stating, “We cross from open science through national security, and we do this at scale.… Whether they be around energy security, resilience, climate change or the national security challenges that we’re seeing every day emerging.”

She also touched on the DOE’s future goals: “Our scientific systems will need access to AI systems,” Fu said, emphasizing the need to bridge both scientific reasoning and the new kinds of models we’ll need to develop for AI.

Collaboration Across Sectors: Government, Academia and Industry

Karthik Duraisamy, director of the Michigan Institute for Computational Discovery and Engineering at the University of Michigan, highlighted the power of collaboration in advancing scientific research through AI.

“Think about the scientific endeavor as 5% creativity and innovation and 95% intense labor. AI amplifies that 5% by a bit, and then significantly accelerates the 95% part,” Duraisamy explained. “That is going to completely transform science.”

Duraisamy further elaborated on the role AI could play as a persistent collaborator, envisioning a future where AI can work alongside scientists over weeks, months and years, generating new ideas and following through on complex projects.

“Instead of replacing graduate students, I think graduate students can be smarter than the professors on day one,” he said, emphasizing the potential for AI to support long-term research and innovation.

Learn more about how this week’s AI Summit highlights AI’s role in shaping the future across industries and how NVIDIA’s solutions are laying the groundwork for continued innovation.

A recently released joint research paper by NVIDIA, Moderna and Yale reviews how techniques from quantum machine learning (QML) may enhance drug discovery methods by better predicting molecular properties.

Ultimately, this could lead to the more efficient generation of new pharmaceutical therapies.

The review also emphasizes that the key tool for exploring these methods is GPU-accelerated simulation of quantum algorithms.

The study focuses on how future quantum neural networks can use quantum computing to enhance existing AI techniques.

Applied to the pharmaceutical industry, these advances offer researchers the ability to streamline complex tasks in drug discovery.

Researching how such quantum neural networks impact real-world use cases like drug discovery requires intensive, large-scale simulations of future noiseless quantum processing units (QPUs).

This is just one example of how, as quantum computing scales up, an increasing number of challenges are only approachable with GPU-accelerated supercomputing.

The review article explores how NVIDIA’s CUDA-Q quantum development platform provides a unique tool for running such multi-GPU accelerated simulations of QML workloads.

The study also highlights CUDA-Q’s ability to simulate multiple QPUs in parallel. This is a key ability for studying realistic large-scale devices, which, in this particular study, also allowed for the exploration of quantum machine learning tasks that batch training data.

Many of the QML techniques covered by the review — such as hybrid quantum convolution neural networks — also require CUDA-Q’s ability to write programs interweaving classical and quantum resources.

The increased reliance on GPU supercomputing demonstrated in this work is the latest example of NVIDIA’s growing involvement in developing useful quantum computers.

NVIDIA plans to further highlight its role in the future of quantum computing at the SC24 conference, Nov. 17-22 in Atlanta.

NVIDIA and Foxconn are building Taiwan’s largest supercomputer, marking a milestone in the island’s AI advancement.

The project, Hon Hai Kaohsiung Super Computing Center, revealed Tuesday at Hon Hai Tech Day, will be built around NVIDIA’s groundbreaking Blackwell architecture and feature the GB200 NVL72 platform, which includes a total of 64 racks and 4,608 Tensor Core GPUs.

With an expected AI performance of over 90 exaflops, the machine would easily be considered the fastest in Taiwan.

Foxconn plans to use the supercomputer, once operational, to power breakthroughs in cancer research, large language model development and smart city innovations, positioning Taiwan as a global leader in AI-driven industries.

Foxconn’s “three-platform strategy” focuses on smart manufacturing, smart cities and electric vehicles. The new supercomputer will play a pivotal role in supporting Foxconn’s ongoing efforts in digital twins, robotic automation and smart urban infrastructure, bringing AI-assisted services to urban areas like Kaohsiung.

Construction has started on the new supercomputer housed in Kaohsiung, Taiwan. The first phase is expected to be operational by mid-2025. Full deployment is targeted for 2026.

The project will integrate with NVIDIA technologies, such as NVIDIA Omniverse and Isaac robotics platforms for AI and digital twins technologies to help transform manufacturing processes.

“Powered by NVIDIA’s Blackwell platform, Foxconn’s new AI supercomputer is one of the most powerful in the world, representing a significant leap forward in AI computing and efficiency,” said Foxconn Vice President and Spokesperson James Wu.

The GB200 NVL72 is a state-of-the-art data center platform optimized for AI and accelerated computing.

Each rack features 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs connected via NVIDIA’s NVLink technology, delivering 130TB/s of bandwidth.

NVIDIA NVLink Switch allows the 72-GPU system to function as a single, unified GPU. This makes it ideal for training large AI models and executing complex inference tasks in real time on trillion-parameter models.
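The system totals follow directly from the per-rack figures. The short check below uses only numbers quoted in the article (64 racks, 72 GPUs and 36 CPUs per rack); the function and variable names are illustrative.

```python
# Figures quoted in the article for the Hon Hai Kaohsiung system.
RACKS = 64
GPUS_PER_RACK = 72   # NVIDIA Blackwell GPUs per GB200 NVL72 rack
CPUS_PER_RACK = 36   # NVIDIA Grace CPUs per GB200 NVL72 rack


def system_totals(racks: int, gpus_per_rack: int, cpus_per_rack: int) -> dict:
    """Multiply per-rack counts out to whole-system counts."""
    return {
        "gpus": racks * gpus_per_rack,
        "cpus": racks * cpus_per_rack,
    }


totals = system_totals(RACKS, GPUS_PER_RACK, CPUS_PER_RACK)
print(totals)  # {'gpus': 4608, 'cpus': 2304}
```

The GPU count matches the 4,608 Tensor Core GPUs cited for the full installation. The Grace CPU total of 2,304 is derived here and is not a figure stated in the article.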

Taiwan-based Foxconn, officially known as Hon Hai Precision Industry Co., is the world’s largest electronics manufacturer, known for producing a wide range of products, from smartphones to servers, for the world’s top technology brands.

With a vast global workforce and manufacturing facilities across the globe, Foxconn is key in supplying the world’s technology infrastructure. It is a leader in smart manufacturing as one of the pioneers of industrial AI as it digitalizes its factories in NVIDIA Omniverse.

Foxconn was also one of the first companies to use NVIDIA NIM microservices in the development of domain-specific large language models, or LLMs, embedded into a variety of internal systems and processes in its AI factories for smart manufacturing, smart electric vehicles and smart cities.

The Hon Hai Kaohsiung Super Computing Center is part of a growing global network of advanced supercomputing facilities powered by NVIDIA. This network includes several notable installations across Europe and Asia.

These supercomputers represent a significant leap forward in computational power, putting NVIDIA’s cutting-edge technology to work to advance research and innovation across various scientific disciplines.

Learn more about Hon Hai Tech Day.

Research published earlier this month in the science journal Nature used NVIDIA-powered supercomputers to validate a pathway toward the commercialization of quantum computing.

The research, led by Nobel laureate Giorgio Parisi and Massimo Bernaschi, director of technology at the National Research Council of Italy and a CUDA Fellow, focuses on quantum annealing, a method that may one day tackle complex optimization problems that are extraordinarily challenging to conventional computers.

To conduct their research, the team utilized 2 million GPU computing hours at the Leonardo facility (Cineca, in Bologna, Italy), nearly 160,000 GPU computing hours on the Meluxina-GPU cluster, in Luxembourg, and 10,000 GPU hours from the Spanish Supercomputing Network. Additionally, they accessed the Dariah cluster, in Lecce, Italy.

They used these state-of-the-art resources to simulate the behavior of a certain kind of quantum computing system known as a quantum annealer.

Quantum computers fundamentally rethink how information is computed to enable entirely new solutions.

Unlike classical computers, which process information in binary — 0s and 1s — quantum computers use quantum bits or qubits that can allow information to be processed in entirely new ways.

Quantum annealers are a special type of quantum computer that, though not universally useful, may have advantages for solving certain types of optimization problems.

The paper, “The Quantum Transition of the Two-Dimensional Ising Spin Glass,” represents a significant step in understanding the phase transition — a change in the properties of a quantum system — of Ising spin glass, a disordered magnetic material in a two-dimensional plane, a critical problem in computational physics.

The paper addresses the problem of how the properties of magnetic particles arranged in a two-dimensional plane can abruptly change their behavior.

The study also shows how GPU-powered systems play a key role in developing approaches to quantum computing.

GPU-accelerated simulations allow researchers to understand the complex systems’ behavior in developing quantum computers, illuminating the most promising paths forward.

Quantum annealers, like the systems developed by the pioneering quantum computing company D-Wave, operate by methodically decreasing a magnetic field that is applied to a set of magnetically susceptible particles.

When strong enough, the applied field will act to align the magnetic orientation of the particles — similar to how iron filings will uniformly stand to attention near a bar magnet.

If the strength of the field is varied slowly enough, the magnetic particles will arrange themselves to minimize the energy of the final arrangement.

Finding this stable, minimum-energy state is crucial in a particularly complex and disordered magnetic system known as a spin glass since quantum annealers can encode certain kinds of problems into the spin glass’s minimum-energy configuration.

Finding the stable arrangement of the spin glass then solves the problem.
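To make the idea of a minimum-energy configuration concrete, here is a minimal classical sketch. It builds a tiny two-dimensional Ising spin glass with random couplings and finds its ground state by exhaustive search. This is not the method used in the paper, and it is not quantum annealing; the lattice size, the ±1 couplings, the open boundaries and all function names are assumptions chosen for illustration.

```python
import itertools
import random


def make_couplings(size: int, seed: int = 0) -> dict:
    """Random +/-1 couplings between nearest neighbours on a size x size grid."""
    rng = random.Random(seed)
    couplings = {}
    for row in range(size):
        for col in range(size):
            site = row * size + col
            if col + 1 < size:  # bond to the right-hand neighbour
                couplings[(site, site + 1)] = rng.choice((-1, 1))
            if row + 1 < size:  # bond to the neighbour below
                couplings[(site, site + size)] = rng.choice((-1, 1))
    return couplings


def energy(spins, couplings) -> int:
    """Ising energy E = -sum over bonds of J_ij * s_i * s_j."""
    return -sum(j * spins[a] * spins[b] for (a, b), j in couplings.items())


def ground_state(size: int, couplings: dict):
    """Check all 2**(size*size) spin configurations and keep the lowest energy."""
    best = min(
        itertools.product((-1, 1), repeat=size * size),
        key=lambda spins: energy(spins, couplings),
    )
    return best, energy(best, couplings)


J = make_couplings(3)
spins, e_min = ground_state(3, J)
print("ground-state energy:", e_min)
print("spin configuration:", spins)
```

Exhaustive search doubles in cost with every added spin, so it is only feasible for toy lattices like this 3x3 example. That scaling is what motivates both quantum annealers and the large GPU-accelerated simulations described in the article.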

Understanding these systems helps scientists develop better algorithms for solving difficult problems by mimicking how nature deals with complexity and disorder.

That’s crucial for advancing quantum annealing and its applications in solving extremely difficult computational problems that currently have no known efficient solution — problems that are pervasive in fields ranging from logistics to cryptography.

Unlike gate-model quantum computers, which operate by applying a sequence of quantum gates, quantum annealers allow a quantum system to evolve freely in time.

This is not a universal computer — a device capable of performing any computation given sufficient time and resources — but may have advantages for solving particular sets of optimization problems in application areas such as vehicle routing, portfolio optimization and protein folding.

Through extensive simulations performed on NVIDIA GPUs, the researchers learned how key parameters of the spin glasses making up quantum annealers change during their operation, allowing a better understanding of how to use these systems to achieve a quantum speedup on important problems.

Much of the work for this groundbreaking paper was first presented at NVIDIA’s GTC 2024 technology conference. Read the full paper and learn more about NVIDIA’s work in quantum computing.

Enhancing Japan’s AI sovereignty and strengthening its research and development capabilities, Japan’s National Institute of Advanced Industrial Science and Technology (AIST) will integrate thousands of NVIDIA H200 Tensor Core GPUs into its AI Bridging Cloud Infrastructure 3.0 supercomputer (ABCI 3.0). The Hewlett Packard Enterprise Cray XD system will feature NVIDIA Quantum-2 InfiniBand networking for superior performance and scalability.

ABCI 3.0 is the latest iteration of Japan’s large-scale Open AI Computing Infrastructure designed to advance AI R&D. This collaboration underlines Japan’s commitment to advancing its AI capabilities and fortifying its technological independence.

“In August 2018, we launched ABCI, the world’s first large-scale open AI computing infrastructure,” said AIST Executive Officer Yoshio Tanaka. “Building on our experience over the past several years managing ABCI, we’re now upgrading to ABCI 3.0. In collaboration with NVIDIA and HPE we aim to develop ABCI 3.0 into a computing infrastructure that will advance further research and development capabilities for generative AI in Japan.”

“As generative AI prepares to catalyze global change, it’s crucial to rapidly cultivate research and development capabilities within Japan,” said AIST Solutions Co. Producer and Head of ABCI Operations Hirotaka Ogawa. “I’m confident that this major upgrade of ABCI in our collaboration with NVIDIA and HPE will enhance ABCI’s leadership in domestic industry and academia, propelling Japan towards global competitiveness in AI development and serving as the bedrock for future innovation.”

ABCI 3.0: A New Era for Japanese AI Research and Development

ABCI 3.0 is constructed and operated by AIST, its business subsidiary, AIST Solutions, and its system integrator, Hewlett Packard Enterprise (HPE).

The ABCI 3.0 project follows support from Japan’s Ministry of Economy, Trade and Industry, known as METI, for strengthening its computing resources through the Economic Security Fund and is part of a broader $1 billion initiative by METI that includes both ABCI efforts and investments in cloud AI computing.

NVIDIA is closely collaborating with METI on research and education following a visit last year by company founder and CEO, Jensen Huang, who met with political and business leaders, including Japanese Prime Minister Fumio Kishida, to discuss the future of AI.

NVIDIA’s Commitment to Japan’s Future

Huang pledged to collaborate on research, particularly in generative AI, robotics and quantum computing, to invest in AI startups and provide product support, training and education on AI.

During his visit, Huang emphasized that “AI factories” — next-generation data centers designed to handle the most computationally intensive AI tasks — are crucial for turning vast amounts of data into intelligence.

“The AI factory will become the bedrock of modern economies across the world,” Huang said during a meeting with the Japanese press in December.

With its ultra-high-density data center and energy-efficient design, ABCI provides a robust infrastructure for developing AI and big data applications.

The system is expected to come online by the end of this year and offer state-of-the-art AI research and development resources. It will be housed in Kashiwa, near Tokyo.

Unmatched Computing Performance and Efficiency

The facility will offer:

- 6 AI exaflops of computing capacity, a measure of AI-specific performance without sparsity

- 410 double-precision petaflops, a measure of general computing capacity

- Quantum-2 InfiniBand connectivity for each node, at 200GB/s of bisectional bandwidth

NVIDIA technology forms the backbone of this initiative, with hundreds of nodes each equipped with 8 NVLink-connected H200 GPUs providing unprecedented computational performance and efficiency.

NVIDIA H200 is the first GPU to offer over 140 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s). The H200’s larger and faster memory accelerates generative AI and LLMs, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.
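A quick back-of-the-envelope calculation shows what those memory figures mean in practice. The sketch below treats "over 140 GB" as exactly 140 GB, uses decimal units (1 TB = 1,000 GB), and assumes 8 GPUs per node as described above. The "full sweep" time is a simplification that assumes every byte in memory is read exactly once; it is not a measured benchmark.

```python
# Figures from the article; 140 GB is used as a lower bound for "over 140 GB".
HBM_CAPACITY_GB = 140
HBM_BANDWIDTH_TB_S = 4.8
GPUS_PER_NODE = 8


def full_sweep_ms(capacity_gb: float, bandwidth_tb_s: float) -> float:
    """Milliseconds to read the whole memory once (1 TB = 1000 GB)."""
    seconds = capacity_gb / (bandwidth_tb_s * 1000)
    return seconds * 1000


node_hbm_gb = GPUS_PER_NODE * HBM_CAPACITY_GB

print(f"One pass over GPU memory: {full_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TB_S):.1f} ms")
print(f"HBM per 8-GPU node: {node_hbm_gb} GB")
# prints "One pass over GPU memory: 29.2 ms" and "HBM per 8-GPU node: 1120 GB"
```

This matters for workloads such as LLM token generation, which tend to be limited by how quickly model weights can be streamed from memory. Larger and faster memory raises that ceiling.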

NVIDIA H200 GPUs are 15X more energy-efficient than ABCI’s previous-generation architecture for AI workloads such as LLM token generation.

The integration of advanced NVIDIA Quantum-2 InfiniBand with In-Network computing — where networking devices perform computations on data, offloading the work from the CPU — ensures efficient, high-speed, low-latency communication, crucial for handling intensive AI workloads and vast datasets.

ABCI boasts world-class computing and data processing power, serving as a platform to accelerate joint AI R&D with industries, academia and governments.

METI’s substantial investment is a testament to Japan’s strategic vision to enhance AI development capabilities and accelerate the use of generative AI.

By subsidizing AI supercomputer development, Japan aims to reduce the time and costs of developing next-generation AI technologies, positioning itself as a leader in the global AI landscape.

In the latest ranking of the world’s most energy-efficient supercomputers, known as the Green500, NVIDIA-powered systems swept the top three spots, and took seven of the top 10.

The strong showing demonstrates how accelerated computing represents the most energy-efficient method for high-performance computing.

The top three systems were all powered by the NVIDIA GH200 Grace Hopper Superchip, showcasing the widespread adoption and efficiency of NVIDIA’s Grace Hopper architecture.

Leading the pack was the JEDI system, at Germany’s Forschungszentrum Jülich, which achieved an impressive 72.73 GFlops per Watt and is based on Eviden’s BullSequana XH3000 liquid-cooled system.
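The efficiency figure translates directly into a power budget. The sketch below takes the 72.73 GFlops per Watt quoted above and computes the power needed to sustain a given rate of computation at that efficiency. The 1-petaflop target is an arbitrary illustration, not a figure from the Green500 list, and real systems will not hold peak efficiency across all workloads.

```python
JEDI_GFLOPS_PER_WATT = 72.73  # figure quoted in the article


def power_watts(target_gflops: float, gflops_per_watt: float) -> float:
    """Power required to sustain target_gflops at the given efficiency."""
    return target_gflops / gflops_per_watt


ONE_PETAFLOP_IN_GFLOPS = 1_000_000  # 1 petaflop = 10**6 gigaflops

watts = power_watts(ONE_PETAFLOP_IN_GFLOPS, JEDI_GFLOPS_PER_WATT)
print(f"Power for 1 petaflop at JEDI efficiency: {watts / 1000:.1f} kW")
```

At this efficiency, a sustained petaflop would draw roughly 13.7 kW under the stated assumptions.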

More’s coming. The ability to do more work using less power is driving the construction of more Grace Hopper supercomputers around the world.

Accelerating the Green Revolution in Supercomputing

Such achievements underscore NVIDIA’s pivotal role in advancing the global agenda for sustainable high-performance computing over the past decade.

Accelerated computing has proven to be the cornerstone of energy efficiency, with the majority of systems on the Green500 list — including 40 of the top 50 — now featuring this advanced technology.

Pioneered by NVIDIA, accelerated computing uses GPUs that optimize throughput — getting a lot done at once — to perform complex computations faster than systems based on CPUs alone.

And the Grace Hopper architecture is proving to be a game-changer by enhancing computational speed and dramatically increasing energy efficiency across multiple platforms.

For example, the GH200 chip embedded within the Grace Hopper systems offers over 1,000x more energy efficiency on mixed precision and AI tasks than previous generations.

Redefining Efficiency in Supercomputing

This capability is crucial for accelerating tasks that address complex scientific challenges, speeding up the work of researchers across various disciplines.

NVIDIA’s supercomputing technology excels in traditional benchmarks — and it’s set new standards in energy efficiency.

For instance, the Alps system, at the Swiss National Supercomputing Centre (CSCS), is equipped with NVIDIA Grace Hopper GH200. An optimized subset of the system, dubbed preAlps, placed fifth on the latest Green500. CSCS also submitted the full system to the TOP500 list, recording 270 petaflops on the High-Performance Linpack benchmark, used for solving complex linear equations.

The Green500 rankings highlight platforms that provide highly efficient FP64 performance, which is crucial for accurate simulations used in scientific computing. This result underscores NVIDIA’s commitment to powering supercomputers for tasks across a full range of capabilities.

This metric demonstrates substantial system performance, leading to its high ranking on the TOP500 list of the world’s fastest supercomputers. The high position on the Green500 list indicates that this scalable performance does not come at the cost of energy efficiency.

Such performance shows how the Grace Hopper architecture introduces a new era in processing technology, merging tightly coupled CPU and GPU functionalities to not only enhance performance but also significantly improve energy efficiency.

This advancement is supported by the incorporation of an optimized high-efficiency link that moves data between the CPU and GPU.

NVIDIA’s upcoming Blackwell platform is set to build on this by offering the computational power of the Titan supercomputer launched 10 years ago — a $100 million system the size of a tennis court — while being efficient enough to be powered by a wall socket just like a typical home appliance.

In short, over the past decade, NVIDIA innovations have enhanced the accessibility and sustainability of high-performance computing, making scientific breakthroughs faster, cheaper and greener.

A Future Defined by Sustainable Innovation

As NVIDIA continues to push the boundaries of what’s possible in high-performance computing, it remains committed to enhancing the energy efficiency of global computing infrastructure.

The success of the Grace Hopper supercomputers in the Green500 rankings highlights NVIDIA’s leadership and its commitment to more sustainable global computing.

Explore how NVIDIA’s pioneering role in green computing is advancing scientific research, as well as shaping a more sustainable future worldwide.

Feature image: Jupiter system at Jülich supercomputer center, courtesy of Eviden.

The latest advances in quantum computing include investigating molecules, deploying giant supercomputers and building the quantum workforce with a new academic program.

Researchers in Canada and the U.S. used a large language model to simplify quantum simulations that help scientists explore molecules.

“This new quantum algorithm opens the avenue to a new way of combining quantum algorithms with machine learning,” said Alan Aspuru-Guzik, a professor of chemistry and computer science at the University of Toronto, who led the team.

The effort used CUDA-Q, a hybrid programming model for GPUs, CPUs and the QPUs quantum systems use. The team ran its research on Eos, NVIDIA’s H100 GPU supercomputer.

Software from the effort will be made available for researchers in fields like healthcare and chemistry. Aspuru-Guzik will detail the work in a talk at GTC.

Quantum Scales for Fraud Detection

At HSBC, one of the world’s largest banks, researchers designed a quantum machine learning application that can detect fraud in digital payments.

The bank’s quantum machine learning algorithm simulated a whopping 165 qubits on NVIDIA GPUs. Research papers typically don’t extend beyond 40 of these fundamental calculating units quantum systems use.

HSBC used machine learning techniques implemented with CUDA-Q and cuTensorNet software on NVIDIA GPUs to overcome challenges simulating quantum circuits at scale. Mekena Metcalf, a quantum computing research scientist at HSBC (pictured above), will present her work in a session at GTC.

Raising a Quantum Generation

In education, NVIDIA is working with nearly two dozen universities to prepare the next generation of computer scientists for the quantum era. The collaboration will design curricula and teaching materials around CUDA-Q.

“Bridging the divide between traditional computers and quantum systems is essential to the future of computing,” said Theresa Mayer, vice president for research at Carnegie Mellon University. “NVIDIA is partnering with institutions of higher education, Carnegie Mellon included, to help students and researchers navigate and excel in this emerging hybrid environment.”

To help working developers get hands-on with the latest tools, NVIDIA co-sponsored QHack, a quantum hackathon in February. The winning project, developed by Gopal Dahale of Qkrishi — a quantum company in Gurgaon, India — used CUDA-Q to develop an algorithm to simulate a material critical in designing better batteries.

A Trio of New Systems

Two new systems being deployed further expand the ecosystem for hybrid quantum-classical computing.

The larger of the two, ABCI-Q at Japan’s National Institute of Advanced Industrial Science and Technology, will be one of the largest supercomputers dedicated to research in quantum computing. It will use CUDA-Q on NVIDIA H100 GPUs to advance the nation’s efforts in the field.

In Denmark, the Novo Nordisk Foundation will lead on the deployment of an NVIDIA DGX SuperPOD, a significant part of which will be dedicated to research in quantum computing in alignment with the country’s national plan to advance the technology.

The new systems join Australia’s Pawsey Supercomputing Research Centre, which announced in February it will run CUDA-Q on NVIDIA Grace Hopper Superchips at its National Supercomputing and Quantum Computing Innovation Hub.

Partners Drive CUDA-Q Forward

In other news, Israeli startup Classiq released a new integration with CUDA-Q at GTC. Classiq’s quantum circuit synthesis lets high-level functional models automatically generate optimized quantum programs, so researchers can get the most out of today’s quantum hardware and expand the scale of their work on future algorithms.

Software and service provider QC Ware is integrating its Promethium quantum chemistry package with the just-announced NVIDIA Quantum Cloud.

ORCA Computing, a quantum systems developer headquartered in London, released results running quantum machine learning on its photonics processor with CUDA-Q. In addition, ORCA was selected to build and supply a quantum computing testbed for the UK’s National Quantum Computing Centre, which will include an NVIDIA GPU cluster using CUDA-Q.

NVIDIA and Infleqtion, a quantum technology leader, partnered to bring cutting-edge quantum-enabled solutions to Europe’s largest cyber-defense exercise with NVIDIA-enabled Superstaq software.

A cloud-based platform for quantum computing, qBraid, is integrating CUDA-Q into its developer environment. And California-based BlueQubit described in a blog how NVIDIA’s quantum technology, used in its research and GPU service, provides the fastest and largest quantum emulations possible on GPUs.

Get the Big Picture at GTC

To learn more, watch a session about how NVIDIA is advancing quantum computing and attend an expert panel on the topic, both at NVIDIA GTC, a global AI conference, running March 18-21 at the San Jose Convention Center.

And get the full view from NVIDIA founder and CEO Jensen Huang in his GTC keynote.

The journey to the future of secure communications is about to jump to warp drive.

NVIDIA cuPQC brings accelerated computing to developers working on cryptography for the age of quantum computing. The cuPQC library harnesses the parallelism of GPUs for their most demanding security algorithms.

Refactoring Security for the Quantum Era

Researchers have known for years that quantum computers will be able to break the public keys used today to secure communications. As these systems approach readiness, government and industry initiatives have been ramping up to address this vital issue.

The U.S. National Institute of Standards and Technology, for example, is expected to introduce the first standard algorithms for post-quantum cryptography as early as this year.

Cryptographers working on advanced algorithms to replace today’s public keys need powerful systems to design and test their work.

Hopper Delivers up to 500x Speedups With cuPQC

In its first benchmarks, cuPQC accelerated Kyber — an algorithm proposed as a standard for securing quantum-resistant keys — by up to 500x running on an NVIDIA H100 Tensor Core GPU compared with a CPU.

The speedups will be even greater with NVIDIA Blackwell architecture GPUs, given Blackwell’s enhancements for the integer math used in cryptography and other high performance computing workloads.

“Securing data against quantum threats is a critically important problem, and we’re excited to work with NVIDIA to optimize post-quantum cryptography,” said Douglas Stebila, co-founder of the Open Quantum Safe project, a group spearheading work in the emerging field.

Accelerating Community Efforts

The project is a part of the newly formed Post-Quantum Cryptography Alliance, hosted by the Linux Foundation.

The alliance funds open source projects to develop post-quantum libraries and applications. NVIDIA is a member of the alliance with seats on both its steering and technical committees.

NVIDIA is also collaborating with cloud service providers such as Amazon Web Services (AWS), Google Cloud and Microsoft Azure on testing cuPQC.

In addition, leading companies in post-quantum cryptography such as evolutionQ, PQShield, QuSecure and SandboxAQ are collaborating with NVIDIA, many with plans to integrate cuPQC into their offerings.

“Different use cases will require a range of approaches for optimal acceleration,” said Ben Packman, a senior vice president at PQShield. “We are delighted to explore cuPQC with NVIDIA.”

Learn More at GTC

Developers working on post-quantum cryptography can sign up for updates on cuPQC here.

To learn more, watch a session about how NVIDIA is advancing quantum computing and attend an expert panel on the topic at NVIDIA GTC, a global AI conference, running through March 21 at the San Jose Convention Center and online.

Get the full view from NVIDIA founder and CEO Jensen Huang in his GTC keynote.

Moving fast to accelerate its AI journey, BNY Mellon, a global financial services company celebrating its 240th anniversary, revealed Monday that it has become the first major bank to deploy an NVIDIA DGX SuperPOD with DGX H100 systems.

Thanks to the strong collaborative relationship between NVIDIA Professional Services and BNY Mellon, the team was able to install and configure the DGX SuperPOD ahead of typical timelines.

The system, equipped with dozens of NVIDIA DGX systems and NVIDIA InfiniBand networking and based on the DGX SuperPOD reference architecture, delivers computer processing performance that hasn’t been seen before at BNY Mellon.

“Key to our technology strategy is empowering our clients through scalable, trusted platforms and solutions,” said BNY Mellon Chief Information Officer Bridget Engle. “By deploying NVIDIA’s AI supercomputer, we can accelerate our processing capacity to innovate and launch AI-enabled capabilities that help us manage, move and keep our clients’ assets safe.”

Powered by its new system, BNY Mellon plans to use NVIDIA AI Enterprise software to support the build and deployment of AI applications and manage AI infrastructure.

NVIDIA AI Software: A Key Component in BNY Mellon’s Toolbox

Founded by Alexander Hamilton in 1784, BNY Mellon oversees nearly $50 trillion in assets for its clients and helps companies and institutions worldwide access the money they need, support governments in funding local projects, safeguard investments for millions of individuals and more.

BNY Mellon has long been at the forefront of AI and accelerated computing in the financial services industry. Its AI Hub has more than 20 AI-enabled solutions in production. These solutions support predictive analytics, automation and anomaly detection, among other capabilities.

While the firm recognizes that AI presents opportunities to enhance its processes to reduce risk across the organization, it is also actively working to consider and manage potential risks associated with AI through its robust risk management and governance processes.

Some of the use cases supported by DGX SuperPOD include deposit forecasting, payment automation, predictive trade analytics and end-of-day cash balances.

More are coming. The company identified more than 600 opportunities in AI during a firmwide exercise last year, and dozens are already in development using such NVIDIA AI Enterprise software as NVIDIA NeMo, NVIDIA Triton Inference Server and NVIDIA Base Command.

Triton Inference Server is inference-serving software that streamlines AI inferencing, the process of putting trained AI models to work.

Base Command powers the DGX SuperPOD, delivering the best of NVIDIA software that enables businesses and their data scientists to accelerate AI development.

NeMo is an end-to-end platform for developing custom generative AI, anywhere. It includes tools for training and retrieval-augmented generation, guardrailing toolkits, data curation tools and pretrained models, offering enterprises an easy, cost-effective and fast way to adopt generative AI.

Fueling Innovation Through Top Talent

With the new DGX SuperPOD, these tools will enable BNY Mellon to streamline and accelerate innovation within the firm and across the global financial system.

Hundreds of data scientists, solutions architects and risk, control and compliance professionals have been using the NVIDIA DGX platform, which delivers the world’s leading solutions for enterprise AI development at scale, for several years.

The new NVIDIA DGX SuperPOD will help the company rapidly expand its on-premises AI infrastructure.

The new system also underscores the company’s commitment to adopting new technologies and attracting top talent across the world to help drive its innovation agenda forward.

Providing a peek at the architecture powering advanced AI factories, NVIDIA Thursday released a video that offers the first public look at Eos, its latest data-center-scale supercomputer.

An extremely large-scale NVIDIA DGX SuperPOD, Eos is where NVIDIA developers create their AI breakthroughs using accelerated computing infrastructure and fully optimized software.

Eos is built with 576 NVIDIA DGX H100 systems, NVIDIA Quantum-2 InfiniBand networking and software, providing a total of 18.4 exaflops of FP8 AI performance. This system is a sister to a separate Eos DGX SuperPOD with 10,752 NVIDIA H100 GPUs, used for MLPerf training in November.

Revealed in November at the Supercomputing 2023 trade show, Eos — named for the Greek goddess said to open the gates of dawn each day — reflects NVIDIA’s commitment to advancing AI technology.

Eos Supercomputer Fuels Innovation

Each DGX H100 system is equipped with eight NVIDIA H100 Tensor Core GPUs. Eos features a total of 4,608 H100 GPUs.
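The system counts quoted above can be checked with a few lines of arithmetic. This is only a sketch using the figures in the text (576 systems, eight GPUs each, 18.4 exaflops of FP8); the per-GPU value it derives is a simple average, not an official specification, and the function names are hypothetical.

```python
# Figures quoted in the text; the per-GPU value is a derived average,
# not an official specification. Function names are hypothetical.
DGX_SYSTEMS = 576
GPUS_PER_DGX = 8
TOTAL_FP8_EXAFLOPS = 18.4


def total_gpus(systems: int = DGX_SYSTEMS, gpus_per_system: int = GPUS_PER_DGX) -> int:
    """Total GPU count across all DGX systems."""
    return systems * gpus_per_system


def fp8_petaflops_per_gpu(total_exaflops: float = TOTAL_FP8_EXAFLOPS) -> float:
    """Average FP8 petaflops per GPU implied by the system-wide total."""
    # 1 exaflop = 1,000 petaflops.
    return total_exaflops * 1000 / total_gpus()


if __name__ == "__main__":
    print(f"Total GPUs: {total_gpus()}")
    print(f"Average FP8 petaflops per GPU: {fp8_petaflops_per_gpu():.2f}")
```

The product of 576 and 8 matches the 4,608 GPUs cited, and the system-wide total works out to roughly 4 petaflops of FP8 per GPU.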

As a result, Eos can handle the largest AI workloads to train large language models, recommender systems, quantum simulations and more.

It’s a showcase of what NVIDIA’s technologies can do when working at scale.

Eos is arriving at the perfect time. People are changing the world with generative AI, from drug discovery to chatbots to autonomous machines and beyond.

To achieve these breakthroughs, they need more than AI expertise and development skills. They need an AI factory, a purpose-built AI engine that’s always available and can help ramp their capacity to build AI models at scale.

Eos delivers. Ranked No. 9 in the TOP500 list of the world’s fastest supercomputers, Eos pushes the boundaries of AI technology and infrastructure.

It includes NVIDIA’s advanced accelerated computing and networking alongside sophisticated software offerings such as NVIDIA Base Command and NVIDIA AI Enterprise.

Eos’s architecture is optimized for AI workloads demanding ultra-low-latency and high-throughput interconnectivity across a large cluster of accelerated computing nodes, making it an ideal solution for enterprises looking to scale their AI capabilities.

Based on NVIDIA Quantum-2 InfiniBand with In-Network Computing technology, its network architecture supports data transfer speeds of up to 400Gb/s, facilitating the rapid movement of large datasets essential for training complex AI models.
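To put the 400Gb/s figure in perspective, the sketch below estimates an idealized transfer time over a single link. The 1 TB dataset size is a hypothetical example, and the calculation ignores protocol overhead, congestion and storage limits, so real transfers would take longer.

```python
# Idealized estimate: time to move a dataset over one link at line rate.
# The dataset size is a hypothetical example; overheads are ignored.


def ideal_transfer_seconds(dataset_bytes: float, link_gbps: float = 400.0) -> float:
    """Seconds to send dataset_bytes over a link of link_gbps gigabits per second."""
    bits = dataset_bytes * 8
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    one_terabyte = 1e12  # decimal terabyte, in bytes
    print(f"1 TB at 400 Gb/s: {ideal_transfer_seconds(one_terabyte):.1f} s")
```

Under these assumptions, a terabyte crosses one link in about 20 seconds, which gives a sense of how quickly training data can move between nodes.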

At the heart of Eos lies the groundbreaking DGX SuperPOD architecture powered by NVIDIA’s DGX H100 systems.

The architecture is built to provide the AI and computing fields with tightly integrated full-stack systems capable of computing at an enormous scale.

As enterprises and developers worldwide seek to harness the power of AI, Eos stands as a pivotal resource, promising to accelerate the journey towards AI-infused applications that fuel every organization.

Editor’s note: This post was updated on Feb. 19, 2024, to clarify that there are two Eos systems.

With advances in computing, sophisticated AI models and machine learning are having a profound impact on business and society. Industries can use AI to quickly analyze vast bodies of data, allowing them to derive meaningful insights, make predictions and automate processes for greater efficiency.

In the public sector, government agencies are achieving superior disaster preparedness. Biomedical researchers are bringing novel drugs to market faster. Telecommunications providers are building more energy-efficient networks. Manufacturers are trimming emissions from product design, development and manufacturing processes. Hollywood studios are creating impressive visual effects at a fraction of the cost and time. Robots are being deployed on important missions to help preserve the Earth. And investment advisors are running more trade scenarios to optimize portfolios.

Eighty-two percent of companies surveyed are already using or exploring AI, and 84% report that they’re increasing investments in data and AI initiatives. Any organization that delays AI implementation risks missing out on new efficiency gains and becoming obsolete.

However, AI workloads are computationally demanding, and legacy computing systems are ill-equipped for the development and deployment of AI. CPU-based compute requires linear growth in power input to meet the increased processing needs of AI and data-heavy workloads. If data centers are using carbon-based energy, it’s impossible for enterprises to innovate using AI while controlling greenhouse gas emissions and meeting sustainability commitments. Plus, many countries are introducing tougher regulations to enforce data center carbon reporting.

Accelerated computing — the use of GPUs and special hardware, software and parallel computing techniques — has exponentially improved the performance and energy efficiency of data centers.

Below, read more on how industries are using energy-efficient computing to scale AI, improve products and services, and reduce emissions and operational costs.

The Public Sector Drives Research, Delivers Improved Citizen Services