Robots are moving goods in warehouses, packaging foods and helping assemble vehicles — bringing enhanced automation to use cases across industries.

There are two keys to their success: Physical AI and robotics simulation.

Physical AI describes AI models that can understand and interact with the physical world. Physical AI embodies the next wave of autonomous machines and robots, such as self-driving cars, industrial manipulators, mobile robots, humanoids and even robot-run infrastructure like factories and warehouses.

With virtual commissioning of robots in digital worlds, robots are first trained using robotic simulation software before they are deployed for real-world use cases.

Robotics Simulation Summarized

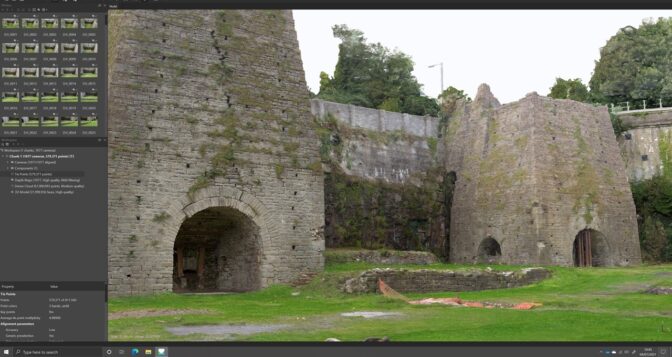

An advanced robotics simulator facilitates robot learning and testing of virtual robots without requiring the physical robot. By applying physics principles and replicating real-world conditions, these simulators generate synthetic datasets to train machine learning models for deployment on physical robots.

Simulations are used for initial AI model training and then to validate the entire software stack, minimizing the need for physical robots during testing. NVIDIA Isaac Sim, a reference application built on the NVIDIA Omniverse platform, provides accurate visualizations and supports Universal Scene Description (OpenUSD)-based workflows for advanced robot simulation and validation.

NVIDIA’s Three-Computer Framework Facilitates Robot Simulation

Three computers are needed to train and deploy robot technology.

- A supercomputer to train and fine-tune powerful foundation and generative AI models.

- A development platform for robotics simulation and testing.

- An onboard runtime computer to deploy trained models to physical robots.

Only after adequate training in simulated environments can physical robots be commissioned.

The NVIDIA DGX platform can serve as the first computing system to train models.

NVIDIA Omniverse running on NVIDIA OVX servers functions as the second computer system, providing the development platform and simulation environment for testing, optimizing and debugging physical AI.

NVIDIA Jetson Thor robotics computers designed for onboard computing serve as the third runtime computer.

Who Uses Robotics Simulation?

Today, robot technology and robot simulation are improving operations across a wide range of use cases.

Delta Electronics, a global leader in power and thermal technologies, uses simulation to test its optical inspection algorithms, which detect product defects on production lines.

Deep tech startup Wandelbots is building a custom simulator by integrating Isaac Sim into its application, making it easy for end users to program robotic work cells in simulation and seamlessly transfer models to a real robot.

Boston Dynamics is enabling researchers and developers through its reinforcement learning researcher kit.

Robotics company Fourier is simulating real-world conditions to train humanoid robots with the precision and agility needed for close robot-human collaboration.

Using NVIDIA Isaac Sim, robotics company Galbot built DexGraspNet, a comprehensive simulated dataset for dexterous robotic grasps containing over 1 million ShadowHand grasps on 5,300+ objects. The dataset can be applied to any dexterous robotic hand to accomplish complex tasks that require fine-motor skills.

Using Robotics Simulation for Planning and Control Outcomes

In complex and dynamic industrial settings, robotics simulation is evolving to integrate digital twins, enhancing planning, control and learning outcomes.

Developers import computer-aided design models into a robotics simulator to build virtual scenes and employ algorithms to create the robot operating system and enable task and motion planning. While traditional methods involve prescribing control signals, the shift toward machine learning allows robots to learn behaviors through methods like imitation and reinforcement learning, using simulated sensor signals.
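To make the reinforcement learning idea concrete, here is a minimal sketch of tabular Q-learning in a toy simulated environment. It is not Isaac Sim or Isaac Lab code: the five-cell corridor, the `step` function and the hyperparameters are all assumptions chosen for illustration. The "robot" only ever observes a simulated position signal and learns from a reward at the goal.

```python
import random

N_STATES = 5          # hypothetical corridor: positions 0..4, with 4 as the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Simulated environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=200, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values from simulated experience only."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(2)  # explore uniformly; Q-learning is off-policy
            next_state, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy action for every non-goal state.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

After training, the greedy policy should move toward the goal from every cell. A real robot policy would replace the table with a neural network and the corridor with a physics-based simulator, but the learning loop has the same shape.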

This evolution continues with digital twins in complex facilities like manufacturing assembly lines, where developers can test and refine real-time AI entirely in simulation. This approach saves software development time and costs, and reduces downtime by anticipating issues. For instance, using NVIDIA Omniverse, Metropolis and cuOpt, developers can use digital twins to develop, test and refine physical AI in simulation before deploying in industrial infrastructure.

High-Fidelity, Physics-Based Simulation Breakthroughs

High-fidelity, physics-based simulations have supercharged industrial robotics by enabling realistic experimentation in virtual environments.

NVIDIA PhysX, integrated into Omniverse and Isaac Sim, empowers roboticists to develop fine- and gross-motor skills for robot manipulators, rigid and soft body dynamics, vehicle dynamics and other critical features that ensure the robot obeys the laws of physics. This includes precise control over actuators and modeling of kinematics, which are essential for accurate robot movements.

To close the sim-to-real gap, Isaac Lab offers a high-fidelity, open-source framework for reinforcement learning and imitation learning that facilitates seamless policy transfer from simulated environments to physical robots. With GPU parallelization, Isaac Lab accelerates training and improves performance, making complex tasks more achievable and safe for industrial robots.

To learn more about creating a locomotion reinforcement learning policy with Isaac Sim and Isaac Lab, read this developer blog.

Teaching Collision-Free Motion for Autonomy

Industrial robot training often occurs in specific settings like factories or fulfillment centers, where simulations help address challenges related to various robot types and chaotic environments. A critical aspect of these simulations is generating collision-free motion in unknown, cluttered environments.

Traditional motion planning approaches that attempt to address these challenges can come up short in unknown or dynamic environments. SLAM, or simultaneous localization and mapping, can be used to generate 3D maps of environments with camera images from multiple viewpoints. However, these maps require revisions when objects move and environments are changed.

The NVIDIA Robotics research team and the University of Washington introduced Motion Policy Networks (MπNets), an end-to-end neural policy that generates real-time, collision-free motion using a single fixed camera’s data stream. Trained on over 3 million motion planning problems and 700 million simulated point clouds, MπNets navigates unknown real-world environments effectively.

While the MπNets model applies direct learning for trajectories, the team also developed a point cloud-based collision model called CabiNet, trained on over 650,000 procedurally generated simulated scenes.

With the CabiNet model, developers can deploy general-purpose, pick-and-place policies of unknown objects beyond a flat tabletop setup. Training with a large synthetic dataset allowed the model to generalize to out-of-distribution scenes in a real kitchen environment, without needing any real data.
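For intuition about what "collision-free against a point cloud" means, the sketch below runs a brute-force geometric check. It is not MπNets or CabiNet, which are learned neural models. It is a simple baseline under stated assumptions: the robot is approximated by a sphere of radius `robot_radius`, and the obstacle points and paths are made-up values in meters.

```python
import math

def min_clearance(waypoint, point_cloud):
    """Distance from a waypoint to the nearest obstacle point."""
    return min(math.dist(waypoint, p) for p in point_cloud)

def is_collision_free(trajectory, point_cloud, robot_radius):
    """True if every waypoint stays at least robot_radius from all points."""
    return all(min_clearance(w, point_cloud) >= robot_radius for w in trajectory)

# Hypothetical obstacle points, e.g. sampled from a depth camera.
obstacles = [(0.5, 0.0, 0.2), (0.5, 0.1, 0.2), (0.5, 0.2, 0.2)]

# Two hypothetical paths: one arcs over the obstacle, one drives into it.
safe_path = [(0.0, 0.0, 0.5), (0.25, 0.0, 0.6), (0.5, 0.0, 0.7)]
risky_path = [(0.0, 0.0, 0.2), (0.25, 0.0, 0.2), (0.45, 0.0, 0.2)]

print(is_collision_free(safe_path, obstacles, robot_radius=0.1))
print(is_collision_free(risky_path, obstacles, robot_radius=0.1))
```

A check like this only tests the waypoints themselves and scales poorly with cloud size, which is part of why learned collision models trained on large synthetic datasets are attractive.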

How Developers Can Get Started Building Robotic Simulators

Get started with technical resources, reference applications and other solutions for developing physically accurate simulation pipelines by visiting the NVIDIA Robotics simulation use case page.

Robot developers can tap into NVIDIA Isaac Sim, which supports multiple robot training techniques:

- Synthetic data generation for training perception AI models

- Software-in-the-loop testing for the entire robot stack

- Robot policy training with Isaac Lab

Developers can also pair ROS 2 with Isaac Sim to train, simulate and validate their robot systems. The Isaac Sim to ROS 2 workflow is similar to workflows executed with other robot simulators such as Gazebo. It starts with bringing a robot model into a prebuilt Isaac Sim environment, adding sensors to the robot, and then connecting the relevant components to the ROS 2 action graph and simulating the robot by controlling it through ROS 2 packages.

Stay up to date by subscribing to our newsletter and following NVIDIA Robotics on LinkedIn, Instagram, X and Facebook.

Editor’s note: This article, originally published on November 15, 2023, has been updated.

To understand the latest advance in generative AI, imagine a courtroom.

Judges hear and decide cases based on their general understanding of the law. Sometimes a case — like a malpractice suit or a labor dispute — requires special expertise, so judges send court clerks to a law library, looking for precedents and specific cases they can cite.

Like a good judge, large language models (LLMs) can respond to a wide variety of human queries. But to deliver authoritative answers that cite sources, the model needs an assistant to do some research.

The court clerk of AI is a process called retrieval-augmented generation, or RAG for short.

How It Got Named ‘RAG’

Patrick Lewis, lead author of the 2020 paper that coined the term, apologized for the unflattering acronym that now describes a growing family of methods across hundreds of papers and dozens of commercial services he believes represent the future of generative AI.

“We definitely would have put more thought into the name had we known our work would become so widespread,” Lewis said in an interview from Singapore, where he was sharing his ideas with a regional conference of database developers.

“We always planned to have a nicer sounding name, but when it came time to write the paper, no one had a better idea,” said Lewis, who now leads a RAG team at AI startup Cohere.

So, What Is Retrieval-Augmented Generation (RAG)?

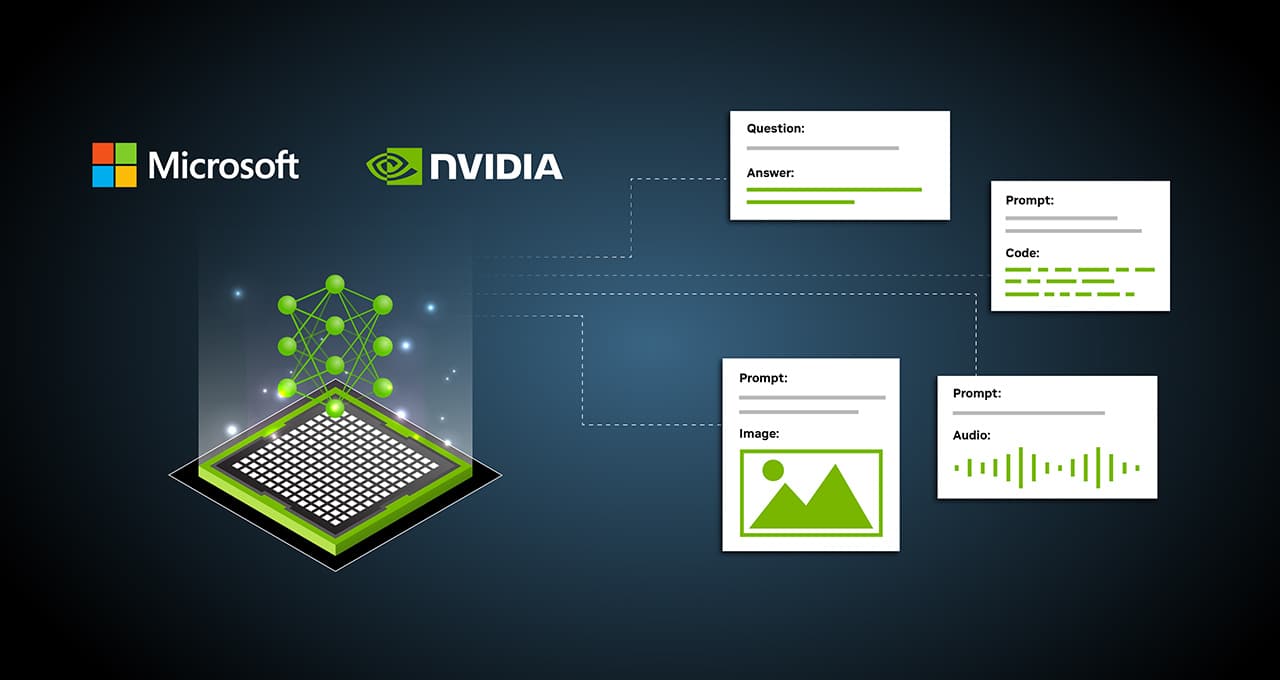

Retrieval-augmented generation (RAG) is a technique for enhancing the accuracy and reliability of generative AI models with facts fetched from external sources.

In other words, it fills a gap in how LLMs work. Under the hood, LLMs are neural networks, typically measured by how many parameters they contain. An LLM’s parameters essentially represent the general patterns of how humans use words to form sentences.

That deep understanding, sometimes called parameterized knowledge, makes LLMs useful in responding to general prompts at light speed. However, it does not serve users who want a deeper dive into a current or more specific topic.

Combining Internal, External Resources

Lewis and colleagues developed retrieval-augmented generation to link generative AI services to external resources, especially ones rich in the latest technical details.

The paper, with coauthors from the former Facebook AI Research (now Meta AI), University College London and New York University, called RAG “a general-purpose fine-tuning recipe” because it can be used by nearly any LLM to connect with practically any external resource.

Building User Trust

Retrieval-augmented generation gives models sources they can cite, like footnotes in a research paper, so users can check any claims. That builds trust.

What’s more, the technique can help models clear up ambiguity in a user query. It also reduces the possibility a model will make a wrong guess, a phenomenon sometimes called hallucination.

Another great advantage of RAG is that it’s relatively easy to implement. A blog by Lewis and three of the paper’s coauthors said developers can implement the process with as few as five lines of code.

That makes the method faster and less expensive than retraining a model with additional datasets. And it lets users hot-swap new sources on the fly.

How People Are Using RAG

With retrieval-augmented generation, users can essentially have conversations with data repositories, opening up new kinds of experiences. This means the applications for RAG could be multiple times the number of available datasets.

For example, a generative AI model supplemented with a medical index could be a great assistant for a doctor or nurse. Financial analysts would benefit from an assistant linked to market data.

In fact, almost any business can turn its technical or policy manuals, videos or logs into resources called knowledge bases that can enhance LLMs. These sources can enable use cases such as customer or field support, employee training and developer productivity.

The broad potential is why companies including AWS, IBM, Glean, Google, Microsoft, NVIDIA, Oracle and Pinecone are adopting RAG.

Getting Started With Retrieval-Augmented Generation

To help users get started, NVIDIA developed an AI Blueprint for building virtual assistants. Organizations can use this reference architecture to quickly scale their customer service operations with generative AI and RAG, or get started building a new customer-centric solution.

The blueprint uses some of the latest AI-building methodologies and NVIDIA NeMo Retriever, a collection of easy-to-use NVIDIA NIM microservices for large-scale information retrieval. NIM eases the deployment of secure, high-performance AI model inferencing across clouds, data centers and workstations.

These components are all part of NVIDIA AI Enterprise, a software platform that accelerates the development and deployment of production-ready AI with the security, support and stability businesses need.

There is also a free hands-on NVIDIA LaunchPad lab for developing AI chatbots using RAG so developers and IT teams can quickly and accurately generate responses based on enterprise data.

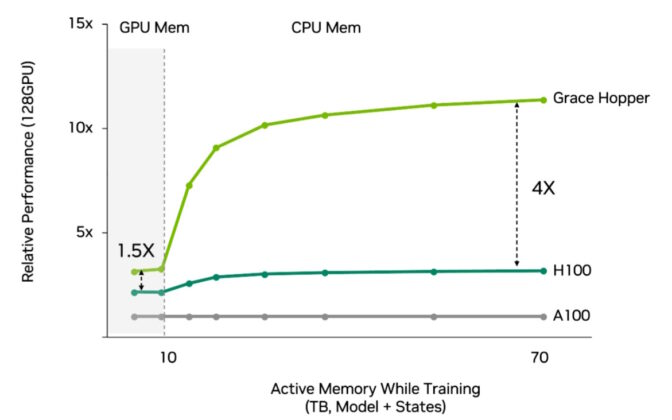

Getting the best performance for RAG workflows requires massive amounts of memory and compute to move and process data. The NVIDIA GH200 Grace Hopper Superchip, with its 288GB of fast HBM3e memory and 8 petaflops of compute, is ideal — it can deliver a 150x speedup over using a CPU.

Once companies get familiar with RAG, they can combine a variety of off-the-shelf or custom LLMs with internal or external knowledge bases to create a wide range of assistants that help their employees and customers.

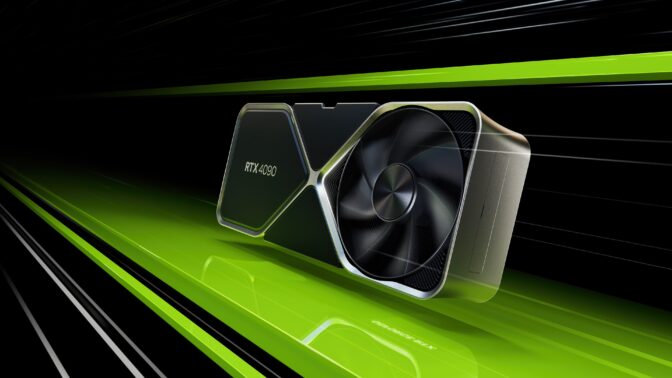

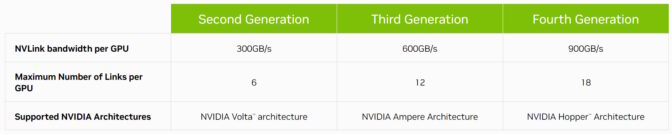

RAG doesn’t require a data center. LLMs are debuting on Windows PCs, thanks to NVIDIA software that enables all sorts of applications users can access even on their laptops.

PCs equipped with NVIDIA RTX GPUs can now run some AI models locally. By using RAG on a PC, users can link to a private knowledge source, such as emails, notes or articles, to improve responses. The user can then feel confident that their data source, prompts and responses all remain private and secure.

A recent blog provides an example of RAG accelerated by TensorRT-LLM for Windows to get better results fast.

The History of RAG

The roots of the technique go back at least to the early 1970s. That’s when researchers in information retrieval prototyped what they called question-answering systems, apps that use natural language processing (NLP) to access text, initially in narrow topics such as baseball.

The concepts behind this kind of text mining have remained fairly constant over the years. But the machine learning engines driving them have grown significantly, increasing their usefulness and popularity.

In the mid-1990s, the Ask Jeeves service, now Ask.com, popularized question answering with its mascot of a well-dressed valet. IBM’s Watson became a TV celebrity in 2011 when it handily beat two human champions on the Jeopardy! game show.

Today, LLMs are taking question-answering systems to a whole new level.

Insights From a London Lab

The seminal 2020 paper arrived as Lewis was pursuing a doctorate in NLP at University College London and working for Meta at a new London AI lab. The team was searching for ways to pack more knowledge into an LLM’s parameters and using a benchmark it developed to measure its progress.

Building on earlier methods and inspired by a paper from Google researchers, the group “had this compelling vision of a trained system that had a retrieval index in the middle of it, so it could learn and generate any text output you wanted,” Lewis recalled.

When Lewis plugged a promising retrieval system from another Meta team into the work in progress, the first results were unexpectedly impressive.

“I showed my supervisor and he said, ‘Whoa, take the win. This sort of thing doesn’t happen very often,’ because these workflows can be hard to set up correctly the first time,” he said.

Lewis also credits major contributions from team members Ethan Perez and Douwe Kiela, then of New York University and Facebook AI Research, respectively.

When complete, the work, which ran on a cluster of NVIDIA GPUs, showed how to make generative AI models more authoritative and trustworthy. It’s since been cited by hundreds of papers that amplified and extended the concepts in what continues to be an active area of research.

How Retrieval-Augmented Generation Works

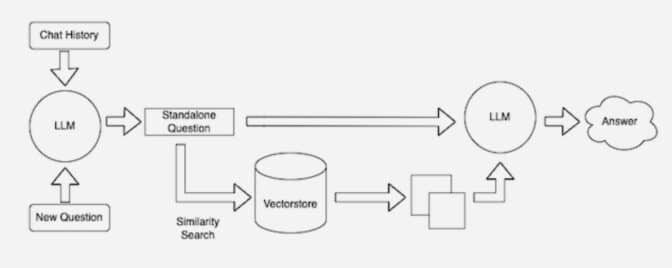

At a high level, here’s how an NVIDIA technical brief describes the RAG process.

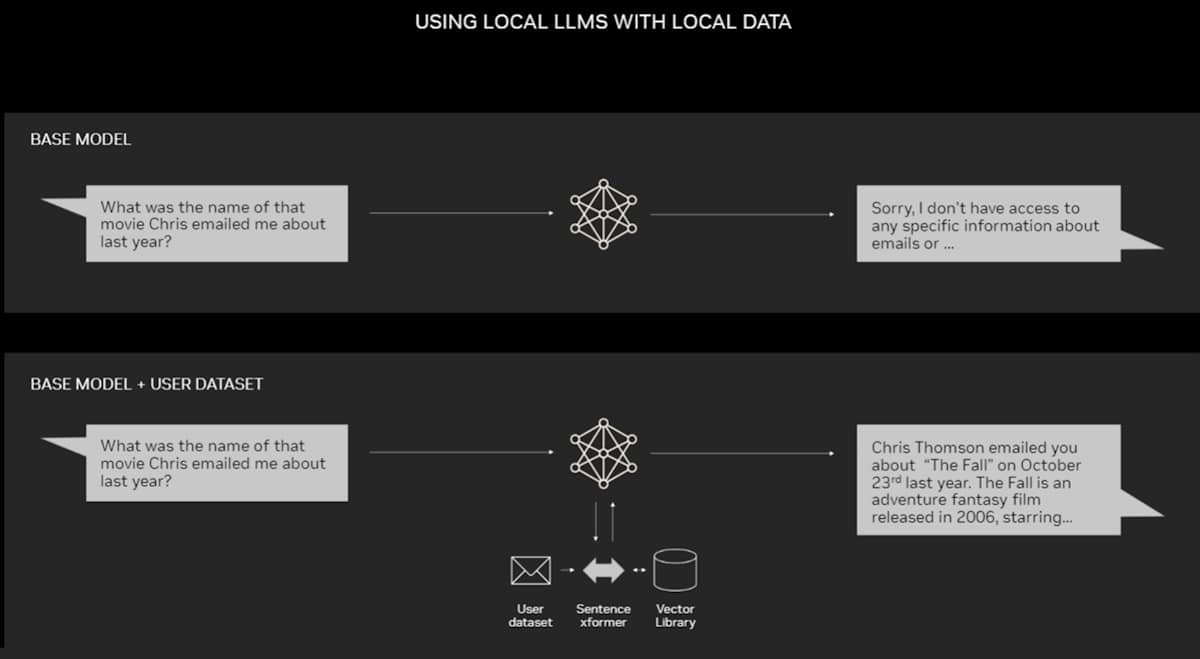

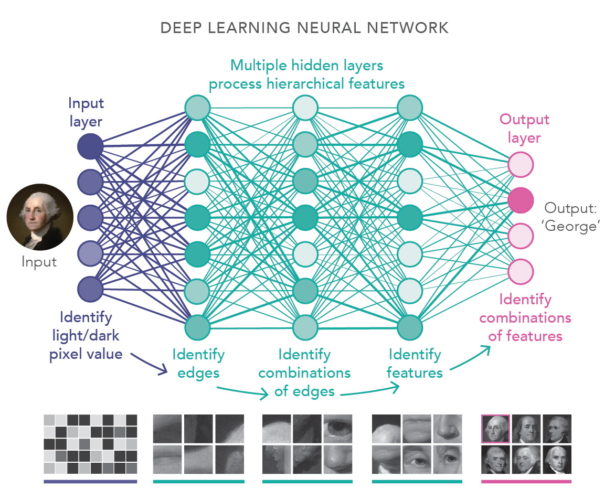

When users ask an LLM a question, the AI model sends the query to another model that converts it into a numeric format so machines can read it. The numeric version of the query is sometimes called an embedding or a vector.

The embedding model then compares these numeric values to vectors in a machine-readable index of an available knowledge base. When it finds a match or multiple matches, it retrieves the related data, converts it to human-readable words and passes it back to the LLM.

Finally, the LLM combines the retrieved words and its own response to the query into a final answer it presents to the user, potentially citing sources the embedding model found.
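The retrieval steps described above can be sketched in a few lines of Python. This is a toy illustration, not NVIDIA's reference architecture or the LangChain API. The `embed` function is a word-count stand-in for a real embedding model, the knowledge base entries are invented, and the final call to an LLM is left out, so the sketch stops at the augmented prompt.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: a sparse word-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

# Hypothetical knowledge base and its machine-readable index.
knowledge_base = {
    "returns-policy": "Items can be returned within 30 days of delivery with a receipt.",
    "shipping-policy": "Standard shipping takes five business days within the country.",
    "warranty-policy": "Hardware carries a two year warranty covering manufacturing defects.",
}
index = {doc_id: embed(text) for doc_id, text in knowledge_base.items()}

def retrieve(query, top_k=1):
    """Return the ids of the documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda d: cosine(q, index[d]), reverse=True)
    return ranked[:top_k]

def build_prompt(query):
    """Combine retrieved text with the question so an LLM can cite sources."""
    context = "\n".join(f"[{d}] {knowledge_base[d]}" for d in retrieve(query))
    return (f"Answer using only the sources below and cite them.\n"
            f"{context}\n\nQuestion: {query}")

print(build_prompt("How long does the warranty cover hardware defects?"))
```

Swapping in a new source only means adding an entry to `knowledge_base` and re-embedding it into `index`. The LLM itself is untouched.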

Keeping Sources Current

In the background, the embedding model continuously creates and updates machine-readable indices, sometimes called vector databases, for new and updated knowledge bases as they become available.

Many developers find LangChain, an open-source library, can be particularly useful in chaining together LLMs, embedding models and knowledge bases. NVIDIA uses LangChain in its reference architecture for retrieval-augmented generation.

The LangChain community provides its own description of a RAG process.

Looking forward, the future of generative AI lies in creatively chaining all sorts of LLMs and knowledge bases together to create new kinds of assistants that deliver authoritative results users can verify.

Explore generative AI sessions and experiences at NVIDIA GTC, the global conference on AI and accelerated computing, running March 18-21 in San Jose, Calif., and online.

Editor’s note: The name of NIM Agent Blueprints was changed to NVIDIA Blueprints in October 2024. All references to the name have been updated in this blog.

AI chatbots use generative AI to provide responses based on a single interaction. A person makes a query and the chatbot uses natural language processing to reply.

The next frontier of artificial intelligence is agentic AI, which uses sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems. And it’s set to enhance productivity and operations across industries.

Agentic AI systems ingest vast amounts of data from multiple sources to independently analyze challenges, develop strategies and execute tasks like supply chain optimization, cybersecurity vulnerability analysis and helping doctors with time-consuming tasks.


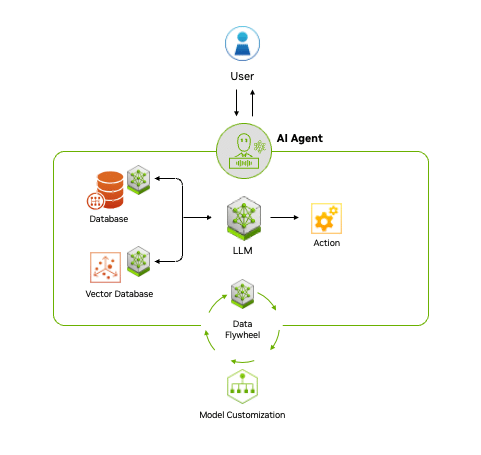

How Does Agentic AI Work?

Agentic AI uses a four-step process for problem-solving:

- Perceive: AI agents gather and process data from various sources, such as sensors, databases and digital interfaces. This involves extracting meaningful features, recognizing objects or identifying relevant entities in the environment.

- Reason: A large language model acts as the orchestrator, or reasoning engine, that understands tasks, generates solutions and coordinates specialized models for specific functions like content creation, vision processing or recommendation systems. This step uses techniques like retrieval-augmented generation (RAG) to access proprietary data sources and deliver accurate, relevant outputs.

- Act: By integrating with external tools and software via application programming interfaces, agentic AI can quickly execute tasks based on the plans it has formulated. Guardrails can be built into AI agents to help ensure they execute tasks correctly. For example, a customer service AI agent may be able to process claims up to a certain amount, while claims above the amount would have to be approved by a human.

- Learn: Agentic AI continuously improves through a feedback loop, or “data flywheel,” where the data generated from its interactions is fed into the system to enhance models. This ability to adapt and become more effective over time offers businesses a powerful tool for driving better decision-making and operational efficiency.
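The four steps can be sketched as a small loop. Everything in this example is hypothetical: `ClaimsAgent`, the `APPROVAL_LIMIT` threshold and the rule inside `reason` are stand-ins chosen to mirror the customer service guardrail described above. A real agent would use an LLM as the reasoning engine and call external tools in `act`.

```python
from dataclasses import dataclass, field

APPROVAL_LIMIT = 500.0  # hypothetical guardrail: larger claims need a human

@dataclass
class ClaimsAgent:
    feedback_log: list = field(default_factory=list)  # data for the "learn" step

    def perceive(self, raw_claim):
        """Extract the fields the agent needs from incoming data."""
        return {"id": raw_claim["id"], "amount": float(raw_claim["amount"])}

    def reason(self, claim):
        """Stand-in for an LLM orchestrator: pick a plan for this claim."""
        if claim["amount"] <= APPROVAL_LIMIT:
            return "auto_approve"
        return "escalate_to_human"

    def act(self, claim, plan):
        """Execute the plan; a real agent would call external APIs here."""
        return {"claim_id": claim["id"], "action": plan}

    def learn(self, claim, result):
        """Record the interaction so it can later be used to improve models."""
        self.feedback_log.append((claim, result))

    def handle(self, raw_claim):
        claim = self.perceive(raw_claim)
        plan = self.reason(claim)
        result = self.act(claim, plan)
        self.learn(claim, result)
        return result

agent = ClaimsAgent()
small = agent.handle({"id": "c1", "amount": "120.50"})
large = agent.handle({"id": "c2", "amount": 900})
print(small["action"], large["action"], len(agent.feedback_log))
```

Placing the guardrail inside the reasoning step means every claim is checked before any action is taken, and the feedback log gives the flywheel something to learn from.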

Fueling Agentic AI With Enterprise Data


Across industries and job functions, generative AI is transforming organizations by turning vast amounts of data into actionable knowledge, helping employees work more efficiently.

AI agents build on this potential by accessing diverse data through accelerated AI query engines, which process, store and retrieve information to enhance generative AI models. A key technique for achieving this is RAG, which allows AI to tap into a broader range of data sources.

Over time, AI agents learn and improve by creating a data flywheel, where data generated through interactions is fed back into the system, refining models and increasing their effectiveness.

The end-to-end NVIDIA AI platform, including NVIDIA NeMo microservices, provides the ability to manage and access data efficiently, which is crucial for building responsive agentic AI applications.

Agentic AI in Action

The potential applications of agentic AI are vast, limited only by creativity and expertise. From simple tasks like generating and distributing content to more complex use cases such as orchestrating enterprise software, AI agents are transforming industries.

Customer Service: AI agents are improving customer support by enhancing self-service capabilities and automating routine communications. Over half of service professionals report significant improvements in customer interactions, with shorter response times and higher satisfaction.

There’s also growing interest in digital humans — AI-powered agents that embody a company’s brand and offer lifelike, real-time interactions to help sales representatives answer customer queries or solve issues directly when call volumes are high.

Content Creation: Agentic AI can help quickly create high-quality, personalized marketing content. Generative AI agents can save marketers an average of three hours per content piece, allowing them to focus on strategy and innovation. By streamlining content creation, businesses can stay competitive while improving customer engagement.

Software Engineering: AI agents are boosting developer productivity by automating repetitive coding tasks. It’s projected that by 2030 AI could automate up to 30% of work hours, freeing developers to focus on more complex challenges and drive innovation.

Healthcare: For doctors analyzing vast amounts of medical and patient data, AI agents can distill critical information to help them make better-informed care decisions. Automating administrative tasks and capturing clinical notes in patient appointments reduces the burden of time-consuming tasks, allowing doctors to focus on developing a doctor-patient connection.

AI agents can also provide 24/7 support, offering information on prescribed medication usage, appointment scheduling and reminders, and more to help patients adhere to treatment plans.

How to Get Started

With its ability to plan and interact with a wide variety of tools and software, agentic AI marks the next chapter of artificial intelligence, offering the potential to enhance productivity and revolutionize the way organizations operate.

To accelerate the adoption of generative AI-powered applications and agents, NVIDIA Blueprints provide sample applications, reference code, sample data, tools and comprehensive documentation.

NVIDIA partners including Accenture are helping enterprises use agentic AI with solutions built with NVIDIA Blueprints.

Visit ai.nvidia.com to learn more about the tools and software NVIDIA offers to help enterprises build their own AI agents.

AI isn’t just about building smarter machines. It’s about building a greener world.

From optimizing energy use to reducing emissions, AI and accelerated computing are helping industries tackle some of the world’s toughest environmental challenges.

As Joshua Parker, senior director of corporate sustainability at NVIDIA, explains in the latest episode of NVIDIA’s AI Podcast, these technologies are powering a new era of energy efficiency.

Parker, a seasoned sustainability professional with a background in law and engineering, has led sustainability initiatives at major companies like Western Digital, where he developed corporate sustainability strategies. His expertise spans corporate sustainability, intellectual property and environmental impact.

AI can, in fact, help reduce energy consumption. And it’s doing it in some surprising ways.

AI systems themselves use energy, of course, but the big story is how AI and accelerated computing are helping other systems save energy. Take data centers, for instance.

They’re the backbone of AI, housing the powerful systems that crunch the data needed for AI to work in services like chatbots, AI-powered search and content generation. Globally, data centers account for about 2% of total energy consumption, and AI-specific centers represent only a tiny fraction of that.

“AI still accounts for a tiny, tiny fraction of overall energy consumption globally,” Parker said. Yet, the potential for AI to optimize energy use is vast.

In fact, AI’s real superpower lies in its ability to optimize.

How? By using accelerated computing platforms that combine GPUs and CPUs. GPUs are designed to handle complex computations quickly and efficiently.

In fact, these systems can be up to 20x more energy-efficient than traditional CPU-only systems for AI inference and training, Parker notes.

This progress has contributed to massive gains in energy efficiency over the past eight years, which is part of the reason AI is now able to tackle increasingly complex problems.

What Is Accelerated Computing?

At its core, accelerated computing is about doing more with less.

It involves using specialized hardware — like GPUs — to perform tasks faster and with less energy.

This isn’t just theoretical. “The change in efficiency is really, really dramatic,” Parker emphasized.

“If you compare the energy efficiency for AI inference from eight years ago until today, [it’s] 45,000 times more energy efficient,” Parker said.
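To put that figure in perspective, a quick back-of-the-envelope calculation converts it into an equivalent yearly rate. The assumption of steady, compounding improvement is ours and is not something Parker claimed.

```python
improvement = 45_000   # overall efficiency gain quoted above
years = 8

# Constant yearly factor that would compound to the overall gain.
annual_factor = improvement ** (1 / years)
print(f"{annual_factor:.2f}x per year")
```

Under that assumption, the gain works out to roughly 3.8x better energy efficiency every year, sustained for eight years.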

This matters because as AI becomes more widespread, the demand for computing power grows.

Accelerated computing helps companies scale their AI operations without consuming massive amounts of energy.

This energy efficiency is key to AI’s ability to tackle some of today’s biggest sustainability challenges.

AI in Action: Tackling Climate Change

AI isn’t just saving energy — it’s helping to fight climate change.

“AI and accelerated computing in general are game-changers when it comes to weather and climate modeling and simulation,” Parker said.

For instance, AI-enhanced weather forecasting is becoming more accurate, allowing industries and governments to prepare for climate-related events like hurricanes or floods, Parker explained.

The better we can predict these events, the better we can prepare for them, which means fewer resources wasted and less damage done.

Another key area is the rise of digital twins — virtual models of physical environments.

These AI-powered simulations allow companies to optimize energy consumption in real time, without having to make costly changes in the physical world. In one case, using a digital twin helped a company achieve a 10% reduction in energy use, Parker said.

That may sound small, but scale it across industries, and the impact is huge.

AI is also playing a crucial role in developing new materials for renewable energy technologies like solar panels and electric vehicles, helping accelerate the transition to clean energy.

Can AI Make Data Centers More Sustainable?

AI needs data centers to operate, and, as AI grows, so does the demand for computing power. But data centers don’t have to be energy hogs. In fact, they can be part of the sustainability solution.

One major innovation is direct-to-chip liquid cooling. This technology allows data centers to cool their systems much more efficiently than traditional air conditioning methods, which are often energy-intensive.

“Our recommended design for the data centers for our new B200 chip is focused all on direct-to-chip liquid cooling,” Parker explained. By cooling directly at the chip level, this method saves energy, helping data centers stay cool without guzzling power.

As AI scales up, the future of data centers will depend on designing for energy efficiency from the ground up.

That means integrating renewable energy, using energy storage solutions and continuing to innovate with cooling technologies.

The goal is to create green data centers that can meet the world’s growing demand for compute power without increasing their carbon footprint.

“The compute density is so high that it makes more sense to invest in the cooling because you’re getting so much more compute for that same single direct-to-chip cooling element,” Parker said.

The Role of AI in Building a Sustainable Future

AI is not just a tool for optimizing systems — it’s a driver of sustainable innovation.

From improving the efficiency of energy grids to enhancing supply chain logistics, AI is leading the charge in reducing waste and emissions.

“AI, I firmly believe, is going to be the best tool that we’ve ever seen to help us achieve more sustainability and more sustainable outcomes,” Parker said.

Register for the NVIDIA AI Summit DC to explore how AI and accelerated computing are shaping the future of energy efficiency and climate solutions.

Artificial intelligence, like any transformative technology, is a work in progress, continually growing in its capabilities and its societal impact. Trustworthy AI initiatives recognize the real-world effects that AI can have on people and society, and aim to channel that power responsibly for positive change.

What Is Trustworthy AI?

Trustworthy AI is an approach to AI development that prioritizes safety and transparency for those who interact with it. Developers of trustworthy AI understand that no model is perfect, and take steps to help customers and the general public understand how the technology was built, its intended use cases and its limitations.

In addition to complying with privacy and consumer protection laws, trustworthy AI models are tested for safety, security and mitigation of unwanted bias. They’re also transparent — providing information such as accuracy benchmarks or a description of the training dataset — to various audiences including regulatory authorities, developers and consumers.

Principles of Trustworthy AI

Trustworthy AI principles are foundational to NVIDIA’s end-to-end AI development. They have a simple goal: to enable trust and transparency in AI and support the work of partners, customers and developers.

Privacy: Complying With Regulations, Safeguarding Data

AI is often described as data hungry. Often, the more data an algorithm is trained on, the more accurate its predictions.

But data has to come from somewhere. To develop trustworthy AI, it’s key to consider not just what data is legally available to use, but what data is socially responsible to use.

Developers of AI models that rely on data such as a person’s image, voice, artistic work or health records should evaluate whether individuals have provided appropriate consent for their personal information to be used in this way.

For institutions like hospitals and banks, building AI models means balancing the responsibility of keeping patient or customer data private while training a robust algorithm. NVIDIA has created technology that enables federated learning, where researchers develop AI models trained on data from multiple institutions without confidential information leaving a company’s private servers.

NVIDIA DGX systems and NVIDIA FLARE software have enabled several federated learning projects in healthcare and financial services, facilitating secure collaboration by multiple data providers on more accurate, generalizable AI models for medical image analysis and fraud detection.
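As a rough illustration of the federated learning idea above, the sketch below has each institution fit a model on its own records and share only the resulting weight, which a coordinator averages in proportion to each site’s sample count (the federated averaging scheme). It is not NVIDIA FLARE’s API; the function names, the one-parameter linear model and the toy data are assumptions made for illustration.

```python
from typing import List, Tuple

Record = Tuple[float, float]  # (feature x, target y)

def local_update(w: float, data: List[Record], lr: float = 0.1, steps: int = 20) -> float:
    """Fit y ~ w * x by gradient descent using one site's private data only."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(weights: List[float], sizes: List[int]) -> float:
    """Average site weights, weighting each site by its number of records."""
    return sum(w * n for w, n in zip(weights, sizes)) / sum(sizes)

def federated_round(global_w: float, sites: List[List[Record]]) -> float:
    """One round: every site trains locally, then only the weights are shared."""
    local_weights = [local_update(global_w, data) for data in sites]
    return federated_average(local_weights, [len(data) for data in sites])

# Hypothetical private datasets; both follow y = 2x.
hospital_a = [(1.0, 2.0), (2.0, 4.0)]
hospital_b = [(1.0, 2.0), (3.0, 6.0), (0.5, 1.0)]

w = 0.0
for _ in range(5):
    w = federated_round(w, [hospital_a, hospital_b])
```

Only the weights cross institutional boundaries here; the raw records in `hospital_a` and `hospital_b` are never pooled.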

Safety and Security: Avoiding Unintended Harm, Malicious Threats

Once deployed, AI systems have real-world impact, so it’s essential they perform as intended to preserve user safety.

The freedom to use publicly available AI algorithms creates immense possibilities for positive applications, but also means the technology can be used for unintended purposes.

To help mitigate risks, NVIDIA NeMo Guardrails keeps AI language models on track by allowing enterprise developers to set boundaries for their applications. Topical guardrails ensure that chatbots stick to specific subjects. Safety guardrails set limits on the language and data sources the apps use in their responses. Security guardrails seek to prevent malicious use of a large language model that’s connected to third-party applications or application programming interfaces.
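To make the idea of a boundary concrete, here is a toy input check in plain Python that combines a topical check with a simple blocked-term check. It does not use the NeMo Guardrails API; the topic keywords, the blocked terms and the `check_message` function are hypothetical, and a production system would rely on far more than keyword matching.

```python
import re

# Hypothetical configuration for a customer-service chatbot.
ALLOWED_TOPICS = {
    "billing": {"invoice", "refund", "payment"},
    "shipping": {"delivery", "tracking", "package"},
}
BLOCKED_TERMS = {"password", "ssn"}

def check_message(text: str) -> str:
    """Return 'blocked', the matched topic name, or 'off_topic'."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    if words & BLOCKED_TERMS:
        return "blocked"          # safety/security style rule
    for topic, keywords in ALLOWED_TOPICS.items():
        if words & keywords:
            return topic          # topical rule: message is in scope
    return "off_topic"            # topical rule: steer back to supported subjects
```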

NVIDIA Research is working with the DARPA-run SemaFor program to help digital forensics experts identify AI-generated images. Last year, researchers published a novel method for addressing social bias using ChatGPT. They’re also creating methods for avatar fingerprinting — a way to detect if someone is using an AI-animated likeness of another individual without their consent.

To protect data and AI applications from security threats, NVIDIA H100 and H200 Tensor Core GPUs are built with confidential computing, which ensures sensitive data is protected while in use, whether deployed on premises, in the cloud or at the edge. NVIDIA Confidential Computing uses hardware-based security methods to ensure unauthorized entities can’t view or modify data or applications while they’re running — traditionally a time when data is left vulnerable.

Transparency: Making AI Explainable

To create a trustworthy AI model, the algorithm can’t be a black box — its creators, users and stakeholders must be able to understand how the AI works to trust its results.

Transparency in AI is a set of best practices, tools and design principles that helps users and other stakeholders understand how an AI model was trained and how it works. Explainable AI, or XAI, is a subset of transparency covering tools that inform stakeholders how an AI model makes certain predictions and decisions.

Transparency and XAI are crucial to establishing trust in AI systems, but there’s no universal solution to fit every kind of AI model and stakeholder. Finding the right solution involves a systematic approach to identify who the AI affects, analyze the associated risks and implement effective mechanisms to provide information about the AI system.

Retrieval-augmented generation, or RAG, is a technique that advances AI transparency by connecting generative AI services to authoritative external databases, enabling models to cite their sources and provide more accurate answers. NVIDIA is helping developers get started with a RAG workflow that uses the NVIDIA NeMo framework for developing and customizing generative AI models.
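The retrieval step of RAG can be sketched in a few lines. The example below ranks documents by simple word overlap with the question and builds a prompt that carries source IDs, so a model can cite them. It is a stand-in under stated assumptions: real systems typically use embedding search over a vector database, and the document IDs, policy texts and function names here are invented for illustration rather than taken from the NeMo framework.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple

def tokenize(text: str) -> Counter:
    """Lowercase bag of words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def overlap(query: Counter, doc: Counter) -> int:
    """Number of query tokens that also appear in the document."""
    return sum(min(count, doc[token]) for token, count in query.items())

def retrieve(query: str, docs: Dict[str, str], k: int = 1) -> List[Tuple[str, str]]:
    """Return the k (doc_id, text) pairs with the highest overlap score."""
    q = tokenize(query)
    ranked = sorted(docs.items(), key=lambda item: overlap(q, tokenize(item[1])), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: Dict[str, str], k: int = 1) -> str:
    """Attach retrieved passages, tagged with their IDs, to the question."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, docs, k))
    return f"Answer using only the sources below and cite their IDs.\n{context}\nQuestion: {query}"

# Hypothetical knowledge base.
POLICY_DOCS = {
    "policy-1": "Refunds are issued within 14 days of a returned purchase.",
    "policy-2": "Chargebacks must be disputed within 30 days of the statement date.",
}

prompt = build_prompt("When must chargebacks be disputed?", POLICY_DOCS)
```

Because each passage keeps its ID in the prompt, the generated answer can point back to the document it relied on.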

NVIDIA is also part of the National Institute of Standards and Technology’s U.S. Artificial Intelligence Safety Institute Consortium, or AISIC, to help create tools and standards for responsible AI development and deployment. As a consortium member, NVIDIA will promote trustworthy AI by leveraging best practices for implementing AI model transparency.

And on NVIDIA’s hub for accelerated software, NGC, model cards offer detailed information about how each AI model works and was built. NVIDIA’s Model Card ++ format describes the datasets, training methods and performance measures used, licensing information, as well as specific ethical considerations.

Nondiscrimination: Minimizing Bias

AI models are trained by humans, often using data that is limited by size, scope and diversity. To ensure that all people and communities have the opportunity to benefit from this technology, it’s important to reduce unwanted bias in AI systems.

Beyond following government guidelines and antidiscrimination laws, trustworthy AI developers mitigate potential unwanted bias by looking for clues and patterns that suggest an algorithm is discriminatory, or involves the inappropriate use of certain characteristics. Racial and gender bias in data are well known, but other considerations include cultural bias and bias introduced during data labeling. To reduce unwanted bias, developers might incorporate different variables into their models.

Synthetic datasets offer one solution to reduce unwanted bias in training data used to develop AI for autonomous vehicles and robotics. If data used to train self-driving cars underrepresents uncommon scenes such as extreme weather conditions or traffic accidents, synthetic data can help augment the diversity of these datasets to better represent the real world, helping improve AI accuracy.

NVIDIA Omniverse Replicator, a framework built on the NVIDIA Omniverse platform for creating and operating 3D pipelines and virtual worlds, helps developers set up custom pipelines for synthetic data generation. And by integrating the NVIDIA TAO Toolkit for transfer learning with Innotescus, a web platform for curating unbiased datasets for computer vision, developers can better understand dataset patterns and biases to help address statistical imbalances.

Learn more about trustworthy AI on NVIDIA.com and the NVIDIA Blog. For more on tackling unwanted bias in AI, watch this talk from NVIDIA GTC and attend the trustworthy AI track at the upcoming conference, taking place March 18-21 in San Jose, Calif., and online.

Nations have long invested in domestic infrastructure to advance their economies, control their own data and take advantage of technology opportunities in areas such as transportation, communications, commerce, entertainment and healthcare.

AI, the most important technology of our time, is turbocharging innovation across every facet of society. It’s expected to generate trillions of dollars in economic dividends and productivity gains.

Countries are investing in sovereign AI to develop and harness such benefits on their own. Sovereign AI refers to a nation’s capabilities to produce artificial intelligence using its own infrastructure, data, workforce and business networks.

Why Sovereign AI Is Important

The global imperative for nations to invest in sovereign AI capabilities has grown since the rise of generative AI, which is reshaping markets, challenging governance models, inspiring new industries and transforming others — from gaming to biopharma. It’s also rewriting the nature of work, as people in many fields start using AI-powered “copilots.”

Sovereign AI encompasses both physical and data infrastructures. The latter includes sovereign foundation models, such as large language models, developed by local teams and trained on local datasets to promote inclusiveness with specific dialects, cultures and practices.

For example, speech AI models can help preserve, promote and revitalize indigenous languages. And LLMs aren’t just for teaching AIs human languages, but for writing software code, protecting consumers from financial fraud, teaching robots physical skills and much more.

In addition, as artificial intelligence and accelerated computing become increasingly critical tools for combating climate change, boosting energy efficiency and protecting against cybersecurity threats, sovereign AI has a pivotal role to play in equipping every nation to bolster its sustainability efforts.

Factoring In AI Factories

“AI factories,” where data comes in and intelligence comes out, are the new, essential infrastructure for AI production. These are next-generation data centers that host advanced, full-stack accelerated computing platforms for the most computationally intensive tasks.

Nations are building up domestic computing capacity through various models. Some are procuring and operating sovereign AI clouds in collaboration with state-owned telecommunications providers or utilities. Others are sponsoring local cloud partners to provide a shared AI computing platform for public- and private-sector use.

“The AI factory will become the bedrock of modern economies across the world,” NVIDIA founder and CEO Jensen Huang said in a recent media Q&A.

Sovereign AI Efforts Underway

Nations around the world are already investing in sovereign AI.

Since 2019, NVIDIA’s AI Nations initiative has helped countries spanning every region of the globe to build sovereign AI capabilities, including ecosystem enablement and workforce development, creating the conditions for engineers, developers, scientists, entrepreneurs, creators and public sector officials to pursue their AI ambitions at home.

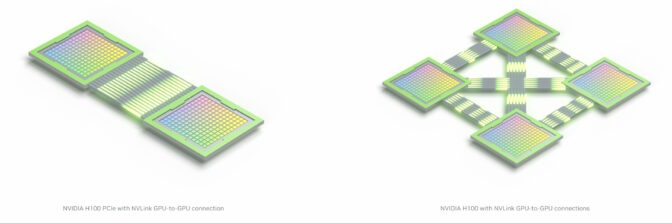

France-based Scaleway, a subsidiary of the iliad Group, is building Europe’s most powerful cloud-native AI supercomputer. The NVIDIA DGX SuperPOD comprises 127 DGX H100 systems, representing 1,016 NVIDIA H100 Tensor Core GPUs interconnected by NVIDIA NVLink technology and the NVIDIA Quantum-2 InfiniBand platform. NVIDIA DGX systems also include NVIDIA AI Enterprise software for secure, supported and stable AI development and deployment.

Swisscom Group, majority-owned by the Swiss government, recently announced its Italian subsidiary, Fastweb, will build Italy’s first and most powerful NVIDIA DGX-powered supercomputer — also using NVIDIA AI Enterprise software — to develop the first LLM natively trained in the Italian language.

With these NVIDIA technologies and its own cloud and cybersecurity infrastructures, Fastweb plans to launch an end-to-end system with which Italian companies, public-administration organizations and startups can develop generative AI applications for any industry.

The government of India has also announced sovereign AI initiatives promoting workforce development, sustainable computing and private-sector investment in domestic compute capacity. India-based Tata Group, for example, is building a large-scale AI infrastructure powered by the NVIDIA GH200 Grace Hopper Superchip, while Reliance Industries will develop a foundation LLM tailored for generative AI and trained on the diverse languages of the world’s most populous nation. NVIDIA is also working with India’s top universities to support and expand local researcher and developer communities.

Japan is going all in with sovereign AI, collaborating with NVIDIA to upskill its workforce, support Japanese language model development, and expand AI adoption for natural disaster response and climate resilience. These efforts include public-private partnerships that are incentivizing leaders like SoftBank Corp. to collaborate with NVIDIA on building a generative AI platform for 5G and 6G applications as well as a network of distributed AI factories.

Finally, Singapore is fostering a range of sovereign AI programs, including by partnering with NVIDIA to upgrade its National Super Computer Center, or NSCC, with NVIDIA H100 GPUs. In addition, Singtel, a leading communications services provider building energy-efficient AI factories across Southeast Asia, is accelerated by NVIDIA Hopper architecture GPUs and NVIDIA AI reference architectures.

Read more about sovereign AI and its transformative potential.

Explore generative AI sessions and experiences at NVIDIA GTC, the global conference on AI and accelerated computing, running March 18-21 in San Jose, Calif., and online.

The Wild West had gunslingers, bank robberies and bounties; today’s digital frontier has identity theft, credit card fraud and chargebacks.

Cashing in on financial fraud has become a multibillion-dollar criminal enterprise. And generative AI in the hands of fraudsters only promises to make this more profitable.

Credit card losses worldwide are expected to reach $43 billion by 2026, according to the Nilson Report.

Financial fraud is perpetrated in a growing number of ways, like harvesting hacked data from the dark web for credit card theft, using generative AI for phishing personal information, and laundering money between cryptocurrency, digital wallets and fiat currencies. Many other financial schemes are lurking in the digital underworld.

To keep up, financial services firms are wielding AI for fraud detection. That’s because many of these digital crimes need to be halted in their tracks in real time so that consumers and financial firms can stop losses right away.

So how is AI used for fraud detection?

AI for fraud detection uses multiple machine learning models to detect anomalies in customer behaviors and connections as well as patterns of accounts and behaviors that fit fraudulent characteristics.
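As a simple statistical baseline for the anomaly detection described above (not one of the machine learning models a production system would use), the sketch below flags transaction amounts that sit far from a customer’s typical spending, using the median and the median absolute deviation. The function name, the sample amounts and the 3.5 cutoff are illustrative assumptions.

```python
from statistics import median
from typing import List

def flag_anomalies(amounts: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against
    # 0.6745 rescales the MAD so scores are comparable to standard deviations.
    return [i for i, a in enumerate(amounts) if 0.6745 * abs(a - med) / mad > threshold]

# Hypothetical purchase history for one customer.
history = [42.0, 38.5, 45.0, 40.0, 39.5, 41.0, 950.0]
suspicious = flag_anomalies(history)
```

Here only the 950.0 purchase at index 6 is flagged; the everyday amounts stay well under the cutoff.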

Generative AI Can Be Tapped as Fraud Copilot

Much of financial services involves text and numbers. Generative AI and large language models (LLMs), capable of learning meaning and context, promise disruptive capabilities across industries with new levels of output and productivity. Financial services firms can harness generative AI to develop more intelligent and capable chatbots and improve fraud detection.

On the opposite side, bad actors can circumvent AI guardrails with crafty generative AI prompts to use it for fraud. And LLMs are delivering human-like writing, enabling fraudsters to draft more contextually relevant emails without typos and grammar mistakes. Many different tailored versions of phishing emails can be quickly created, making generative AI an excellent copilot for perpetrating scams. There are also a number of dark web tools like FraudGPT, which can exploit generative AI for cybercrimes.

Generative AI can be exploited for financial harm in voice authentication security measures as well. Some banks are using voice authentication to help authorize users. If an attacker can obtain voice samples, a banking customer’s voice can be cloned using deep fake technology in an effort to breach such systems. The voice data can be gathered with spam phone calls that attempt to lure the call recipient into responding by voice.

Chatbot scams are such a problem that the U.S. Federal Trade Commission called out concerns for the use of LLMs and other technology to simulate human behavior for deep fake videos and voice clones applied in imposter scams and financial fraud.

How Is Generative AI Tackling Misuse and Fraud Detection?

Fraud review has a powerful new tool. Workers handling manual fraud reviews can now be assisted with LLM-based assistants running RAG on the backend to tap into information from policy documents that can help expedite decision-making on whether cases are fraudulent, vastly accelerating the process.

LLMs are being adopted to predict the next transaction of a customer, which can help payments firms preemptively assess risks and block fraudulent transactions.

Generative AI also helps combat transaction fraud by improving accuracy, generating reports, reducing investigations and mitigating compliance risk.

Generating synthetic data is another important application of generative AI for fraud prevention. Synthetic data can improve the number of data records used to train fraud detection models and increase the variety and sophistication of examples to teach the AI to recognize the latest techniques employed by fraudsters.
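The passage above refers to generative models. As a much simpler stand-in, the sketch below creates extra fraud records by interpolating between pairs of real ones, in the spirit of SMOTE-style oversampling. The feature layout (amount, transactions per hour), the sample values and the function name are assumptions for illustration.

```python
import random
from typing import List

def synthesize(minority: List[List[float]], n: int, seed: int = 0) -> List[List[float]]:
    """Create n synthetic rows, each on the line segment between two real rows."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n):
        a, b = rng.sample(minority, 2)   # pick two distinct real fraud records
        t = rng.random()                 # interpolation factor in [0, 1)
        synthetic.append([ai + t * (bi - ai) for ai, bi in zip(a, b)])
    return synthetic

# Hypothetical labeled fraud examples: [amount, transactions per hour].
fraud_rows = [[900.0, 3.0], [1200.0, 2.0], [1000.0, 4.0]]
augmented = fraud_rows + synthesize(fraud_rows, n=5)
```

Interpolation only fills in the space between known cases; the appeal of generative approaches is that they can also propose new variations.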

NVIDIA offers tools to help enterprises embrace generative AI to build chatbots and virtual agents with a workflow that uses retrieval-augmented generation. RAG enables companies to use natural language prompts to access vast datasets for information retrieval.

Harnessing NVIDIA AI workflows can help accelerate building and deploying enterprise-grade capabilities to accurately produce responses for various use cases, using foundation models, the NVIDIA NeMo framework, NVIDIA Triton Inference Server and a GPU-accelerated vector database to deploy RAG-powered chatbots.

There’s an industry focus on safety to ensure generative AI isn’t easily exploited for harm. NVIDIA released NeMo Guardrails to help ensure that intelligent applications powered by LLMs, such as OpenAI’s ChatGPT, are accurate, appropriate, on topic and secure.

The open-source software is designed to help keep AI-powered applications from being exploited for fraud and other misuses.

What Are the Benefits of AI for Fraud Detection?

Fraud detection has been a challenge across banking, finance, retail and e-commerce. Fraud doesn’t only hurt organizations financially; it can also do reputational harm.

It’s a headache for consumers, as well, when fraud models from financial services firms overreact and register false positives that shut down legitimate transactions.

So financial services sectors are developing more advanced models using more data to fortify themselves against losses financially and reputationally. They’re also aiming to reduce false positives in fraud detection for transactions to improve customer satisfaction and win greater share among merchants.
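The trade-off between catching fraud and blocking legitimate purchases can be measured directly. A minimal sketch, assuming labels where 1 means fraud and 0 means legitimate; the function name and the sample labels are invented for illustration:

```python
from typing import Dict, List

def fraud_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Compute precision, recall and false positive rate for binary fraud labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # fraud correctly blocked
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # legitimate purchase blocked
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # fraud missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # legitimate purchase approved
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical outcomes for eight transactions.
actual    = [1, 0, 0, 1, 0, 0, 0, 1]
predicted = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = fraud_metrics(actual, predicted)
```

In this toy sample one of five legitimate transactions is declined, a false positive rate of 0.2, which is the number a firm would try to push down without letting recall fall.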

Financial Services Firms Embrace AI for Identity Verification

The financial services industry is developing AI for identity verification. AI-driven applications using deep learning with graph neural networks (GNNs), natural language processing (NLP) and computer vision can improve identity verification for know-your-customer (KYC) and anti-money laundering (AML) requirements, leading to improved regulatory compliance and reduced costs.

Computer vision analyzes photo documentation such as driver’s licenses and passports to identify fakes. At the same time, NLP reads the documents to measure the veracity of the data on the documents as the AI analyzes them to look for fraudulent records.

Gains in KYC and AML requirements have massive regulatory and economic implications. Financial institutions, including banks, were fined nearly $5 billion in 2022 for AML violations, sanctions breaches and failures in KYC systems, according to the Financial Times.

Harnessing Graph Neural Networks and NVIDIA GPUs

GNNs have been embraced for their ability to reveal suspicious activity. They’re capable of looking at billions of records and identifying previously unknown patterns of activity to make correlations about whether an account has in the past sent a transaction to a suspicious account.

NVIDIA has an alliance with the Deep Graph Library team, as well as the PyTorch Geometric team, to provide containerized GNN frameworks that include the latest updates, NVIDIA RAPIDS libraries and more, helping users stay up to date on cutting-edge techniques.

These GNN framework containers are NVIDIA-optimized and performance-tuned and tested to get the most out of NVIDIA GPUs.

With access to the NVIDIA AI Enterprise software platform, developers can tap into NVIDIA RAPIDS, NVIDIA Triton Inference Server and the NVIDIA TensorRT software development kit to support enterprise deployments at scale.

Improving Anomaly Detection With GNNs

Fraudsters have sophisticated techniques and can learn ways to outmaneuver fraud detection systems. One way is by unleashing complex chains of transactions to avoid notice. This is where traditional rules-based systems can miss patterns and fail.

GNNs build on a concept of representation within the model of local structure and feature context. The information from the edge and node features is propagated with aggregation and message passing among neighboring nodes.

When GNNs run multiple layers of graph convolution, the final node states contain information from nodes multiple hops away. The larger receptive field of GNNs can track the more complex and longer transaction chains used by financial fraud perpetrators in attempts to obscure their tracks.
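The link between layer count and reach can be seen in a stripped-down version of message passing. The sketch below uses plain mean aggregation with no learned weights or nonlinearity, so it is an illustration of the receptive field rather than a real GNN layer; the account names and the single “suspicious” score are hypothetical.

```python
from typing import Dict, List

def message_passing(adj: Dict[str, List[str]], features: Dict[str, float], layers: int) -> Dict[str, float]:
    """Each layer replaces a node's state with the mean of its own and its neighbors' states."""
    state = dict(features)
    for _ in range(layers):
        new_state = {}
        for node, neighbors in adj.items():
            messages = [state[n] for n in neighbors] + [state[node]]
            new_state[node] = sum(messages) / len(messages)
        state = new_state
    return state

# A chain of transfers A - B - C - D, where only D is known to be suspicious.
chain = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}
scores = {"A": 0.0, "B": 0.0, "C": 0.0, "D": 1.0}

after_two = message_passing(chain, scores, layers=2)
after_three = message_passing(chain, scores, layers=3)
```

Account A sits three hops from D, so its state is still 0.0 after two layers and becomes nonzero only once a third layer is applied.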

GNNs Enable Training Unsupervised or Self-Supervised

Detecting financial fraud patterns at massive scale is difficult for two reasons: tens of terabytes of transaction data need to be analyzed in the blink of an eye, and there is a relative lack of labeled data for real fraud activity needed to train models.

While GNNs can cast a wider detection net on fraud patterns, they can also train on an unsupervised or self-supervised task.

By using techniques such as Bootstrapped Graph Latents — a graph representation learning method — or link prediction with negative sampling, GNN developers can pretrain models without labels and fine-tune models with far fewer labels, producing strong graph representations. The output of this can be used for models like XGBoost, GNNs or techniques for clustering, offering better results when deployed for inference.
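Link prediction needs no fraud labels because the graph supplies its own training signal: observed edges are positives, and randomly drawn node pairs with no edge serve as negatives. A minimal sketch of that sampling step, assuming an undirected graph with unique node names and no self-loops or duplicate edges; the function and account names are illustrative:

```python
import random
from typing import List, Tuple

Edge = Tuple[str, str]

def sample_negative_edges(nodes: List[str], edges: List[Edge], num_samples: int, seed: int = 0) -> List[Edge]:
    """Draw distinct node pairs that are not connected in the observed graph."""
    rng = random.Random(seed)
    existing = {frozenset(e) for e in edges}
    # Cap the request at the number of non-edges so the loop always terminates.
    max_possible = len(nodes) * (len(nodes) - 1) // 2 - len(existing)
    target = min(num_samples, max_possible)
    negatives = set()
    while len(negatives) < target:
        u, v = rng.sample(nodes, 2)
        pair = frozenset((u, v))
        if pair not in existing:
            negatives.add(pair)
    return sorted(tuple(sorted(p)) for p in negatives)

accounts = ["a", "b", "c", "d"]
transfers = [("a", "b"), ("b", "c"), ("c", "d")]
negatives = sample_negative_edges(accounts, transfers, num_samples=3)

# Self-supervised training pairs: label 1 for real edges, 0 for sampled non-edges.
training_pairs = [(e, 1) for e in transfers] + [(e, 0) for e in negatives]
```

A model pretrained to separate these two groups learns node representations that can later be fine-tuned with a small number of fraud labels.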

Tackling Model Explainability and Bias

GNNs also enable model explainability with a suite of tools. Explainable AI is an industry practice that enables organizations to use such tools and techniques to explain how AI models make decisions, allowing them to safeguard against bias.

Heterogeneous graph transformers and graph attention networks, both GNN models, apply attention mechanisms in each layer of the GNN, allowing developers to identify the message paths that GNNs use to reach a final output.
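Attention makes a layer inspectable because each neighbor receives an explicit weight. In the sketch below, raw scores for one node’s neighbors are normalized with a softmax, and the largest weight marks the most influential incoming message. In a real graph attention network the scores come from learned parameters; the values and account names here are made up for illustration.

```python
import math
from typing import Dict

def attention_weights(scores: Dict[str, float]) -> Dict[str, float]:
    """Softmax over neighbor scores, shifted by the max for numerical stability."""
    peak = max(scores.values())
    exps = {node: math.exp(s - peak) for node, s in scores.items()}
    total = sum(exps.values())
    return {node: e / total for node, e in exps.items()}

# Hypothetical unnormalized attention scores for the neighbors of one account.
neighbor_scores = {"acct_17": 2.0, "acct_42": 0.5, "acct_99": -1.0}
weights = attention_weights(neighbor_scores)
most_influential = max(weights, key=weights.get)
```

Reading off `most_influential` at each layer traces the path of messages that contributed most to the final prediction.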

Even without an attention mechanism, techniques such as GNNExplainer, PGExplainer and GraphMask have been suggested to explain GNN outputs.

Leading Financial Services Firms Embrace AI for Gains

- American Express: Improved fraud detection accuracy by 6% with deep learning models and used NVIDIA TensorRT on NVIDIA Triton Inference Server.

- BNY Mellon: Bank of New York Mellon improved fraud detection accuracy by 20% with federated learning. BNY built a collaborative fraud detection framework that runs Inpher’s secure multi-party computation, which safeguards third-party data on NVIDIA DGX systems.

- PayPal: PayPal sought a new fraud detection system that could operate worldwide continuously to protect customer transactions from potential fraud in real time. The company delivered a new level of service, using NVIDIA GPU-powered inference to improve real-time fraud detection by 10% while lowering server capacity nearly 8x.

- Swedbank: Among Sweden’s largest banks, Swedbank trained NVIDIA GPU-driven generative adversarial networks to detect suspicious activities in efforts to stop fraud and money laundering.

Learn how NVIDIA AI Enterprise addresses fraud detection at this webinar.

Great companies thrive on stories. Sid Siddeek, who runs NVIDIA’s venture capital arm, knows this well.

Siddeek still remembers one of his first jobs, schlepping presentation materials from one investor meeting to another, helping the startup’s CEO and management team get the story out while working from a trailer that “shook when the door opened,” he said.

That CEO was Jensen Huang. The startup was NVIDIA.

Siddeek, who has worked as an investor and an entrepreneur, knows how important it is to find the right people to share your company’s story with early on, whether they’re customers or partners, employees or investors.

It’s this very principle that underpins NVIDIA’s multifaceted approach to investing in the next wave of innovation, a strategy also championed by Vishal Bhagwati, who leads NVIDIA’s corporate development efforts.

It’s an effort that’s resulted in more than two dozen investments so far this year, accelerating as the pace of innovation in AI and accelerated computing quickens.

NVIDIA’s Three-Pronged Strategy to Support the AI Ecosystem

There are three ways that NVIDIA invests in the ecosystem, driving the transformation unleashed by accelerated computing. First, through NVIDIA’s corporate investments, overseen by Bhagwati. Second, through NVentures, our venture capital arm, led by Siddeek. And finally, through NVIDIA Inception, our vehicle for supporting startups and connecting them to venture capital.

There couldn’t be a better time to support companies harnessing NVIDIA technologies. AI alone could contribute more than $15 trillion to the global economy by 2030, according to PwC.

And if you’re working in AI and accelerated computing right now, NVIDIA stands ready to help. Developers across every industry in every country are building accelerated computing applications. And they’re just getting going.

The result is a collection of companies that are advancing the story of AI every day. They include Cohere, CoreWeave, Hugging Face, Inflection, Inceptive and many more. And we’re right alongside them.

“Partnering with NVIDIA is a game-changer,” said Ed Mehr, CEO of Machina Labs. “Their unmatched expertise will supercharge our AI and simulation capabilities.”

Corporate Investments: Growing Our Ecosystem

NVIDIA’s corporate investments arm focuses on strategic collaborations. These partnerships stimulate joint innovation, enhance the NVIDIA platform and expand the ecosystem. Since the beginning of 2023, 14 investments have been announced.

These target companies include Ayar Labs, specializing in chip-to-chip optical connectivity, and Hugging Face, a hub for advanced AI models.

The portfolio also includes next-generation enterprise solutions. Databricks offers an industry-leading data platform for machine learning, while Cohere provides enterprise automation through AI. Other notable companies are Recursion, Kore.ai and Utilidata, each contributing unique solutions in drug discovery, conversational AI and smart electricity grids, respectively.

Consumer services are another investment focus. Inflection is crafting a personal AI for creative expression, while Runway serves as a platform for art and creativity through generative AI.

The investment strategy extends to autonomous machines. Ready Robotics is developing an operating system for industrial robotics, and Skydio builds autonomous drones.

NVIDIA’s most recent investments are in cloud service providers like CoreWeave. These platforms cater to a diverse clientele, from startups to Fortune 500 companies seeking to build next-generation AI services.

NVentures: Investing Alongside Entrepreneurs

Through NVentures, we support innovators who are deeply relevant to NVIDIA. We aim to generate strong financial returns and expand the ecosystem by funding companies that use our platforms across a wide range of industries.

To date, NVentures has made 19 investments in companies in healthcare, manufacturing and other key verticals. Some examples of our portfolio companies include:

- Genesis Therapeutics, Inceptive, Terray, Charm, Evozyne, Generate, Superluminal: revolutionizing drug discovery

- Machina Labs, Seurat Technologies: disrupting industrial processes to improve manufacturing

- PassiveLogic: automating building systems with AI

- MindsDB: for developers that need to connect enterprise data to AI

- Moon Surgical: improving laparoscopic surgery with AI

- Twelve Labs: developing multimodal foundation models for video understanding

- Flywheel: accelerating medical imaging data development

- Luma AI: developers of visual and multimodal models

- Outrider: automating logistics hub operation

- Synthesia: AI video for the enterprise

- Replicate: developer platform for open-source and custom models

All these companies are building on work being done inside and outside NVIDIA.

“NVentures has a network, not just within NVIDIA, but throughout the industry, to make sure we have access to the best technology and the best people to build all the different modules that have to come together to define the distribution and supply chain of the future,” said Andrew Smith, CEO of Outrider.

NVIDIA Inception: Supporting Startups and Connecting Them to Investors

In addition, we’re continuing to support startups with NVIDIA Inception. Launched in 2016, this free global program offers technology and marketing support to over 17,000 startups across multiple industries and over 125 countries.

And, as part of Inception, we’re partnering with venture capitalists through our VC Alliance, a program that offers benefits to our valued network of venture capital firms, including connecting startups with potential investors.

Partnering With Innovators in Every Industry

Whatever our relationship, whether as a partner or investor, we can offer companies unique forms of support.

NVIDIA has the technology. NVIDIA has the richest set of libraries and the deepest understanding of the frameworks needed to optimize training and inference pipelines.

We have the go-to-market skills. NVIDIA has tremendous field sales, solution architect and developer relations organizations with a long track record of working with the most innovative startups and the largest companies in the world.

We know how to grow. We have people throughout our organization who are recognized leaders in their respective fields and can offer expert advice to companies of all sizes and industries.

“Partnering with NVIDIA was an easy choice,” said Victor Riparbelli, cofounder and CEO of Synthesia. “We use their hardware, benefit from their AI expertise and get valuable insights, allowing us to build better products faster.”

Accelerating the Greatest Breakthroughs of Our Time

In turn, these investments augment our R&D in the software, systems and semiconductors undergirding this ecosystem.

With NVIDIA’s technologies poised to accelerate the work of researchers and scientists, entrepreneurs, startups and Fortune 500 companies, finding ways to support companies that rely on our technologies, with engineering resources, marketing support and capital, is more vital than ever.

GPUs have been called the rare earth metals, even the gold, of artificial intelligence, because they’re foundational for today’s generative AI era.

Three technical reasons, and many stories, explain why that’s so. Each reason has multiple facets well worth exploring, but at a high level:

- GPUs employ parallel processing.

- GPU systems scale up to supercomputing heights.

- The GPU software stack for AI is broad and deep.

The net result is GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference as well as gains across a wide array of applications that use accelerated computing.

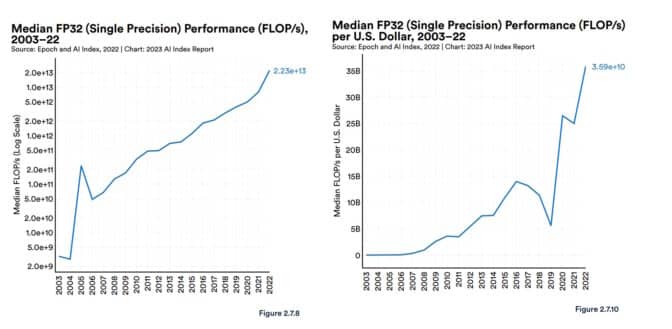

In its recent report on AI, Stanford’s Human-Centered AI group provided some context. GPU performance “has increased roughly 7,000 times” since 2003 and price per performance is “5,600 times greater,” it reported.

The report also cited analysis from Epoch, an independent research group that measures and forecasts AI advances.

“GPUs are the dominant computing platform for accelerating machine learning workloads, and most (if not all) of the biggest models over the last five years have been trained on GPUs … [they have] thereby centrally contributed to the recent progress in AI,” Epoch said on its site.

A 2020 study assessing AI technology for the U.S. government drew similar conclusions.

“We expect [leading-edge] AI chips are one to three orders of magnitude more cost-effective than leading-node CPUs when counting production and operating costs,” it said.

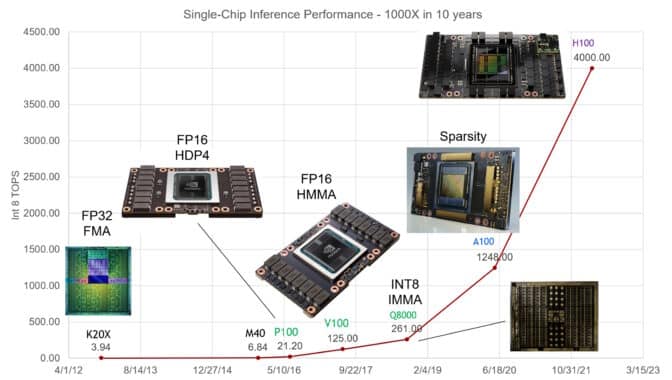

NVIDIA GPUs have increased performance on AI inference 1,000x in the last ten years, said Bill Dally, the company’s chief scientist, in a keynote at Hot Chips, an annual gathering of semiconductor and systems engineers.

ChatGPT Spread the News

ChatGPT provided a powerful example of how GPUs are great for AI. The large language model (LLM), trained and run on thousands of NVIDIA GPUs, runs generative AI services used by more than 100 million people.

Since its 2018 launch, MLPerf, the industry-standard benchmark for AI, has provided numbers that detail the leading performance of NVIDIA GPUs on both AI training and inference.

For example, NVIDIA Grace Hopper Superchips swept the latest round of inference tests. NVIDIA TensorRT-LLM, inference software released since that test, delivers up to an 8x boost in performance and more than a 5x reduction in energy use and total cost of ownership. Indeed, NVIDIA GPUs have won every round of MLPerf training and inference tests since the benchmark’s launch.

In February, NVIDIA GPUs delivered leading results for inference, serving up thousands of inferences per second on the most demanding models in the STAC-ML Markets benchmark, a key technology performance gauge for the financial services industry.

A Red Hat software engineering team put it succinctly in a blog: “GPUs have become the foundation of artificial intelligence.”

AI Under the Hood

A brief look under the hood shows why GPUs and AI make a powerful pairing.

An AI model, also called a neural network, is essentially a mathematical lasagna, made from layer upon layer of linear algebra equations. Each equation represents the likelihood that one piece of data is related to another.

For their part, GPUs pack thousands of cores, tiny calculators working in parallel to slice through the math that makes up an AI model. This, at a high level, is how AI computing works.
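The layered structure described above can be sketched in a few lines of plain Python. This is a toy illustration only: the layer sizes, the weights and the choice of a ReLU activation are made-up assumptions, not values from any real model. The point to notice is that each output of a layer is an independent dot product, which is the kind of work a GPU can spread across its many cores.

```python
# Toy forward pass: a neural network as stacked layers of linear algebra.
# All sizes and weights are made-up illustrative values.

def dense_layer(inputs, weights, biases):
    """One layer: out[j] = relu(sum_i inputs[i] * weights[i][j] + biases[j])."""
    outputs = []
    for j in range(len(biases)):
        # Output j depends only on the inputs, never on other outputs,
        # so all of these dot products could be computed in parallel.
        total = biases[j]
        for i, x in enumerate(inputs):
            total += x * weights[i][j]
        outputs.append(max(0.0, total))  # ReLU activation (an assumption)
    return outputs


def forward(inputs, layers):
    """Feed the data through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


layers = [
    # Layer 1: 2 inputs -> 3 outputs
    ([[1.0, -1.0, 0.5],
      [0.5, 2.0, -1.0]], [0.0, 0.0, 1.0]),
    # Layer 2: 3 inputs -> 1 output
    ([[1.0], [1.0], [1.0]], [0.5]),
]

result = forward([2.0, 1.0], layers)
print(result)
```

On a CPU the inner loops run one after another. A GPU library would instead hand each output element, or each block of a matrix multiplication, to a separate group of cores.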

Highly Tuned Tensor Cores

Over time, NVIDIA’s engineers have tuned GPU cores to the evolving needs of AI models. The latest GPUs include Tensor Cores that are 60x more powerful than the first-generation designs for processing the matrix math neural networks use.

In addition, NVIDIA Hopper Tensor Core GPUs include a Transformer Engine that can automatically adjust to the optimal precision needed to process transformer models, the class of neural networks that spawned generative AI.

Along the way, each GPU generation has packed more memory and optimized techniques to store an entire AI model in a single GPU or set of GPUs.

Models Grow, Systems Expand

The complexity of AI models is expanding a whopping 10x a year.

The current state-of-the-art LLM, GPT-4, packs more than a trillion parameters, a metric of its mathematical density. That’s up from less than 100 million parameters for a popular LLM in 2018.

GPU systems have kept pace by ganging up on the challenge. They scale up to supercomputers, thanks to their fast NVLink interconnects and NVIDIA Quantum InfiniBand networks.

For example, the DGX GH200, a large-memory AI supercomputer, combines up to 256 NVIDIA GH200 Grace Hopper Superchips into a single data-center-sized GPU with 144 terabytes of shared memory.

Each GH200 superchip is a single server with 72 Arm Neoverse CPU cores and four petaflops of AI performance. A new four-way Grace Hopper systems configuration puts in a single compute node a whopping 288 Arm cores and 16 petaflops of AI performance with up to 2.3 terabytes of high-speed memory.
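The system-scale figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only numbers from the text, plus one assumption the text does not state: that the 144 terabytes of shared memory is split evenly across the 256 superchips.

```python
# Cross-check the scale figures quoted in the text.
# Assumption: shared memory is divided evenly among the superchips.

SUPERCHIPS_IN_DGX_GH200 = 256
SHARED_MEMORY_TB = 144

CORES_PER_SUPERCHIP = 72
PETAFLOPS_PER_SUPERCHIP = 4
SUPERCHIPS_PER_FOUR_WAY_NODE = 4

# Memory share of one superchip, in terabytes.
memory_per_superchip_tb = SHARED_MEMORY_TB / SUPERCHIPS_IN_DGX_GH200

# Totals for the four-way Grace Hopper node.
four_way_cores = SUPERCHIPS_PER_FOUR_WAY_NODE * CORES_PER_SUPERCHIP
four_way_petaflops = SUPERCHIPS_PER_FOUR_WAY_NODE * PETAFLOPS_PER_SUPERCHIP
four_way_memory_tb = SUPERCHIPS_PER_FOUR_WAY_NODE * memory_per_superchip_tb

print(f"memory per superchip: {memory_per_superchip_tb} TB")
print(f"four-way node: {four_way_cores} cores, "
      f"{four_way_petaflops} petaflops, {four_way_memory_tb} TB")
```

Under that even-split assumption, the core and petaflops totals match the 288 and 16 quoted for the four-way node, and the memory estimate lands close to the "up to 2.3 terabytes" figure.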

And NVIDIA H200 Tensor Core GPUs announced in November pack up to 288 gigabytes of the latest HBM3e memory technology.

Software Covers the Waterfront

An expanding ocean of GPU software has evolved since 2007 to enable every facet of AI, from deep-tech features to high-level applications.

The NVIDIA AI platform includes hundreds of software libraries and apps. The CUDA programming language and the cuDNN-X library for deep learning provide a base on top of which developers have created software like NVIDIA NeMo, a framework to let users build, customize and run inference on their own generative AI models.

Many of these elements are available as open-source software, the grab-and-go staple of software developers. More than a hundred of them are packaged into the NVIDIA AI Enterprise platform for companies that require full security and support. Increasingly, they’re also available from major cloud service providers as APIs and services on NVIDIA DGX Cloud.

SteerLM, one of the latest AI software updates for NVIDIA GPUs, lets users fine-tune models during inference.

A 70x Speedup in 2008

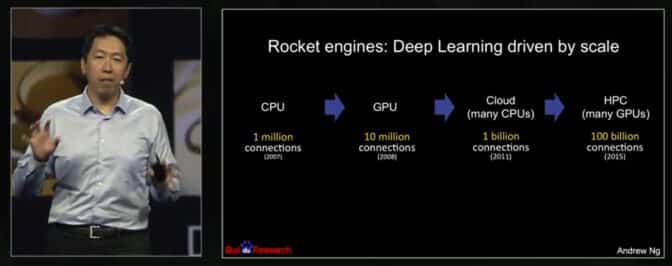

Success stories date back to a 2008 paper from AI pioneer Andrew Ng, then a Stanford researcher. Using two NVIDIA GeForce GTX 280 GPUs, his three-person team achieved a 70x speedup over CPUs processing an AI model with 100 million parameters, finishing work that used to require several weeks in a single day.

“Modern graphics processors far surpass the computational capabilities of multicore CPUs, and have the potential to revolutionize the applicability of deep unsupervised learning methods,” they reported.

In a 2015 talk at NVIDIA GTC, Ng described how he continued using more GPUs to scale up his work, running larger models at Google Brain and Baidu. Later, he helped found Coursera, an online education platform where he taught hundreds of thousands of AI students.

Ng counts Geoff Hinton, one of the godfathers of modern AI, among the people he influenced. “I remember going to Geoff Hinton saying check out CUDA, I think it can help build bigger neural networks,” he said in the GTC talk.

The University of Toronto professor spread the word. “In 2009, I remember giving a talk at NIPS [now NeurIPS], where I told about 1,000 researchers they should all buy GPUs because GPUs are going to be the future of machine learning,” Hinton said in a press report.

Fast Forward With GPUs

AI’s gains are expected to ripple across the global economy.

A McKinsey report in June estimated that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually across the 63 use cases it analyzed in industries like banking, healthcare and retail. So, it’s no surprise Stanford’s 2023 AI report said that a majority of business leaders expect to increase their investments in AI.

Today, more than 40,000 companies use NVIDIA GPUs for AI and accelerated computing, attracting a global community of 4 million developers. Together they’re advancing science, healthcare, finance and virtually every industry.

Among the latest achievements, NVIDIA described a whopping 700,000x speedup using AI to ease climate change by keeping carbon dioxide out of the atmosphere. It’s one of many ways NVIDIA is applying the performance of GPUs to AI and beyond.

Learn how GPUs put AI into production.

Explore generative AI sessions and experiences at NVIDIA GTC, the global conference on AI and accelerated computing, running March 18-21 in San Jose, Calif., and online.

Generative AI is the latest turn in the fast-changing digital landscape. One of the groundbreaking innovations making it possible is a relatively new term: SuperNIC.

What Is a SuperNIC?

SuperNIC is a new class of network accelerators designed to supercharge hyperscale AI workloads in Ethernet-based clouds. It provides lightning-fast network connectivity for GPU-to-GPU communication, achieving speeds reaching 400Gb/s using remote direct memory access (RDMA) over converged Ethernet (RoCE) technology.

SuperNICs combine the following unique attributes:

- High-speed packet reordering, available when paired with an NVIDIA network switch, ensures that data packets are received and processed in the same order they were originally transmitted. This maintains the sequential integrity of the data flow.

- Advanced congestion control using real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks.

- Programmable compute on the input/output (I/O) path to enable customization and extensibility of network infrastructure in AI cloud data centers.

- Power-efficient, low-profile design to efficiently accommodate AI workloads within constrained power budgets.

- Full-stack AI optimization, including compute, networking, storage, system software, communication libraries and application frameworks.
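To make the packet reordering idea from the list above concrete, here is a minimal software sketch of a reorder buffer. It is a hypothetical illustration, not how a SuperNIC or any NVIDIA product implements the feature (the hardware does this at line rate). The class name, the sequence-number scheme and the packet format are all assumptions made for the example.

```python
# Toy reorder buffer: release packets strictly in sequence-number order.
# Hypothetical illustration; names and packet format are assumptions.

class ReorderBuffer:
    def __init__(self, first_seq=0):
        self.next_seq = first_seq  # next sequence number to deliver
        self.pending = {}          # out-of-order packets, keyed by sequence number

    def receive(self, seq, payload):
        """Accept one packet; return the payloads now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []              # duplicate or already delivered: drop it
        self.pending[seq] = payload
        delivered = []
        # Drain every buffered packet that continues the unbroken sequence.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


reorder = ReorderBuffer()
arrivals = [(1, "B"), (0, "A"), (3, "D"), (2, "C")]  # arrival order on the wire

in_order = []
for seq, payload in arrivals:
    in_order.extend(reorder.receive(seq, payload))

print(in_order)
```

Packets that arrive early wait in the buffer until the gap before them is filled, so the application sees the data in the order it was sent even if the network delivered it over different paths.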

NVIDIA recently unveiled the world’s first SuperNIC tailored for AI computing, based on the BlueField-3 networking platform. It’s a part of the NVIDIA Spectrum-X platform, where it integrates seamlessly with the Spectrum-4 Ethernet switch system.

Together, the NVIDIA BlueField-3 SuperNIC and Spectrum-4 switch system form the foundation of an accelerated computing fabric specifically designed to optimize AI workloads. Spectrum-X consistently delivers high network efficiency levels, outperforming traditional Ethernet environments.

“In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery,” said Yael Shenhav, vice president of DPU and NIC products at NVIDIA. “SuperNICs ensure that your AI workloads are executed with efficiency and speed, making them foundational components for enabling the future of AI computing.”

The Evolving Landscape of AI and Networking

The AI field is undergoing a seismic shift, thanks to the advent of generative AI and large language models. These powerful technologies have unlocked new possibilities, enabling computers to handle new tasks.

AI success relies heavily on GPU-accelerated computing to process mountains of data, train large AI models, and enable real-time inference. This new compute power has opened new possibilities, but it has also challenged Ethernet cloud networks.

Traditional Ethernet, the technology that underpins internet infrastructure, was conceived to offer broad compatibility and connect loosely coupled applications. It wasn’t designed to handle the demanding computational needs of modern AI workloads, which involve tightly coupled parallel processing, rapid data transfers and unique communication patterns — all of which demand optimized network connectivity.

Foundational network interface cards (NICs) were designed for general-purpose computing, universal data transmission and interoperability. They were never designed to cope with the unique challenges posed by the computational intensity of AI workloads.

Standard NICs lack the requisite features and capabilities for efficient data transfer, low latency and the deterministic performance crucial for AI tasks. SuperNICs, on the other hand, are purpose-built for modern AI workloads.

SuperNIC Advantages in AI Computing Environments

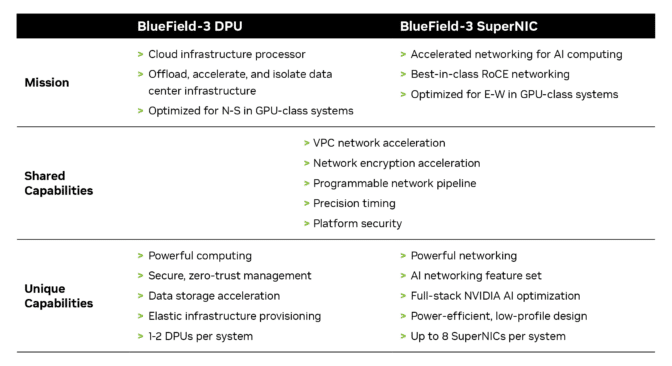

Data processing units (DPUs) deliver a wealth of advanced features, offering high throughput, low-latency network connectivity and more. Since their introduction in 2020, DPUs have gained popularity in the realm of cloud computing, primarily due to their capacity to offload, accelerate and isolate data center infrastructure processing.

Although DPUs and SuperNICs share a range of features and capabilities, SuperNICs are uniquely optimized for accelerating networks for AI. The chart below shows how they compare:

Distributed AI training and inference communication flows depend heavily on network bandwidth availability for success. SuperNICs, distinguished by their sleek design, scale more effectively than DPUs, delivering an impressive 400Gb/s of network bandwidth per GPU.

The 1:1 ratio between GPUs and SuperNICs within a system can significantly enhance AI workload efficiency, leading to greater productivity and superior outcomes for enterprises.

The sole purpose of SuperNICs is to accelerate networking for AI cloud computing. Consequently, they achieve this goal using less computing power than a DPU, which requires substantial computational resources to offload applications from a host CPU.

The reduced computing requirements also translate to lower power consumption, which is especially crucial in systems containing up to eight SuperNICs.
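To put the bandwidth figures in this section in perspective, the short calculation below works out the aggregate bandwidth of a system with eight SuperNICs and the ideal time to move a payload over one 400Gb/s link. The 10-gigabyte payload is a hypothetical value chosen for illustration, and the estimate ignores protocol overhead, congestion and latency, so real transfers would take longer.

```python
# Back-of-the-envelope bandwidth arithmetic using figures from the text.
# The payload size is a hypothetical value; overhead is ignored.

LINK_GBPS = 400            # per-SuperNIC bandwidth, in gigabits per second
SUPERNICS_PER_SYSTEM = 8   # upper bound mentioned in the text, one per GPU
BITS_PER_BYTE = 8

aggregate_gbps = LINK_GBPS * SUPERNICS_PER_SYSTEM
per_gpu_gigabytes_per_s = LINK_GBPS / BITS_PER_BYTE


def transfer_seconds(payload_gigabytes, link_gbps=LINK_GBPS):
    """Ideal time to send a payload over one link, ignoring all overhead."""
    return payload_gigabytes * BITS_PER_BYTE / link_gbps


HYPOTHETICAL_PAYLOAD_GB = 10
ideal_seconds = transfer_seconds(HYPOTHETICAL_PAYLOAD_GB)

print(f"aggregate: {aggregate_gbps} Gb/s across {SUPERNICS_PER_SYSTEM} SuperNICs")
print(f"per GPU: {per_gpu_gigabytes_per_s} GB/s")
print(f"{HYPOTHETICAL_PAYLOAD_GB} GB over one link: {ideal_seconds} s (ideal)")
```

Note the unit difference: network speeds are quoted in gigabits per second, so a 400Gb/s link carries at most 50 gigabytes per second.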

Additional distinguishing features of the SuperNIC include its dedicated AI networking capabilities. When tightly integrated with an AI-optimized NVIDIA Spectrum-4 switch, it offers adaptive routing, out-of-order packet handling and optimized congestion control. These advanced features are instrumental in accelerating Ethernet AI cloud environments.

Revolutionizing AI Cloud Computing

The NVIDIA BlueField-3 SuperNIC offers several benefits that make it key for AI-ready infrastructure:

- Peak AI workload efficiency: The BlueField-3 SuperNIC is purpose-built for network-intensive, massively parallel computing, making it ideal for AI workloads. It ensures that AI tasks run efficiently — without bottlenecks.

- Consistent and predictable performance: In multi-tenant data centers where numerous tasks are processed simultaneously, the BlueField-3 SuperNIC ensures that each job and tenant’s performance is isolated, predictable and unaffected by other network activities.

- Secure multi-tenant cloud infrastructure: Security is a top priority, especially in data centers handling sensitive information. The BlueField-3 SuperNIC maintains high security levels, enabling multiple tenants to coexist while keeping data and processing isolated.

- Extensible network infrastructure: The BlueField-3 SuperNIC isn’t limited in scope — it’s highly flexible and adaptable to a myriad of other network infrastructure needs.

- Broad server manufacturer support: The BlueField-3 SuperNIC fits seamlessly into most enterprise-class servers without excessive power consumption in data centers.

Learn more about NVIDIA BlueField-3 SuperNICs, including how they integrate across NVIDIA’s data center platforms, in the whitepaper: Next-Generation Networking for the Next Wave of AI.

In the wake of ChatGPT, every company is trying to figure out its AI strategy, work that quickly raises the question: What about security?

Some may feel overwhelmed at the prospect of securing new technology. The good news is policies and practices in place today provide excellent starting points.

Indeed, the way forward lies in extending the existing foundations of enterprise and cloud security. It’s a journey that can be summarized in six steps:

- Expand analysis of the threats

- Broaden response mechanisms