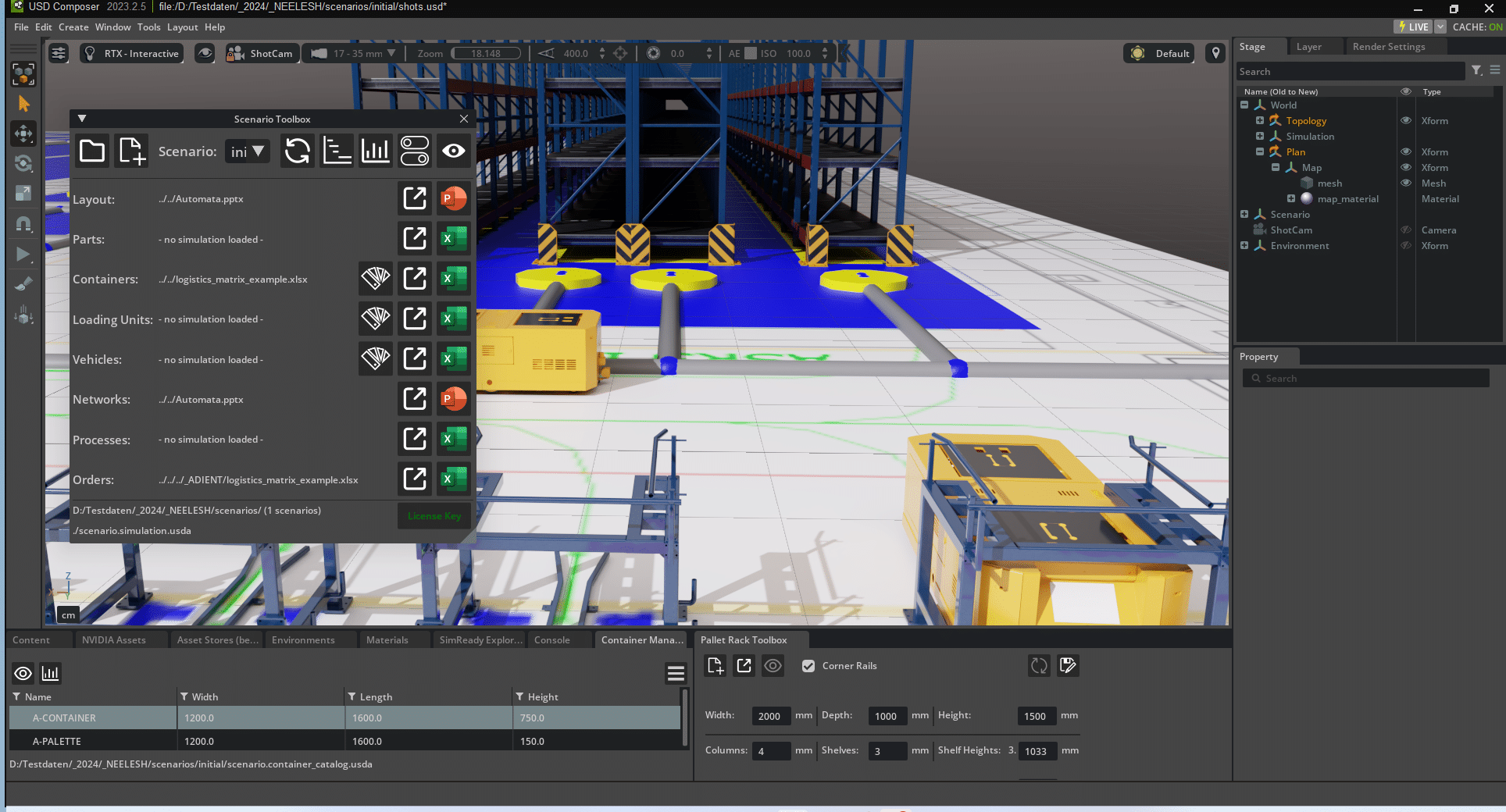

Editor’s note: This post is part of Into the Omniverse, a blog series focused on how developers, 3D artists and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

3D product configurators are changing the way industries like retail and automotive engage with customers by offering interactive, customizable 3D visualizations of products.

Using physically accurate product digital twins, even non-3D artists can streamline content creation and generate stunning marketing visuals.

With the new NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI, developers can start using the NVIDIA Omniverse platform and Universal Scene Description (OpenUSD) to easily build personalized, on-brand and product-accurate marketing content at scale.

By integrating generative AI into product configurators, developers can optimize operations and reduce production costs. With repetitive tasks automated, teams can focus on the creative aspects of their jobs.

Developing Controllable Generative AI for Content Production

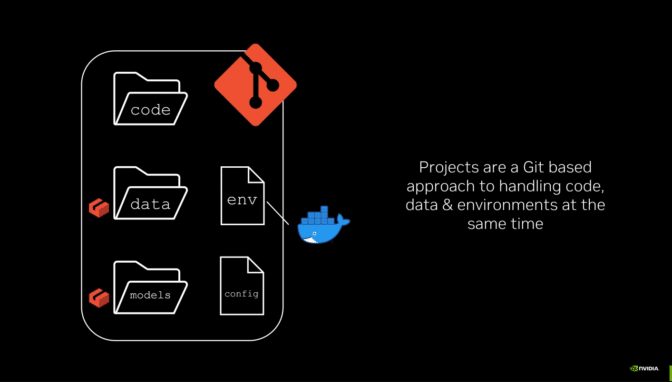

The new Omniverse Blueprint introduces a robust framework for integrating generative AI into 3D workflows to enable precise and controlled asset creation.

Example images created using the NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI.

Key highlights of the blueprint include:

- Model conditioning to ensure that the AI-generated visuals adhere to specific brand requirements like colors and logos.

- Multimodal approach that combines 3D and 2D techniques to offer developers complete control over final visual outputs while ensuring the product’s digital twin remains accurate.

- Key components such as an on-brand hero asset, a simple and untextured 3D scene, and a customizable application built with the Omniverse Kit App Template.

- OpenUSD integration to enhance development of 3D visuals with precise visual generative AI.

- Integration of NVIDIA NIM microservices, such as Edify 360 NIM, Edify 3D NIM, USD Code NIM and USD Search NIM, to make the blueprint extensible and customizable. The microservices are available to preview on build.nvidia.com.

How Developers Are Building AI-Enabled Content Pipelines

Katana Studio developed a content creation tool with OpenUSD called COATcreate that empowers marketing teams to rapidly produce 3D content for automotive advertising. By using 3D data prepared by creative experts and vetted by product specialists in OpenUSD, even users with limited artistic experience can quickly create customized, high-fidelity, on-brand content for any region or use case without adding to production costs.

Global marketing leader WPP has built a generative AI content engine for brand advertising with OpenUSD. The Omniverse Blueprint for precise visual generative AI helped facilitate the integration of controllable generative AI in its content creation tools. Leading global brands like The Coca-Cola Company are already beginning to adopt tools from WPP to accelerate iteration on their creative campaigns at scale.

Watch the replay of a recent livestream with WPP for more on its generative AI- and OpenUSD-enabled workflow.

The NVIDIA creative team developed a reference workflow called CineBuilder on Omniverse that allows companies to use text prompts to generate ads personalized to consumers based on region, weather, time of day, lifestyle and aesthetic preferences.

Developers at independent software vendors and production services agencies are building content creation solutions infused with controllable generative AI and built on OpenUSD. Accenture Song, Collective World, Grip, Monks and WPP are among those adopting Omniverse Blueprints to accelerate development.

Read the tech blog on developing product configurators with OpenUSD and get started developing solutions using the DENZA N7 3D configurator and CineBuilder reference workflow.

Get Plugged Into the World of OpenUSD

Various resources are available to help developers get started building AI-enabled product configuration solutions:

- Omniverse Blueprint: 3D Conditioning for Precise Visual Generative AI

- Reference Architecture: 3D Conditioning for Precise Visual Generative AI

- Reference Architecture: Generative AI Workflow for Content Creation

- Reference Architecture: Product Configurator

- End-to-End Configurator Example Guide

- DLI Course: Building a 3D Product Configurator With OpenUSD

- Livestream: OpenUSD for Marketing and Advertising

For more on optimizing OpenUSD workflows, explore the new self-paced Learn OpenUSD training curriculum that includes free Deep Learning Institute courses for 3D practitioners and developers. For more resources on OpenUSD, attend our instructor-led Learn OpenUSD courses at SIGGRAPH Asia on December 3, explore the Alliance for OpenUSD forum and visit the AOUSD website.

Don’t miss the CES keynote delivered by NVIDIA founder and CEO Jensen Huang live in Las Vegas on Monday, Jan. 6, at 6:30 p.m. PT for more on the future of AI and graphics.

Stay up to date by subscribing to NVIDIA news, joining the community and following NVIDIA Omniverse on Instagram, LinkedIn, Medium and X.

The NVIDIA app, officially releasing today, is a companion platform for content creators, GeForce gamers and AI enthusiasts using GeForce RTX GPUs.

Featuring a GPU control center, the NVIDIA app allows users to access all their GPU settings in one place. From the app, users can do everything from updating to the latest drivers and configuring NVIDIA G-SYNC monitor settings, to tapping AI video enhancements through RTX Video and discovering exclusive AI-powered NVIDIA apps.

In addition, NVIDIA RTX Remix has a new update that improves performance and streamlines workflows.

For a deeper dive on gaming-exclusive benefits, check out the GeForce article.

The GPU’s PC Companion

The NVIDIA app turbocharges GeForce RTX GPUs with a bevy of applications, features and tools.

Keep NVIDIA Studio Drivers up to date — The NVIDIA app automatically notifies users when the latest Studio Driver is available. These graphics drivers, fine-tuned in collaboration with developers, enhance performance in top creative applications and are tested extensively to deliver maximum stability. They’re released once a month.

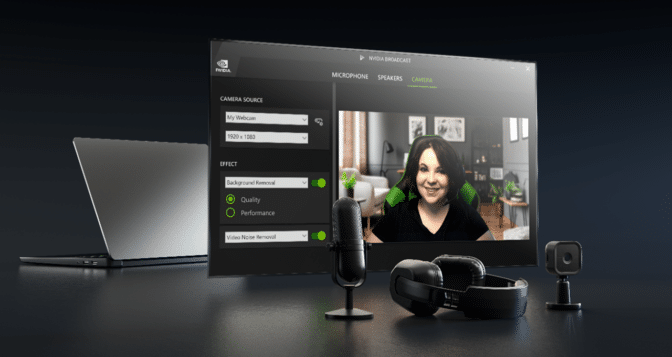

Discover AI creator apps — Millions have used the NVIDIA Broadcast app to turn offices and dorm rooms into home studios using AI-powered features that improve audio and video quality — without the need for expensive, specialized equipment. It’s user-friendly, works in virtually any app and includes AI features like Noise and Acoustic Echo Removal, Virtual Backgrounds, Eye Contact, Auto Frame, Vignettes and Video Noise Removal.

NVIDIA RTX Remix is a modding platform built on NVIDIA Omniverse that allows users to capture game assets, automatically enhance materials with generative AI tools and create stunning RTX remasters with full ray tracing, including DLSS 3.5 support featuring Ray Reconstruction.

NVIDIA Canvas uses AI to turn simple brushstrokes into realistic landscape images. Artists can create backgrounds quickly or speed up concept exploration, enabling them to visualize more ideas.

Enhance video streams with AI — The NVIDIA app includes a System tab as a one-stop destination for display, video and GPU options. It also includes an AI feature called RTX Video that enhances all videos streamed on browsers.

RTX Video Super Resolution uses AI to enhance video streaming on GeForce RTX GPUs by removing compression artifacts and sharpening edges when upscaling.

RTX Video HDR converts any standard dynamic range video into vibrant high dynamic range (HDR) when played in Google Chrome, Microsoft Edge, Mozilla Firefox or the VLC media player. HDR enables more vivid, dynamic colors to enhance gaming and content creation. A compatible HDR10 monitor is required.

Give game streams or video on demand a unique look with AI filters — Content creators looking to elevate their streamed or recorded gaming sessions can access the NVIDIA app’s redesigned Overlay feature with AI-powered game filters.

Freestyle RTX filters allow livestreamers and content creators to apply fun post-processing filters, changing the look and mood of content with tweaks to color and saturation.

Joining these Freestyle RTX game filters is RTX Dynamic Vibrance, which enhances visual clarity on a per-app basis. Colors pop more on screen, and color crushing is minimized to preserve image quality and immersion. The filter is accelerated by Tensor Cores on GeForce RTX GPUs, making it easier for viewers to enjoy all the action.

Freestyle RTX filters empower gamers to personalize the visual aesthetics of their favorite games through real-time post-processing filters. This feature boasts compatibility with a vast library of more than 1,200 games.

Download the NVIDIA app today.

RTX Remix 0.6 Release

The new RTX Remix update offers modders significantly improved mod performance, as well as quality of life improvements that help streamline the mod-making process.

RTX Remix now supports the ability to test experimental features under active development. It includes a new Stage Manager that makes it easier to see and change every mesh, texture, light or element in scenes in real time.

To learn more about the RTX Remix 0.6 release, check out the release notes.

With RTX Remix in the NVIDIA app launcher, modders have direct access to Remix’s powerful features. Through the NVIDIA app, RTX Remix modders can benefit from faster start-up times, lower CPU usage and direct control over updates with an optimized user interface.

To the 3D Victor Go the Spoils

NVIDIA Studio in June kicked off a 3D character contest for artists in collaboration with Reallusion, a company that develops 2D and 3D character creation and animation software. Today, we’re celebrating the winners from that contest.

In the category of Best Realistic Character Animation, Robert Lundqvist won for the piece Lisa and Fia.

In the category of Best Stylized Character Animation, Loic Bramoulle won for the piece HellGal.

Both winners will receive an NVIDIA Studio-validated laptop to help further their creative efforts.

View over 250 imaginative and impressive entries here.

Follow NVIDIA Studio on Instagram, X and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

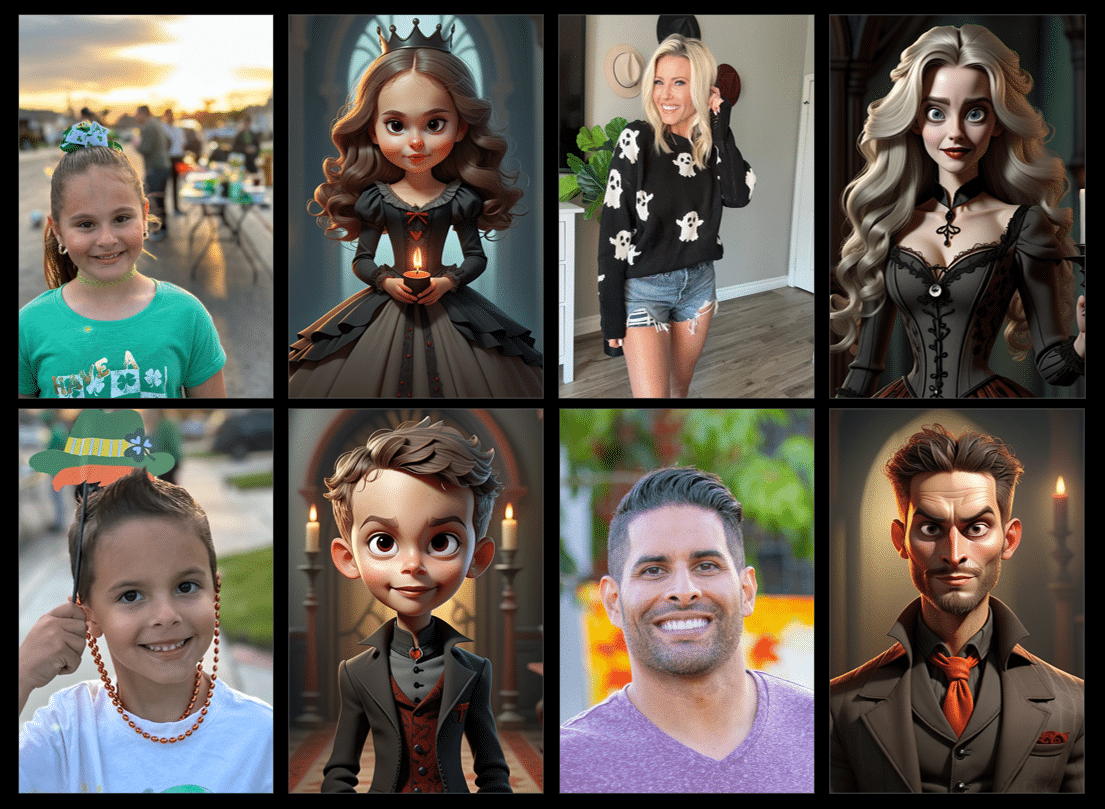

Among the artists using AI to enhance and accelerate their creative endeavors is Sabour Amirazodi, a creator and tech marketing and workflow specialist at NVIDIA.

Drawing on more than 20 years of multi-platform experience in location-based entertainment and media production, he decorates his home every year with an incredible Halloween installation, dubbed the Haunted Sanctuary.

The project is a massive undertaking requiring projection mapping, the creation and assembly of 3D scenes, compositing and editing in Adobe After Effects and Premiere Pro, and more. The creation process was accelerated using the NVIDIA Studio content creation platform and Amirazodi’s NVIDIA RTX 6000 GPU.

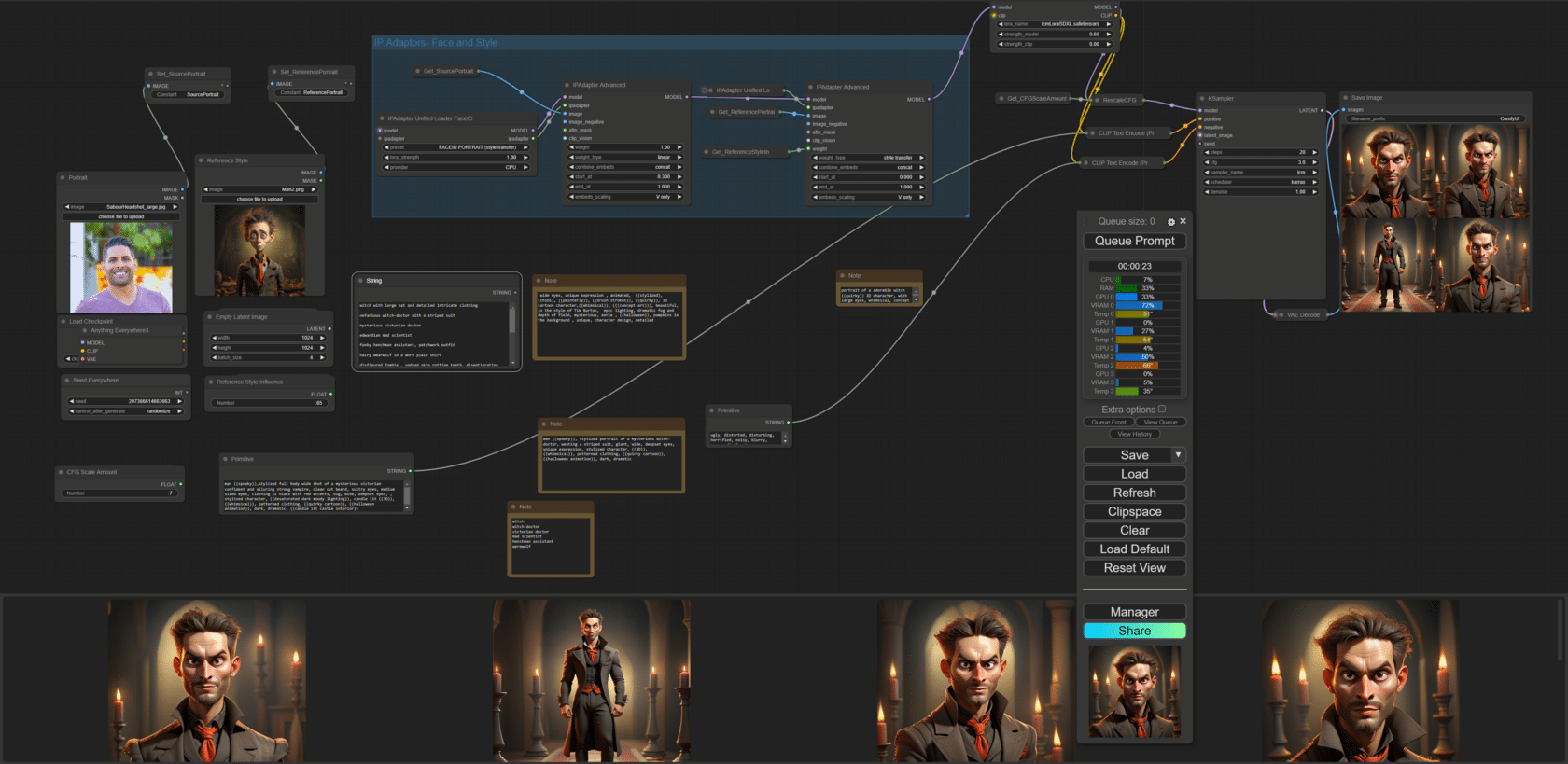

This year, Amirazodi deployed new AI workflows in ComfyUI, Adobe Firefly and Photoshop to create digital portraits — inspired by his family — as part of the installation.

Give ’em Pumpkin to Talk About

ComfyUI is a node-based interface that generates images and videos from text. It’s designed to be highly customizable, allowing users to design workflows, adjust settings and see results immediately. It can combine various AI models and third-party extensions to achieve a higher degree of control.

For example, a text-to-image workflow requires entering a prompt, which describes the details and characteristics of the desired image, and a negative prompt, which helps omit any undesired visual effects.
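As a rough illustration of how a prompt and a negative prompt sit side by side in a node graph, the sketch below builds a minimal text-to-image graph as a plain Python dictionary. It is not Amirazodi's workflow. The node class names and input keys follow ComfyUI's stock nodes as commonly exported in its API-format JSON, which should be treated as an assumption to check against the installed version. The checkpoint filename, prompt text and image size are hypothetical placeholders.

```python
import json


def build_prompt_graph(positive: str, negative: str,
                       checkpoint: str = "example_model.safetensors") -> dict:
    """Return a minimal text-to-image node graph as a plain dict.

    Each key is a node id. A link such as ["1", 1] means
    "output slot 1 of node 1". Node names and input keys are assumed
    to match ComfyUI's stock nodes; the checkpoint name is a placeholder.
    """
    return {
        # Loads the model; assumed outputs: 0 = model, 1 = text encoder (CLIP).
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": checkpoint}},
        # The prompt: what the image should contain.
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["1", 1]}},
        # The negative prompt: what the sampler should steer away from.
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["1", 1]}},
        # Blank latent canvas; the size here is an arbitrary example.
        "4": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 768, "height": 1024, "batch_size": 1}},
        # The sampler receives both encoded prompts on separate inputs.
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0],
                         "positive": ["2", 0],
                         "negative": ["3", 0],
                         "latent_image": ["4", 0],
                         "seed": 0, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
    }


graph = build_prompt_graph(
    positive="oil portrait of a family in Victorian dress, candlelight",
    negative="blurry, watermark, extra fingers",
)
print(json.dumps(graph, indent=2))
```

The point of the structure is that the two prompts are encoded by separate nodes and reach the sampler through distinct inputs, which is why each can be adjusted independently.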

Since Amirazodi wanted his digital creations to closely resemble his family, he started by applying Run IP Adapters, which use reference images to inform generated content.

From there, he tinkered with the settings to achieve the desired look and feel of each character.

ComfyUI has NVIDIA TensorRT acceleration, so RTX users can generate images from prompts up to 60% faster.

Get started with ComfyUI.

In Darkness, Let There Be Light

Adobe Firefly is a family of creative generative AI models that offer new ways to ideate and create while assisting creative workflows. They’re designed to be safe for commercial use and were trained, using NVIDIA GPUs, on licensed content like Adobe Stock Images and public domain content where copyright has expired.

To make the digital portraits fit as desired, Amirazodi needed to expand the background.

Adobe Photoshop features a Generative Fill tool called Generative Expand that allows artists to extend the border of their image with the Crop tool and automatically fill the space with content that matches the existing image.

Photoshop also features Neural Filters that allow artists to explore creative ideas and make complex adjustments to images in just seconds, saving them hours of tedious, manual work.

With Smart Portrait Neural Filters, artists can easily experiment with facial characteristics such as gaze direction and lighting angles simply by dragging a slider. Amirazodi used the feature to apply the final touches to his portraits, adjusting colors, textures, depth blur and facial expressions.

NVIDIA RTX GPUs help power AI-based tasks, accelerating the Neural Filters in Photoshop.

Learn more about the latest Adobe features and tools in this blog.

AI is already helping accelerate and automate tasks across content creation, gaming and everyday life — and the speedups are only multiplied with an NVIDIA RTX- or GeForce RTX GPU-equipped system.

Check out and share Halloween- and fall-themed art as a part of the NVIDIA Studio #HarvestofCreativity challenge on Instagram, X, Facebook and Threads for a chance to be featured on the social media channels.

At the Adobe MAX creativity conference this week, Adobe announced updates to its Adobe Creative Cloud products, including Premiere Pro and After Effects, as well as to Substance 3D products and the Adobe video ecosystem.

These apps are accelerated by NVIDIA RTX and GeForce RTX GPUs — in the cloud or running locally on RTX AI PCs and workstations.

One of the most highly anticipated features is Generative Extend in Premiere Pro (beta), which uses generative AI to seamlessly add frames to the beginning or end of a clip. Powered by the Firefly Video Model, it’s designed to be commercially safe and only trained on content Adobe has permission to use, so artists can create with confidence.

Adobe Substance 3D Collection apps offer numerous RTX-accelerated features for 3D content creation, including ray tracing, AI delighting and upscaling, and image-to-material workflows powered by Adobe Firefly.

Substance 3D Viewer, entering open beta at Adobe MAX, is designed to unlock 3D in 2D design workflows by allowing 3D files to be opened, viewed and used across design teams. This will improve interoperability with other RTX-accelerated Adobe apps like Photoshop.

Adobe Firefly integrations have also been added to Substance 3D Collection apps, including Text to Texture, Text to Pattern and Image to Texture tools in Substance 3D Sampler, as well as Generative Background in Substance 3D Stager, to further enhance the 3D content creation with generative AI.

The October NVIDIA Studio Driver, designed to optimize creative apps, will be available for download tomorrow. For automatic Studio Driver notifications, as well as easy access to apps like NVIDIA Broadcast, download the NVIDIA app beta.

Video Editing Evolved

Adobe Premiere Pro has transformed video editing workflows over the last four years with features like Auto Reframe and Scene Edit Detection.

The recently launched GPU-accelerated Enhance Speech, AI Audio Category Tagging and Filler Word Detection features allow editors to use AI to intelligently cut and modify video scenes.

The Adobe Firefly Video Model — now available in limited beta at Firefly.Adobe.com — brings generative AI to video, marking the next advancement in video editing. It allows users to create and edit video clips using simple text prompts or images, helping fill in content gaps without having to reshoot, extend or reframe takes. It can also be used to create video clip prototypes as inspiration for future shots.

Topaz Labs has introduced a new plug-in for Adobe After Effects that uses AI models to improve video quality. It gives users access to enhancement and motion deblur models for sharper, clearer video. Accelerated on GeForce RTX GPUs, these models run nearly 2.5x faster on the GeForce RTX 4090 Laptop GPU compared with the MacBook Pro M3 Max.

Stay tuned for NVIDIA TensorRT enhancements and more Topaz Video AI effects coming to the After Effects plug-in soon.

3D Super Powered

The Substance 3D Collection is revolutionizing the ideation stage of 3D creation with powerful generative AI features in Substance 3D Sampler and Stager.

Sampler’s Text to Texture, Text to Pattern and Image to Texture tools, powered by Adobe Firefly, allow artists to rapidly generate reference images from simple prompts that can be used to create parametric materials.

Stager’s Generative Background feature helps designers explore backgrounds for staging 3D models, using text descriptions to generate images. Stager can then match lighting and camera perspective, allowing designers to explore more variations faster when iterating and mocking up concepts.

Substance 3D Viewer also offers a connected workflow with Photoshop, where 3D models can be placed into Photoshop projects and edits made to the model in Viewer will be automatically sent back to the Photoshop project. GeForce RTX GPU hardware acceleration and ray tracing provide smooth movement in the viewport, producing up to 80% higher frames per second on the GeForce RTX 4060 Laptop GPU compared to the MacBook M3 Pro.

There are also new Firefly-powered features in Substance 3D Viewer, like Text to 3D and 3D Model to Image, that combine text prompts and 3D objects to give artists more control when generating new scenes and variations.

The latest After Effects release features an expanded range of 3D tools that enable creators to embed 3D animations, cast ultra-realistic shadows on 2D objects and isolate effects in 3D space.

After Effects now also has an RTX GPU-powered Advanced 3D Renderer that accelerates the processing-intensive and time-consuming task of applying HDRI lighting — lowering creative barriers to entry while improving content realism. Rendering can be done 30% faster on a GeForce RTX 4090 GPU over the previous generation.

Pairing Substance 3D with After Effects’ native and fast 3D integration allows artists to significantly boost the visual quality of 3D in After Effects with precision texturing and access to more than 20,000 parametric 3D materials, IBL environment lights and 3D models.

At TwitchCon, a global convention for the Twitch livestreaming platform, livestreamers and content creators this week can experience the latest technologies for accelerating creative workflows and improving video quality.

That includes the closed beta release of Twitch Enhanced Broadcasting support for HEVC when using the NVIDIA encoder.

Content creators can also use the NVIDIA Broadcast app, eighth-generation NVIDIA NVENC and RTX-powered optimizations in streaming and video editing apps to enhance their productions.

Plus, the September NVIDIA Studio Driver, designed to optimize creative apps, is now ready for download. Studio Drivers undergo extensive testing to ensure seamless compatibility while enhancing features, automating processes and accelerating workflows.

Twitch Enhanced Broadcasting With HEVC

The tradeoff between higher-resolution video quality and reliable streaming is a common issue livestreamers struggle with.

Higher-quality video provides more enjoyable viewing experiences but can cause streams to buffer for viewers with lower bandwidth or older devices. Streaming lower-bitrate video allows more people to watch content seamlessly but introduces artifacts that can interfere with viewing quality.

To address this issue, NVIDIA and Twitch collaborated to develop Twitch Enhanced Broadcasting. The feature adds the capability to send multiple streams — different versions of encoded video with different resolutions or bitrates — directly from NVIDIA GeForce RTX-equipped PCs or NVIDIA RTX workstations to deliver the highest-quality video a viewer’s internet connection can handle.

Twitch supports HEVC (H.265) in the Enhanced Broadcasting closed beta. With the NVIDIA encoder, Twitch streamers get 25% improved efficiency and quality over H.264.

This means that video will look as if it were being streamed with 25% more bitrate — in higher quality and with reduced artifacts or encoding errors. The feature is ideal for streaming fast-paced gameplay, enabling cleaner, sharper video with minimal lag.
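The arithmetic behind the 25% figure, and the idea of serving each viewer the best stream version their connection can handle, can be sketched as follows. This is a minimal illustration, not Twitch's or NVIDIA's actual logic. The rendition names, the bitrates in the ladder and the 80% bandwidth headroom are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Figure quoted in the post: HEVC looks like H.264 at 25% more bitrate.
HEVC_GAIN = 0.25


@dataclass(frozen=True)
class Rendition:
    name: str          # hypothetical label, e.g. "1080p60"
    bitrate_kbps: int  # encoded bitrate of this version of the stream


def h264_equivalent_kbps(hevc_kbps: float, gain: float = HEVC_GAIN) -> float:
    """H.264 bitrate that would look roughly like the given HEVC bitrate."""
    return hevc_kbps * (1 + gain)


def pick_rendition(ladder: list[Rendition], bandwidth_kbps: float,
                   headroom: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits the viewer's connection.

    Only a share of the measured bandwidth (headroom, an assumed value)
    is budgeted, so that small dips do not cause buffering.
    """
    budget = bandwidth_kbps * headroom
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    # Nothing fits: fall back to the lightest stream rather than failing.
    return min(ladder, key=lambda r: r.bitrate_kbps)


# Hypothetical ladder; real resolutions and bitrates are set by the platform.
LADDER = [
    Rendition("1080p60", 6000),
    Rendition("720p60", 4000),
    Rendition("480p30", 1500),
]

if __name__ == "__main__":
    print(h264_equivalent_kbps(6000))
    for bandwidth in (10000, 6000, 1000):
        print(bandwidth, pick_rendition(LADDER, bandwidth).name)
```

Under these assumptions, a 6,000 kbps HEVC stream is treated as comparable to a 7,500 kbps H.264 stream, and a viewer with 6,000 kbps of bandwidth is given the 720p60 version because the 1080p60 version exceeds the 80% budget.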

Because all stream versions are generated with a dedicated hardware encoder on GeForce RTX GPUs, the rest of the system’s GPU and CPU are free to focus on running games more smoothly to maximize performance.

Learn how to get started on twitch.com.

AI-Enhanced Microphones and Webcams

Streaming is easier than ever with NVIDIA technologies.

For starters, PC performance and video quality are incredibly high thanks to NVIDIA’s dedicated encoder. NVIDIA GPUs also include Tensor Cores that efficiently run AI.

Livestreamers can use AI to enhance their hardware peripherals and devices, which is especially helpful for those who haven’t had the time or resources to assemble extensive audio and video setups.

NVIDIA Broadcast transforms any home office or dorm room into a home studio — without the need to purchase specialized equipment. Its AI-powered features include Noise and Echo Removal for microphones, and Virtual Background, Auto Frame, Video Noise Removal and Eye Contact for cameras.

Livestreamers can download the Broadcast app or access its effects across popular creative apps, including Corsair iCUE, Elgato Camera Hub, OBS, Streamlabs, VTube Studio and Wave Link.

Spotlight the Highlights

GeForce RTX GPUs make it lightning-fast to edit and enhance video footage on the most popular video editing apps, from Adobe Premiere Pro to CapCut Pro.

Streamers can use AI-powered, RTX-accelerated features like Enhance Speech to remove noise and improve the quality of dialogue clips; Auto Reframe to automatically size social media videos; and Scene Edit Detection to break up long videos, like B-roll stringouts, into individual clips.

NVIDIA encoders help turbocharge the export process. For those looking for extreme performance, the GeForce RTX 4070 Ti GPU and up come equipped with dual encoders that can be used in parallel to halve export times on apps like CapCut, the most widely used video editing app on TikTok.

Clearer, Sharper Viewing Experiences With RTX Video

NVIDIA RTX Video, available exclusively for NVIDIA RTX and GeForce RTX GPU owners, can turn any online and native video into pristine 4K high dynamic range (HDR) content with two technologies: Video Super Resolution and Video HDR.

RTX Video Super Resolution de-artifacts and upscales streamed video to remove errors that occur during encoding or transport, then runs an AI super-resolution effect. The result is cleaner, sharper video that’s ideal for streaming on platforms like YouTube and Twitch. RTX Video is available in popular web browsers including Opera, Google Chrome, Mozilla Firefox and Microsoft Edge.

Many users have HDR displays, but there isn’t much HDR content online. RTX Video HDR addresses this by turning any standard dynamic range (SDR) video into HDR10 quality that delivers a wider range of brights and darks and makes visuals more vibrant and colorful. This feature is especially helpful when watching dark-lit scenes in video games.

RTX Video HDR requires an RTX GPU connected to an HDR10-compatible monitor or TV. For more information, see the RTX Video FAQ.

Check out TwitchCon — taking place in San Diego and online from Sept. 20-22 — for the latest streaming updates.

Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for RTX PC users.

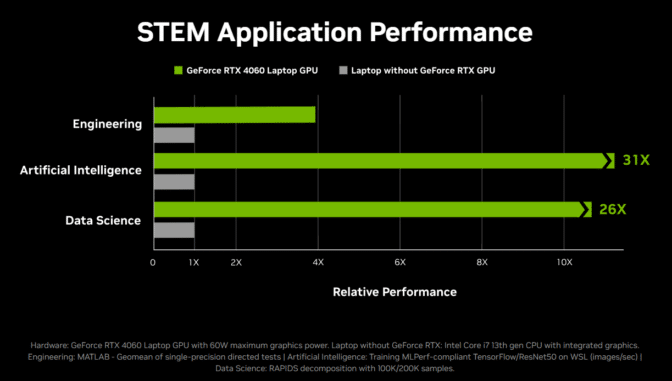

AI powered by NVIDIA GPUs is accelerating nearly every industry, creating high demand for graduates, especially from STEM fields, who are proficient in using the technology. Millions of students worldwide are participating in university STEM programs to learn skills that will set them up for career success.

To prepare students for the future job market, NVIDIA has worked with top universities to develop a GPU-accelerated AI curriculum that’s now taught in more than 5,000 schools globally. Students can get a jumpstart outside of class with NVIDIA’s AI Learning Essentials, a set of resources that equips individuals with the necessary knowledge, skills and certifications for the rapidly evolving AI workforce.

NVIDIA GPUs — whether running in university data centers, GeForce RTX laptops or NVIDIA RTX workstations — are accelerating studies, helping enhance the learning experience and enabling students to gain hands-on experience with hardware used widely in real-world applications.

Supercharged AI Studies

NVIDIA provides several tools to help students accelerate their studies.

The RTX AI Toolkit is a powerful resource for students looking to develop and customize AI models for projects in computer science, data science, and other STEM fields. It allows students to train and fine-tune the latest generative AI models, including Gemma, Llama 3 and Phi 3, up to 30x faster — enabling them to iterate and innovate more efficiently, advancing their studies and research projects.

Students studying data science and economics can use NVIDIA RAPIDS AI and data science software libraries to run traditional machine learning models up to 25x faster than conventional methods, helping them handle large datasets more efficiently, perform complex analyses in record time and gain deeper insights from data.

AI-deal for Robotics, Architecture and Design

Students studying robotics can tap the NVIDIA Isaac platform for developing, testing and deploying AI-powered robotics applications. Powered by NVIDIA GPUs, the platform consists of NVIDIA-accelerated libraries, application frameworks and AI models that supercharge the development of AI-powered robots like autonomous mobile robots, arms and manipulators, and humanoids.

While GPUs have long been used for 3D design, modeling and simulation, their role has significantly expanded with the advancement of AI. GPUs are today used to run AI models that dramatically accelerate rendering processes.

Some industry-standard design tools powered by NVIDIA GPUs and AI include:

- SOLIDWORKS Visualize: This 3D computer-aided design rendering software uses NVIDIA OptiX AI-powered denoising to produce high-quality ray-traced visuals, streamlining the design process by providing faster, more accurate visual feedback.

- Blender: This popular 3D creation suite uses NVIDIA OptiX AI-powered denoising to deliver stunning ray-traced visuals, significantly accelerating content creation workflows.

- D5 Render: Commonly used by architects, interior designers and engineers, D5 Render incorporates NVIDIA DLSS technology for real-time viewport rendering, enabling smoother, more detailed visualizations without sacrificing performance. Powered by fourth-generation Tensor Cores and the NVIDIA Optical Flow Accelerator on GeForce RTX 40 Series GPUs and NVIDIA RTX Ada Generation GPUs, DLSS uses AI to create additional frames and improve image quality.

- Enscape: Enscape makes it possible to ray trace more geometry at a higher resolution, at exactly the same frame rate. It uses DLSS to enhance real-time rendering capabilities, providing architects and designers with seamless, high-fidelity visual previews of their projects.

Beyond STEM

Students, hobbyists and aspiring artists use the NVIDIA Studio platform to supercharge their creative processes with RTX and AI. RTX GPUs power creative apps such as Adobe Creative Cloud, Autodesk, Unity and more, accelerating a variety of processes such as exporting videos and rendering art.

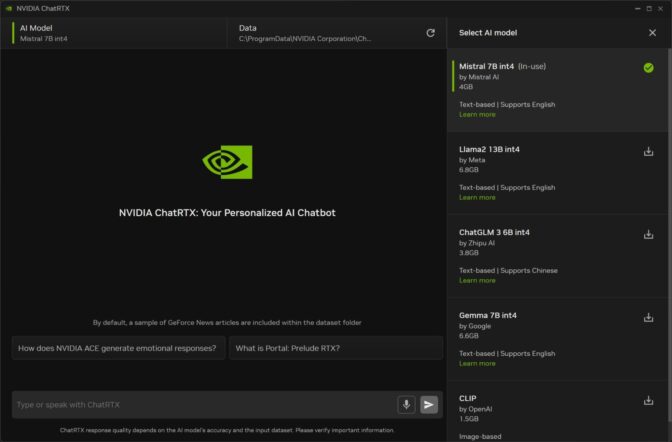

ChatRTX is a demo app that lets students create a personalized GPT large language model connected to their own content and study materials, including text, images or other data. Powered by advanced AI, ChatRTX functions like a personalized chatbot that can quickly provide students relevant answers to questions based on their connected content. The app runs locally on a Windows RTX PC or workstation, meaning students can get fast, secure results personalized to their needs.

Schools are increasingly adopting remote learning as a teaching modality. NVIDIA Broadcast — a free application that delivers professional-level audio and video with AI-powered features on RTX PCs and workstations — integrates seamlessly with remote learning applications including BlueJeans, Discord, Google Meet, Microsoft Teams, Webex and Zoom. It uses AI to enhance remote learning experiences by removing background noise, improving image quality in low-light scenarios, and enabling background blur and background replacement.

From Data Centers to School Laptops

NVIDIA RTX-powered mobile workstations and GeForce RTX and Studio RTX 40 Series laptops offer supercharged development, learning, gaming and creating experiences with AI-enabled tools and apps. They also include exclusive access to the NVIDIA Studio platform of creative tools and technologies, and Max-Q technologies that optimize battery life and acoustics — giving students an ideal platform for all aspects of campus life.

Say goodbye to late nights in the computer lab — GeForce RTX laptops and NVIDIA RTX workstations share the same architecture as the NVIDIA GPUs powering many university labs and data centers. That means students can study, create and play — all on the same PC.

Learn more about GeForce RTX laptops and NVIDIA RTX workstations.

Editor’s note: This post is part of our In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep diving on new GeForce RTX GPU features, technologies and resources, and how they dramatically accelerate content creation.

Every month brings new creative app updates and optimizations powered by the NVIDIA Studio platform — supercharging creative processes with NVIDIA RTX and AI.

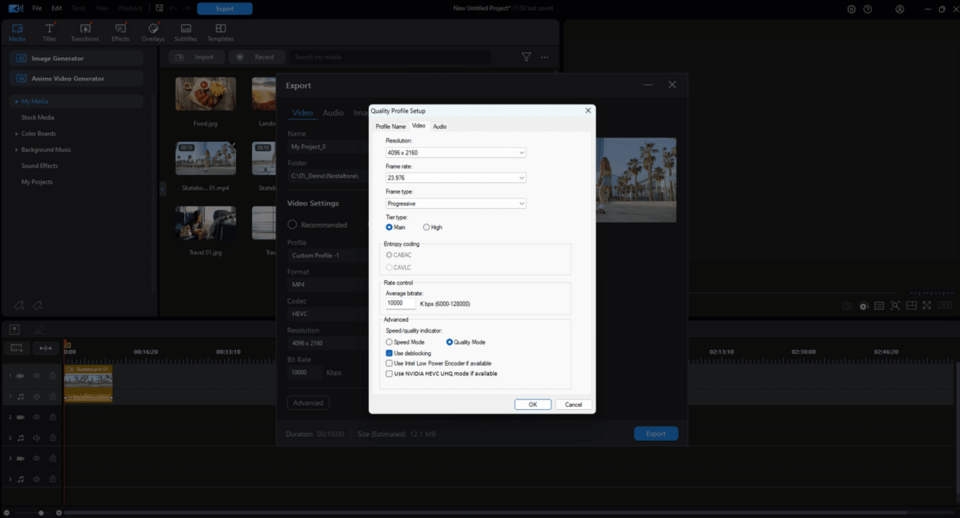

RTX-powered video editing app CyberLink PowerDirector now has a setting for high-efficiency video encoding (HEVC). 3D artists can access new features and faster workflows in Adobe Substance 3D Modeler and SideFX: Houdini. And content creators using Topaz Video AI Pro can now scale their photo and video touchups faster with NVIDIA TensorRT acceleration.

The August Studio Driver is ready to install via the NVIDIA app beta — the essential companion for creators and gamers — to keep GeForce RTX PCs up to date with the latest NVIDIA drivers and technology.

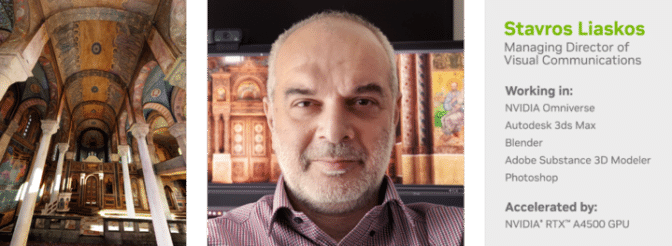

And this week’s featured In the NVIDIA Studio artist Stavros Liaskos is creating physically accurate 3D digital replicas of Greek Orthodox churches, holy temples, monasteries and other buildings using the NVIDIA Omniverse platform for building and connecting Universal Scene Description (OpenUSD) apps.

Discover the latest breakthroughs in graphics and generative AI by watching the replay of NVIDIA founder and CEO Jensen Huang’s fireside chats with Lauren Goode, senior writer at WIRED, and Meta founder and CEO Mark Zuckerberg at SIGGRAPH.

There’s a Creative App for That

The NVIDIA NVENC video encoder is built into every RTX graphics card, offloading the compute-intensive task of video encoding from the CPU to a dedicated part of the GPU.

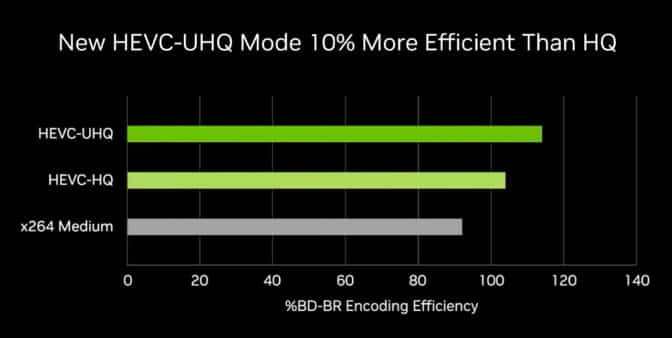

CyberLink PowerDirector, a popular video editing program that recently added support for RTX Video HDR, now has a setting for higher-quality HEVC encoding using the NVIDIA NVENC HEVC Ultra-High-Quality mode.

The new functionality reduces bit rates and improves encoding efficiency by 10%, significantly boosting video quality. Using the custom setting, content creators can offer audiences superior viewing experiences.

Alpha exporting allows users to add overlay effects to videos by exporting HEVC video with an alpha channel. This technique can be used to create transparent backgrounds and rapidly process animated overlays, making it ideal for creating social media content.

With an alpha channel, users can export HEVC videos up to 8x faster compared with run-length encoding supported by other processors, and with a 100x reduction in file size.

Adobe Substance 3D Modeler, a multisurface 3D sculpting tool for artists, visual effects specialists and designers, released Block to Stock, an AI-powered, geometry-based feature for accelerating the prototyping of complex shapes.

It allows rough 3D shapes to be quickly replaced with pre-existing, similarly shaped 3D models that have greater detail. The result is a highly detailed shape crafted in no time.

The recently released version 20.5 of SideFX: Houdini, a 3D procedural software for modeling, animation and lighting, introduced NVIDIA OptiX 8 and NVIDIA’s Shader Execution Reordering feature to its Karma XPU renderer — exclusively on NVIDIA RTX GPUs.

With these additions, computationally intensive tasks can now be executed up to 4x faster on RTX GPUs.

Topaz Video AI Pro, a photo and video enhancement software for noise reduction, sharpening and upscaling, added TensorRT acceleration for multi-GPU configurations, enabling parallelization across multiple GPUs for supercharged rendering speeds — up to 2x faster with two GPUs over a single GPU system, with further acceleration in systems with additional GPUs.

Virtual Cultural Sites to G(r)eek Out About

Anyone can now explore over 30 Greek cultural sites in virtual reality, thanks to the immersive work of Stavros Liaskos, managing director of visual communications company Reyelise.

“Many historical and religious sites are at risk due to environmental conditions, neglect and socio-political issues,” he said. “By creating detailed 3D replicas, we’re helping to ensure their architectural splendor is preserved digitally for future generations.”

Liaskos dedicated the project to his father, who passed away last year.

“He taught me the value of patience and instilled in me the belief that nothing is unattainable,” he said. “His wisdom and guidance continue to inspire me every day.”

Churches are architecturally complex structures. To create physically accurate 3D models of them, Liaskos used the advanced real-time rendering capabilities of Omniverse, connected with a slew of content-creation apps.

The OpenUSD framework enabled a seamless workflow across the various apps Liaskos used. For example, after using Trimble X7 for highly accurate 3D scanning of structures, Liaskos easily moved to Autodesk 3ds Max and Blender for modeling and animation.

Then, with ZBrush, he sculpted intricate architectural details on the models and refined textures with Adobe Photoshop and Substance 3D. It was all brought together in Omniverse for real-time lighting and rendering.

For post-production work, like adding visual effects and compiling rendered scenes, Liaskos used OpenUSD to transfer his projects to Adobe After Effects, where he finalized the video output. Nearly every element of his creative workflow was accelerated by his NVIDIA RTX A4500 GPU.

Liaskos also explored developing extended reality (XR) applications that allow users to navigate his 3D projects in real time in virtual reality (VR).

First, he used laser scanning and photogrammetry to capture the detailed geometries and textures of the churches.

Then, he tapped Autodesk 3ds Max and Maxon ZBrush for retopology, ensuring the models were optimized for real-time rendering without compromising detail.

After importing them into NVIDIA Omniverse with OpenUSD, Liaskos packaged the XR scenes so they could be streamed to VR headsets using either the NVIDIA Omniverse Create XR spatial computing app or Unity Engine, enabling immersive viewing experiences.

“This approach will even more strikingly showcase the architectural beauty and cultural significance of these sites,” Liaskos said. “The simulation must be as good as possible to recreate the overwhelming, impactful feeling of calm and safety that comes with visiting a deeply spiritual space.”

The project is co-funded by the European Union within the framework of the operational program Digital Transformation 2021-2027 for the Greek Holy Archbishopric of Athens.

Follow NVIDIA Studio on Instagram, X and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

Stay up to date on NVIDIA Omniverse with Instagram, Medium and X. For more, join the Omniverse community and check out the Omniverse forums, Discord server, Twitch and YouTube channels.

Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for RTX PC users.

NVIDIA is spotlighting the latest NVIDIA RTX-powered tools and apps at SIGGRAPH, an annual trade show at the intersection of graphics and AI.

These AI technologies provide advanced ray-tracing and rendering techniques, enabling highly realistic graphics and immersive experiences in gaming, virtual reality, animation and cinematic special effects. RTX AI PCs and workstations are helping drive the future of interactive digital media, content creation, productivity and development.

ACE’s AI Magic

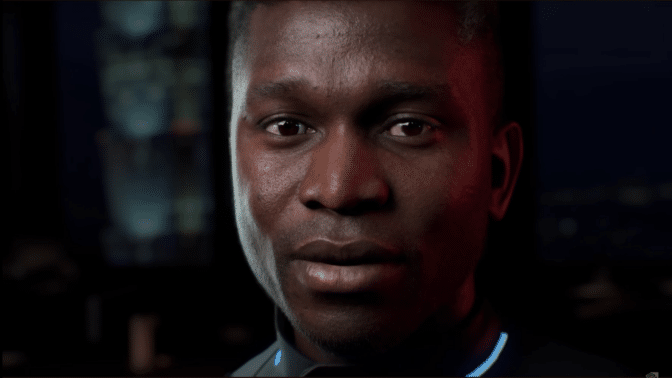

During a SIGGRAPH fireside chat, NVIDIA founder and CEO Jensen Huang introduced “James” — an interactive digital human built on NVIDIA NIM microservices — that showcases the potential of AI-driven customer interactions.

Using NVIDIA ACE technology and based on a customer-service workflow, James is a virtual assistant that can connect with people using emotions, humor and contextually accurate responses. Soon, users will be able to interact with James in real time at ai.nvidia.com.

NVIDIA also introduced the latest advancements in the NVIDIA Maxine AI platform for telepresence, as well as companies adopting NVIDIA ACE, a suite of technologies for bringing digital humans to life with generative AI. These technologies enable digital human development with AI models for speech and translation, vision, intelligence, realistic animation and behavior, and lifelike appearance.

Maxine features two AI technologies that enhance the digital human experience in telepresence scenarios: Maxine 3D and Audio2Face-2D.

Developers can harness Maxine and ACE technologies to drive more engaging and natural interactions for people using digital interfaces across customer service, gaming and other interactive experiences.

Tapping advanced AI, NVIDIA ACE technologies allow developers to design avatars that can respond to users in real time with lifelike animations, speech and emotions. RTX GPUs provide the necessary computational power and graphical fidelity to render ACE avatars with stunning detail and fluidity.

With ongoing advancements and increasing adoption, ACE is setting new benchmarks for building virtual worlds and sparking innovation across industries. Developers tapping into the power of ACE with RTX GPUs can build more immersive applications and advanced, AI-based, interactive digital media experiences.

RTX Updates Unleash AI-rtistry for Creators

NVIDIA GeForce RTX PCs and NVIDIA RTX workstations are getting an upgrade with GPU accelerations that provide users with enhanced AI content-creation experiences.

For video editors, RTX Video HDR is now available through Wondershare Filmora and DaVinci Resolve. With this technology, users can transform any content into high dynamic range video with richer colors and greater detail in light and dark scenes — making it ideal for gaming videos, travel vlogs or event filmmaking. Combining RTX Video HDR with RTX Video Super Resolution further improves visual quality by removing encoding artifacts and enhancing details.

RTX Video HDR requires an RTX GPU connected to an HDR10-compatible monitor or TV. Users with an RTX GPU-powered PC can send files to the Filmora desktop app and continue to edit with local RTX acceleration, doubling the speed of the export process with dual encoders on GeForce RTX 4070 Ti or above GPUs. Popular media player VLC in June added support for RTX Video Super Resolution and RTX Video HDR, adding AI-enhanced video playback.

Read this blog on RTX-powered video editing and the RTX Video FAQ for more information. Learn more about Wondershare Filmora’s AI-powered features.

In addition, 3D artists are gaining more AI applications and tools that simplify and enhance workflows, including Replikant, Adobe, Topaz and Getty Images.

Replikant, an AI-assisted 3D animation platform, is integrating NVIDIA Audio2Face, an ACE technology, to enable improved lip sync and facial animation. By taking advantage of NVIDIA-accelerated generative models, users can enjoy real-time visuals enhanced by RTX and NVIDIA DLSS technology. Replikant is now available on Steam.

Adobe Substance 3D Modeler has added Search Asset Library by Shape, an AI-powered feature designed to streamline the replacement and enhancement of complex shapes using existing 3D models. This new capability significantly accelerates prototyping and enhances design workflows.

New AI features in Adobe Substance 3D integrate advanced generative AI capabilities, enhancing its texturing and material-creation tools. Adobe has launched the first integration of its Firefly generative AI capabilities into Substance 3D Sampler and Stager, making 3D workflows more seamless and productive for industrial designers, game developers and visual effects professionals.

For tasks like text-to-texture generation and prompt descriptions, Substance 3D users can generate photorealistic or stylized textures. These textures can then be applied directly to 3D models. The new Text to Texture and Generative Background features significantly accelerate traditionally time-consuming and intricate 3D texturing and staging tasks.

Powered by NVIDIA RTX Tensor Cores, Substance 3D can significantly accelerate computations and allow for more intuitive and creative design processes. This development builds on Adobe’s innovation with Firefly-powered Creative Cloud upgrades in Substance 3D workflows.

Topaz AI has added NVIDIA TensorRT acceleration for multi-GPU workflows, enabling parallelization across multiple GPUs for supercharged rendering speeds — up to 2x faster with two GPUs over a single GPU system, and scaling further with additional GPUs.

Getty Images has updated its Generative AI by iStock service with new features to enhance image generation and quality. Powered by NVIDIA Edify models, the latest enhancement delivers generation speeds set to reach around six seconds for four images, doubling the performance of the previous model, with speeds at the forefront of the industry. The improved Text-2-Image and Image-2-Image functionalities provide higher-quality results and greater adherence to user prompts.

Generative AI by iStock users can now also designate camera settings such as focal length (narrow, standard or wide) and depth of field (near or far). Improvements to generative AI super-resolution enhance image quality by using AI to create new pixels, significantly improving resolution without over-sharpening the image.

LLM-azing AI

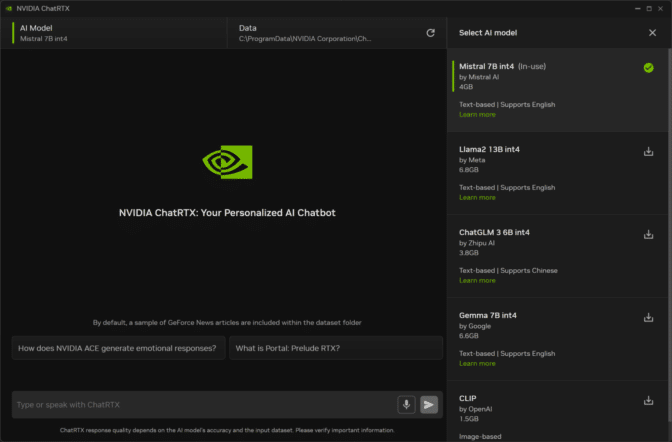

ChatRTX — a tech demo that connects a large language model (LLM), like Meta’s Llama, to a user’s data for quickly querying notes, documents or images — is getting a user interface (UI) makeover, offering a cleaner, more polished experience.

ChatRTX also serves as an open-source reference project that shows developers how to build powerful, local, retrieval-augmented generation (RAG) applications accelerated by RTX.

The latest version of ChatRTX, released today, uses the Electron + Material UI framework, which lets developers more easily add their own UI elements or extend the technology’s functionality. The update also includes a new architecture that simplifies the integration of different UIs and streamlines the building of new chat and RAG applications on top of the ChatRTX backend application programming interface.

End users can download the latest version of ChatRTX from the ChatRTX web page. Developers can find the source code for the new release on the ChatRTX GitHub repository.

Meta Llama 3.1-8B models are now optimized for inference on NVIDIA GeForce RTX PCs and NVIDIA RTX workstations. These models are natively supported with NVIDIA TensorRT-LLM, open-source software that accelerates LLM inference performance.

Dell’s AI Chatbots: Harnessing RTX Rocket Fuel

Dell is presenting how enterprises can boost AI development with an optimized RAG chatbot using NVIDIA AI Workbench and an NVIDIA NIM microservice for Llama 3. Using the NVIDIA AI Workbench Hybrid RAG Project, Dell is demonstrating how the chatbot can be used to converse with enterprise data that’s embedded in a local vector database, with inference running in one of three ways:

- Locally on a Hugging Face TGI server

- In the cloud using NVIDIA inference endpoints

- On self-hosted NVIDIA NIM microservices
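The pattern described above, retrieving enterprise data from a local vector store and then routing the prompt to one of several inference backends, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the bag-of-words `embed` function stands in for a real embedding model, and `LocalVectorStore`, `BACKENDS` and `answer` are hypothetical names that are not part of the AI Workbench Hybrid RAG Project.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalVectorStore:
    """Hypothetical in-memory vector database holding embedded documents."""

    def __init__(self):
        self.entries = []

    def add(self, document):
        self.entries.append((embed(document), document))

    def search(self, query, k=1):
        """Return the k documents most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[0]), reverse=True)
        return [doc for _, doc in ranked[:k]]


# Hypothetical stubs for the three inference options; a real system would
# send the prompt to an actual server or endpoint instead of echoing it.
BACKENDS = {
    "local": lambda prompt: "[local server] " + prompt,
    "cloud": lambda prompt: "[cloud endpoint] " + prompt,
    "self_hosted": lambda prompt: "[self-hosted microservice] " + prompt,
}


def answer(question, store, backend="local"):
    """Retrieve context for the question, then hand the prompt to a backend."""
    context = "\n".join(store.search(question, k=1))
    prompt = f"Context: {context}\nQuestion: {question}"
    return BACKENDS[backend](prompt)
```

Because retrieval and generation are separate steps, the same retrieved context can be sent to any of the three backends without changing the rest of the application.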

Learn more about the AI Workbench Hybrid RAG Project. SIGGRAPH attendees can experience this technology firsthand at Dell Technologies’ booth 301.

HP AI Studio: Innovate Faster With CUDA-X and Galileo

At SIGGRAPH, HP is presenting the Z by HP AI Studio, a centralized data science platform. Announced in October 2023, AI Studio has now been enhanced with the latest NVIDIA CUDA-X libraries as well as HP’s recent partnership with Galileo, a generative AI trust-layer company. Key benefits include:

- Deploy projects faster: Configure, connect and share local and remote projects quickly.

- Collaborate with ease: Access and share data, templates and experiments effortlessly.

- Work your way: Choose where to work on your data, easily switching between online and offline modes.

Designed to enhance productivity and streamline AI development, AI Studio allows data science teams to focus on innovation. Visit HP’s booth 501 to see how AI Studio with RAPIDS cuDF can boost data preprocessing to accelerate AI pipelines. Apply for early access to AI Studio.

An RTX Speed Surge for Stable Diffusion

Stable Diffusion 3.0, the latest model from Stability AI, has been optimized with TensorRT to provide a 60% speedup.

A NIM microservice for Stable Diffusion 3 with optimized performance is available for preview on ai.nvidia.com.

There’s still time to join NVIDIA at SIGGRAPH to see how RTX AI is transforming the future of content creation and visual media experiences. The conference runs through Aug. 1.

Generative AI is transforming graphics and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

Editor’s note: This post is part of our In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep diving on new GeForce RTX GPU features, technologies and resources, and how they dramatically accelerate content creation.

Wondershare Filmora — a video editing app with AI-powered tools — now supports NVIDIA RTX Video HDR, joining editing software like Blackmagic Design’s DaVinci Resolve and Cyberlink PowerDirector.

RTX Video HDR significantly enhances video quality, ensuring the final output is suitable for the best monitors available today.

Livestreaming software OBS Studio and XSplit Broadcaster now support Twitch Enhanced Broadcasting, giving streamers more control over video quality through client-side encoding and automatic configurations. The feature, developed in collaboration between Twitch, OBS and NVIDIA, also paves the way for more advancements, including vertical live video and advanced codecs such as HEVC and AV1.

A summer’s worth of creative app updates are included in the July Studio Driver, ready for download today. Install the NVIDIA app beta — the essential companion for creators and gamers — to keep GeForce RTX PCs up to date with the latest NVIDIA drivers and technology.

Join NVIDIA at SIGGRAPH to learn about the latest breakthroughs in graphics and generative AI, and tune in to a fireside chat featuring NVIDIA founder and CEO Jensen Huang and Lauren Goode, senior writer at WIRED, on Monday, July 29 at 2:30 p.m. MT. Register now.

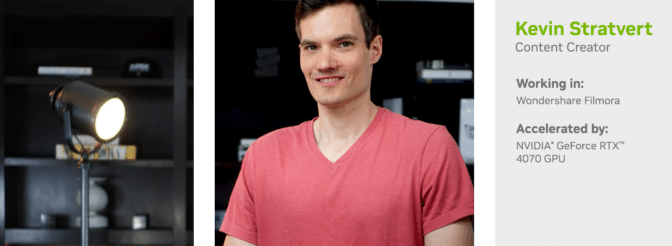

And this week’s featured In the NVIDIA Studio artist, Kevin Stratvert, shares all about AI-powered content creation in Wondershare Filmora.

(Wonder)share the Beauty of RTX Video

RTX Video HDR analyzes standard dynamic range video and transforms it into HDR10-quality video, expanding the color gamut to produce clearer, more vibrant frames and enhancing the sense of depth for greater immersion.

With RTX Video HDR, Filmora users can create high-quality content that’s ideal for gaming videos, travel vlogs or event filmmaking.

Combining RTX Video HDR with RTX Video Super Resolution — another AI-powered tool that uses trained models to sharpen edges, restore features and remove artifacts in video — further enhances visual quality. RTX Video HDR requires an NVIDIA RTX GPU connected to an HDR10-compatible monitor or TV. For more information, check out the RTX Video FAQ.

Those with an RTX GPU-powered PC can send files to the Filmora desktop app and continue to edit with local RTX acceleration, doubling the speed of the export process with dual encoders on GeForce RTX 4070 Ti or above GPUs.

Learn more about Wondershare Filmora’s AI-powered features.

Maximizing AI Features in Filmora

Kevin Stratvert has the heart of a teacher — he’s always loved to share his technical knowledge and tips with others.

One day, he thought, “Why not make a YouTube video to explain stuff directly to users?” His first big hit was a tutorial on how to get Microsoft Office for free through Office.com. The video garnered millions of views and tons of engagement — and he’s continued creating content ever since.

“The more content I created, the more questions and feedback I got from viewers, sparking this cycle of creativity and connection that I just couldn’t get enough of,” said Stratvert.

Explaining the benefits of AI has been an area of particular interest for Stratvert, especially as it relates to AI-powered features in Wondershare Filmora. In one YouTube video, Filmora Video Editor Tutorial for Beginners, he breaks down the AI effects video editors can use to accelerate their workflows.

Examples include:

- Smart Edit: Edit footage based on automatically generated transcripts, including in multiple languages.

- Smart Cutout: Remove unwanted objects or change the background in seconds.

- Speech-to-Text: Automatically generate compelling descriptions, titles and captions.

“AI has become a crucial part of my creative toolkit, especially for refining details that really make a difference,” said Stratvert. “By handling these technical tasks, AI frees up my time to focus more on creating content, making the whole process smoother and more efficient.”

Stratvert has also been experimenting with NVIDIA ChatRTX, a technology that lets users interact with their local data. He has been installing and configuring various AI models and prompting them for both text and image outputs using CLIP and more.

NVIDIA Broadcast has been instrumental in giving Stratvert a professional setup for web conferences and livestreams. The app’s features, including background noise removal and virtual background, help maintain a professional appearance on screen. It’s especially useful in home studio settings, where controlling variables in the environment can be challenging.

“NVIDIA Broadcast has been instrumental in professionalizing my setup for web conferences and livestreams.” — Kevin Stratvert

Stratvert stresses the importance of his GeForce RTX 4070 graphics card in the content creation process.

“With an RTX GPU, I’ve noticed a dramatic improvement in render times and the smoothness of playback, even in demanding scenarios,” he said. “Additionally, the advanced capabilities of RTX GPUs support more intensive tasks like real-time ray tracing and AI-driven editing features, which can open up new creative possibilities in my edits.”

Check out Stratvert’s video tutorials on his website.


NVIDIA founder and CEO Jensen Huang and Meta founder and CEO Mark Zuckerberg will hold a public fireside chat on Monday, July 29, at the 51st edition of the SIGGRAPH graphics conference in Denver.

The two leaders will discuss the future of AI and simulation and the pivotal role of research at SIGGRAPH, which focuses on the intersection of graphics and technology.

Before the discussion, Huang will also appear in a fireside chat with WIRED senior writer Lauren Goode to discuss AI and graphics for the new computing revolution.

Both conversations will be available live and on replay at NVIDIA.com.

The appearances at the conference, which runs July 28-Aug. 1, highlight SIGGRAPH’s continued role in technological innovation. Nearly 100 exhibitors will showcase how graphics are stepping into the future.

Attendees exploring the SIGGRAPH Innovation Zone will encounter startups at the forefront of computing and graphics while insights from industry leaders like Huang deliver a glimpse into the technological horizon.

Since the conference’s 1974 inception in Boulder, Colorado, SIGGRAPH has been at the forefront of innovation.

It introduced the world to demos such as the “Aspen Movie Map,” a precursor to Google Street View decades ahead of its time, and hosted one of the first screenings of Pixar’s Luxo Jr., which redefined the art of animation.

The conference remains the leading venue for groundbreaking research in computer graphics.

Publications that redefined modern visual culture — including Ed Catmull’s 1974 paper on texture mapping, Turner Whitted’s 1980 paper on ray-tracing techniques, and James T. Kajiya’s 1986 “The Rendering Equation” — first made their debut at SIGGRAPH.

Innovations like these are now spilling out across the world’s industries.

Throughout the Innovation Zone, over a dozen startups are showcasing how they’re bringing advancements rooted in graphics into diverse fields — from robotics and manufacturing to autonomous vehicles and scientific research, including climate science.

Highlights include Tomorrow.io, which leverages NVIDIA Earth-2 to provide precise weather insights and offers early warning systems to help organizations adapt to climate changes.

Looking Glass is pioneering holographic technology that enables 3D content experiences without headsets. The company is using NVIDIA RTX 6000 Ada Generation GPUs and NVIDIA Maxine technology to enhance real-time audio, video and augmented-reality effects to make this possible.

Manufacturing startup nTop developed a computer-aided design tool using NVIDIA GPU-powered signed distance fields. The tool uses the NVIDIA OptiX rendering engine and a two-way NVIDIA Omniverse LiveLink connector to enable real-time, high-fidelity visualization and collaboration across design and simulation platforms.
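For readers unfamiliar with signed distance fields, the idea is that a shape is described by a function returning the distance from any point to the shape’s surface: negative inside, positive outside. The Python sketch below illustrates the general technique only; the function names are hypothetical and it makes no claim about how nTop’s tool is implemented.

```python
import math


def sphere_sdf(point, center, radius):
    """Signed distance from a point to a sphere's surface (negative inside)."""
    return math.dist(point, center) - radius


def union(d1, d2):
    """A point is inside the union if it is inside either shape."""
    return min(d1, d2)


def intersection(d1, d2):
    """A point is inside the intersection only if it is inside both shapes."""
    return max(d1, d2)


def subtract(d1, d2):
    """Carve the second shape out of the first."""
    return max(d1, -d2)


def scene_sdf(point):
    """Example part: a unit sphere with a smaller sphere carved from one side."""
    body = sphere_sdf(point, (0.0, 0.0, 0.0), 1.0)
    cutout = sphere_sdf(point, (1.0, 0.0, 0.0), 0.5)
    return subtract(body, cutout)
```

Combining shapes takes only a `min` or `max` per point, and every point can be evaluated independently, which is why this representation maps well onto GPUs.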

Conference attendees can also explore how generative AI — a technology deeply rooted in visual computing — is remaking professional graphics.

On July 31, industry leaders and developers will gather in room 607 at the Colorado Convention Center for Generative AI Day, exploring cutting-edge solutions for visual effects, animation and game development with leaders from Bria AI, Cuebric, Getty Images, Replikant, Shutterstock and others.

The conference’s speaker lineup is equally compelling.

In addition to Huang and Zuckerberg, notable presenters include Dava Newman of MIT Media Lab and Mark Sagar from Soul Machines, who’ll delve into the intersections of bioengineering, design and digital humans.

Join the global technology community in Denver later this month to discover why SIGGRAPH remains at the forefront of demonstrating, predicting and shaping the future of technology.

NVIDIA is taking an array of advancements in rendering, simulation and generative AI to SIGGRAPH 2024, the premier computer graphics conference, which will take place July 28 – Aug. 1 in Denver.

More than 20 papers from NVIDIA Research introduce innovations advancing synthetic data generators and inverse rendering tools that can help train next-generation models. NVIDIA’s AI research is making simulation better by boosting image quality and unlocking new ways to create 3D representations of real or imagined worlds.

The papers focus on diffusion models for visual generative AI, physics-based simulation and increasingly realistic AI-powered rendering. They include two technical Best Paper Award winners and collaborations with universities across the U.S., Canada, China, Israel and Japan as well as researchers at companies including Adobe and Roblox.

These initiatives will help create tools that developers and businesses can use to generate complex virtual objects, characters and environments. Synthetic data generation can then be harnessed to tell powerful visual stories, aid scientists’ understanding of natural phenomena or assist in simulation-based training of robots and autonomous vehicles.

Diffusion Models Improve Texture Painting, Text-to-Image Generation

Diffusion models, a popular tool for transforming text prompts into images, can help artists, designers and other creators rapidly generate visuals for storyboards or production, reducing the time it takes to bring ideas to life.

Two NVIDIA-authored papers are advancing the capabilities of these generative AI models.

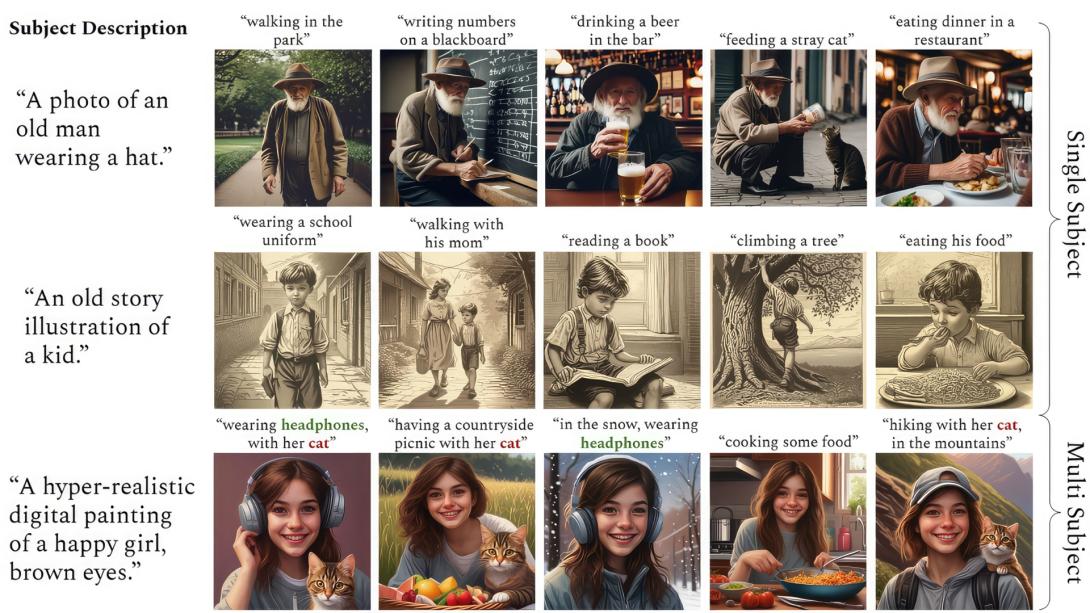

ConsiStory, a collaboration between researchers at NVIDIA and Tel Aviv University, makes it easier to generate multiple images with a consistent main character — an essential capability for storytelling use cases such as illustrating a comic strip or developing a storyboard. The researchers’ approach introduces a technique called subject-driven shared attention, which reduces the time it takes to generate consistent imagery from 13 minutes to around 30 seconds.

NVIDIA researchers last year won the Best in Show award at SIGGRAPH’s Real-Time Live event for AI models that turn text or image prompts into custom textured materials. This year, they’re presenting a paper that applies 2D generative diffusion models to interactive texture painting on 3D meshes, enabling artists to paint in real time with complex textures based on any reference image.

Kick-Starting Developments in Physics-Based Simulation

Graphics researchers are narrowing the gap between physical objects and their virtual representations with physics-based simulation — a range of techniques to make digital objects and characters move the same way they would in the real world.

Several NVIDIA Research papers feature breakthroughs in the field, including SuperPADL, a project that tackles the challenge of simulating complex human motions based on text prompts (see video at top).

Using a combination of reinforcement learning and supervised learning, the researchers demonstrated how the SuperPADL framework can be trained to reproduce the motion of more than 5,000 skills — and can run in real time on a consumer-grade NVIDIA GPU.

Another NVIDIA paper features a neural physics method that applies AI to learn how objects — whether represented as a 3D mesh, a NeRF or a solid object generated by a text-to-3D model — would behave as they are moved in an environment.

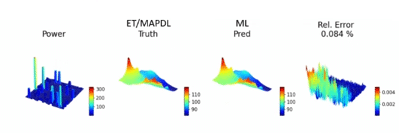

A paper written in collaboration with Carnegie Mellon University researchers develops a new kind of renderer — one that, instead of modeling physical light, can perform thermal analysis, electrostatics and fluid mechanics. Named one of five best papers at SIGGRAPH, the method is easy to parallelize and doesn’t require cumbersome model cleanup, offering new opportunities for speeding up engineering design cycles.

In the example above, the renderer performs a thermal analysis of the Mars Curiosity rover, where keeping temperatures within a specific range is critical to mission success.

Additional simulation papers introduce a more efficient technique for modeling hair strands and a pipeline that accelerates fluid simulation by 10x.

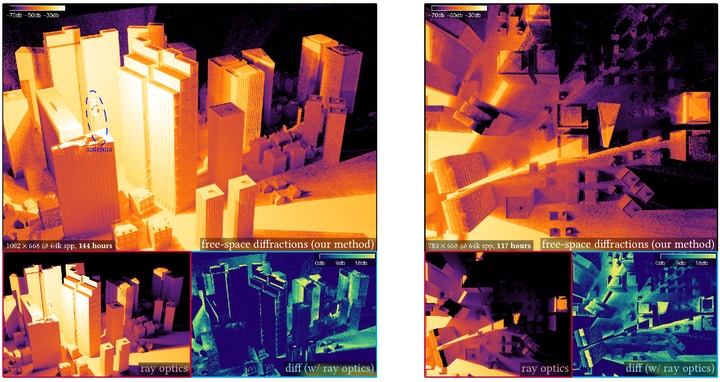

Raising the Bar for Rendering Realism, Diffraction Simulation

Another set of NVIDIA-authored papers presents new techniques to model visible light up to 25x faster and simulate diffraction effects (such as those used in radar simulation for training self-driving cars) up to 1,000x faster.

A paper by NVIDIA and University of Waterloo researchers tackles free-space diffraction, an optical phenomenon where light spreads out or bends around the edges of objects. The team’s method can integrate with path-tracing workflows to increase the efficiency of simulating diffraction in complex scenes, offering up to 1,000x acceleration. Beyond rendering visible light, the model could also be used to simulate the longer wavelengths of radar, sound or radio waves.

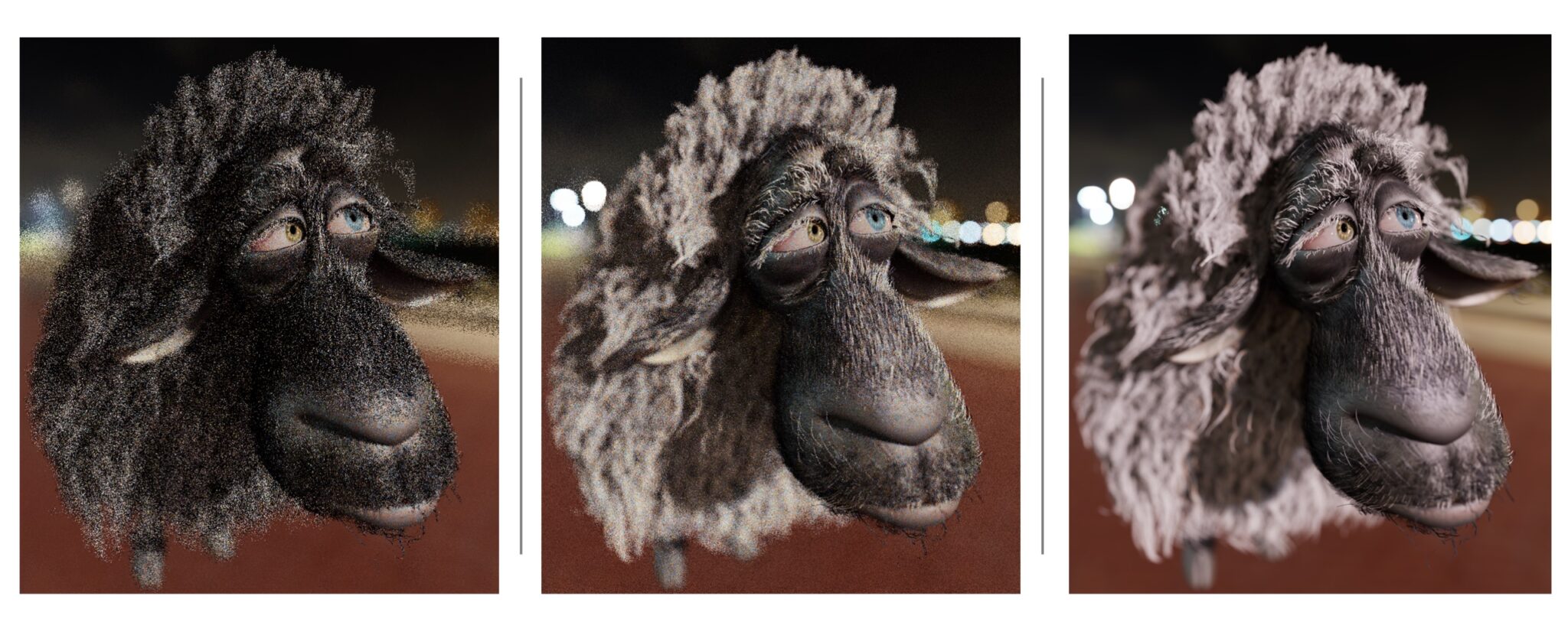

Path tracing samples numerous paths — multi-bounce light rays traveling through a scene — to create a photorealistic picture. Two SIGGRAPH papers improve sampling quality for ReSTIR, a path-tracing algorithm first introduced by NVIDIA and Dartmouth College researchers at SIGGRAPH 2020 that has been key to bringing path tracing to games and other real-time rendering products.

One of these papers, a collaboration with the University of Utah, shares a new way to reuse calculated paths that increases effective sample count by up to 25x, significantly boosting image quality. The other improves sample quality by randomly mutating a subset of the light’s path. This helps denoising algorithms perform better, producing fewer visual artifacts in the final render.
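ReSTIR builds on weighted reservoir sampling, which streams through many candidate samples while storing only one, keeping each candidate with probability proportional to its weight. The Python sketch below shows that building block alone, not the path reuse or mutation methods from the two papers; the `Reservoir` class and `sample_one` helper are hypothetical names chosen for illustration.

```python
import random


class Reservoir:
    """Keeps one candidate from a stream, chosen in proportion to its weight."""

    def __init__(self):
        self.sample = None
        self.weight_sum = 0.0
        self.count = 0

    def update(self, candidate, weight, rng):
        """Offer a new candidate; it replaces the kept one with
        probability weight / (sum of all weights seen so far)."""
        self.count += 1
        self.weight_sum += weight
        if self.weight_sum > 0 and rng.random() < weight / self.weight_sum:
            self.sample = candidate


def sample_one(candidates, weights, rng):
    """Stream all candidates through a reservoir and return the survivor."""
    reservoir = Reservoir()
    for candidate, weight in zip(candidates, weights):
        reservoir.update(candidate, weight, rng)
    return reservoir.sample
```

With weights of 1 and 3, the second candidate survives about three times out of four, and memory use stays constant no matter how many candidates are streamed.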

Teaching AI to Think in 3D

NVIDIA researchers are also showcasing multipurpose AI tools for 3D representations and design at SIGGRAPH.

One paper introduces fVDB, a GPU-optimized framework for 3D deep learning that matches the scale of the real world. The fVDB framework provides AI infrastructure for the large spatial scale and high resolution of city-scale 3D models and NeRFs, and segmentation and reconstruction of large-scale point clouds.

A Best Technical Paper award winner written in collaboration with Dartmouth College researchers introduces a theory for representing how 3D objects interact with light. The theory unifies a diverse spectrum of appearances into a single model.

And a collaboration with the University of Tokyo, the University of Toronto and Adobe Research introduces an algorithm that generates smooth, space-filling curves on 3D meshes in real time. While previous methods took hours, this framework runs in seconds and offers users a high degree of control over the output to enable interactive design.

NVIDIA at SIGGRAPH

Learn more about NVIDIA at SIGGRAPH. Special events include a fireside chat between NVIDIA founder and CEO Jensen Huang and Meta founder and CEO Mark Zuckerberg, as well as a fireside chat with Huang and Lauren Goode, senior writer at WIRED, on the impact of robotics and AI in industrial digitalization.

NVIDIA researchers will also present OpenUSD Day by NVIDIA, a full-day event showcasing how developers and industry leaders are adopting and evolving OpenUSD to build AI-enabled 3D pipelines.

NVIDIA Research has hundreds of scientists and engineers worldwide, with teams focused on topics including AI, computer graphics, computer vision, self-driving cars and robotics. See more of their latest work.

Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible and showcases new hardware, software, tools and accelerations for NVIDIA RTX PC and workstation users.

In the rapidly evolving world of artificial intelligence, generative AI is captivating imaginations and transforming industries. Behind the scenes, an unsung hero is making it all possible: microservices architecture.

The Building Blocks of Modern AI Applications

Microservices have emerged as a powerful architecture, fundamentally changing how people design, build and deploy software.

A microservices architecture breaks down an application into a collection of loosely coupled, independently deployable services. Each service is responsible for a specific capability and communicates with other services through well-defined application programming interfaces, or APIs. This modular approach stands in stark contrast to traditional all-in-one architectures, in which all functionality is bundled into a single, tightly integrated application.

By decoupling services, teams can work on different components simultaneously, accelerating development processes and allowing updates to be rolled out independently without affecting the entire application. Developers can focus on building and improving specific services, leading to better code quality and faster problem resolution. Such specialization allows developers to become experts in their particular domain.

Services can be scaled independently based on demand, optimizing resource utilization and improving overall system performance. In addition, different services can use different technologies, allowing developers to choose the best tools for each specific task.

A Perfect Match: Microservices and Generative AI

The microservices architecture is particularly well-suited for developing generative AI applications due to its scalability, enhanced modularity and flexibility.

AI models, especially large language models, require significant computational resources. Microservices allow for efficient scaling of these resource-intensive components without affecting the entire system.

Generative AI applications often involve multiple steps, such as data preprocessing, model inference and post-processing. Microservices enable each step to be developed, optimized and scaled independently. Plus, as AI models and techniques evolve rapidly, a microservices architecture allows for easier integration of new models as well as the replacement of existing ones without disrupting the entire application.
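To make the idea concrete, here is a minimal Python sketch in which each step is a separate function behind the same dictionary-in, dictionary-out contract. In a real deployment each stage would be its own service reached over a network API; the function names and the toy "model" stages here are assumptions made purely for illustration.

```python
from typing import Callable, Dict, List

# Every stage takes a request dictionary and returns an enriched copy.
Stage = Callable[[Dict], Dict]


def preprocess(request: Dict) -> Dict:
    """Normalize the raw user text into a prompt."""
    return {**request, "prompt": request["text"].strip().lower()}


def model_v1(request: Dict) -> Dict:
    """Stand-in for a model inference service (hypothetical)."""
    return {**request, "raw": f"echo: {request['prompt']}"}


def model_v2(request: Dict) -> Dict:
    """A replacement model that honors the same contract (hypothetical)."""
    return {**request, "raw": f"echo v2: {request['prompt']}"}


def postprocess(request: Dict) -> Dict:
    """Format the raw model output for display."""
    return {**request, "answer": request["raw"].capitalize()}


def run_pipeline(stages: List[Stage], request: Dict) -> Dict:
    """Pass the request through each stage in order."""
    for stage in stages:
        request = stage(request)
    return request


before = run_pipeline([preprocess, model_v1, postprocess], {"text": "  Hello "})
# Swapping the model leaves the other two stages untouched.
after = run_pipeline([preprocess, model_v2, postprocess], {"text": "  Hello "})
```

The point of the design is the shared contract: as long as a new model stage accepts and returns the same fields, it can replace the old one without changes elsewhere.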

NVIDIA NIM: Simplifying Generative AI Deployment

As the demand for AI-powered applications grows, developers face challenges in efficiently deploying and managing AI models.

NVIDIA NIM inference microservices provide models as optimized containers to deploy in the cloud, data centers, workstations, desktops and laptops. Each NIM container includes the pretrained AI models and all the necessary runtime components, making it simple to integrate AI capabilities into applications.

NIM offers a game-changing approach for application developers looking to incorporate AI functionality by providing simplified integration, production-readiness and flexibility. Developers can focus on building their applications without worrying about the complexities of data preparation, model training or customization, as NIM inference microservices are optimized for performance, come with runtime optimizations and support industry-standard APIs.
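As a rough illustration of what an industry-standard API can look like in practice, the Python sketch below builds a chat request in the widely used OpenAI-style chat completions format. The host, port, endpoint path and model identifier are assumptions for this example and should be checked against the documentation of the NIM being deployed; the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message, max_tokens=128):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed local endpoint and model name, for illustration only.
request = build_chat_request(
    "http://localhost:8000",
    "meta/llama3-8b-instruct",
    "What is a microservice?",
)
# With a service actually running, the request could be sent with:
# response = urllib.request.urlopen(request)
```

Because the request format is a common convention rather than something specific to one model, application code written this way should need only a different URL or model name when the model behind it changes.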

AI at Your Fingertips: NVIDIA NIM on Workstations and PCs

Building enterprise generative AI applications comes with many challenges. While cloud-hosted model APIs can help developers get started, issues related to data privacy, security, model response latency, accuracy, API costs and scaling often hinder the path to production.

Workstations with NIM provide developers with secure access to a broad range of models and performance-optimized inference microservices.

By avoiding the latency, cost and compliance concerns associated with cloud-hosted APIs as well as the complexities of model deployment, developers can focus on application development. This accelerates the delivery of production-ready generative AI applications, enabling seamless, automatic scale-out with performance optimization in data centers and the cloud.

The recently announced general availability of the Meta Llama 3 8B model as a NIM, which can run locally on RTX systems, brings state-of-the-art language model capabilities to individual developers, enabling local testing and experimentation without the need for cloud resources. With NIM running locally, developers can create sophisticated retrieval-augmented generation (RAG) projects right on their workstations.

Local RAG refers to implementing RAG systems entirely on local hardware, without relying on cloud-based services or external APIs.

Developers can use the Llama 3 8B NIM on workstations with one or more NVIDIA RTX 6000 Ada Generation GPUs or on NVIDIA RTX systems to build end-to-end RAG systems entirely on local hardware. This setup allows developers to tap the full power of Llama 3 8B, ensuring high performance and low latency.

By running the entire RAG pipeline locally, developers can maintain complete control over their data, ensuring privacy and security. This approach is particularly helpful for developers building applications that require real-time responses and high accuracy, such as customer-support chatbots, personalized content-generation tools and interactive virtual assistants.
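The retrieval half of a local RAG pipeline can be sketched in a few lines of Python. This is a toy under stated assumptions: a bag-of-words counter stands in for a real embedding model, an in-memory list stands in for a vector database, the documents are made up, and the resulting prompt would be passed to a locally running language model.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vector, embed(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query, documents):
    """Ground the question in retrieved context before calling the model."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Made-up knowledge base for a hypothetical customer-support chatbot.
DOCS = [
    "Returns are accepted within 30 days of purchase.",
    "Our support line is open on weekdays from 9 to 5.",
    "Shipping is free for orders over 50 dollars.",
]
prompt = build_prompt("When is the support line open?", DOCS)
```

Nothing in this flow leaves the machine: the documents, the similarity search and the final prompt all stay in local memory, which is what makes the privacy argument above hold.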

Hybrid RAG combines local and cloud-based resources to optimize performance and flexibility in AI applications. With NVIDIA AI Workbench, developers can get started with the hybrid-RAG Workbench Project — an example application that can be used to run vector databases and embedding models locally while performing inference using NIM in the cloud or data center, offering a flexible approach to resource allocation.

This hybrid setup allows developers to balance the computational load between local and cloud resources, optimizing performance and cost. For example, the vector database and embedding models can be hosted on local workstations to ensure fast data retrieval and processing, while the more computationally intensive inference tasks can be offloaded to powerful cloud-based NIM inference microservices. This flexibility enables developers to scale their applications seamlessly, accommodating varying workloads and ensuring consistent performance.

NVIDIA ACE NIM inference microservices bring digital humans, AI non-playable characters (NPCs) and interactive avatars for customer service to life with generative AI, running on RTX PCs and workstations.

ACE NIM inference microservices for speech — including Riva automatic speech recognition, text-to-speech and neural machine translation — allow accurate transcription, translation and realistic voices.

The NVIDIA Nemotron small language model is a NIM for intelligence that includes INT4 quantization for minimal memory usage and supports roleplay and RAG use cases.
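A quick back-of-the-envelope calculation shows why INT4 quantization matters for memory. The Python sketch below estimates storage for model weights only, ignoring activations, caches and quantization metadata; the 4-billion-parameter figure is a hypothetical round number chosen for the arithmetic, not a specification of any particular model.

```python
def weight_memory_gb(num_parameters, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


HYPOTHETICAL_PARAMETERS = 4e9  # assumed model size, for illustration only

fp16_gb = weight_memory_gb(HYPOTHETICAL_PARAMETERS, 16)  # 16-bit weights
int4_gb = weight_memory_gb(HYPOTHETICAL_PARAMETERS, 4)   # 4-bit weights
```

Under these assumptions the weights shrink from 8 GB at 16 bits to 2 GB at 4 bits, a fourfold reduction that leaves more GPU memory free for the application running alongside the model.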

And ACE NIM inference microservices for appearance include Audio2Face and Omniverse RTX for lifelike animation with ultrarealistic visuals. These provide more immersive and engaging gaming characters, as well as more satisfying experiences for users interacting with virtual customer-service agents.

Dive Into NIM

As AI progresses, the ability to rapidly deploy and scale its capabilities will become increasingly crucial.

NVIDIA NIM microservices provide the foundation for this new era of AI application development, enabling breakthrough innovations. Whether building the next generation of AI-powered games, developing advanced natural language processing applications or creating intelligent automation systems, users can access these powerful development tools at their fingertips.

Ways to get started:

- Experience and interact with NVIDIA NIM microservices on ai.nvidia.com.

- Join the NVIDIA Developer Program and get free access to NIM for testing and prototyping AI-powered applications.

- Buy an NVIDIA AI Enterprise license with a free 90-day evaluation period for production deployment and use NVIDIA NIM to self-host AI models in the cloud or in data centers.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

Sphere, a new kind of entertainment medium in Las Vegas, is joining the ranks of legendary circular performance spaces such as the Roman Colosseum and Shakespeare’s Globe Theater, captivating audiences with eye-popping LED displays that cover nearly 750,000 square feet inside and outside the venue.

Behind the screens, around 150 NVIDIA RTX A6000 GPUs help power stunning visuals on floor-to-ceiling, 16x16K displays across the Sphere’s interior, as well as 1.2 million programmable LED pucks on the venue’s exterior — the Exosphere, which is the world’s largest LED screen.

Delivering robust network connectivity, NVIDIA BlueField DPUs and NVIDIA ConnectX-6 Dx NICs — along with the NVIDIA DOCA Firefly Service and NVIDIA Rivermax software for media streaming — ensure that all the display panels act as one synchronized canvas.

“Sphere is captivating audiences not only in Las Vegas, but also around the world on social media, with immersive LED content delivered at a scale and clarity that has never been done before,” said Alex Luthwaite, senior vice president of show systems technology at Sphere Entertainment. “This would not be possible without the expertise and innovation of companies such as NVIDIA that are critical to helping power our vision, working closely with our team to redefine what is possible with cutting-edge display technology.”

Named one of TIME’s Best Inventions of 2023, Sphere hosts original Sphere Experiences, concerts and residencies from the world’s biggest artists, and premier marquee and corporate events.

Rock band U2 opened Sphere with a 40-show run that concluded in March. Other shows include The Sphere Experience featuring Darren Aronofsky’s Postcard From Earth, a specially created multisensory cinematic experience that showcases all of the venue’s immersive technologies, including high-resolution visuals, advanced concert-grade sound, haptic seats and atmospheric effects such as wind and scents.

Behind the Screens: Visual Technology Fueling the Sphere

Sphere Studios creates video content in its Burbank, Calif., facility, then transfers it digitally to Sphere in Las Vegas. The content is then streamed in real time to rack-mounted workstations equipped with NVIDIA RTX A6000 GPUs, achieving unprecedented performance capable of delivering three layers of 16K resolution at 60 frames per second.
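To get a feel for the scale involved, the following Python sketch estimates the raw pixel data rate implied by those figures. It rests on assumptions the article does not state: that a 16K frame is 16,384 x 16,384 pixels and that each pixel is uncompressed 8-bit RGB (3 bytes). The actual formats used at Sphere may differ.

```python
def uncompressed_rate_bytes_per_second(width, height, bytes_per_pixel, fps, layers):
    """Raw pixel data rate for several full-resolution video layers."""
    return width * height * bytes_per_pixel * fps * layers


# Assumed frame size and pixel format; layer count and frame rate from the text.
rate = uncompressed_rate_bytes_per_second(
    width=16_384, height=16_384, bytes_per_pixel=3, fps=60, layers=3
)
rate_gb_per_second = rate / 1e9
```

Under these assumptions the total comes to roughly 145 GB of pixel data per second, which helps explain why the workload is spread across many GPU-equipped workstations with hardware-accelerated streaming.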

The NVIDIA Rivermax software helps provide media streaming acceleration, enabling direct data transfers to and from the GPU. Combined, the software and hardware acceleration eliminates jitter and optimizes latency.

NVIDIA BlueField DPUs also facilitate precision timing through the DOCA Firefly Service, which is used to synchronize clocks in a network with sub-microsecond accuracy.
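Network clock synchronization of this kind is typically built on the Precision Time Protocol (IEEE 1588), in which a follower estimates its clock offset from four timestamps exchanged with a leader. The Python sketch below shows that standard calculation under the usual assumption of a symmetric network path. It is not the DOCA Firefly implementation, and the timestamps are made-up nanosecond values.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate follower clock offset and one-way path delay.

    t1: leader sends Sync            (leader clock)
    t2: follower receives Sync       (follower clock)
    t3: follower sends Delay_Req     (follower clock)
    t4: leader receives Delay_Req    (leader clock)
    Assumes the path delay is the same in both directions.
    """
    forward = t2 - t1    # path delay + offset
    backward = t4 - t3   # path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


# Made-up example: follower clock runs 250 ns ahead, one-way delay is 400 ns.
offset_ns, delay_ns = ptp_offset_and_delay(t1=1000, t2=1650, t3=2000, t4=2150)
```

The follower then subtracts the estimated offset from its clock. Taking the timestamps in hardware, close to the wire, removes software jitter from the four measurements, which is what makes very fine accuracy achievable.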

“The integration of NVIDIA RTX GPUs, BlueField DPUs and Rivermax software creates a powerful trifecta of advantages for modern accelerated computing, supporting the unique high-resolution video streams and strict timing requirements needed at Sphere and setting a new standard for media processing capabilities,” said Nir Nitzani, senior product director for networking software at NVIDIA. “This collaboration results in remarkable performance gains, culminating in the extraordinary experiences guests have at Sphere.”

Well-Rounded: From Simulation to Sphere Stage

To create new immersive content exclusively for Sphere, Sphere Entertainment launched Sphere Studios, which is dedicated to developing the next generation of original immersive entertainment. The Burbank campus consists of numerous development facilities, including a quarter-sized version of the Sphere screen in Las Vegas, dubbed Big Dome, which serves as a specialized screening and production facility and a lab for content.

Sphere Studios also developed the Big Sky camera system, which captures uncompressed, 18K images from a single camera, so that the studio can film content for Sphere without needing to stitch multiple camera feeds together. The studio’s custom image processing software runs on Lenovo servers powered by NVIDIA A40 GPUs.

The A40 GPUs also fuel creative work, including 3D video, virtualization and ray tracing. To develop visuals for different kinds of shows, the team works with apps including Unreal Engine, Unity, TouchDesigner and Notch.

For more, explore upcoming sessions in NVIDIA’s room at SIGGRAPH and watch the panel discussion “Immersion in Sphere: Redefining Live Entertainment Experiences” on NVIDIA On-Demand.

All images courtesy of Sphere Entertainment.