Turn Black Friday into Green Thursday with a new deal on GeForce NOW Ultimate and Performance memberships this week. For a limited time, get 50% off new Ultimate or Performance memberships for the first three months to experience the power of GeForce RTX-powered gaming at a fraction of the cost.

The giving continues for GeForce NOW members: SteelSeries is offering a 30% discount exclusively to all GeForce NOW members on Stratus+ or Nimbus+ controllers, perfect for gaming anytime, anywhere when paired with GeForce NOW on Android and iOS devices. To redeem the discount, opt in to GeForce NOW rewards and look out for an email with details. Enjoy this exclusive offer on its own — it can’t be combined with other SteelSeries promotions.

It’s not a GFN Thursday without new games — this week, six are joining the over 2,000 titles in the GeForce NOW library.

Plus, the Steam Autumn Sale is happening now, featuring stellar discounts on GeForce NOW-supported games. Snag beloved publishers’ top titles, including Nightingale from Inflexion Games, Remnant and Remnant II from Arc Games, and Cult of the Lamb and The Plucky Squire from Devolver — and even more from publishers Frost Giant Studios, Metric Empire, tinyBuild, Torn Banner Studios and Tripwire. The sale runs through Wednesday, Dec. 4.

Stuff Your Stockings

This holiday season, GeForce NOW is spreading cheer to gamers everywhere with an irresistible Black Friday offer. Those looking to try out the cloud gaming service can now level up their gaming with 50% off new Ultimate and Performance memberships for the first three months. It’s the perfect time for gamers to treat themselves or a buddy to GeForce RTX-powered gaming without having to upgrade any hardware.

Lock in all the perks of the newly enhanced Performance membership, now featuring crisp 1440p streaming, at half off for the next three months. Or go all out with the Ultimate tier — delivering the same premium experience GeForce RTX 4080 GPU owners enjoy — now available at the regular monthly cost of a Performance membership.

With a GeForce NOW membership, gamers can stream over 2,000 PC games from popular digital gaming stores with longer gaming sessions and real-time ray tracing for supported titles across nearly all devices. Performance members can stream at up to 1440p at 60 frames per second, and Ultimate members can stream up to 4K at 120 fps or 1080p at 240 fps.

Don’t let this festive deal slip away — give the gift of gaming this holiday season with GeForce NOW’s Black Friday sale. Whether battling winter bosses or exploring snowy landscapes, do it with exceptional performance at an exceptional price.

Elevating New Games

In addition, members can look for the following:

- New Arc Line (New release on Steam, Nov. 26)

- MEGA MAN X DiVE Offline Demo (Steam)

- PANICORE (Steam)

- Resident Evil 7 Teaser: Beginning Hour Demo (Steam)

- Slime Rancher (Steam)

- Sumerian Six (Steam)

What are you planning to play this weekend? Let us know on X or in the comments below.

Black Friday came early.

Enjoy 50% off your first 3 months of an Ultimate or Performance monthly membership!

Offer available for new & upgrading members—grab it today!

https://t.co/2quxLMccsG pic.twitter.com/3eRx930oSw

NVIDIA GeForce NOW (@NVIDIAGFN), November 27, 2024

Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for GeForce RTX PC and NVIDIA RTX workstation users.

Generative AI has transformed the way people bring ideas to life. Agentic AI takes this one step further — using sophisticated, autonomous reasoning and iterative planning to help solve complex, multi-step problems.

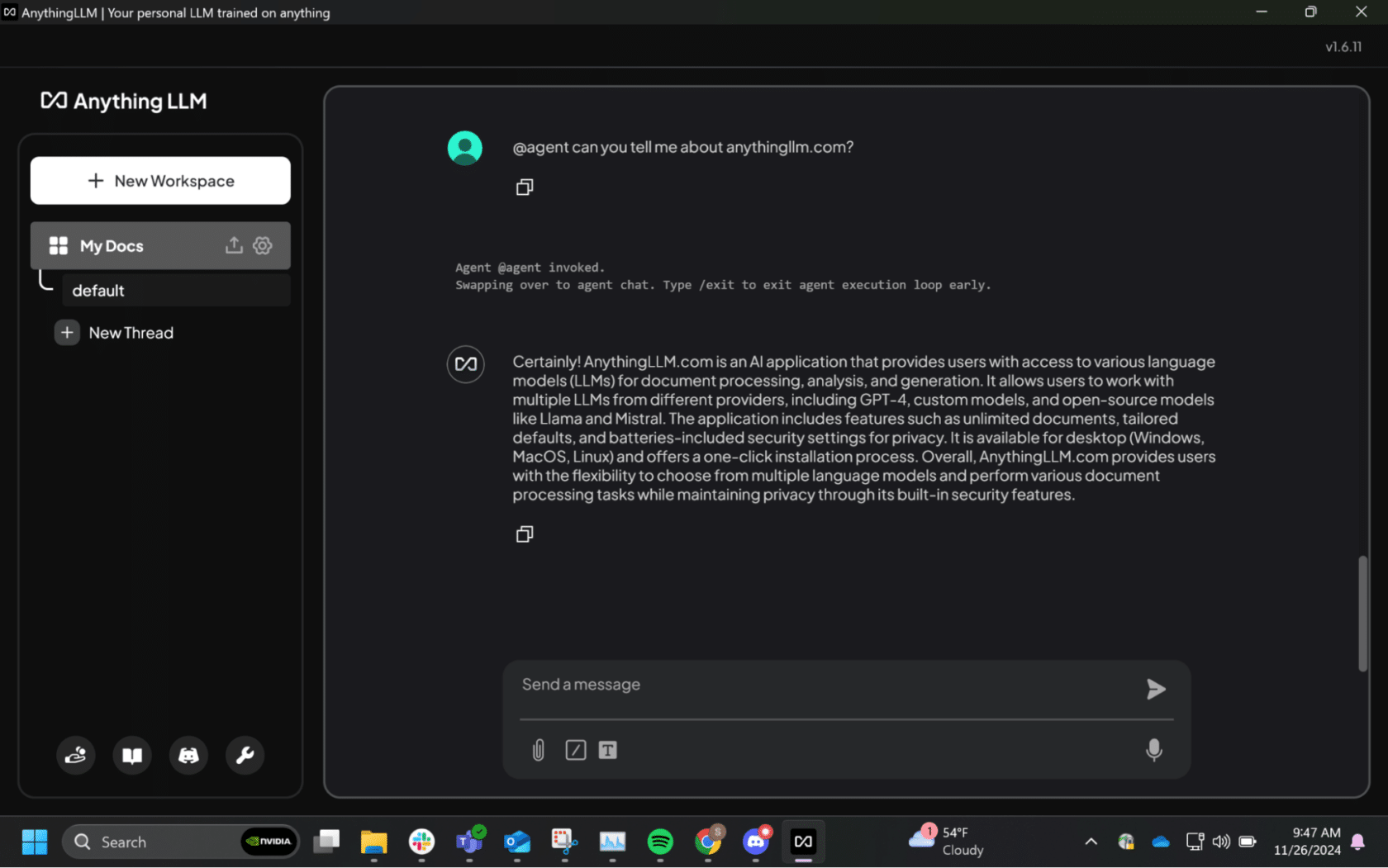

AnythingLLM is a customizable open-source desktop application that lets users seamlessly integrate large language model (LLM) capabilities into various applications locally on their PCs. It enables users to harness AI for tasks such as content generation, summarization and more, tailoring tools to meet specific needs.

Accelerated on NVIDIA RTX AI PCs, AnythingLLM has launched a new Community Hub where users can share prompts, slash commands and AI agent skills while experimenting with building and running AI agents locally.

Autonomously Solve Complex, Multi-Step Problems With Agentic AI

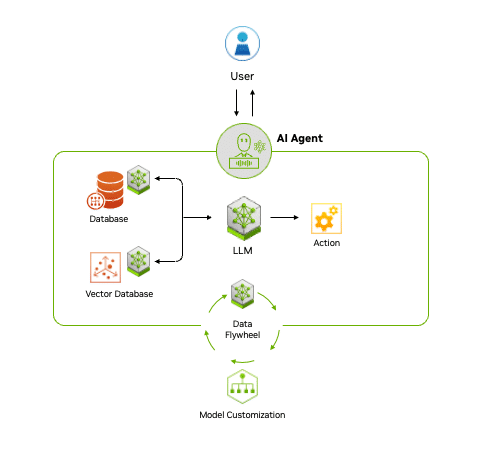

AI agents can take chatbot capabilities further. They typically understand the context of the tasks and can analyze challenges and develop strategies — and some can even fully execute assigned tasks.

For example, while a chatbot could answer a prompt asking for a restaurant recommendation, an AI agent could even surface the restaurant’s phone number for a reservation and add reminders to the user’s calendar.

Agents help achieve big-picture goals and don’t get bogged down at the task level. There are many agentic apps in development to tackle to-do lists, manage schedules, help organize tasks, automate email replies, recommend personalized workout plans or plan trips.

Once prompted, an AI agent can gather and process data from various sources, including databases. It can use an LLM for reasoning — for example, to understand the task — then generate solutions and specific functions. If integrated with external tools and software, an AI agent can next execute the task.
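The flow described above (gather data, reason about the task, then execute with tools) can be sketched in a few lines of Python. This is a minimal illustration only, not AnythingLLM's implementation: `stub_planner` is a rule-based stand-in for the LLM reasoning step, and the tool names, the directory entry and the phone number are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str      # name of the tool to call
    argument: str  # single string argument passed to the tool


def stub_planner(task: str) -> List[Step]:
    """Stand-in for the LLM reasoning step (hypothetical, rule-based)."""
    steps = [Step("lookup_phone", task)]
    if "remind" in task.lower():
        steps.append(Step("add_reminder", task))
    return steps


def lookup_phone(query: str) -> str:
    # Hypothetical data source; a real agent might query a database or an API.
    directory = {"luigi's": "555-0100"}
    for name, phone in directory.items():
        if name in query.lower():
            return phone
    return "unknown"


def add_reminder(text: str) -> str:
    # Hypothetical external tool; here it only reports what it would save.
    return f"reminder saved: {text}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_phone": lookup_phone,
    "add_reminder": add_reminder,
}


def run_agent(task: str) -> List[str]:
    """Plan with the stubbed model, then execute each planned step with a tool."""
    return [TOOLS[step.tool](step.argument) for step in stub_planner(task)]


print(run_agent("Book Luigi's for Friday and remind me"))
```

Keeping the planner separate from the tool table means the stub could be swapped for a call to a locally running model without touching the execution code.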

Some sophisticated agents can even be improved through a feedback loop. When the data an agent generates is fed back into the system, the agent becomes smarter and faster.

Accelerated by NVIDIA RTX AI PCs, these agents can perform inferencing and execute tasks faster than any other PC. Users can operate the agent locally to help ensure data privacy, even without an internet connection.

AnythingLLM: A Community Effort, Accelerated by RTX

The AI community is already diving into the possibilities of agentic AI, experimenting with ways to create smarter, more capable systems.

Applications like AnythingLLM let developers easily build, customize and unlock agentic AI with their favorite models — like Llama and Mistral — as well as with other tools, such as Ollama and LMStudio. AnythingLLM is accelerated on RTX-powered AI PCs and workstations with high-performance Tensor Cores, dedicated hardware that provides the compute performance needed to run the latest and most demanding AI models.

AnythingLLM is designed to make working with AI seamless, productive and accessible to everyone. It allows users to chat with their documents using intuitive interfaces, use AI agents to handle complex and custom tasks, and run cutting-edge LLMs locally on RTX-powered PCs and workstations. This means unlocked access to local resources, tools and applications that typically can’t be integrated with cloud- or browser-based applications, or those that require extensive setup and knowledge to build. By tapping into the power of NVIDIA RTX GPUs, AnythingLLM delivers faster, smarter and more responsive AI for a variety of workflows — all within a single desktop application.

AnythingLLM’s Community Hub lets AI enthusiasts easily access system prompts that can help steer LLM behavior, discover productivity-boosting slash commands, build specialized AI agent skills for unique workflows and custom tools, and access on-device resources.

Some example agent skills that are available in the Community Hub include Microsoft Outlook email assistants, calendar agents, web searches and home assistant controllers, as well as agents for populating and even integrating custom application programming interface endpoints and services for a specific use case.

By enabling AI enthusiasts to download, customize and use agentic AI workflows on their own systems with full privacy, AnythingLLM is fueling innovation and making it easier to experiment with the latest technologies — whether building a spreadsheet assistant or tackling more advanced workflows.

Experience AnythingLLM now.

Powered by People, Driven by Innovation

AnythingLLM showcases how AI can go beyond answering questions to actively enhancing productivity and creativity. Such applications illustrate AI’s move toward becoming an essential collaborator across workflows.

Agentic AI’s potential applications are vast and require creativity, expertise and computing capabilities. NVIDIA RTX AI PCs deliver peak performance for running agents locally, whether accomplishing simple tasks like generating and distributing content, or managing more complex use cases such as orchestrating enterprise software.

Learn more and get started with agentic AI.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

With startup Zordi, founder Gilwoo Lee’s enthusiasm for robotics, healthy eating, better produce and sustainable farming has taken root.

Lee hadn’t even finished her Ph.D. in AI and robotics at the University of Washington when investors seeded her ambitious plans for autonomous agriculture. Since researcher-turned-entrepreneur Lee founded Zordi in 2020 with Casey Call, formerly head grower at vertical farming startup Plenty, the robotic grower of strawberries has landed its fruits in Wegmans and is now expanding with partner farms in New Jersey and California.

“The most rewarding part is that the fruits you get taste amazing,” said Lee. “You’re able to consistently do that throughout the cycle of the plant because you are constantly optimizing.”

The company has two types of robots within its hydroponic operations. One is a scouting robot for gathering information on the health of plants using foundational models. The other is a harvesting robot for delicately picking and placing fruits and handling other tasks.

Zordi, whose engineering team is based outside Boston, has farms in southern New Jersey and western New York. The company uses NVIDIA GPUs in the cloud and on desktops for training everything from crop health models to those for fruit picking and assessing fruit quality.

Lee aims to deploy autonomous greenhouse systems globally to support regional markets, cutting down on the carbon footprint for transportation as well as providing fresher, better-tasting fruits grown more sustainably.

Having operated greenhouses in New York and New Jersey for two years, the company recently formed partnerships with greenhouse farms in New Jersey and California to meet growing demand.

Zordi is bringing NVIDIA accelerated AI automation to indoor growing that in many ways is parallel to developments in manufacturing and fulfillment operations.

Adopting Jetson for Sustainable Farming, Energy Efficiency

Zordi is building AI models and robots to enable sustainable farming at scale. It uses NVIDIA Jetson AGX Orin modules to test out gathering sensor data and running its models, which recognize the health of plants, flowers and fruits, early pest and disease symptoms, and the needs for hydration and nutrition, as well as light and temperature management.

Jetson’s energy efficiency and the availability of low-cost, high-performance cameras from NVIDIA partners are attractive attributes for Zordi, said Lee. The company runs several cameras on each of its robots to collect data.

“Jetson opens up a lot of lower-cost cameras,” said Lee. “It lets us play with different cameras and gives us better battery management.”

With its scouting and harvesting robots, Zordi also aims to address a big issue farms worldwide complain about: a labor shortage that affects operations, leaving fruits and vegetables sometimes unattended and unharvested altogether.

Zordi is planning to scale up its growing operations to meet consumer demand. The company expects that it can do more with AI and robotic automation despite labor challenges.

“We want our harvesting robots to do more dexterous tasks, like pruning leaves, with the help of simulation,” said Lee.

Omniverse Isaac Sim and Digital Twins to Boost Operations

Zordi is looking at how to boost its indoor growing operations with AI much like industrial manufacturers do, using Isaac Sim in Omniverse for simulations and digital twins to optimize operations.

The company’s software platform for viewing all the data collected from its robots’ sensors provides a live dashboard with a spatial map. It offers a real-time view of every plant in its facilities so that it’s easy to monitor the production remotely.

What’s more, it analyzes plant health and makes optimal crop care recommendations using foundational models so that inexperienced farm operators can manage farms like experts.

“We’re literally one step away from putting this all into Isaac Sim and Omniverse,” said Lee, whose Ph.D. dissertation covered reinforcement learning and sim-to-real.

Zordi is working on gripping simulations for strawberries as well as for cucumbers and tomatoes to expand into other categories.

“With strawberries or any other crops, if you can handle them delicately, then it helps with longer shelf life,” Lee said.

Lee is optimistic that the simulations in Isaac Sim will not only boost Zordi’s performance in harvest, but also let it do other manipulation tasks in other scenarios.

Big picture, Zordi aims to create a fully autonomous farming system that makes farming easy and profitable, with AI recommending sustainable crop-care decisions and robots doing the hard work.

“What’s really important for us is how do we automate this, and how do we have a thinking AI that is actually making decisions for the farm with a lot of automations,” said Lee.

AI is changing industries and economies worldwide.

Workforce development is central to ensuring the changes benefit all of us, as Louis Stewart, head of strategic initiatives for NVIDIA’s global developer ecosystem, explains in the latest AI Podcast.

“AI is fueling a lot of change in all ecosystems right now,” Stewart said. “It’s disrupting how we think about traditional economic development — how states and countries plan, how they stay competitive globally, and how they develop their workforces.”

Providing AI education, embracing the technology and addressing workforce challenges are all critical for future workplace development.

“It starts with education,” Stewart said.

AI Education Crucial at All Levels

Educating people on what AI can do, and how the current generation of AI-powered tools works, is the starting point. AI education must come at all levels, according to Stewart. Higher education systems, in particular, need to be thinking about what’s coming next, so graduating students can optimize their employability.

“Graduates need to understand AI, and need to have had touches in AI,” he explained. Stewart emphasizes that this is broader than an engineering or a research challenge. “This is really a true workforce issue.”

Stewart points to Gwinnett County in Georgia as an early education example, where the community has developed a full K-16 curriculum.

“If young kids are already playing with AI on their phones, they should actually be thinking about it a little bit deeper,” he said. The idea, he explained, is for kids to move beyond simply using the tech to start seeing themselves as future creators of new technology, and being part of the broader evolution.

Nobody Gets Left Out

Beyond the classroom, a comprehensive view of AI education would expose people in the broader community to AI learning opportunities, Stewart said. His experience in the public sector informs his decidedly inclusive view on the matter.

Before joining NVIDIA four years ago, Stewart spent more than a decade working for the state of California, and then its capital city of Sacramento. He points to his time as Sacramento’s chief innovation officer to illustrate how important it is that all citizens be included in progress.

“Sacramento was trying to move into a place to be an innovation leader in the state and nationally. I knew the city because I grew up here, and I knew that there were areas of the city that would never see innovation unless it was brought to them,” he explained. “So if I was bringing autonomous cars to Sacramento, it was for the legislators, and it was for the CHP (California Highway Patrol), but it was also for the people.”

Stewart elaborated that everyone coming in touch with self-driving vehicles needed to understand their impact. There was the technology itself — how autonomous vehicles work, how to use them as a passenger and so forth.

But there were also broader questions, such as how mechanics would need new training to understand the computer systems powering autonomous cars. And how parents would need to understand self-driving vehicles from the point of view of getting their kids to and from school without having to miss work to do the driving themselves.

Just as individuals will have different needs and wants from AI systems, so too will different communities, businesses and states take different approaches when implementing AI, Stewart said.

Diverse Approaches to AI Implementation

Public-private partnerships are critical to implementing AI across the U.S. and beyond. NVIDIA is partnering with states and higher education systems across the country for AI workforce development. And the programs being put in place are just as diverse as the states themselves.

“Every state has their idea about what they want to do when it comes to AI,” Stewart explained.

Still, some common goals hold across state lines. When Stewart’s team engages a governor’s office with talk of AI to empower the workforce, create job opportunities, and improve collaboration, inclusivity and growth, he finds that state officials listen.

Stewart added that they often open up about what they’ve been working on. “We’ve been pleasantly surprised at how far along some of the states are with their AI strategies,” he said.

In August, NVIDIA announced it is working with the state of California to train 100,000 people on AI skills over the next three years. It’s an undertaking that will involve all 116 of the state’s community colleges and California’s university system. NVIDIA will also collaborate with the California human resources system to help state employees understand how AI skills may be incorporated into current and future jobs.

In Mississippi, a robust AI strategy is already in place.

The Mississippi Artificial Intelligence Network (MAIN) is one of the first statewide initiatives focused on addressing the emergence of AI and its effects on various industries’ workforces. MAIN works with educational partners that include community colleges and universities in Mississippi, all collaborating to facilitate AI education and training.

Embrace Technology, Embrace the Future

Stewart said it’s important to encourage individuals, businesses and other organizations to actively engage with AI tools and develop an understanding of how they’re benefiting the workforce landscape.

“Now is not the time to stay on the sidelines,” said Stewart. “This is the time to jump in and start understanding.”

Small businesses, for example, can start using applications like ChatGPT to see firsthand how they can transform operations. From there, Stewart suggests, a business could partner with the local school system to empower student interns to develop AI-powered tools and workflows for data analysis, marketing and other needs.

It’s a win-win: The business can transform itself with AI while playing a crucial part in developing the workforce by giving students valuable real-world experience.

It’s crucial that people get up to speed on the changes that AI is driving. And that we all participate in shaping our collective future, Stewart explained.

“Workforce development is, I think, at the crux of this next part of the conversation because the innovation and the research and everything surrounding AI is driving change so rapidly,” he said.

Hear more from NVIDIA’s Louis Stewart on workforce development opportunities in the latest AI Podcast.

A team of generative AI researchers created a Swiss Army knife for sound, one that allows users to control the audio output simply using text.

While some AI models can compose a song or modify a voice, none have the dexterity of the new offering.

Called Fugatto (short for Foundational Generative Audio Transformer Opus 1), it generates or transforms any mix of music, voices and sounds described with prompts using any combination of text and audio files.

For example, it can create a music snippet based on a text prompt, remove or add instruments from an existing song, change the accent or emotion in a voice — even let people produce sounds never heard before.

“This thing is wild,” said Ido Zmishlany, a multi-platinum producer and songwriter — and cofounder of One Take Audio, a member of the NVIDIA Inception program for cutting-edge startups. “Sound is my inspiration. It’s what moves me to create music. The idea that I can create entirely new sounds on the fly in the studio is incredible.”

A Sound Grasp of Audio

“We wanted to create a model that understands and generates sound like humans do,” said Rafael Valle, a manager of applied audio research at NVIDIA and one of the dozen-plus people behind Fugatto, as well as an orchestral conductor and composer.

Supporting numerous audio generation and transformation tasks, Fugatto is the first foundational generative AI model that showcases emergent properties — capabilities that arise from the interaction of its various trained abilities — and the ability to combine free-form instructions.

“Fugatto is our first step toward a future where unsupervised multitask learning in audio synthesis and transformation emerges from data and model scale,” Valle said.

A Sample Playlist of Use Cases

For example, music producers could use Fugatto to quickly prototype or edit an idea for a song, trying out different styles, voices and instruments. They could also add effects and enhance the overall audio quality of an existing track.

“The history of music is also a history of technology. The electric guitar gave the world rock and roll. When the sampler showed up, hip-hop was born,” said Zmishlany. “With AI, we’re writing the next chapter of music. We have a new instrument, a new tool for making music — and that’s super exciting.”

An ad agency could apply Fugatto to quickly target an existing campaign for multiple regions or situations, applying different accents and emotions to voiceovers.

Language learning tools could be personalized to use any voice a speaker chooses. Imagine an online course spoken in the voice of any family member or friend.

Video game developers could use the model to modify prerecorded assets in their title to fit the changing action as users play the game. Or, they could create new assets on the fly from text instructions and optional audio inputs.

Making a Joyful Noise

“One of the model’s capabilities we’re especially proud of is what we call the avocado chair,” said Valle, referring to a novel visual created by a generative AI model for imaging.

For instance, Fugatto can make a trumpet bark or a saxophone meow. Whatever users can describe, the model can create.

With fine-tuning and small amounts of singing data, researchers found it could handle tasks it was not pretrained on, like generating a high-quality singing voice from a text prompt.

Users Get Artistic Controls

Several capabilities add to Fugatto’s novelty.

During inference, the model uses a technique called ComposableART to combine instructions that were only seen separately during training. For example, a combination of prompts could ask for text spoken with a sad feeling in a French accent.

The model’s ability to interpolate between instructions gives users fine-grained control over text instructions, in this case the heaviness of the accent or the degree of sorrow.
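To make the idea of weighting instructions concrete, here is a small Python sketch of a weighted blend between two vectors. The post does not describe how ComposableART works internally, so this is not that technique: the three-dimensional vectors are hypothetical stand-ins for instruction representations, and `blend` is an illustrative helper.

```python
from typing import List, Sequence


def blend(vectors: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted average of equal-length vectors; weights are normalized to sum to 1."""
    if not vectors or len(vectors) != len(weights):
        raise ValueError("need one weight per vector")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(vectors[0])
    return [
        sum(w * v[i] for v, w in zip(vectors, weights)) / total
        for i in range(dim)
    ]


# Hypothetical stand-ins for two instruction representations.
sad = [1.0, 0.0, 0.0]
french_accent = [0.0, 1.0, 0.0]

# Three parts sorrow to one part accent.
mostly_sad = blend([sad, french_accent], [3.0, 1.0])
print(mostly_sad)  # [0.75, 0.25, 0.0]
```

Changing the weights moves the result smoothly between the two attributes, which is the kind of dial the paragraph above describes.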

“I wanted to let users combine attributes in a subjective or artistic way, selecting how much emphasis they put on each one,” said Rohan Badlani, an AI researcher who designed these aspects of the model.

“In my tests, the results were often surprising and made me feel a little bit like an artist, even though I’m a computer scientist,” said Badlani, who holds a master’s degree in computer science with a focus on AI from Stanford.

The model also generates sounds that change over time, a feature he calls temporal interpolation. It can, for instance, create the sounds of a rainstorm moving through an area with crescendos of thunder that slowly fade into the distance. It also gives users fine-grained control over how the soundscape evolves.
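One simple way to picture a soundscape that evolves over time is a schedule of weights that shifts from one sound toward another. The sketch below is an assumption for illustration, not Fugatto's method: it uses a plain linear crossfade, and `crossfade_schedule` is a hypothetical helper.

```python
from typing import List, Tuple


def crossfade_schedule(num_steps: int) -> List[Tuple[float, float]]:
    """Weights (start_sound, end_sound) moving linearly from (1, 0) to (0, 1)."""
    if num_steps < 2:
        raise ValueError("need at least two steps")
    last = num_steps - 1
    return [(1 - i / last, i / last) for i in range(num_steps)]


# Five time steps: thunder fades out while birdsong fades in.
for thunder, birdsong in crossfade_schedule(5):
    print(f"thunder={thunder:.2f} birdsong={birdsong:.2f}")
```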

Plus, unlike most models, which can only recreate the training data they’ve been exposed to, Fugatto allows users to create soundscapes it’s never seen before, such as a thunderstorm easing into a dawn with the sound of birds singing.

A Look Under the Hood

Fugatto is a foundational generative transformer model that builds on the team’s prior work in areas such as speech modeling, audio vocoding and audio understanding.

The full version uses 2.5 billion parameters and was trained on a bank of NVIDIA DGX systems packing 32 NVIDIA H100 Tensor Core GPUs.
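For a rough sense of scale, the parameter count can be turned into a weight-memory estimate. The 2.5 billion figure comes from the text; the numeric precisions are assumptions, since the post does not say which one the model uses, and the estimate covers weights only, not optimizer state or activations.

```python
PARAMS = 2_500_000_000  # parameter count stated in the text

# Assumed storage formats; the post does not specify the precision used.
BYTES_PER_PARAM = {"fp32": 4, "fp16 or bf16": 2}


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_memory_gb(PARAMS, size):.1f} GB")
```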

Fugatto was made by a diverse group of people from around the world, including India, Brazil, China, Jordan and South Korea. Their collaboration made Fugatto’s multi-accent and multilingual capabilities stronger.

One of the hardest parts of the effort was generating a blended dataset that contains millions of audio samples used for training. The team employed a multifaceted strategy to generate data and instructions that considerably expanded the range of tasks the model could perform, while achieving more accurate performance and enabling new tasks without requiring additional data.

They also scrutinized existing datasets to reveal new relationships among the data. The overall work spanned more than a year.

Valle remembers two moments when the team knew it was on to something. “The first time it generated music from a prompt, it blew our minds,” he said.

Later, the team demoed Fugatto responding to a prompt to create electronic music with dogs barking in time to the beat.

“When the group broke up with laughter, it really warmed my heart.”


Robots are moving goods in warehouses, packaging foods and helping assemble vehicles, bringing enhanced automation to use cases across industries.

There are two keys to their success: Physical AI and robotics simulation.

Physical AI describes AI models that can understand and interact with the physical world. Physical AI embodies the next wave of autonomous machines and robots, such as self-driving cars, industrial manipulators, mobile robots, humanoids and even robot-run infrastructure like factories and warehouses.

With virtual commissioning of robots in digital worlds, robots are first trained using robotic simulation software before they are deployed for real-world use cases.

Robotics Simulation Summarized

An advanced robotics simulator facilitates robot learning and testing of virtual robots without requiring the physical robot. By applying physics principles and replicating real-world conditions, these simulators generate synthetic datasets to train machine learning models for deployment on physical robots.
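As a toy illustration of generating synthetic training data from physics, the sketch below randomizes the launch conditions of an ideal projectile and records a noisy "sensor" reading of where it lands. It is far simpler than a robotics simulator such as Isaac Sim, and the parameter ranges, noise level and field names are all assumptions.

```python
import math
import random
from typing import Dict, List

G = 9.81  # gravitational acceleration, m/s^2


def landing_distance(speed: float, angle_deg: float) -> float:
    """Range of an ideal projectile launched from ground level (no drag)."""
    return speed ** 2 * math.sin(2 * math.radians(angle_deg)) / G


def make_dataset(n: int, seed: int = 0, noise_std: float = 0.05) -> List[Dict[str, float]]:
    """Randomize launch conditions and add Gaussian noise to the measured range."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        speed = rng.uniform(1.0, 10.0)   # m/s, assumed range
        angle = rng.uniform(10.0, 80.0)  # degrees, assumed range
        true_range = landing_distance(speed, angle)
        samples.append({
            "speed": speed,
            "angle_deg": angle,
            "measured_range": true_range + rng.gauss(0.0, noise_std),
            "true_range": true_range,  # ground-truth label from the physics model
        })
    return samples


dataset = make_dataset(1000)
print(len(dataset), sorted(dataset[0].keys()))
```

Because the simulator knows the ground truth, every sample comes with a perfect label, which is the main appeal of synthetic data for training.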

Simulations are used for initial AI model training and then to validate the entire software stack, minimizing the need for physical robots during testing. NVIDIA Isaac Sim, a reference application built on the NVIDIA Omniverse platform, provides accurate visualizations and supports Universal Scene Description (OpenUSD)-based workflows for advanced robot simulation and validation.

NVIDIA’s 3 Computer Framework Facilitates Robot Simulation

Three computers are needed to train and deploy robot technology.

- A supercomputer to train and fine-tune powerful foundation and generative AI models.

- A development platform for robotics simulation and testing.

- An onboard runtime computer to deploy trained models to physical robots.

Only after adequate training in simulated environments can physical robots be commissioned.

The NVIDIA DGX platform can serve as the first computing system to train models.

NVIDIA Omniverse running on NVIDIA OVX servers functions as the second computer system, providing the development platform and simulation environment for testing, optimizing and debugging physical AI.

NVIDIA Jetson Thor robotics computers designed for onboard computing serve as the third runtime computer.

Who Uses Robotics Simulation?

Today, robot technology and robot simulations boost operations massively across use cases.

Global leader in power and thermal technologies Delta Electronics uses simulation to test out its optical inspection algorithms to detect product defects on production lines.

Deep tech startup Wandelbots is building a custom simulator by integrating Isaac Sim into its application, making it easy for end users to program robotic work cells in simulation and seamlessly transfer models to a real robot.

Boston Dynamics is activating researchers and developers through its reinforcement learning researcher kit.

Robotics company Fourier is simulating real-world conditions to train humanoid robots with the precision and agility needed for close robot-human collaboration.

Using NVIDIA Isaac Sim, robotics company Galbot built DexGraspNet, a comprehensive simulated dataset for dexterous robotic grasps containing over 1 million ShadowHand grasps on 5,300+ objects. The dataset can be applied to any dexterous robotic hand to accomplish complex tasks that require fine-motor skills.

Using Robotics Simulation for Planning and Control Outcomes

In complex and dynamic industrial settings, robotics simulation is evolving to integrate digital twins, enhancing planning, control and learning outcomes.

Developers import computer-aided design models into a robotics simulator to build virtual scenes and employ algorithms to create the robot operating system and enable task and motion planning. While traditional methods involve prescribing control signals, the shift toward machine learning allows robots to learn behaviors through methods like imitation and reinforcement learning, using simulated sensor signals.
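The contrast between prescribing control signals and learning a behavior can be shown with a very small example. The sketch below uses tabular Q-learning, a basic reinforcement learning algorithm chosen here for illustration, on a made-up five-position corridor. The environment, rewards and hyperparameters are assumptions and have nothing to do with a specific robot or simulator.

```python
import random

N_STATES = 5        # positions 0..4; the goal is position 4
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Simulated environment: returns (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else -0.01), done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error in the simulated corridor."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                a = 0 if q[state][0] > q[state][1] else 1  # exploit
            nxt, reward, done = step(state, ACTIONS[a])
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q_table = train()
# Greedy action for each non-goal position.
policy = [ACTIONS[0 if q[0] > q[1] else 1] for q in q_table[:-1]]
print(policy)
```

Nobody tells the agent to move right. It discovers that from the rewards the simulated environment returns, which is the shift the paragraph above describes.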

This evolution continues with digital twins in complex facilities like manufacturing assembly lines, where developers can test and refine real-time AIs entirely in simulation. This approach saves software development time and costs, and reduces downtime by anticipating issues. For instance, using NVIDIA Omniverse, Metropolis and cuOpt, developers can use digital twins to develop, test and refine physical AI in simulation before deploying in industrial infrastructure.

High-Fidelity, Physics-Based Simulation Breakthroughs

High-fidelity, physics-based simulations have supercharged industrial robotics through real-world experimentation in virtual environments.

NVIDIA PhysX, integrated into Omniverse and Isaac Sim, empowers roboticists to develop fine- and gross-motor skills for robot manipulators, rigid and soft body dynamics, vehicle dynamics and other critical features that ensure the robot obeys the laws of physics. This includes precise control over actuators and modeling of kinematics, which are essential for accurate robot movements.

To close the sim-to-real gap, Isaac Lab offers a high-fidelity, open-source framework for reinforcement learning and imitation learning that facilitates seamless policy transfer from simulated environments to physical robots. With GPU parallelization, Isaac Lab accelerates training and improves performance, making complex tasks more achievable and safe for industrial robots.

To learn more about creating a locomotion reinforcement learning policy with Isaac Sim and Isaac Lab, read this developer blog.

Teaching Collision-Free Motion for Autonomy

Industrial robot training often occurs in specific settings like factories or fulfillment centers, where simulations help address challenges related to various robot types and chaotic environments. A critical aspect of these simulations is generating collision-free motion in unknown, cluttered environments.

Traditional motion planning approaches that attempt to address these challenges can come up short in unknown or dynamic environments. SLAM, or simultaneous localization and mapping, can be used to generate 3D maps of environments with camera images from multiple viewpoints. However, these maps require revisions when objects move or the environment changes.

The NVIDIA Robotics research team and the University of Washington introduced Motion Policy Networks (MπNets), an end-to-end neural policy that generates real-time, collision-free motion using a single fixed camera’s data stream. Trained on over 3 million motion planning problems and 700 million simulated point clouds, MπNets navigates unknown real-world environments effectively.

While the MπNets model applies direct learning for trajectories, the team also developed a point cloud-based collision model called CabiNet, trained on over 650,000 procedurally generated simulated scenes.

With the CabiNet model, developers can deploy general-purpose pick-and-place policies for unknown objects beyond a flat tabletop setup. Training with a large synthetic dataset allowed the model to generalize to out-of-distribution scenes in a real kitchen environment, without needing any real data.

How Developers Can Get Started Building Robotic Simulators

Get started with technical resources, reference applications and other solutions for developing physically accurate simulation pipelines by visiting the NVIDIA Robotics simulation use case page.

Robot developers can tap into NVIDIA Isaac Sim, which supports multiple robot training techniques:

- Synthetic data generation for training perception AI models

- Software-in-the-loop testing for the entire robot stack

- Robot policy training with Isaac Lab

Developers can also pair ROS 2 with Isaac Sim to train, simulate and validate their robot systems. The Isaac Sim to ROS 2 workflow is similar to workflows executed with other robot simulators such as Gazebo. It starts by bringing a robot model into a prebuilt Isaac Sim environment and adding sensors to the robot. The relevant components are then connected to the ROS 2 action graph, and the robot is simulated by controlling it through ROS 2 packages.

Stay up to date by subscribing to our newsletter and follow NVIDIA Robotics on LinkedIn, Instagram, X and Facebook.

Editor’s note: This post is the first in the AI On blog series, which explores the latest techniques and real-world applications of agentic AI, chatbots and copilots. The series will also highlight the NVIDIA software and hardware powering advanced AI agents, which form the foundation of AI query engines that gather insights and perform tasks to transform everyday experiences and reshape industries.

Whether it’s getting a complex service claim resolved or having a simple purchase inquiry answered, customers expect timely, accurate responses to their requests.

AI agents can help organizations meet this need. And they can grow in scope and scale as businesses grow, helping keep customers from taking their business elsewhere.

AI agents can be used as virtual assistants, which use artificial intelligence and natural language processing to handle high volumes of customer service requests. By automating routine tasks, AI agents ease the workload on human agents, allowing them to focus on tasks requiring a more personal touch.

AI-powered customer service tools like chatbots have become table stakes across every industry looking to increase efficiency and keep buyers happy. According to a recent IDC study on conversational AI, 41% of organizations use AI-powered copilots for customer service and 60% have implemented them for IT help desks.

Now, many of those same industries are looking to adopt agentic AI, semi-autonomous tools that have the ability to perceive, reason and act on more complex problems.

How AI Agents Enhance Customer Service

A primary value of AI-powered systems is the time they free up by automating routine tasks. AI agents can perform specific tasks, or agentic operations, essentially becoming part of an organization’s workforce — working alongside humans who can focus on more complex customer issues.

AI agents can handle predictive tasks and problem-solve, can be trained to understand industry-specific terms and can pull relevant information from an organization’s knowledge bases, wherever that data resides.

With AI agents, companies can:

- Boost efficiency: AI agents handle common questions and repetitive tasks, allowing support teams to prioritize more complicated cases. This is especially useful during high-demand periods.

- Increase customer satisfaction: Faster, more personalized interactions result in happier and more loyal customers. Consistent and accurate support improves customer sentiment and experience.

- Scale easily: Equipped to handle high volumes of customer support requests, AI agents scale effortlessly with growing businesses, reducing customer wait times and resolving issues faster.

AI Agents for Customer Service Across Industries

AI agents are transforming customer service across sectors, helping companies enhance customer conversations, achieve high resolution rates and improve human representative productivity.

For instance, ServiceNow recently introduced IT and customer service management AI agents to boost productivity by autonomously solving many employee and customer issues. Its agents can understand context, create step-by-step resolutions and get live agent approvals when needed.

To improve patient care and reduce preprocedure anxiety, The Ottawa Hospital is using AI agents that have consistent, accurate and continuous access to information. The agents have the potential to improve patient care and reduce administrative tasks for doctors and nurses.

The city of Amarillo, Texas, uses a multilingual digital assistant named Emma to provide its residents with 24/7 support. Emma brings more effective and efficient disbursement of important information to all residents, including the one-quarter who don’t speak English.

AI agents meet current customer service demands while preparing organizations for the future.

Key Steps for Designing AI Virtual Assistants for Customer Support

AI agents for customer service come in a wide range of designs, from simple text-based virtual assistants that resolve customer issues, to animated avatars that can provide a more human-like experience.

Digital human interfaces can add warmth and personality to the customer experience. These agents respond with spoken language and even animated avatars, enhancing service interactions with a touch of real-world flair. A digital human interface lets companies customize the assistant’s appearance and tone, aligning it with the brand’s identity.

There are three key building blocks to creating an effective AI agent for customer service:

- Collect and organize customer data: AI agents need a solid base of customer data (such as profiles, past interactions, and transaction histories) to provide accurate, context-aware responses.

- Use memory functions for personalization: Advanced AI systems remember past interactions, allowing agents to deliver personalized support that feels human.

- Build an operations pipeline: Customer service teams should regularly review feedback and update the AI agent’s responses to ensure it’s always improving and aligned with business goals.
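
The first two building blocks can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `InteractionMemory` class, the `build_prompt` helper and the profile fields are hypothetical names invented for this example, and a real deployment would persist data in a database rather than in memory.

```python
from collections import defaultdict, deque


class InteractionMemory:
    """Hypothetical per-customer memory that keeps only the most recent notes."""

    def __init__(self, max_items=3):
        # Each customer gets a bounded queue, so old notes fall off automatically.
        self._history = defaultdict(lambda: deque(maxlen=max_items))

    def remember(self, customer_id, note):
        self._history[customer_id].append(note)

    def context_for(self, customer_id):
        """Return remembered notes, oldest first; empty list for unknown customers."""
        return list(self._history.get(customer_id, ()))


def build_prompt(profile, memory, customer_id, question):
    """Combine organized customer data and remembered interactions into one prompt."""
    lines = [f"Customer: {profile['name']} (plan: {profile['plan']})"]
    lines += [f"Earlier: {note}" for note in memory.context_for(customer_id)]
    lines.append(f"Question: {question}")
    return "\n".join(lines)


memory = InteractionMemory(max_items=2)
memory.remember("cust-42", "Asked about a late delivery")
memory.remember("cust-42", "Requested a refund")
memory.remember("cust-42", "Refund approved")

profile = {"name": "Ada", "plan": "premium"}  # illustrative profile fields
prompt = build_prompt(profile, memory, "cust-42", "Where is my refund?")
print(prompt)
```

Because the memory is capped at two notes here, the oldest interaction is dropped and only the two most recent ones reach the prompt. The third building block, regular review of feedback, is an operational process and is left out of the sketch.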

Powering AI Agents With NVIDIA NIM Microservices

NVIDIA NIM microservices power AI agents by enabling natural language processing, contextual retrieval and multilingual communication. This allows AI agents to deliver fast, personalized and accurate support tailored to diverse customer needs.

Key NVIDIA NIM microservices for customer service agents include:

NVIDIA NIM for Large Language Models — Microservices that bring advanced language models to applications and enable complex reasoning, so AI agents can understand complicated customer queries.

NVIDIA NeMo Retriever NIM — Embedding and reranking microservices that support retrieval-augmented generation pipelines. They allow virtual assistants to quickly access enterprise knowledge bases and boost retrieval performance by ranking relevant knowledge-base articles and improving context accuracy.

NVIDIA NIM for Digital Humans — Microservices that enable intelligent, interactive avatars to understand speech and respond in a natural way. NVIDIA Riva NIM microservices for text-to-speech, automatic speech recognition (ASR), and translation services enable AI agents to communicate naturally across languages. The recently released Riva NIM microservices for ASR enable additional multilingual enhancements. To build realistic avatars, Audio2Face NIM converts streamed audio to facial movements for real-time lip syncing. 2D and 3D Audio2Face NIM microservices support varying use cases.
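
To make the embed-then-rerank idea behind retrieval-augmented generation concrete, here is a toy two-stage retrieval sketch. It does not call NeMo Retriever or any NIM API: the bag-of-words `embed` function and the term-coverage `rerank` scorer are simple stand-ins chosen for illustration, and the sample articles are invented.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, articles, k=2):
    """Stage 1: return the k articles closest to the query in embedding space."""
    q = embed(query)
    return sorted(articles, key=lambda doc: cosine(q, embed(doc)), reverse=True)[:k]


def rerank(query, candidates):
    """Stage 2: reorder candidates by the fraction of query terms each one covers."""
    terms = set(query.lower().split())

    def coverage(doc):
        return len(terms & set(doc.lower().split())) / max(len(terms), 1)

    return sorted(candidates, key=coverage, reverse=True)


articles = [
    "reset your password from the account settings page",
    "track a refund for a returned order",
    "update the shipping address on an open order",
]
query = "how do i reset my password"

best = rerank(query, retrieve(query, articles))[0]
print(best)
```

The split mirrors the usual design choice: a cheap similarity search narrows the knowledge base to a few candidates, and a more careful scorer orders only those candidates before they are handed to the language model as context.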

Getting Started With AI Agents for Customer Service

NVIDIA AI Blueprints make it easy to start building and setting up virtual assistants by offering ready-made workflows and tools to accelerate deployment. Whether for a simple AI-powered chatbot or a fully animated digital human interface, the blueprints offer resources to create AI assistants that are scalable, aligned with an organization’s brand and deliver a responsive, efficient customer support experience.

Editor’s note: IDC figures are sourced to IDC, Market Analysis Perspective: Worldwide Conversational AI Tools and Technologies, 2024, US51619524, Sept. 2024.

Editor’s note: This post is part of Into the Omniverse, a blog series focused on how developers, 3D artists and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

3D product configurators are changing the way industries like retail and automotive engage with customers by offering interactive, customizable 3D visualizations of products.

Using physically accurate product digital twins, even non-3D artists can streamline content creation and generate stunning marketing visuals.

With the new NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI, developers can start using the NVIDIA Omniverse platform and Universal Scene Description (OpenUSD) to easily build personalized, on-brand and product-accurate marketing content at scale.

By integrating generative AI into product configurators, developers can optimize operations and reduce production costs. With repetitive tasks automated, teams can focus on the creative aspects of their jobs.

Developing Controllable Generative AI for Content Production

The new Omniverse Blueprint introduces a robust framework for integrating generative AI into 3D workflows to enable precise and controlled asset creation.

Example images created using the NVIDIA Omniverse Blueprint for 3D conditioning for precise visual generative AI.

Key highlights of the blueprint include:

- Model conditioning to ensure that the AI-generated visuals adhere to specific brand requirements like colors and logos.

- Multimodal approach that combines 3D and 2D techniques to offer developers complete control over final visual outputs while ensuring the product’s digital twin remains accurate.

- Key components such as an on-brand hero asset, a simple and untextured 3D scene, and a customizable application built with the Omniverse Kit App Template.

- OpenUSD integration to enhance development of 3D visuals with precise visual generative AI.

- Integration of NVIDIA NIM microservices, such as the Edify 360 NIM, Edify 3D NIM, USD Code NIM and USD Search NIM, to make the blueprint extensible and customizable. The microservices are available to preview on build.nvidia.com.

How Developers Are Building AI-Enabled Content Pipelines

Katana Studio developed a content creation tool with OpenUSD called COATcreate that empowers marketing teams to rapidly produce 3D content for automotive advertising. By using 3D data prepared by creative experts and vetted by product specialists in OpenUSD, even users with limited artistic experience can quickly create customized, high-fidelity, on-brand content for any region or use case without adding to production costs.

Global marketing leader WPP has built a generative AI content engine for brand advertising with OpenUSD. The Omniverse Blueprint for precise visual generative AI helped facilitate the integration of controllable generative AI in its content creation tools. Leading global brands like The Coca-Cola Company are already beginning to adopt tools from WPP to accelerate iteration on their creative campaigns at scale.

Watch the replay of a recent livestream with WPP for more on its generative AI- and OpenUSD-enabled workflow.

The NVIDIA creative team developed a reference workflow called CineBuilder on Omniverse that allows companies to use text prompts to generate ads personalized to consumers based on region, weather, time of day, lifestyle and aesthetic preferences.

Developers at independent software vendors and production services agencies are building content creation solutions infused with controllable generative AI and built on OpenUSD. Accenture Song, Collective World, Grip, Monks and WPP are among those adopting Omniverse Blueprints to accelerate development.

Read the tech blog on developing product configurators with OpenUSD and get started developing solutions using the DENZA N7 3D configurator and CineBuilder reference workflow.

Get Plugged Into the World of OpenUSD

Various resources are available to help developers get started building AI-enabled product configuration solutions:

- Omniverse Blueprint: 3D Conditioning for Precise Visual Generative AI

- Reference Architecture: 3D Conditioning for Precise Visual Generative AI

- Reference Architecture: Generative AI Workflow for Content Creation

- Reference Architecture: Product Configurator

- End-to-End Configurator Example Guide

- DLI Course: Building a 3D Product Configurator With OpenUSD

- Livestream: OpenUSD for Marketing and Advertising

For more on optimizing OpenUSD workflows, explore the new self-paced Learn OpenUSD training curriculum that includes free Deep Learning Institute courses for 3D practitioners and developers. For more resources on OpenUSD, attend our instructor-led Learn OpenUSD courses at SIGGRAPH Asia on December 3, explore the Alliance for OpenUSD forum and visit the AOUSD website.

Don’t miss the CES keynote delivered by NVIDIA founder and CEO Jensen Huang live in Las Vegas on Monday, Jan. 6, at 6:30 p.m. PT for more on the future of AI and graphics.

Stay up to date by subscribing to NVIDIA news, joining the community and following NVIDIA Omniverse on Instagram, LinkedIn, Medium and X.

Get ready to dive deeper into the criminal underworld of a galaxy far, far away as GeForce NOW brings the first major story pack for Star Wars Outlaws to the cloud this week.

The season of giving continues — GeForce NOW members can access a new free reward: a special in-game Star Wars Outlaws enhancement.

It’s all part of an exciting GFN Thursday, topped with five new games joining the more than 2,000 titles supported in the GeForce NOW library, including the launch of S.T.A.L.K.E.R. 2: Heart of Chornobyl and Xbox Game Studios fan favorites Fallout 3: Game of the Year Edition and The Elder Scrolls IV: Oblivion.

And make sure not to pass this opportunity up — gamers who want to take the Performance and Ultimate memberships for a spin can do so with 25% off Day Passes, now through Friday, Nov. 22. Day Passes give access to 24 continuous hours of powerful cloud gaming.

A New Saga Begins

The galaxy’s most electrifying escapade gets even more exciting with the new Wild Card story pack for Star Wars Outlaws.

This thrilling story pack invites scoundrels to join forces with the galaxy’s smoothest operator, Lando Calrissian, for a high-stakes Sabacc tournament that’ll keep players on the edge of their seats. As Kay Vess, gamers bluff, charm and blast their way through new challenges, exploring uncharted corners of the Star Wars galaxy. Meanwhile, a free update will scatter fresh Contract missions across the stars, offering members ample opportunities to build their reputations and line their pockets with credits.

To kick off this thrilling underworld adventure, GeForce NOW members are in for a special reward with the Forest Commando Character Pack.

The pack gives Kay and Nix, her loyal companion, a complete set of gear that’s perfect for missions in lush forest worlds. Get equipped with tactical trousers, a Bantha leather belt loaded with attachments, a covert poncho to shield against jungle rain and a hood for Nix that’s great for concealment in thick forests.

Members of the GeForce NOW rewards program can check their email for instructions on how to claim the reward. Ultimate and Performance members can start redeeming style packages today. Don’t miss out — this offer is available through Saturday, Dec. 21, on a first-come, first-served basis.

Welcome to the Zone

S.T.A.L.K.E.R. 2: Heart of Chornobyl, the highly anticipated sequel in the cult-classic S.T.A.L.K.E.R. series, is a first-person-shooter survival-horror game set in the Chornobyl Exclusion Zone.

In the game — which blends postapocalyptic fiction with Ukrainian folklore and the eerie reality of the Chornobyl disaster — players can explore a vast open world filled with mutated creatures, anomalies and other stalkers while uncovering the zone’s secrets and battling for survival.

The title features advanced graphics and physics powered by Unreal Engine 5 for stunningly realistic and detailed environments. Players’ choices impact the game world and narrative, which comprises a nonlinear storyline with multiple possible endings.

Players will take on challenging survival mechanics to test their skills and decision-making abilities. Members can make their own epic story with a Performance membership for enhanced GeForce RTX-powered streaming at 1440p or an Ultimate membership for up to 4K 120 frames per second streaming, offering the crispest visuals and smoothest gameplay.

Adventures Await

Members can emerge from Vault 101 into the irradiated ruins of Washington, D.C., in Fallout 3: Game of the Year Edition, which includes all five downloadable content packs released for Fallout 3. Experience the game that redefined the postapocalyptic genre with its morally ambiguous choices, memorable characters and the innovative V.A.T.S. combat system. Whether revisiting the Capital Wasteland, exploring the Mojave Desert or delving into the realm of Cyrodiil, these iconic titles have never looked or played better thanks to the power of GeForce NOW’s cloud streaming technology.

Members can look for the following games available to stream in the cloud this week:

- Towers of Aghasba (New release on Steam, Nov. 19)

- S.T.A.L.K.E.R. 2: Heart of Chornobyl (New release on Steam and Xbox, available on PC Game Pass, Nov. 20)

- Star Wars Outlaws (New release on Steam, Nov. 21)

- The Elder Scrolls IV: Oblivion Game of the Year Edition (Epic Games Store, Steam and Xbox, available on PC Game Pass)

- Fallout 3: Game of the Year Edition (Epic Games Store, Steam and Xbox, available on PC Game Pass)

What are you planning to play this weekend? Let us know on X or in the comments below.

“which sci-fi series or movie would make a great game?”

NVIDIA GeForce NOW (@NVIDIAGFN), November 20, 2024

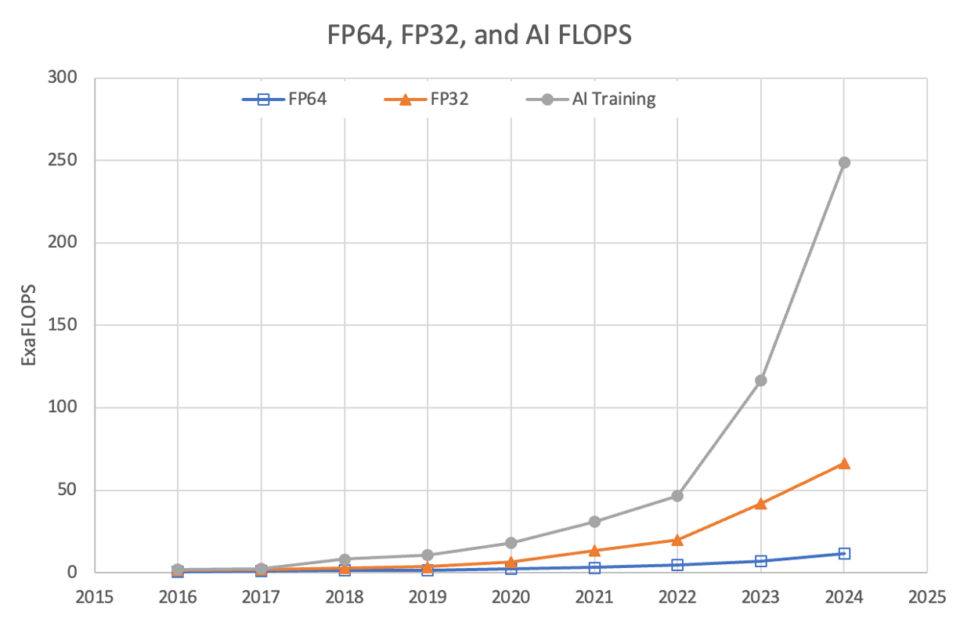

Starting with the release of CUDA in 2006, NVIDIA has driven advancements in AI and accelerated computing — and the most recent TOP500 list of the world’s most powerful supercomputers highlights the culmination of the company’s achievements in the field.

This year, 384 systems on the TOP500 list are powered by NVIDIA technologies. Among the 53 new to the list, 87% — 46 systems — are accelerated. Of those accelerated systems, 85% use NVIDIA Hopper GPUs, driving advancements in areas like climate forecasting, drug discovery and quantum simulation.
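
The percentages above can be checked with a few lines of arithmetic. The inputs come from the figures quoted in this post; the Hopper system count is a derived estimate (85% of the 46 accelerated systems, rounded), not a number stated in the post.

```python
new_systems = 53   # systems new to the list, per the post
accelerated = 46   # accelerated systems among them, per the post

accelerated_share = accelerated / new_systems

# Derived estimate: 85% of the accelerated systems, rounded to whole systems.
hopper_estimate = round(0.85 * accelerated)

print(f"accelerated share of new systems: {accelerated_share:.0%}")
print(f"estimated Hopper-based systems: {hopper_estimate}")
```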

Accelerated computing is much more than floating point operations per second (FLOPS). It requires full-stack, application-specific optimization. At SC24 this week, NVIDIA announced the release of cuPyNumeric, an NVIDIA CUDA-X library that enables over 5 million developers to seamlessly scale to powerful computing clusters without modifying their Python code.
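
As a rough sketch of what "without modifying their Python code" can look like in practice, the example below assumes cuPyNumeric is importable as `cupynumeric` and falls back to NumPy when it is not installed. The `mean_kinetic_energy` function and its inputs are invented for illustration; only the import line differs between the two back ends.

```python
# Assumption: cuPyNumeric is importable as `cupynumeric`.
# The fallback keeps the sketch runnable where only NumPy is installed.
try:
    import cupynumeric as np
except ImportError:
    import numpy as np


def mean_kinetic_energy(masses, velocities):
    """Return the mean of 0.5 * m * v^2 over all particles."""
    return float((0.5 * masses * velocities ** 2).mean())


masses = np.full(4, 2.0)                      # four particles of mass 2.0
velocities = np.array([1.0, 2.0, 3.0, 4.0])   # one speed per particle

energy = mean_kinetic_energy(masses, velocities)
print(energy)
```

The array code itself is unchanged between back ends, which is the point of a drop-in replacement: scaling out becomes a matter of which module is bound to `np` and how the script is launched.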

NVIDIA also revealed significant updates to the NVIDIA CUDA-Q development platform, which empowers quantum researchers to simulate quantum devices at a scale previously thought computationally impossible.

In addition, NVIDIA received nearly a dozen HPCwire Readers’ and Editors’ Choice awards across a variety of categories, marking its 20th consecutive year of recognition.

A New Era of Scientific Discovery With Mixed Precision and AI

Mixed-precision floating-point operations and AI have become the tools of choice for researchers grappling with the complexities of modern science. They offer greater speed, efficiency and adaptability than traditional methods, without compromising accuracy.

This shift isn’t just theoretical — it’s already happening. At SC24, two Gordon Bell finalist projects revealed how using AI and mixed precision helped advance genomics and protein design.

In his paper titled “Using Mixed Precision for Genomics,” David Keyes, a professor at King Abdullah University of Science and Technology, used 0.8 exaflops of mixed-precision performance to explore how genomes relate to their generalized genotypes and, in turn, to the prevalence of diseases to which they are subject.

Similarly, Arvind Ramanathan, a computational biologist from the Argonne National Laboratory, harnessed 3 exaflops of AI performance on the NVIDIA Grace Hopper-powered Alps system to speed up protein design.

To further advance AI-driven drug discovery and the development of lifesaving therapies, researchers can use NVIDIA BioNeMo, powerful tools designed specifically for pharmaceutical applications. Now in open source, the BioNeMo Framework can accelerate AI model creation, customization and deployment for drug discovery and molecular design.

Across the TOP500, the widespread use of AI and mixed-precision floating-point operations reflects a global shift in computing priorities. A total of 249 exaflops of AI performance are now available to TOP500 systems, supercharging innovations and discoveries across industries.

NVIDIA-accelerated TOP500 systems excel across key metrics like AI and mixed-precision system performance. With over 190 exaflops of AI performance and 17 exaflops of single-precision (FP32) performance, NVIDIA’s accelerated computing platform is the new engine of scientific computing. NVIDIA also delivers 4 exaflops of double-precision (FP64) performance for certain scientific calculations that still require it.

Accelerated Computing Is Sustainable Computing

As the demand for computational capacity grows, so does the need for sustainability.

In the Green500 list of the world’s most energy-efficient supercomputers, systems with NVIDIA accelerated computing account for eight of the top 10. The JEDI system at EuroHPC/FZJ, for example, achieves a staggering 72.7 gigaflops per watt, setting a benchmark for what’s possible when performance and sustainability align.

For climate forecasting, NVIDIA announced at SC24 two new NVIDIA NIM microservices for NVIDIA Earth-2, a digital twin platform for simulating and visualizing weather and climate conditions. The CorrDiff NIM and FourCastNet NIM microservices can accelerate climate change modeling and simulation results by up to 500x.

In a world increasingly conscious of its environmental footprint, NVIDIA’s innovations in accelerated computing balance high performance with energy efficiency to help realize a brighter, more sustainable future.

Supercomputing Community Embraces NVIDIA

The 11 HPCwire Readers’ Choice and Editors’ Choice awards NVIDIA received represent the work of the entire scientific community of engineers, developers, researchers, partners, customers and more.

The awards include:

- Readers’ Choice: Best AI Product or Technology – NVIDIA GH200 Grace Hopper Superchip

- Readers’ Choice: Best HPC Interconnect Product or Technology – NVIDIA Quantum-X800

- Readers’ Choice: Best HPC Server Product or Technology – NVIDIA Grace CPU Superchip

- Readers’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Quantum-X800

- Readers’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Spectrum-X

- Readers’ and Editors’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA Blackwell GPU

- Editors’ Choice: Top 5 New Products or Technologies to Watch – NVIDIA CUDA-Q

- Readers’ Choice: Top 5 Vendors to Watch – NVIDIA

- Readers’ Choice: Best HPC Response to Societal Plight – NVIDIA Earth-2

- Editors’ Choice: Best Use of HPC in Energy (one of two named contributors) – Real-time simulation of CO2 plume migration in carbon capture and storage

- Readers’ Choice: Best HPC Collaboration (one of 11 named contributors) – National Artificial Intelligence Research Resource Pilot

Watch the replay of NVIDIA’s special address at SC24 and learn more about the company’s news in the SC24 online press kit.

See notice regarding software product information.

As COP29 attendees gather in Baku, Azerbaijan, to tackle climate change, the role AI plays in environmental sustainability is front and center.

A panel hosted by Deloitte brought together industry leaders to explore ways to reduce AI’s environmental footprint and align its growth with climate goals.

Experts from Crusoe Energy Systems, EON, the International Energy Agency (IEA) and NVIDIA sat down for a conversation about the energy efficiency of AI.

The Environmental Impact of AI

Deloitte’s recent report, “Powering Artificial Intelligence: A study of AI’s environmental footprint,” shows AI’s potential to drive a climate-neutral economy. The study looks at how organizations can achieve “Green AI” in the coming decades and addresses AI’s energy use.

Deloitte analysis predicts that AI adoption will fuel data center power demand, likely reaching 1,000 terawatt-hours (TWh) by 2030, and potentially climbing to 2,000 TWh by 2050. This will account for 3% of global electricity consumption, indicating faster growth than in other uses like electric cars and green hydrogen production.

While data centers currently consume around 2% of total electricity, and AI is a small fraction of that, the discussion at COP29 emphasized the need to meet rising energy demands with clean energy sources to support global climate goals.

Energy Efficiency From the Ground Up

NVIDIA is prioritizing energy-efficient data center operations with innovations like liquid-cooled GPUs. Direct-to-chip liquid cooling allows data centers to cool systems more effectively than traditional air conditioning, consuming less power and water.

“We see a very rapid trend toward direct-to-chip liquid cooling, which means water demands in data centers are dropping dramatically right now,” said Josh Parker, senior director of legal – corporate sustainability at NVIDIA.

As AI continues to scale, the future of data centers will hinge on designing for energy efficiency from the outset. By prioritizing energy efficiency from the ground up, data centers can meet the growing demands of AI while contributing to a more sustainable future.

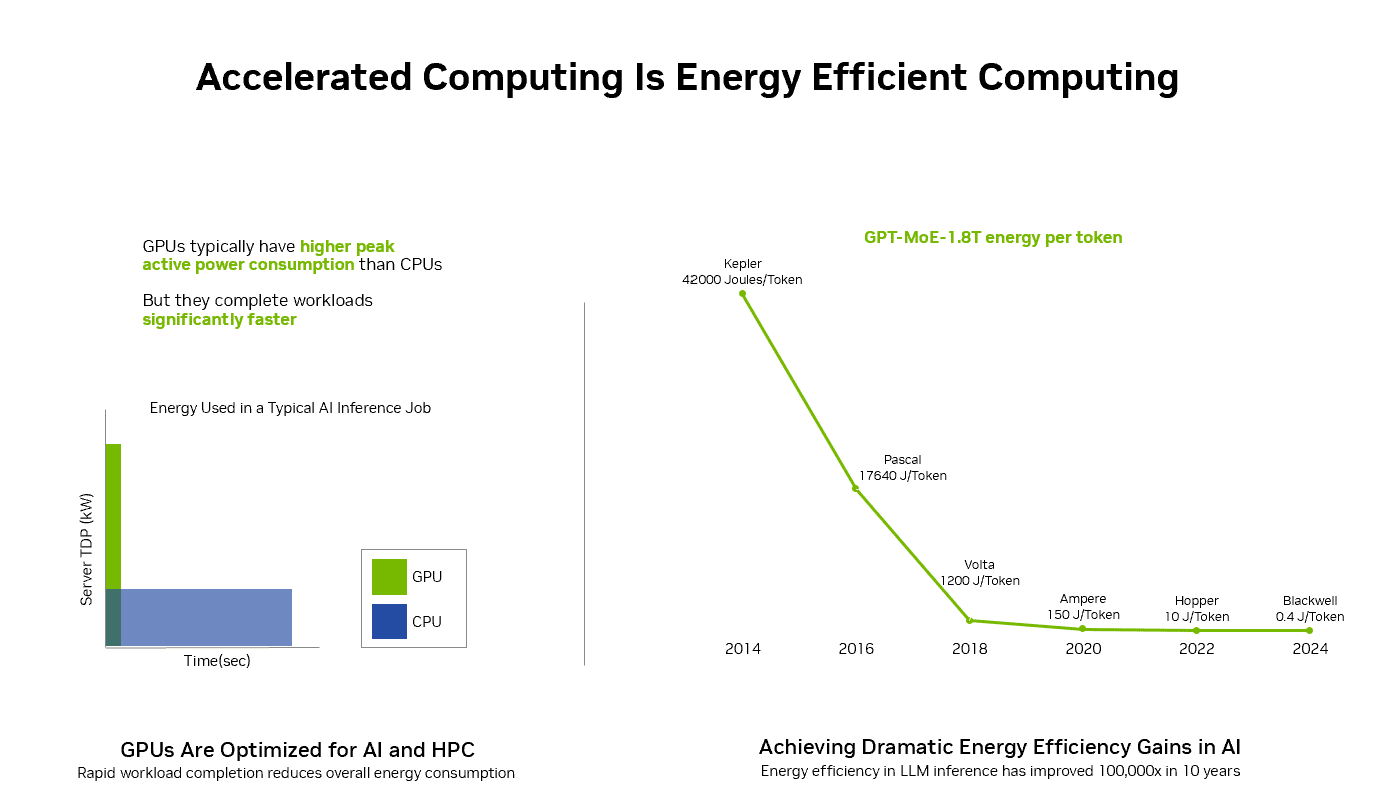

Parker emphasized that existing data center infrastructure is becoming dated and less efficient. “The data shows that it’s 10x more efficient to run workloads on accelerated computing platforms than on traditional data center platforms,” he said. “There’s a huge opportunity for us to reduce the energy consumed in existing infrastructures.”

The Path to Green Computing

AI has the potential to play a large role in moving toward climate-neutral economies, according to Deloitte’s study. This approach, often called Green AI, involves reducing the environmental impact of AI throughout the value chain with practices like purchasing renewable energy and improving hardware design.

Until now, Green AI has mostly been led by industry leaders. Take accelerated computing, for instance, which is all about doing more with less. It uses special hardware, such as GPUs, to perform tasks faster and with less energy than general-purpose servers that use CPUs, which handle one task at a time.

That’s why accelerated computing is sustainable computing.

“Accelerated computing is actually the most energy-efficient platform that we’ve seen for AI but also for a lot of other computing applications,” said Parker.

“The trend in energy efficiency for accelerated computing over the last several years shows a 100,000x reduction in energy consumption. And just in the past 2 years, we’ve become 25x more efficient for AI inference. That’s a 96% reduction in energy for the same computational workload,” he said.
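
The link between an efficiency multiple and a percentage reduction is simple arithmetic: if the same workload needs 1/N of the energy, the saving is 1 - 1/N. The short sketch below works that out for the two multiples quoted above; the `energy_reduction` helper is a name invented for this example.

```python
def energy_reduction(efficiency_gain):
    """Fraction of energy saved on the same workload after an N-fold efficiency gain."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


for gain in (25, 100_000):
    print(f"{gain:,}x more efficient -> {energy_reduction(gain):.3%} less energy")
```

A 25x gain leaves 1/25, or 4%, of the original energy use, which is where the 96% figure comes from.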

Reducing Energy Consumption Across Sectors

Innovations like the NVIDIA Blackwell and Hopper architectures significantly improve energy efficiency with each new generation. NVIDIA Blackwell is 25x more energy-efficient for large language models, and the NVIDIA H100 Tensor Core GPU is 20x more efficient than CPUs for complex workloads.

“AI has the potential to make other sectors much more energy efficient,” said Parker. Murex, a financial services firm, achieved a 4x reduction in energy use and 7x faster performance with the NVIDIA Grace Hopper Superchip.

“In manufacturing, we’re seeing around 30% reductions in energy requirements if you use AI to help optimize the manufacturing process through digital twins,” he said.

For example, manufacturing company Wistron improved energy efficiency using digital twins and NVIDIA Omniverse, a platform for developing OpenUSD applications for industrial digitalization and physical AI simulation. The company reduced its electricity consumption by 120,000 kWh and carbon emissions by 60,000 kg annually.

A Tool for Energy Management

Deloitte reports that AI can help optimize resource use and reduce emissions, playing a crucial role in energy management. This means it has the potential to lower the impact of industries beyond its own carbon footprint.

Combined with digital twins, AI is transforming energy management systems by improving the reliability of renewable sources like solar and wind farms. It’s also being used to optimize facility layouts, monitor equipment, stabilize power grids and predict climate patterns, aiding in global efforts to reduce carbon emissions.

COP29 discussions emphasized the importance of powering AI infrastructure with renewables and setting ethical guidelines. By innovating with the environment in mind, industries can use AI to build a more sustainable world.

Watch a replay of the on-demand COP29 panel discussion.

The U.S. Department of Energy oversees national energy policy and production. As big a job as that is, the DOE also does so much more, enough to have earned the nickname “Department of Everything.”

In this episode of the NVIDIA AI Podcast, Helena Fu, director of the DOE’s Office of Critical and Emerging Technologies (CET) and DOE’s chief AI officer, talks about the department’s latest AI efforts. With initiatives touching national security, infrastructure and utilities, and oversight of 17 national labs and 34 scientific user facilities dedicated to scientific discovery and industry innovation, DOE and CET are central to AI-related research and development throughout the country.

Hear more from Helena Fu by watching the on-demand session, AI for Science, Energy and Security, from AI Summit DC. And learn more about software-defined infrastructure for power and utilities.

Time Stamps

2:20: Four areas of focus for the CET include AI, microelectronics, quantum information science and biotechnology.

10:55: Introducing AI-related initiatives within the DOE, including FASST, or Frontiers in AI for Science, Security and Technology.

16:30: Discussing future applications of AI, large language models and more.

19:35: The opportunity of AI applied to materials discovery and applications across science, energy and national security.

You Might Also Like…

NVIDIA’s Josh Parker on How AI and Accelerated Computing Drive Sustainability – Ep. 234

AI isn’t just about building smarter machines. It’s about building a greener world. AI and accelerated computing are helping industries tackle some of the world’s toughest environmental challenges. Joshua Parker, senior director of corporate sustainability at NVIDIA, explains how these technologies are powering a new era of energy efficiency.

Currents of Change: ITIF’s Daniel Castro on Energy-Efficient AI and Climate Change

AI is everywhere. So, too, are concerns about advanced technology’s environmental impact. Daniel Castro, vice president of the Information Technology and Innovation Foundation and director of its Center for Data Innovation, discusses his AI energy use report that addresses misconceptions about AI’s energy consumption. He also talks about the need for continued development of energy-efficient technology.

How the Ohio Supercomputer Center Drives the Future of Computing – Ep. 213

The Ohio Supercomputer Center’s Open OnDemand program empowers the state’s educational institutions and industries with computational services, training and educational programs. They’ve even helped NASCAR simulate race car designs. Alan Chalker, the director of strategic programs at the OSC, talks about all things supercomputing.

Anima Anandkumar on Using Generative AI to Tackle Global Challenges – Ep. 204

Anima Anandkumar, Bren Professor at Caltech and former senior director of AI research at NVIDIA, speaks to generative AI’s potential to make splashes in the scientific community, from accelerating drug and vaccine research to predicting extreme weather events like hurricanes or heat waves.

Subscribe to the AI Podcast

Get the AI Podcast through iTunes, Google Play, Amazon Music, Castbox, DoggCatcher, Overcast, PlayerFM, Pocket Casts, Podbay, PodBean, PodCruncher, PodKicker, Soundcloud, Spotify, Stitcher and TuneIn.

Make the AI Podcast better: Have a few minutes to spare? Fill out this listener survey.

NVIDIA and Microsoft today unveiled product integrations designed to advance full-stack NVIDIA AI development on Microsoft platforms and applications.

At Microsoft Ignite, Microsoft announced the launch of the first cloud private preview of the Azure ND GB200 V6 VM series, based on the NVIDIA Blackwell platform. The Azure ND GB200 V6 is a new AI-optimized virtual machine (VM) series that combines the NVIDIA GB200 NVL72 rack design with NVIDIA Quantum InfiniBand networking.

In addition, Microsoft revealed that Azure Container Apps now supports NVIDIA GPUs, enabling simplified and scalable AI deployment. Plus, the NVIDIA AI platform on Azure includes new reference workflows for industrial AI and an NVIDIA Omniverse Blueprint for creating immersive, AI-powered visuals.

At Ignite, NVIDIA also announced multimodal small language models (SLMs) for RTX AI PCs and workstations, enhancing digital human interactions and virtual assistants with greater realism.

NVIDIA Blackwell Powers Next-Gen AI on Microsoft Azure

Microsoft’s new Azure ND GB200 V6 VM series will harness the powerful performance of NVIDIA GB200 Grace Blackwell Superchips, coupled with advanced NVIDIA Quantum InfiniBand networking. This offering is optimized for large-scale deep learning workloads to accelerate breakthroughs in natural language processing, computer vision and more.

The Blackwell-based VM series complements previously announced Azure AI clusters with ND H200 V5 VMs, which provide increased high-bandwidth memory for improved AI inferencing. The ND H200 V5 VMs are already being used by OpenAI to enhance ChatGPT.

Azure Container Apps Enables Serverless AI Inference With NVIDIA Accelerated Computing

Serverless computing provides AI application developers increased agility to rapidly deploy, scale and iterate on applications without worrying about underlying infrastructure. This enables them to focus on optimizing models and improving functionality while minimizing operational overhead.

The Azure Container Apps serverless containers platform simplifies deploying and managing microservices-based applications by abstracting away the underlying infrastructure.

Azure Container Apps now supports NVIDIA-accelerated workloads with serverless GPUs, allowing developers to use the power of accelerated computing for real-time AI inference applications in a flexible, consumption-based, serverless environment. This capability simplifies AI deployments at scale while improving resource efficiency and application performance without the burden of infrastructure management.

Serverless GPUs allow development teams to focus more on innovation and less on infrastructure management. With per-second billing and scale-to-zero capabilities, customers pay only for the compute they use, helping ensure resource utilization is both economical and efficient. NVIDIA is also working with Microsoft to bring NVIDIA NIM microservices to serverless NVIDIA GPUs in Azure to optimize AI model performance.
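To make the billing model concrete, here is a minimal sketch of how per-second billing with scale-to-zero compares with an always-on GPU. The rate, the request durations and both function names are hypothetical, and the model assumes each request is rounded up to a whole billed second with no idle cooldown; actual Azure Container Apps pricing and metering may differ.

```python
import math


def serverless_gpu_cost(request_durations_s, rate_per_gpu_second):
    """Cost when the GPU is billed only while it is serving requests.

    Assumption: each request is rounded up to a whole second and the
    service scales to zero between requests, so idle time costs nothing.
    """
    billed_seconds = sum(math.ceil(d) for d in request_durations_s)
    return billed_seconds * rate_per_gpu_second


def always_on_gpu_cost(window_s, rate_per_gpu_second):
    """Cost when a GPU instance is kept running for the whole window."""
    return window_s * rate_per_gpu_second


if __name__ == "__main__":
    rate = 0.001  # hypothetical price per GPU-second, not a real Azure rate
    durations = [1.2, 0.4, 3.0]  # hypothetical inference requests in one hour
    print("serverless:", serverless_gpu_cost(durations, rate))
    print("always on: ", always_on_gpu_cost(3600, rate))
```

The gap between the two figures grows as traffic becomes more bursty, which is the case scale-to-zero is meant for.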

NVIDIA Unveils Omniverse Reference Workflows for Advanced 3D Applications

NVIDIA announced reference workflows that help developers build 3D simulation and digital twin applications on NVIDIA Omniverse and Universal Scene Description (OpenUSD), accelerating industrial AI and advancing AI-driven creativity.

A reference workflow for 3D remote monitoring of industrial operations is coming soon to enable developers to connect physically accurate 3D models of industrial systems to real-time data from Azure IoT Operations and Power BI.

These two Microsoft services integrate with applications built on NVIDIA Omniverse and OpenUSD to provide solutions for industrial IoT use cases. This helps remote operations teams accelerate decision-making and optimize processes in production facilities.

The Omniverse Blueprint for precise visual generative AI enables developers to create applications that let nontechnical teams generate AI-enhanced visuals while preserving brand assets. The blueprint supports models like SDXL and Shutterstock Generative 3D to streamline the creation of on-brand, AI-generated images.

Leading creative groups, including Accenture Song, Collective, GRIP, Monks and WPP, have adopted this NVIDIA Omniverse Blueprint to personalize and customize imagery across markets.

Accelerating Gen AI for Windows With RTX AI PCs

NVIDIA’s collaboration with Microsoft extends to bringing AI capabilities to personal computing devices.

At Ignite, NVIDIA announced its new multimodal SLM, NVIDIA Nemovision-4B-Instruct, for understanding visual imagery in the real world and on screen. It’s coming soon to RTX AI PCs and workstations and will pave the way for more sophisticated and lifelike digital human interactions.

Plus, updates to NVIDIA TensorRT Model Optimizer (ModelOpt) offer Windows developers a path to optimize a model for ONNX Runtime deployment. TensorRT ModelOpt enables developers to create AI models for PCs that are faster and more accurate when accelerated by RTX GPUs. This enables large models to fit within the constraints of PC environments, while making it easy for developers to deploy across the PC ecosystem with ONNX Runtime.

RTX AI-enabled PCs and workstations offer enhanced productivity tools, creative applications and immersive experiences powered by local AI processing.

Full-Stack Collaboration for AI Development

NVIDIA’s extensive ecosystem of partners and developers brings a wealth of AI and high-performance computing options to the Azure platform.

SoftServe, a global IT consulting and digital services provider, today announced the availability of SoftServe Gen AI Industrial Assistant, based on the NVIDIA AI Blueprint for multimodal PDF data extraction, on the Azure marketplace. The assistant addresses critical challenges in manufacturing by using AI to enhance equipment maintenance and improve worker productivity.

At Ignite, AT&T will showcase how it’s using NVIDIA AI and Azure to enhance operational efficiency, boost employee productivity and drive business growth through retrieval-augmented generation and autonomous assistants and agents.

Learn more about NVIDIA and Microsoft’s collaboration and sessions at Ignite.

See notice regarding software product information.

Generative AI-powered laptops and PCs are unlocking advancements in gaming, content creation, productivity and development. Today, over 600 Windows apps and games are already running AI locally on more than 100 million GeForce RTX AI PCs worldwide, delivering fast, reliable and low-latency performance.

At Microsoft Ignite, NVIDIA and Microsoft announced tools to help Windows developers quickly build and optimize AI-powered apps on RTX AI PCs, making local AI more accessible. These new tools enable application and game developers to harness powerful RTX GPUs to accelerate complex AI workflows for applications such as AI agents, app assistants and digital humans.

RTX AI PCs Power Digital Humans With Multimodal Small Language Models

NVIDIA ACE is a suite of digital human technologies that brings life to agents, assistants and avatars. To achieve a higher level of understanding so that they can respond with greater context-awareness, digital humans must be able to visually perceive the world like humans do.

Enhancing digital human interactions with greater realism demands technology that enables perception and understanding of their surroundings with greater nuance. To achieve this, NVIDIA developed multimodal small language models that can process both text and imagery, excel in role-playing and are optimized for rapid response times.

The NVIDIA Nemovision-4B-Instruct model, soon to be available, uses the latest NVIDIA VILA and NVIDIA NeMo framework for distilling, pruning and quantizing to become small enough to perform on RTX GPUs with the accuracy developers need.

The model enables digital humans to understand visual imagery in the real world and on the screen to deliver relevant responses. Multimodality serves as the foundation for agentic workflows and offers a sneak peek into a future where digital humans can reason and take action with minimal assistance from a user.

NVIDIA is also introducing the Mistral NeMo Minitron 128k Instruct family, a suite of large-context small language models designed for optimized, efficient digital human interactions. Coming soon in 8B-, 4B- and 2B-parameter versions, these models offer flexible options for balancing speed, memory usage and accuracy on RTX AI PCs. They can handle large datasets in a single pass, eliminating the need for data segmentation and reassembly. Built in the GGUF format, these models enhance efficiency on low-power devices and support compatibility with multiple programming languages.

Turbocharge Gen AI With NVIDIA TensorRT Model Optimizer for Windows

When bringing models to PC environments, developers face the challenge of limited memory and compute resources for running AI locally. And they want to make models available to as many people as possible, with minimal accuracy loss.

Today, NVIDIA announced updates to NVIDIA TensorRT Model Optimizer (ModelOpt) to offer Windows developers an improved way to optimize models for ONNX Runtime deployment.

With the latest updates, TensorRT ModelOpt enables models to be optimized into an ONNX checkpoint for deploying the model within ONNX runtime environments — using GPU execution providers such as CUDA, TensorRT and DirectML.
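As an illustration of what choosing a GPU execution provider can look like at load time, the sketch below picks providers from whatever the local ONNX Runtime build reports. The provider strings and `get_available_providers()` are standard ONNX Runtime names; the preference order, the helper names and the `model.onnx` path are assumptions made for this example, not part of the announcement.

```python
# Assumed preference order: fastest GPU path first, CPU as the last resort.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "DmlExecutionProvider",  # DirectML
    "CPUExecutionProvider",
]


def pick_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that this machine actually offers."""
    chosen = [p for p in preferred if p in available]
    if not chosen:
        raise RuntimeError("no supported execution provider is available")
    return chosen


def available_providers():
    """Ask ONNX Runtime what it supports; fall back to CPU if not installed."""
    try:
        import onnxruntime as ort
    except ImportError:
        return ["CPUExecutionProvider"]
    return ort.get_available_providers()


if __name__ == "__main__":
    providers = pick_providers(available_providers())
    print(providers)
    # With onnxruntime installed and an optimized checkpoint on disk
    # (hypothetical path), a session would be created like this:
    #   import onnxruntime as ort
    #   session = ort.InferenceSession("model.onnx", providers=providers)
```

ONNX Runtime tries the providers in the order given, so keeping the CPU provider last leaves a working fallback on machines without a supported GPU.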

TensorRT ModelOpt includes advanced quantization algorithms, such as INT4 Activation-aware Weight Quantization (AWQ). Compared to other tools such as Olive, the new method reduces the memory footprint of the model and improves throughput performance on RTX GPUs.
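For readers new to INT4 quantization, the sketch below shows the basic idea with plain symmetric round-to-nearest quantization of a small group of weights. This is deliberately not Activation-aware Weight Quantization, which additionally uses activation statistics to protect the most important weights, and it is not how TensorRT ModelOpt is implemented. The function names are hypothetical.

```python
def quantize_int4(weights):
    """Map a group of float weights to integers in [-8, 7] plus one scale.

    Plain symmetric round-to-nearest; a simplified stand-in for the more
    advanced algorithms named in the text.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the 4-bit integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    group = [0.7, -0.3, 0.0, 0.12]
    q, scale = quantize_int4(group)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(group, restored))
    print("4-bit values:", q)
    print("largest rounding error:", worst)
```

Each weight is stored in 4 bits instead of 16, at the cost of a rounding error of at most half a scale step per weight. Smarter algorithms aim to shrink the effect of that error on model accuracy.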

During deployment, the models can have a memory footprint up to 2.6x smaller than that of FP16 models. This results in faster throughput, with minimal accuracy degradation, allowing them to run on a wider range of PCs.
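A rough back-of-the-envelope calculation shows what a reduction of that size could mean for weight storage. The sketch assumes 2 bytes per parameter for FP16, counts weights only (no activations or cache), and applies the quoted 2.6x as a best case. The parameter counts are illustrative round numbers and the function names are hypothetical.

```python
def fp16_weight_gib(num_params):
    """Approximate weight storage in GiB at 2 bytes per FP16 parameter."""
    return num_params * 2 / 2**30


def reduced_footprint_gib(fp16_gib, reduction_factor=2.6):
    """Footprint after applying a reduction factor (2.6x is the quoted best case)."""
    return fp16_gib / reduction_factor


if __name__ == "__main__":
    for billions in (2, 4, 8):  # illustrative model sizes
        baseline = fp16_weight_gib(billions * 10**9)
        optimized = reduced_footprint_gib(baseline)
        print(f"{billions}B params: {baseline:.1f} GiB FP16 -> {optimized:.1f} GiB")
```

Actual savings depend on the model, the quantization settings and the runtime overhead, so these figures are an upper-bound estimate rather than a measurement.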

Learn more about how developers on Microsoft systems, from Windows RTX AI PCs to NVIDIA Blackwell-powered Azure servers, are transforming how users interact with AI on a daily basis.

NVIDIA kicked off SC24 in Atlanta with a wave of AI and supercomputing tools set to revolutionize industries like biopharma and climate science.

The announcements, delivered by NVIDIA founder and CEO Jensen Huang and Vice President of Accelerated Computing Ian Buck, are rooted in the company’s deep history in transforming computing.

“Supercomputers are among humanity’s most vital instruments, driving scientific breakthroughs and expanding the frontiers of knowledge,” Huang said. “Twenty-five years after creating the first GPU, we have reinvented computing and sparked a new industrial revolution.”

NVIDIA’s journey in accelerated computing began with CUDA in 2006 and the first GPU for scientific computing, Huang said.

Milestones like Tokyo Tech’s Tsubame supercomputer in 2008, the Oak Ridge National Laboratory’s Titan supercomputer in 2012 and the AI-focused NVIDIA DGX-1 delivered to OpenAI in 2016 highlight NVIDIA’s transformative role in the field.

“Since CUDA’s inception, we’ve driven down the cost of computing by a millionfold,” Huang said. “For some, NVIDIA is a computational microscope, allowing them to see the impossibly small. For others, it’s a telescope exploring the unimaginably distant. And for many, it’s a time machine, letting them do their life’s work within their lifetime.”