Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for GeForce RTX PC and NVIDIA RTX workstation users.

Generative AI has transformed the way people bring ideas to life. Agentic AI takes this one step further — using sophisticated, autonomous reasoning and iterative planning to help solve complex, multi-step problems.

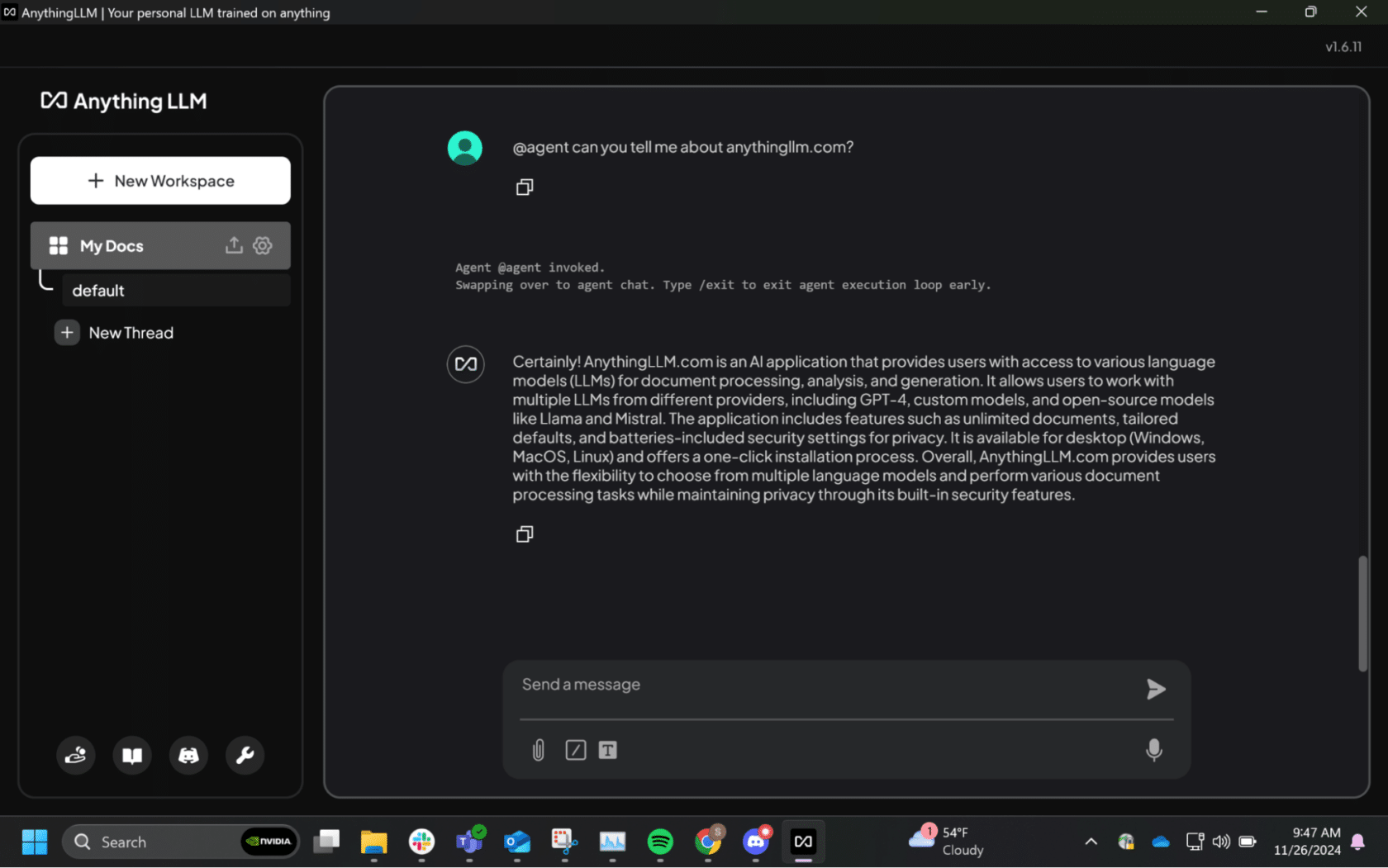

AnythingLLM is a customizable open-source desktop application that lets users seamlessly integrate large language model (LLM) capabilities into various applications locally on their PCs. It enables users to harness AI for tasks such as content generation, summarization and more, tailoring tools to meet specific needs.

Accelerated on NVIDIA RTX AI PCs, AnythingLLM has launched a new Community Hub where users can share prompts, slash commands and AI agent skills while experimenting with building and running AI agents locally.

Autonomously Solve Complex, Multi-Step Problems With Agentic AI

AI agents can take chatbot capabilities further. They typically understand the context of the tasks and can analyze challenges and develop strategies — and some can even fully execute assigned tasks.

For example, while a chatbot could answer a prompt asking for a restaurant recommendation, an AI agent could even surface the restaurant’s phone number for a reservation and add reminders to the user’s calendar.

Agents help achieve big-picture goals and don’t get bogged down at the task level. There are many agentic apps in development to tackle to-do lists, manage schedules, help organize tasks, automate email replies, recommend personalized workout plans or plan trips.

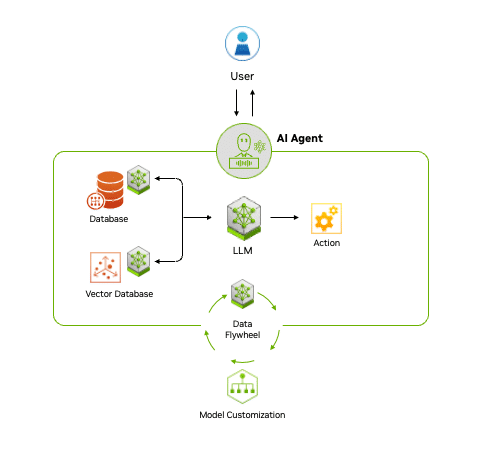

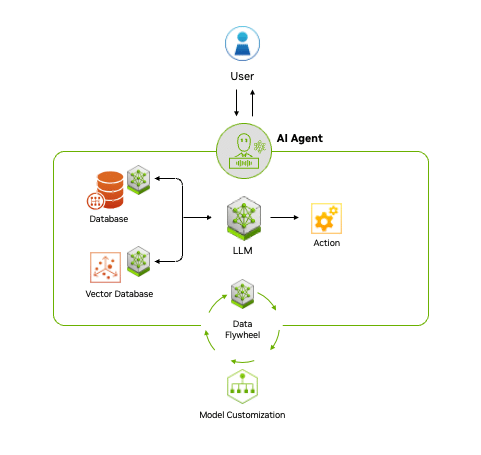

Once prompted, an AI agent can gather and process data from various sources, including databases. It can use an LLM for reasoning — for example, to understand the task — then generate solutions and specific functions. If integrated with external tools and software, an AI agent can next execute the task.
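The flow described above (reason about the task, generate steps, then execute them through tools) can be sketched in a few lines of Python. This is a minimal illustration, not how AnythingLLM or any specific agent framework works: the `plan` function stands in for the LLM reasoning step, and the tool names, the restaurant and the phone number are all made up for the example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would wrap APIs or local applications.
def lookup_phone(restaurant: str) -> str:
    directory = {"Luigi's": "555-0100"}  # made-up data for illustration
    return directory.get(restaurant, "unknown")

def add_reminder(text: str) -> str:
    return f"reminder added: {text}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_phone": lookup_phone,
    "add_reminder": add_reminder,
}

def plan(task: str) -> List[Tuple[str, str]]:
    """Stand-in for the LLM reasoning step: turn a task into (tool, argument) steps."""
    if "reservation" in task.lower():
        return [("lookup_phone", "Luigi's"), ("add_reminder", "Call Luigi's")]
    return []  # no known plan for this task

def run_agent(task: str) -> List[str]:
    """Execute each planned step with the matching tool and collect the results."""
    results = []
    for tool_name, argument in plan(task):
        results.append(TOOLS[tool_name](argument))
    return results

if __name__ == "__main__":
    print(run_agent("Make a dinner reservation"))
```

In a real agent, the planning step would be an LLM call that selects tools from their descriptions, which is why the tools are kept in a lookup table rather than called directly.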

Some sophisticated agents can even be improved through a feedback loop. When the data an agent generates is fed back into the system, the agent becomes smarter and faster.

Running on NVIDIA RTX AI PCs, these agents can perform inferencing and execute tasks faster than on any other PC. Users can operate the agent locally to help ensure data privacy, even without an internet connection.

AnythingLLM: A Community Effort, Accelerated by RTX

The AI community is already diving into the possibilities of agentic AI, experimenting with ways to create smarter, more capable systems.

Applications like AnythingLLM let developers easily build, customize and unlock agentic AI with their favorite models — like Llama and Mistral — as well as with other tools, such as Ollama and LMStudio. AnythingLLM is accelerated on RTX-powered AI PCs and workstations with high-performance Tensor Cores, dedicated hardware that provides the compute performance needed to run the latest and most demanding AI models.

AnythingLLM is designed to make working with AI seamless, productive and accessible to everyone. It allows users to chat with their documents using intuitive interfaces, use AI agents to handle complex and custom tasks, and run cutting-edge LLMs locally on RTX-powered PCs and workstations. This means unlocked access to local resources, tools and applications that typically can’t be integrated with cloud- or browser-based applications, or those that require extensive setup and knowledge to build. By tapping into the power of NVIDIA RTX GPUs, AnythingLLM delivers faster, smarter and more responsive AI for a variety of workflows — all within a single desktop application.

AnythingLLM’s Community Hub lets AI enthusiasts easily access system prompts that can help steer LLM behavior, discover productivity-boosting slash commands, build specialized AI agent skills for unique workflows and custom tools, and access on-device resources.

Some example agent skills that are available in the Community Hub include Microsoft Outlook email assistants, calendar agents, web searches and home assistant controllers, as well as agents for populating and even integrating custom application programming interface endpoints and services for a specific use case.

By enabling AI enthusiasts to download, customize and use agentic AI workflows on their own systems with full privacy, AnythingLLM is fueling innovation and making it easier to experiment with the latest technologies — whether building a spreadsheet assistant or tackling more advanced workflows.

Experience AnythingLLM now.

Powered by People, Driven by Innovation

AnythingLLM showcases how AI can go beyond answering questions to actively enhancing productivity and creativity. Such applications illustrate AI’s move toward becoming an essential collaborator across workflows.

Agentic AI’s potential applications are vast and require creativity, expertise and computing capabilities. NVIDIA RTX AI PCs deliver peak performance for running agents locally, whether accomplishing simple tasks like generating and distributing content, or managing more complex use cases such as orchestrating enterprise software.

Learn more and get started with agentic AI.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.

Editor’s note: This post is the first in the AI On blog series, which explores the latest techniques and real-world applications of agentic AI, chatbots and copilots. The series will also highlight the NVIDIA software and hardware powering advanced AI agents, which form the foundation of AI query engines that gather insights and perform tasks to transform everyday experiences and reshape industries.

Whether it’s getting a complex service claim resolved or having a simple purchase inquiry answered, customers expect timely, accurate responses to their requests.

AI agents can help organizations meet this need. And they can grow in scope and scale as businesses grow, helping keep customers from taking their business elsewhere.

AI agents can be used as virtual assistants, which use artificial intelligence and natural language processing to handle high volumes of customer service requests. By automating routine tasks, AI agents ease the workload on human agents, allowing them to focus on tasks requiring a more personal touch.

AI-powered customer service tools like chatbots have become table stakes across every industry looking to increase efficiency and keep buyers happy. According to a recent IDC study on conversational AI, 41% of organizations use AI-powered copilots for customer service and 60% have implemented them for IT help desks.

Now, many of those same industries are looking to adopt agentic AI, semi-autonomous tools that have the ability to perceive, reason and act on more complex problems.

How AI Agents Enhance Customer Service

A primary value of AI-powered systems is the time they free up by automating routine tasks. AI agents can perform specific tasks, or agentic operations, essentially becoming part of an organization’s workforce — working alongside humans who can focus on more complex customer issues.

AI agents can handle predictive tasks and problem-solve, can be trained to understand industry-specific terms and can pull relevant information from an organization’s knowledge bases, wherever that data resides.

With AI agents, companies can:

- Boost efficiency: AI agents handle common questions and repetitive tasks, allowing support teams to prioritize more complicated cases. This is especially useful during high-demand periods.

- Increase customer satisfaction: Faster, more personalized interactions result in happier and more loyal customers. Consistent and accurate support improves customer sentiment and experience.

- Scale easily: Equipped to handle high volumes of customer support requests, AI agents scale effortlessly with growing businesses, reducing customer wait times and resolving issues faster.

AI Agents for Customer Service Across Industries

AI agents are transforming customer service across sectors, helping companies enhance customer conversations, achieve high resolution rates and improve human representative productivity.

For instance, ServiceNow recently introduced IT and customer service management AI agents to boost productivity by autonomously solving many employee and customer issues. Its agents can understand context, create step-by-step resolutions and get live agent approvals when needed.

To improve patient care and reduce preprocedure anxiety, The Ottawa Hospital is using AI agents that have consistent, accurate and continuous access to information. The agents have the potential to improve patient care and reduce administrative tasks for doctors and nurses.

The city of Amarillo, Texas, uses a multilingual digital assistant named Emma to provide its residents with 24/7 support. Emma brings more effective and efficient disbursement of important information to all residents, including the one-quarter who don’t speak English.

AI agents meet current customer service demands while preparing organizations for the future.

Key Steps for Designing AI Virtual Assistants for Customer Support

AI agents for customer service come in a wide range of designs, from simple text-based virtual assistants that resolve customer issues, to animated avatars that can provide a more human-like experience.

Digital human interfaces can add warmth and personality to the customer experience. These agents respond with spoken language and even animated avatars, enhancing service interactions with a touch of real-world flair. A digital human interface lets companies customize the assistant’s appearance and tone, aligning it with the brand’s identity.

There are three key building blocks to creating an effective AI agent for customer service:

- Collect and organize customer data: AI agents need a solid base of customer data (such as profiles, past interactions, and transaction histories) to provide accurate, context-aware responses.

- Use memory functions for personalization: Advanced AI systems remember past interactions, allowing agents to deliver personalized support that feels human.

- Build an operations pipeline: Customer service teams should regularly review feedback and update the AI agent’s responses to ensure it’s always improving and aligned with business goals.
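As a rough illustration of the memory building block above, the sketch below keeps a short per-customer history and prepends it to each new question. It is a toy under stated assumptions: the `InteractionMemory` class, the `build_prompt` helper and the prompt layout are hypothetical, and a production system would persist history in a database instead of in process memory.

```python
from collections import defaultdict
from typing import Dict, List

class InteractionMemory:
    """Hypothetical in-memory store of each customer's most recent messages."""

    def __init__(self, max_turns: int = 3) -> None:
        self.max_turns = max_turns
        self._history: Dict[str, List[str]] = defaultdict(list)

    def remember(self, customer_id: str, message: str) -> None:
        self._history[customer_id].append(message)

    def context_for(self, customer_id: str) -> List[str]:
        # Return only the most recent turns so the prompt stays small.
        return self._history.get(customer_id, [])[-self.max_turns:]

def build_prompt(memory: InteractionMemory, customer_id: str, question: str) -> str:
    """Combine remembered interactions with the new question into one prompt."""
    lines = ["Previous interactions:"]
    lines += [f"- {message}" for message in memory.context_for(customer_id)]
    lines.append(f"Current question: {question}")
    return "\n".join(lines)

if __name__ == "__main__":
    memory = InteractionMemory(max_turns=2)
    memory.remember("c-42", "Asked about a late delivery")
    memory.remember("c-42", "Requested a refund")
    print(build_prompt(memory, "c-42", "Where is my refund?"))
```

Capping the history at a few turns is one simple way to keep responses context-aware without letting the prompt grow unbounded.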

Powering AI Agents With NVIDIA NIM Microservices

NVIDIA NIM microservices power AI agents by enabling natural language processing, contextual retrieval and multilingual communication. This allows AI agents to deliver fast, personalized and accurate support tailored to diverse customer needs.

Key NVIDIA NIM microservices for customer service agents include:

NVIDIA NIM for Large Language Models — Microservices that bring advanced language models to applications and enable complex reasoning, so AI agents can understand complicated customer queries.

NVIDIA NeMo Retriever NIM — Embedding and reranking microservices that support retrieval-augmented generation pipelines allow virtual assistants to quickly access enterprise knowledge bases and boost retrieval performance by ranking relevant knowledge-base articles and improving context accuracy.

NVIDIA NIM for Digital Humans — Microservices that enable intelligent, interactive avatars to understand speech and respond in a natural way. NVIDIA Riva NIM microservices for text-to-speech, automatic speech recognition (ASR), and translation services enable AI agents to communicate naturally across languages. The recently released Riva NIM microservices for ASR enable additional multilingual enhancements. To build realistic avatars, Audio2Face NIM converts streamed audio to facial movements for real-time lip syncing. 2D and 3D Audio2Face NIM microservices support varying use cases.
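To make the embed-then-rerank idea behind retrieval-augmented generation concrete, here is a small self-contained sketch. It does not call NeMo Retriever or any NIM API. As assumptions for illustration, it uses word counts as a stand-in for learned embeddings and query-term coverage as a stand-in for a reranking model, and the knowledge-base articles are invented.

```python
import math
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (real systems use learned embeddings)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """First stage: keep the k documents whose vectors are closest to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def rerank(query: str, candidates: List[str]) -> List[str]:
    """Second stage: reorder candidates by the fraction of query terms they contain."""
    q_terms = set(query.lower().split())
    if not q_terms:
        return list(candidates)
    def coverage(doc: str) -> float:
        return len(q_terms & set(doc.lower().split())) / len(q_terms)
    return sorted(candidates, key=coverage, reverse=True)

if __name__ == "__main__":
    articles = [
        "how to reset your password",
        "billing and refund policy",
        "reset the router to fix wifi",
    ]
    query = "reset password"
    print(rerank(query, retrieve(query, articles)))
```

The two-stage shape is the point of the example: a cheap similarity search narrows the knowledge base to a few candidates, and a more careful scorer then orders those candidates before they are passed to the language model as context.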

Getting Started With AI Agents for Customer Service

NVIDIA AI Blueprints make it easy to start building and setting up virtual assistants by offering ready-made workflows and tools to accelerate deployment. Whether for a simple AI-powered chatbot or a fully animated digital human interface, the blueprints offer resources to create AI assistants that are scalable, aligned with an organization’s brand and deliver a responsive, efficient customer support experience.

Editor’s note: IDC figures are sourced to IDC, Market Analysis Perspective: Worldwide Conversational AI Tools and Technologies, 2024 US51619524, Sept 2024

Since the advent of the computer age, industries have been so awash in stored data that most of it never gets put to use.

This data is estimated to be in the neighborhood of 120 zettabytes, the equivalent of 120 billion terabytes, or more than 120x the number of grains of sand on every beach around the globe. Now, the world’s industries are putting that untamed data to work by building and customizing large language models (LLMs).

As 2025 approaches, industries such as healthcare, telecommunications, entertainment, energy, robotics, automotive and retail are using those models, combining them with their proprietary data and gearing up to create AI that can reason.

The NVIDIA experts below focus on some of the industries that deliver $88 trillion worth of goods and services globally each year. They predict that AI that can harness data at the edge and deliver near-instantaneous insights is coming to hospitals, factories, customer service centers, cars and mobile devices near you.

But first, let’s hear AI’s predictions for AI. When asked, “What will be the top trends in AI in 2025 for industries?” both Perplexity and ChatGPT 4.0 responded that agentic AI sits atop the list alongside edge AI, AI cybersecurity and AI-driven robots.

Agentic AI is a new category of generative AI that operates virtually autonomously. It can make complex decisions and take actions based on continuous learning and analysis of vast datasets. Agentic AI is adaptable, has defined goals and can correct itself, and can chat with other AI agents or reach out to a human for help.

Now, hear from NVIDIA experts on what to expect in the year ahead:

Kimberly Powell

Vice President of Healthcare

Human-robotic interaction: Robots will assist human clinicians in a variety of ways, from understanding and responding to human commands, to performing and assisting in complex surgeries.

It’s being made possible by digital twins, simulation and AI that train and test robotic systems in virtual environments to reduce risks associated with real-world trials. It also can train robots to react in virtually any scenario, enhancing their adaptability and performance across different clinical situations.

New virtual worlds for training robots to perform complex tasks will make autonomous surgical robots a reality. These surgical robots will perform complex surgical tasks with precision, reducing patient recovery times and decreasing the cognitive workload for surgeons.

Digital health agents: The dawn of agentic AI and multi-agent systems will address the existential challenges of workforce shortages and the rising cost of care.

Administrative health services will become digital humans taking notes for you or making your next appointment — introducing an era of services delivered by software and birthing a service-as-a-software industry.

Patient experience will be transformed with always-on, personalized care services while healthcare staff will collaborate with agents that help them reduce clerical work, retrieve and summarize patient histories, and recommend clinical trials and state-of-the-art treatments for their patients.

Drug discovery and design AI factories: Just as ChatGPT can generate an email or a poem without putting a pen to paper for trial and error, generative AI models in drug discovery can liberate scientific thinking and exploration.

Techbio and biopharma companies have begun combining models that generate, predict and optimize molecules to explore the near-infinite possible target drug combinations before going into time-consuming and expensive wet lab experiments.

The drug discovery and design AI factories will consume all wet lab data, refine AI models and redeploy those models — improving each experiment by learning from the previous one. These AI factories will shift the industry from a discovery process to a design and engineering one.

Rev Lebaredian

Vice President of Omniverse and Simulation Technology

Let’s get physical (AI, that is): Getting ready for AI models that can perceive, understand and interact with the physical world is one challenge enterprises will race to tackle.

While LLMs require reinforcement learning largely in the form of human feedback, physical AI needs to learn in a “world model” that mimics the laws of physics. Large-scale physically based simulations are allowing the world to realize the value of physical AI through robots by accelerating the training of physical AI models and enabling continuous training in robotic systems across every industry.

Cheaper by the dozen: In addition to their smarts (or lack thereof), one big factor that has slowed adoption of humanoid robots has been affordability. As agentic AI brings new intelligence to robots, though, volume will pick up and costs will come down sharply. The average cost of industrial robots is expected to drop to $10,800 in 2025, down sharply from $46,000 in 2010 and $27,000 in 2017. As these devices become significantly cheaper, they’ll become as commonplace across industries as mobile devices are.

Deepu Talla

Vice President of Robotics and Edge Computing

Redefining robots: When people think of robots today, they usually picture autonomous mobile robots (AMRs), manipulator arms or humanoids. But tomorrow’s robots are set to be autonomous systems that perceive, reason, plan and act, then learn.

Soon we’ll be thinking of robots embodied everywhere from surgical rooms and data centers to warehouses and factories. Even traffic control systems or entire cities will be transformed from static, manually operated systems to autonomous, interactive systems embodied by physical AI.

The rise of small language models: To improve the functionality of robots operating at the edge, expect to see the rise of small language models that are energy-efficient and avoid latency issues associated with sending data to data centers. The shift to small language models in edge computing will improve inference in a range of industries, including automotive, retail and advanced robotics.

Kevin Levitt

Global Director of Financial Services

AI agents boost firm operations: AI-powered agents will be deeply integrated into the financial services ecosystem, improving customer experiences, driving productivity and reducing operational costs.

AI agents will take every form based on each financial services firm’s needs. Human-like 3D avatars will take requests and interact directly with clients, while text-based chatbots will summarize thousands of pages of data and documents in seconds to deliver accurate, tailored insights to employees across all business functions.

AI factories become table stakes: AI use cases in the industry are exploding. This includes improving identity verification for anti-money laundering and know-your-customer regulations, reducing false positives for transaction fraud and generating new trading strategies to improve market returns. AI also is automating document management, reducing funding cycles to help consumers and businesses on their financial journeys.

To capitalize on opportunities like these, financial institutions will build AI factories that use full-stack accelerated computing to maximize performance and utilization to build AI-enabled applications that serve hundreds, if not thousands, of use cases — helping set themselves apart from the competition.

AI-assisted data governance: Due to the sensitive nature of financial data and stringent regulatory requirements, governance will be a priority for firms as they use data to create reliable and legal AI applications, including for fraud detection, predictions and forecasting, real-time calculations and customer service.

Firms will use AI models to assist in the structure, control, orchestration, processing and utilization of financial data, making the process of complying with regulations and safeguarding customer privacy smoother and less labor intensive. AI will be the key to making sense of and deriving actionable insights from the industry’s stockpile of underutilized, unstructured data.

Richard Kerris

Vice President of Media and Entertainment

Let AI entertain you: AI will continue to revolutionize entertainment with hyperpersonalized content on every screen, from TV shows to live sports. Using generative AI and advanced vision-language models, platforms will offer immersive experiences tailored to individual tastes, interests and moods. Imagine teaser images and sizzle reels crafted to capture the essence of a new show or live event and create an instant personal connection.

In live sports, AI will enhance accessibility and cultural relevance, providing language dubbing, tailored commentary and local adaptations. AI will also elevate binge-watching by adjusting pacing, quality and engagement options in real time to keep fans captivated. This new level of interaction will transform streaming from a passive experience into an engaging journey that brings people closer to the action and each other.

AI-driven platforms will also foster meaningful connections with audiences by tailoring recommendations, trailers and content to individual preferences. AI’s hyperpersonalization will allow viewers to discover hidden gems, reconnect with old favorites and feel seen. For the industry, AI will drive growth and innovation, introducing new business models and enabling global content strategies that celebrate unique viewer preferences, making entertainment feel boundless, engaging and personally crafted.

Ronnie Vasishta

Senior Vice President of Telecoms

The AI connection: Telecommunications providers will begin to deliver generative AI applications and 5G connectivity over the same network. AI radio access network (AI-RAN) will enable telecom operators to transform traditional single-purpose base stations from cost centers into revenue-producing assets capable of providing AI inference services to devices, while more efficiently delivering the best network performance.

AI agents to the rescue: The telecommunications industry will be among the first to dial into agentic AI to perform key business functions. Telco operators will use AI agents for a wide variety of tasks, from suggesting money-saving plans to customers and troubleshooting network connectivity, to answering billing questions and processing payments.

More efficient, higher-performing networks: AI also will be used at the wireless network layer to enhance efficiency, deliver site-specific learning and reduce power consumption. Using AI as an intelligent performance improvement tool, operators will be able to continuously observe network traffic, predict congestion patterns and make adjustments before failures happen, allowing for optimal network performance.

Answering the call on sovereign AI: Nations will increasingly turn to telcos — which have proven experience managing complex, distributed technology networks — to achieve their sovereign AI objectives. The trend will spread quickly across Europe and Asia, where telcos in Switzerland, Japan, Indonesia and Norway are already partnering with national leaders to build AI factories that can use proprietary, local data to help researchers, startups, businesses and government agencies create AI applications and services.

Xinzhou Wu

Vice President of Automotive

Pedal to generative AI metal: Autonomous vehicles will become more performant as developers tap into advancements in generative AI. For example, harnessing foundation models, such as vision language models, provides an opportunity to use internet-scale knowledge to solve one of the hardest problems in the autonomous vehicle (AV) field, namely that of efficiently and safely reasoning through rare corner cases.

Simulation unlocks success: More broadly, new AI-based tools will enable breakthroughs in how AV development is carried out. For example, advances in generative simulation will enable the scalable creation of complex scenarios aimed at stress-testing vehicles for safety purposes. Aside from allowing for testing unusual or dangerous conditions, simulation is also essential for generating synthetic data to enable end-to-end model training.

Three-computer approach: Effectively, new advances in AI will catalyze AV software development across the three key computers underpinning AV development — one for training the AI-based stack in the data center, another for simulation and validation, and a third in-vehicle computer to process real-time sensor data for safe driving. Together, these systems will enable continuous improvement of AV software for enhanced safety and performance of cars, trucks, robotaxis and beyond.

Marc Spieler

Senior Managing Director of Global Energy Industry

Welcoming the smart grid: Do you know when your daily peak home electricity use occurs? You will soon, as utilities around the world embrace smart meters that use AI to broadly manage their grid networks, from big power plants and substations to, now, the home.

As the smart grid takes shape, smart meters — once deemed too expensive to be installed in millions of homes — that combine software, sensors and accelerated computing will alert utilities when trees in a backyard brush up against power lines or when to offer big rebates to buy back the excess power stored through rooftop solar installations.

Powering up: Delivering the optimal power stack has always been mission-critical for the energy industry. In the era of generative AI, utilities will address this issue in ways that reduce environmental impact.

Expect in 2025 to see a broader embrace of nuclear power as one clean-energy path the industry will take. Demand for natural gas also will grow as it replaces coal and other forms of energy. These resurgent forms of energy are being helped by the increased use of accelerated computing, simulation technology, AI and 3D visualization, which help optimize design, pipeline flows and storage. We’ll see the same happening at oil and gas companies, which are looking to reduce the impact of energy exploration and production.

Azita Martin

Vice President of Retail, Consumer-Packaged Goods and Quick-Service Restaurants

Software-defined retail: Supercenters and grocery stores will become software-defined, each running computer vision and sophisticated AI algorithms at the edge. The transition will accelerate checkout, optimize merchandising and reduce shrink — the industry term for a product being lost or stolen.

Each store will be connected to a headquarters AI network, using collective data to become a perpetual learning machine. Software-defined stores that continually learn from their own data will transform the shopping experience.

Intelligent supply chain: Intelligent supply chains created using digital twins, generative AI, machine learning and AI-based solvers will drive billions of dollars in labor productivity and operational efficiencies. Digital twin simulations of stores and distribution centers will optimize layouts to increase in-store sales and accelerate throughput in distribution centers.

Agentic robots working alongside associates will load and unload trucks, stock shelves and pack customer orders. Also, last-mile delivery will be enhanced with AI-based routing optimization solvers, allowing products to reach customers faster while reducing vehicle fuel costs.

Austin is drawing people to jobs, music venues, comedy clubs, barbecue and more. But with this boom has come a big-city blues: traffic jams.

Rekor, which offers traffic management and public safety analytics, has a front-row seat to the increasing traffic from an influx of new residents migrating to Austin. Rekor works with the Texas Department of Transportation, which has a $7 billion project addressing this, to help mitigate the roadway concerns.

“Texas has been trying to meet that growth and demand on the roadways by investing a lot in infrastructure, and they’re focusing a lot on digital infrastructure,” said Shervin Esfahani, vice president of global marketing and communications at Rekor. “It’s super complex, and they realized their traditional systems were unable to really manage and understand it in real time.”

Rekor, based in Columbia, Maryland, has been harnessing NVIDIA Metropolis for real-time video understanding and NVIDIA Jetson Xavier NX modules for edge AI in Texas, Florida, Philadelphia, Georgia, Nevada, Oklahoma and many more U.S. destinations as well as in Israel and other places internationally.

Metropolis is an application framework for smart infrastructure development with vision AI. It provides developer tools, including the NVIDIA DeepStream SDK, NVIDIA TAO Toolkit, pretrained models on the NVIDIA NGC catalog and NVIDIA TensorRT. NVIDIA Jetson is a compact, powerful and energy-efficient accelerated computing platform used for embedded and robotics applications.

Rekor’s efforts in Texas and Philadelphia to help better manage roads with AI are the latest development in an ongoing story for traffic safety and traffic management.

Reducing Rubbernecking, Pileups, Fatalities and Jams

Rekor offers two main products: Rekor Command and Rekor Discover. Command is an AI-driven platform for traffic management centers, providing rapid identification of traffic events and zones of concern. It provides departments of transportation with real-time situational awareness and alerts that allow them to keep city roadways safer and more congestion-free.

Discover taps into Rekor’s edge system to fully automate the capture of comprehensive traffic and vehicle data and provides robust traffic analytics that turn roadway data into measurable, reliable traffic knowledge. With Rekor Discover, departments of transportation can see a full picture of how vehicles move on roadways and the impact they make, allowing them to better organize and execute their future city-building initiatives.

The company has deployed Command across Austin to help detect issues, analyze incidents and respond to roadway activity with a real-time view.

“For every minute an incident happens and stays on the road, it creates four minutes of traffic, which puts a strain on the road, and the likelihood of a secondary incident like an accident from rubbernecking massively goes up,” said Paul-Mathew Zamsky, vice president of strategic growth and partnerships at Rekor. “Austin deployed Rekor Command and saw a 159% increase in incident detections, and they were able to respond eight and a half minutes faster to those incidents.”
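The two figures in that quote can be combined into a back-of-the-envelope estimate. The sketch below assumes, purely for illustration, that the four-to-one ratio holds linearly and that responding faster clears the incident correspondingly sooner; neither assumption comes from Rekor, and the function name is made up.

```python
# Figures taken from the quote above.
TRAFFIC_MINUTES_PER_INCIDENT_MINUTE = 4.0   # "creates four minutes of traffic"
RESPONSE_MINUTES_SAVED = 8.5                # "eight and a half minutes faster"

def congestion_minutes_avoided(minutes_saved: float, ratio: float) -> float:
    """Estimate traffic avoided per incident, assuming a simple linear relationship."""
    return minutes_saved * ratio

if __name__ == "__main__":
    avoided = congestion_minutes_avoided(
        RESPONSE_MINUTES_SAVED, TRAFFIC_MINUTES_PER_INCIDENT_MINUTE
    )
    print(f"Estimated traffic avoided per incident: {avoided:.0f} minutes")
```

Under those assumptions, an 8.5-minute faster response would correspond to roughly 34 fewer minutes of resulting traffic per incident.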

Rekor Command takes in many feeds of data — like traffic camera footage, weather, connected car info and construction updates — and taps into any other data infrastructure, as well as third-party data. It then uses AI to make connections and surface up anomalies, like a roadside incident. That information is presented in workflows to traffic management centers for review, confirmation and response.

“They look at it and respond to it, and they are doing it faster than ever before,” said Esfahani. “It helps save lives on the road, and it also helps people’s quality of life, helps them get home faster and stay out of traffic, and it reduces the strain on the system in the city of Austin.”

In addition to adopting NVIDIA’s full-stack accelerated computing for roadway intelligence, Rekor is going all in on NVIDIA AI and NVIDIA AI Blueprints, which are reference workflows for generative AI use cases, built with NVIDIA NIM microservices as part of the NVIDIA AI Enterprise software platform. NVIDIA NIM is a set of easy-to-use inference microservices for accelerating deployments of foundation models on any cloud or data center while keeping data secure.

Rekor has multiple large language models and vision language models running on NVIDIA Triton Inference Server in production, according to Shai Maron, senior vice president of global software and data engineering at Rekor.

“Internally, we’ll use it for data annotation, and it will help us optimize different aspects of our day to day,” he said. “LLMs externally will help us calibrate our cameras in a much more efficient way and configure them.”

Rekor is using the NVIDIA AI Blueprint for video search and summarization to build AI agents for city services, particularly in areas such as traffic management, public safety and optimization of city infrastructure. NVIDIA recently announced the blueprint, which enables a range of interactive visual AI agents that extract complex activities from massive volumes of live or archived video.

Philadelphia Monitors Roads, EV Charger Needs, Pollution

Philadelphia Navy Yard is a tourism hub run by the Philadelphia Industrial Development Corporation (PIDC), which faces challenges in managing roads and gathering data on new developments for the popular area. The Navy Yard, which occupies 1,200 acres, has more than 150 companies and 15,000 employees, and a $6 billion redevelopment plan there promises to bring 12,000-plus new jobs and thousands of new residents to the area.

PIDC sought greater visibility into the effects of road closures and construction projects on mobility and how to improve mobility during significant projects and events. PIDC also looked to strengthen the Navy Yard’s ability to understand the volume and traffic flow of car carriers or other large vehicles and quantify the impact of speed-mitigating devices deployed across hazardous stretches of roadway.

Discover provided PIDC insights into additional infrastructure projects that need to be deployed to manage any changes in traffic.

Understanding the number of electric vehicles, and where they’re entering and leaving the Navy Yard, gives PIDC clear insights into potential sites for future electric vehicle (EV) charge station deployment. Rekor Discover draws this data from Rekor’s edge systems, which are built with NVIDIA Jetson Xavier NX modules for powerful edge processing and AI.

Rekor Discover enabled PIDC planners to create a hotspot map of EV traffic by looking at data provided by the AI platform. The solution relies on real-time traffic analysis using NVIDIA’s DeepStream data pipeline and Jetson. Additionally, it uses NVIDIA Triton Inference Server to enhance LLM capabilities.

The PIDC wanted to address public safety issues related to speeding and collisions as well as decrease property damage. Using speed insights, it’s deploying traffic calming measures where average speeds are exceeding what’s ideal on certain segments of roadway.
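The idea of acting on speed insights can be sketched in a few lines. This is a minimal illustration rather than Rekor Discover's actual logic: the segment names, speed readings and target speed are all invented, and the function name is hypothetical.

```python
from statistics import mean

# Hypothetical per-segment speed observations in mph; the segment names,
# readings and target speed are invented for illustration.
observed_speeds = {
    "segment_a": [24, 27, 31, 29],
    "segment_b": [38, 41, 36, 44],
    "segment_c": [22, 25, 21, 26],
}
TARGET_SPEED_MPH = 30


def segments_needing_calming(speeds, target):
    """Return segments whose average observed speed exceeds the target."""
    return sorted(
        name for name, readings in speeds.items() if mean(readings) > target
    )


print(segments_needing_calming(observed_speeds, TARGET_SPEED_MPH))
# ['segment_b']
```

Only the segment whose average exceeds the target is flagged, which is the kind of shortlist a planner could use when deciding where to deploy traffic calming measures.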

NVIDIA Jetson Xavier NX to Monitor Pollution in Real Time

Traditionally, urban planners can look at satellite imagery to try to understand pollution locations, but Rekor’s vehicle recognition models, running on NVIDIA Jetson Xavier NX modules, were able to track it to the sources, taking it a step further toward mitigation.

“It’s about air quality,” said Shobhit Jain, senior vice president of product management at Rekor. “We’ve built models to be really good at that. They can know how much pollution each vehicle is putting out.”

Looking ahead, Rekor is examining how NVIDIA Omniverse might be used for digital twins development in order to simulate traffic mitigation with different strategies. Omniverse is a platform for developing OpenUSD applications for industrial digitalization and generative physical AI.

Developing digital twins with Omniverse for municipalities has enormous implications for reducing traffic, pollution and road fatalities — all areas Rekor sees as hugely beneficial to its customers.

“Our data models are granular, and we’re definitely exploring Omniverse,” said Jain. “We’d like to see how we can support those digital use cases.”

Learn about the NVIDIA AI Blueprint for building AI agents for video search and summarization.

Editor’s note: The name of NIM Agent Blueprints was changed to NVIDIA Blueprints in October 2024. All references to the name have been updated in this blog.

AI chatbots use generative AI to provide responses based on a single interaction. A person makes a query and the chatbot uses natural language processing to reply.

The next frontier of artificial intelligence is agentic AI, which uses sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems. And it’s set to enhance productivity and operations across industries.

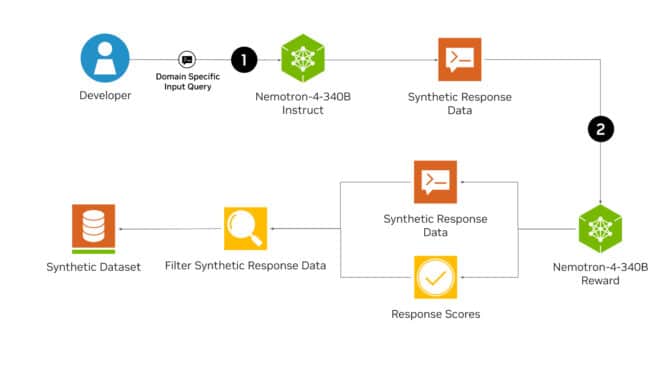

Agentic AI systems ingest vast amounts of data from multiple sources to independently analyze challenges, develop strategies and execute tasks like supply chain optimization, cybersecurity vulnerability analysis and helping doctors with time-consuming tasks.

Agentic AI uses sophisticated reasoning and iterative planning to solve complex, multi-step problems.

How Does Agentic AI Work?

Agentic AI uses a four-step process for problem-solving:

- Perceive: AI agents gather and process data from various sources, such as sensors, databases and digital interfaces. This involves extracting meaningful features, recognizing objects or identifying relevant entities in the environment.

- Reason: A large language model acts as the orchestrator, or reasoning engine, that understands tasks, generates solutions and coordinates specialized models for specific functions like content creation, vision processing or recommendation systems. This step uses techniques like retrieval-augmented generation (RAG) to access proprietary data sources and deliver accurate, relevant outputs.

- Act: By integrating with external tools and software via application programming interfaces, agentic AI can quickly execute tasks based on the plans it has formulated. Guardrails can be built into AI agents to help ensure they execute tasks correctly. For example, a customer service AI agent may be able to process claims up to a certain amount, while claims above the amount would have to be approved by a human.

- Learn: Agentic AI continuously improves through a feedback loop, or “data flywheel,” where the data generated from its interactions is fed into the system to enhance models. This ability to adapt and become more effective over time offers businesses a powerful tool for driving better decision-making and operational efficiency.
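The four steps above can be sketched as a small loop. This is a toy illustration under stated assumptions, not an NVIDIA implementation: a fixed rule stands in for the LLM reasoning step, a returned message stands in for an API call, and the class name, approval limit and claim fields are all hypothetical. The guardrail mirrors the customer service example, where claims above a set amount go to a human.

```python
from dataclasses import dataclass, field

# Hypothetical limit: claims above this amount need human approval.
AUTO_APPROVE_LIMIT = 500.0


@dataclass
class ClaimsAgent:
    """Toy agent that walks one claim through perceive, reason, act, learn."""

    feedback_log: list = field(default_factory=list)

    def perceive(self, raw_claim: dict) -> dict:
        # Perceive: pull out the fields the agent needs from incoming data.
        return {"id": raw_claim["id"], "amount": float(raw_claim["amount"])}

    def reason(self, claim: dict) -> str:
        # Reason: a real agent would call an LLM here; a fixed rule stands in.
        if claim["amount"] <= AUTO_APPROVE_LIMIT:
            return "approve"
        return "escalate_to_human"

    def act(self, claim: dict, plan: str) -> str:
        # Act: a real agent would call an external API; this returns a message.
        if plan == "approve":
            return f"claim {claim['id']} approved"
        return f"claim {claim['id']} sent for human review"

    def learn(self, claim: dict, plan: str) -> None:
        # Learn: record the interaction so it could later refine the models.
        self.feedback_log.append((claim["id"], plan))

    def handle(self, raw_claim: dict) -> str:
        claim = self.perceive(raw_claim)
        plan = self.reason(claim)
        result = self.act(claim, plan)
        self.learn(claim, plan)
        return result


agent = ClaimsAgent()
print(agent.handle({"id": "A1", "amount": "120.00"}))   # claim A1 approved
print(agent.handle({"id": "B2", "amount": "2500.00"}))  # claim B2 sent for human review
```

Keeping the guardrail inside the reasoning step means no action is taken on a large claim before a person has seen it, and the feedback log is the raw material for the data flywheel.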

Fueling Agentic AI With Enterprise Data

Across industries and job functions, generative AI is transforming organizations by turning vast amounts of data into actionable knowledge, helping employees work more efficiently.

AI agents build on this potential by accessing diverse data through accelerated AI query engines, which process, store and retrieve information to enhance generative AI models. A key technique for achieving this is RAG, which allows AI to tap into a broader range of data sources.
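To make the retrieval half of RAG concrete, here is a minimal sketch. It swaps in plain word-count cosine similarity where a production system would typically use learned embeddings and a vector database, and it stops at building the prompt instead of calling a model. The sample documents and all function names are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base standing in for enterprise data.
documents = [
    "Refunds are issued within five business days of approval.",
    "Routers should be restarted after a firmware update.",
    "Support hours are 9 a.m. to 5 p.m. on weekdays.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list) -> str:
    """Return the document most similar to the query."""
    q = tokenize(query)
    return max(docs, key=lambda d: cosine(q, tokenize(d)))


def build_prompt(query: str, docs: list) -> str:
    """Augment the question with retrieved context before generation."""
    return f"Context: {retrieve(query, docs)}\nQuestion: {query}"


print(build_prompt("When are refunds issued?", documents))
```

The generated prompt pairs the question with the refund policy sentence, so a model answering it would be grounded in the organization's own data.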

Over time, AI agents learn and improve by creating a data flywheel, where data generated through interactions is fed back into the system, refining models and increasing their effectiveness.

The end-to-end NVIDIA AI platform, including NVIDIA NeMo microservices, provides the ability to manage and access data efficiently, which is crucial for building responsive agentic AI applications.

Agentic AI in Action

The potential applications of agentic AI are vast, limited only by creativity and expertise. From simple tasks like generating and distributing content to more complex use cases such as orchestrating enterprise software, AI agents are transforming industries.

Customer Service: AI agents are improving customer support by enhancing self-service capabilities and automating routine communications. Over half of service professionals report significant improvements in customer interactions, reducing response times and boosting satisfaction.

There’s also growing interest in digital humans — AI-powered agents that embody a company’s brand and offer lifelike, real-time interactions to help sales representatives answer customer queries or solve issues directly when call volumes are high.

Content Creation: Agentic AI can help quickly create high-quality, personalized marketing content. Generative AI agents can save marketers an average of three hours per content piece, allowing them to focus on strategy and innovation. By streamlining content creation, businesses can stay competitive while improving customer engagement.

Software Engineering: AI agents are boosting developer productivity by automating repetitive coding tasks. It’s projected that by 2030 AI could automate up to 30% of work hours, freeing developers to focus on more complex challenges and drive innovation.

Healthcare: For doctors analyzing vast amounts of medical and patient data, AI agents can distill critical information to help them make better-informed care decisions. Automating administrative tasks and capturing clinical notes in patient appointments reduces the burden of time-consuming tasks, allowing doctors to focus on developing a doctor-patient connection.

AI agents can also provide 24/7 support, offering information on prescribed medication usage, appointment scheduling and reminders, and more to help patients adhere to treatment plans.

How to Get Started

With its ability to plan and interact with a wide variety of tools and software, agentic AI marks the next chapter of artificial intelligence, offering the potential to enhance productivity and revolutionize the way organizations operate.

To accelerate the adoption of generative AI-powered applications and agents, NVIDIA Blueprints provide sample applications, reference code, sample data, tools and comprehensive documentation.

NVIDIA partners including Accenture are helping enterprises use agentic AI with solutions built with NVIDIA Blueprints.

Visit ai.nvidia.com to learn more about the tools and software NVIDIA offers to help enterprises build their own AI agents.

Editor’s note: The name of NIM Agent Blueprints was changed to NVIDIA Blueprints in October 2024. All references to the name have been updated in this blog.

The U.S. healthcare system is adopting digital health agents to harness AI across the board, from research laboratories to clinical settings.

The latest AI-accelerated tools — on display at the NVIDIA AI Summit taking place this week in Washington, D.C. — include NVIDIA NIM, a collection of cloud-native microservices that support AI model deployment and execution, and NVIDIA Blueprints, a catalog of pretrained, customizable workflows.

These technologies are already in use in the public sector to advance the analysis of medical images, aid the search for new therapeutics and extract information from massive PDF databases containing text, tables and graphs.

For example, researchers at the National Cancer Institute, part of the National Institutes of Health (NIH), are using several AI models built with NVIDIA MONAI for medical imaging — including the VISTA-3D NIM foundation model for segmenting and annotating 3D CT images. A team at NIH’s National Center for Advancing Translational Sciences (NCATS) is using the NVIDIA Blueprint for generative AI-based virtual screening to reduce the time and cost of developing novel drug molecules.

With NVIDIA NIM and NVIDIA Blueprints, medical researchers across the public sector can jump-start their adoption of state-of-the-art, optimized AI models to accelerate their work. The pretrained models are customizable based on an organization’s own data and can be continually refined based on user feedback.

NIM microservices and NVIDIA Blueprints are available at ai.nvidia.com and accessible through a wide variety of cloud service providers, global system integrators and technology solutions providers.

Building With NVIDIA Blueprints

Dozens of NIM microservices and a growing set of NVIDIA Blueprints are available for developers to experience and download for free. They can be deployed in production with the NVIDIA AI Enterprise software platform.

- The blueprint for generative virtual screening for drug discovery brings together three NIM microservices to help researchers search and optimize libraries of small molecules to identify promising candidates that bind to a target protein.

- The multimodal PDF data extraction blueprint uses NVIDIA NeMo Retriever NIM microservices to extract insights from enterprise documents, helping developers build powerful AI agents and chatbots.

- The digital human blueprint supports the creation of interactive, AI-powered avatars for customer service. These avatars have potential applications in telehealth and nonclinical aspects of patient care, such as scheduling appointments, filling out intake forms and managing prescriptions.

Two new NIM microservices for drug discovery are now available on ai.nvidia.com to help researchers understand how proteins bind to target molecules, a crucial step in drug design. By conducting more of this preclinical research digitally, scientists can narrow down their pool of drug candidates before testing in the lab — making the discovery process more efficient and less expensive.

With the AlphaFold2-Multimer NIM microservice, researchers can accurately predict protein structures from their sequences in minutes, reducing the need for time-consuming tests in the lab. The RFdiffusion NIM microservice uses generative AI to design novel proteins that are promising drug candidates because they’re likely to bind with a target molecule.

NCATS Accelerates Drug Discovery Research

ASPIRE, a research laboratory at NCATS, is evaluating the NVIDIA Blueprint for virtual screening and is using RAPIDS, a suite of open-source software libraries for GPU-accelerated data science, to accelerate its drug discovery research. Using the cuGraph library for graph data analytics and cuDF library for accelerating data frames, the lab’s researchers can map chemical reactions across the vast unknown chemical space.

The NCATS informatics team reported that with NVIDIA AI, processes that used to take hours on CPU-based infrastructure are now done in seconds.

Massive quantities of healthcare data — including research papers, radiology reports and patient records — are unstructured and locked in PDF documents, making it difficult for researchers to quickly search for information.

The Genetic and Rare Diseases Information Center, also run by NCATS, is exploring using the PDF data extraction blueprint to develop generative AI tools that enhance the center’s ability to glean information from previously unsearchable databases. These tools will help answer questions from those affected by rare diseases.

“The center analyzes data sources spanning the National Library of Medicine, the Orphanet database and other institutes and centers within the NIH to answer patient questions,” said Sam Michael, chief information officer of NCATS. “AI-powered PDF data extraction can make it massively easier to extract valuable information from previously unsearchable databases.”

Mi-NIM-al Effort, Maximum Benefit: Getting Started With NIM

A growing number of startups, cloud service providers and global systems integrators include NVIDIA NIM microservices and NVIDIA Blueprints as part of their platforms and services, making it easy for federal healthcare researchers to get started.

Abridge, an NVIDIA Inception startup and NVentures portfolio company, was recently awarded a contract from the U.S. Department of Veterans Affairs to help transcribe and summarize clinical appointments, reducing the burden on doctors to document each patient interaction.

The company uses NVIDIA TensorRT-LLM to accelerate AI inference and NVIDIA Triton Inference Server for deploying its audio-to-text and content summarization models at scale, some of the same technologies that power NIM microservices.

The NVIDIA Blueprint for virtual screening is now available through AWS HealthOmics, a purpose-built service that helps customers orchestrate biological data analyses.

Amazon Web Services (AWS) is a partner of the NIH Science and Technology Research Infrastructure for Discovery, Experimentation, and Sustainability Initiative, aka STRIDES Initiative, which aims to modernize the biomedical research ecosystem by reducing economic and process barriers to accessing commercial cloud services. NVIDIA and AWS are collaborating to make NVIDIA Blueprints broadly accessible to the biomedical research community.

ConcertAI, another NVIDIA Inception member, is an oncology AI technology company focused on research and clinical standard-of-care solutions. The company is integrating NIM microservices, NVIDIA CUDA-X microservices and the NVIDIA NeMo platform into its suite of AI solutions for large-scale clinical data processing, multi-agent models and clinical foundation models.

NVIDIA NIM microservices are supporting ConcertAI’s high-performance, low-latency AI models through its CARA AI platform. Use cases include clinical trial design, optimization and patient matching — as well as solutions that can help boost the standard of care and augment clinical decision-making.

Global systems integrator Deloitte is bringing the NVIDIA Blueprint for virtual screening to its customers worldwide. With Deloitte Atlas AI, the company can help clients at federal health agencies easily use NIM to adopt and deploy the latest generative AI pipelines for drug discovery.

Experience NVIDIA NIM microservices and NVIDIA Blueprints today.

NVIDIA AI Summit Highlights Healthcare Innovation

At the NVIDIA AI Summit in Washington, NVIDIA leaders, customers and partners are presenting over 50 sessions highlighting impactful work in the public sector.

Register for a free virtual pass to hear how healthcare researchers are accelerating innovation with NVIDIA-powered AI in these sessions:

- Federal Healthcare Leadership Panel: The Growing Importance of AI to U.S. Government Health features leaders from NVIDIA, NIH, the U.S. Department of Health and Human Services and more.

- Boosting U.S. Innovation and Competitiveness in AI-Enabled Healthcare and Biology features Renee Wegrzyn, director of the Advanced Research Projects Agency for Health, and Rory Kelleher, global head of business development for life sciences at NVIDIA.

- Accelerated Computing Improves Translational Genomics Research features Justin Zook, coleader of the biomarker and genomic sciences group at the National Institute of Standards and Technology, and Laura Egolf, computational scientist at the National Cancer Institute’s Frederick National Laboratory for Cancer Research, discussing their use of NVIDIA Parabricks software for genomics research.

- Building a Specialized Interactive Foundation Model for 3D CT Segmentation features Baris Turkbey, senior clinician and head of MRI and Artificial Intelligence Resource in the National Cancer Institute’s Molecular Imaging Branch, and Pengfei Guo, applied research scientist at NVIDIA.

See notice regarding software product information.

The path to safe, widespread autonomous vehicles is going digital.

MITRE — a government-sponsored nonprofit research organization — today announced its partnership with Mcity at the University of Michigan to develop a virtual and physical autonomous vehicle (AV) validation platform for industry deployment.

As part of this collaboration, announced during the NVIDIA AI Summit in Washington, D.C., MITRE will use Mcity’s simulation tools and a digital twin of its Mcity Test Facility, a real-world AV test environment, in its Digital Proving Ground (DPG). The joint platform will deliver physically based sensor simulation enabled by NVIDIA Omniverse Cloud Sensor RTX APIs.

By combining these simulation capabilities with the MITRE DPG reporting framework, developers will be able to perform exhaustive testing in a simulated world to safely validate AVs before real-world deployment.

The current regulatory environment for AVs is highly fragmented, posing significant challenges for widespread deployment. Today, companies navigate regulations at various levels — city, state and the federal government — without a clear path to large-scale deployment. MITRE and Mcity aim to address this ambiguity with comprehensive validation resources open to the entire industry.

Mcity currently operates a 32-acre mock city for automakers and researchers to test their technology. Mcity is also building a digital framework around its physical proving ground to provide developers with AV data and simulation tools.

Raising Safety Standards

One of the largest gaps in the regulatory framework is the absence of universally accepted safety standards that the industry and regulators can rely on.

The lack of common standards leaves regulators with limited tools to verify AV performance and safety in a repeatable manner, while companies struggle to demonstrate the maturity of their AV technology. The ability to do so is crucial in the wake of public road incidents, where AV developers need to demonstrate the reliability of their software in a way that is acceptable to both industry and regulators.

Efforts like the National Highway Traffic Safety Administration’s New Car Assessment Program (NCAP) have been instrumental in setting benchmarks for vehicle safety in traditional automotive development. However, NCAP is insufficient for AV evaluation, where measures of safety go beyond crash tests to the complexity of real-time decision-making in dynamic environments.

Additionally, traditional road testing presents inherent limitations, as it exposes vehicles to real-world conditions but lacks the scalability needed to prove safety across a wide variety of edge cases. It’s particularly difficult to test rare and dangerous scenarios on public roads without significant risk.

By providing both physical and digital resources to validate AVs, MITRE and Mcity will be able to offer a safe, universally accessible solution that addresses the complexity of verifying autonomy.

Physically Based Sensor Simulation

A core piece of this collaboration is sensor simulation, which models the physics and behavior of cameras, lidars, radars and ultrasonic sensors on a physical vehicle, as well as how these sensors interact with their surroundings.

Sensor simulation enables developers to train against and test rare and dangerous scenarios — such as extreme weather conditions, sudden pedestrian crossings or unpredictable driver behavior — safely in virtual settings.

In collaboration with regulators, AV companies can use sensor simulation to recreate a real-world event, analyze their system’s response and evaluate how their vehicle performed — accelerating the validation process.

Moreover, simulation tests are repeatable, meaning developers can track improvements or regressions in the AV stack over time. This means AV companies can provide quantitative evidence to regulators to show that their system is evolving and addressing safety concerns.

Bridging Industry and Regulators

MITRE and its ecosystem are actively developing the Digital Proving Ground platform to facilitate industry-wide standards and regulations.

The platform will be an open and accessible national resource for accelerating safe AV development and deployment, providing a trusted simulation test environment.

Mcity will contribute simulation infrastructure, a digital twin and the ability to seamlessly connect virtual and physical worlds with NVIDIA Omniverse, an open platform enabling system developers to build physical AI and robotic system simulation applications. By integrating this virtual proving ground into DPG, the collaboration will also accelerate the development and use of advanced digital engineering and simulation for AV safety assurance.

Mcity’s simulation tools will connect to Omniverse Cloud Sensor RTX APIs and render a Universal Scene Description (USD) model of Mcity’s physical proving ground. DPG will be able to access this environment, simulate the behavior of vehicles and pedestrians in a realistic test environment and use the DPG reporting framework to explain how the AV performed.

This testing will then be replicated on the physical Mcity proving ground to create a comprehensive feedback loop.

The Road Ahead

As developers, automakers and regulators continue to collaborate, the industry is moving closer to a future where AVs can operate safely and at scale. The establishment of a repeatable testbed for validating safety — across real and simulated environments — will be critical to gaining public trust and regulatory approval, bringing the promise of AVs closer to reality.

Customer service departments across industries are facing increased call volumes, high customer service agent turnover, talent shortages and shifting customer expectations.

Customers expect both self-help options and real-time, person-to-person support. These expectations for seamless, personalized experiences extend across digital communication channels, including live chat, text and social media.

Despite the rise of digital channels, many consumers still prefer picking up the phone for support, placing strain on call centers. As companies strive to enhance the quality of customer interactions, operational efficiency and costs remain a significant concern.

To address these challenges, businesses are deploying AI-powered customer service software to boost agent productivity, automate customer interactions and harvest insights to optimize operations.

In nearly every industry, AI systems can help improve service delivery and customer satisfaction. Retailers are using conversational AI to help manage omnichannel customer requests, telecommunications providers are enhancing network troubleshooting, financial institutions are automating routine banking tasks, and healthcare facilities are expanding their capacity for patient care.

What Are the Benefits of AI for Customer Service?

With strategic deployment of AI, enterprises can transform customer interactions through intuitive problem-solving to build greater operational efficiencies and elevate customer satisfaction.

By harnessing customer data from support interactions, documented FAQs and other enterprise resources, businesses can develop AI tools that tap into their organization’s unique collective knowledge and experiences to deliver personalized service, product recommendations and proactive support.

Customizable, open-source generative AI technologies such as large language models (LLMs), combined with natural language processing (NLP) and retrieval-augmented generation (RAG), are helping industries accelerate the rollout of use-case-specific customer service AI. According to McKinsey, over 80% of customer care executives are already investing in AI or planning to do so soon.

With cost-efficient, customized AI solutions, businesses are automating management of help-desk support tickets, creating more effective self-service tools and supporting their customer service agents with AI assistants. This can significantly reduce operational costs and improve the customer experience.

Developing Effective Customer Service AI

For satisfactory, real-time interactions, AI-powered customer service software must return accurate, fast and relevant responses. Some tricks of the trade include:

Open-source foundation models can fast-track AI development. Developers can flexibly adapt and enhance these pretrained machine learning models, and enterprises can use them to launch AI projects without the high costs of building models from scratch.

RAG frameworks connect foundation or general-purpose LLMs to proprietary knowledge bases and data sources, including inventory management and customer relationship management systems and customer service protocols. Integrating RAG into conversational chatbots, AI assistants and copilots tailors responses to the context of customer queries.

Human-in-the-loop processes remain crucial to both AI training and live deployments. After initial training of foundation models or LLMs, human reviewers should judge the AI’s responses and provide corrective feedback. This helps to guard against issues such as hallucination, where the model generates false or misleading information, and other errors including toxicity or off-topic responses. This type of human involvement ensures fairness, accuracy and security are fully considered during AI development.

Human participation is even more important for AI in production. When an AI is unable to adequately resolve a customer question, the program must be able to route the call to customer support teams. This collaborative approach between AI and human agents ensures that customer engagement is efficient and empathetic.
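One simple way to picture this handoff is a confidence check before any answer goes out. The sketch below assumes the AI system exposes a confidence score between 0 and 1, which not every system does. The threshold value and all names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold below which the AI hands off to a person.
CONFIDENCE_THRESHOLD = 0.75


@dataclass
class DraftAnswer:
    text: str
    confidence: float  # assumed to be a score in [0, 1] from the AI system


def route(draft: DraftAnswer) -> str:
    """Send confident answers to the customer, the rest to a human agent."""
    if draft.confidence >= CONFIDENCE_THRESHOLD:
        return "send_to_customer"
    return "route_to_human_agent"


print(route(DraftAnswer("Your order ships Monday.", 0.92)))         # send_to_customer
print(route(DraftAnswer("I'm not sure about that policy.", 0.40)))  # route_to_human_agent
```

In practice the threshold would be tuned against reviewed conversations, trading a higher automation rate against the risk of sending a poor answer.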

What’s the ROI of Customer Service AI?

The return on investment of customer service AI should be measured primarily based on efficiency gains and cost reductions. To quantify ROI, businesses can measure key indicators such as reduced response times, decreased operational costs of contact centers, improved customer satisfaction scores and revenue growth resulting from AI-enhanced services.

For instance, the cost of implementing an AI chatbot using open-source models can be compared with the expenses incurred by routing customer inquiries through traditional call centers. Establishing this baseline helps assess the financial impact of AI deployments on customer service operations.

To solidify understanding of ROI before scaling AI deployments, companies can consider a pilot period. For example, by redirecting 20% of call center traffic to AI solutions for one or two quarters and closely monitoring the outcomes, businesses can obtain concrete data on performance improvements and cost savings. This approach helps prove ROI and informs decisions for further investment.
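The pilot comparison described above comes down to simple arithmetic, sketched here. The 20% share and one-quarter duration follow the example in the text. Every other figure is hypothetical, as is the choice to define ROI as net savings divided by the cost of the AI pilot.

```python
def pilot_roi(
    monthly_contacts: int,
    pilot_share: float,
    cost_per_human_contact: float,
    cost_per_ai_contact: float,
    pilot_months: int,
    pilot_fixed_cost: float,
) -> dict:
    """Compare the cost of AI-handled contacts with the call-center baseline."""
    ai_contacts = monthly_contacts * pilot_share * pilot_months
    baseline_cost = ai_contacts * cost_per_human_contact
    ai_cost = ai_contacts * cost_per_ai_contact + pilot_fixed_cost
    savings = baseline_cost - ai_cost
    return {
        "ai_contacts": ai_contacts,
        "savings": savings,
        "roi": savings / ai_cost,
    }


# Every figure below is hypothetical except the 20% share and one-quarter
# duration, which follow the pilot described in the text.
result = pilot_roi(
    monthly_contacts=100_000,
    pilot_share=0.20,
    cost_per_human_contact=6.00,
    cost_per_ai_contact=1.00,
    pilot_months=3,
    pilot_fixed_cost=90_000.00,
)
print(result)
```

With these made-up inputs, 60,000 contacts move to AI, net savings come to 210,000 and ROI works out to 1.4. A real pilot would also track response times and satisfaction scores alongside cost.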

Businesses across industries are using AI for customer service and measuring their success:

Retailers Reduce Call Center Load

Modern shoppers expect smooth, personalized and efficient shopping experiences, whether in store or on an e-commerce site. Customers of all generations continue prioritizing live human support, while also desiring the option to use different channels. But complex customer issues coming from a diverse customer base can make it difficult for support agents to quickly comprehend and resolve incoming requests.

To address these challenges, many retailers are turning to conversational AI and AI-based call routing. According to NVIDIA’s 2024 State of AI in Retail and CPG report, nearly 70% of retailers believe that AI has already boosted their annual revenue.

CP All, Thailand’s sole licensed operator for 7-Eleven convenience stores, has implemented conversational AI chatbots in its call centers, which rack up more than 250,000 calls per day. Training the bots presented unique challenges due to the complexities of the Thai language, which includes 21 consonants, 18 pure vowels, three diphthongs and five tones.

To manage this, CP All used NVIDIA NeMo, a framework designed for building, training and fine-tuning GPU-accelerated speech and natural language understanding models. With automatic speech recognition and NLP models powered by NVIDIA technologies, CP All’s chatbot achieved a 97% accuracy rate in understanding spoken Thai.

With the conversational chatbot handling a significant number of customer conversations, the call load on human agents was reduced by 60%. This allowed customer service teams to focus on more complex tasks. The chatbot also helped reduce wait times and provided quicker, more accurate responses, leading to higher customer satisfaction levels.

With AI-powered support experiences, retailers can enhance customer retention, strengthen brand loyalty and boost sales.

Telecommunications Providers Automate Network Troubleshooting

Telecommunications providers are challenged to address complex network issues while adhering to service-level agreements with end customers for network uptime. Maintaining network performance requires rapid troubleshooting of network devices, pinpointing root causes and resolving difficulties at network operations centers.

With its ability to analyze vast amounts of data, troubleshoot network problems autonomously and execute numerous tasks simultaneously, generative AI is ideal for network operations centers. According to an IDC survey, 73% of global telcos have prioritized AI and machine learning investments for operational support as their top transformation initiative, underscoring the industry’s shift toward AI and advanced technologies.

Infosys, a leader in next-generation digital services and consulting, has built AI-driven solutions to help its telco partners overcome customer service challenges. Using NVIDIA NIM microservices and RAG, Infosys developed an AI chatbot to support network troubleshooting.

By offering quick access to essential, vendor-agnostic router commands for diagnostics and monitoring, the generative AI-powered chatbot significantly reduces network resolution times, enhancing overall customer support experiences.

To ensure accuracy and contextual responses, Infosys trained the generative AI solution on telecom device-specific manuals, training documents and troubleshooting guides. Using NVIDIA NeMo Retriever to query enterprise data, Infosys achieved 90% accuracy for its LLM output. By fine-tuning and deploying models with NVIDIA technologies, Infosys achieved a latency of 0.9 seconds, a 61% reduction compared with its baseline model. The RAG-enabled chatbot powered by NeMo Retriever also attained 92% accuracy, compared with the baseline model’s 85%.
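To put the reported figures in context, the short sketch below works backward from them. It assumes the 61% reduction is measured relative to the baseline model's latency and that both accuracy figures come from the same evaluation set. Neither assumption is stated in the source, and the function name is illustrative.

```python
def implied_baseline(new_value: float, reduction_fraction: float) -> float:
    """Return the starting value implied by a value reached after a relative reduction."""
    if not 0.0 <= reduction_fraction < 1.0:
        raise ValueError("reduction_fraction must be in [0, 1)")
    return new_value / (1.0 - reduction_fraction)


# A latency of 0.9 s after a 61% reduction implies a baseline of about 2.3 s.
baseline_latency_s = implied_baseline(0.9, 0.61)

# 92% versus 85% accuracy is a gain of 7 percentage points.
accuracy_gain_points = 92 - 85

# Expressed as error rate, errors fall from 15% to 8%, a relative drop of 7/15.
relative_error_reduction = ((100 - 85) - (100 - 92)) / (100 - 85)

if __name__ == "__main__":
    print(f"implied baseline latency: {baseline_latency_s:.2f} s")
    print(f"accuracy gain: {accuracy_gain_points} percentage points")
    print(f"relative error reduction: {relative_error_reduction:.0%}")
```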

With AI tools supporting network administrators, IT teams and customer service agents, telecom providers can more efficiently identify and resolve network issues.

Financial Services Institutions Pinpoint Fraud With Ease

While customers expect anytime, anywhere banking and support, financial services require a heightened level of data sensitivity. And unlike other industries that may include one-off purchases, banking is typically based on ongoing transactions and long-term customer relationships.

At the same time, user loyalty can be fleeting, with up to 80% of banking customers willing to switch institutions for a better experience. Financial institutions must continuously improve their support experiences and update their analyses of customer needs and preferences.

Many banks are turning to AI virtual assistants that can interact directly with customers to manage inquiries, execute transactions and escalate complex issues to human customer support agents. According to NVIDIA’s 2024 State of AI in Financial Services report, more than one-fourth of survey respondents are using AI to enhance customer experiences, and 34% are exploring the use of generative AI and LLMs for customer experience and engagement.

Bunq, a European digital bank with more than 2 million customers and 8 billion euros worth of deposits, is deploying generative AI to meet user needs. With proprietary LLMs, the company built Finn, a personal AI assistant available to both customers and bank employees. Finn can answer finance-related inquiries such as “How much did I spend on groceries last month?” or “What is the name of the Indian restaurant I ate at last week?”

Plus, with a human-in-the-loop process, Finn helps employees more quickly identify fraud. By collecting and analyzing data for compliance officers to review, bunq now identifies fraud in just three to seven minutes, down from 30 minutes without Finn.

By deploying AI tools that can use data to protect customer transactions, execute banking requests and act on customer feedback, financial institutions can serve customers at a higher level, building the trust and satisfaction necessary for long-term relationships.

Healthcare and Life Sciences Organizations Overcome Staffing Shortages

In healthcare, patients need quick access to medical expertise, precise and tailored treatment options, and empathetic interactions with healthcare professionals. But with the World Health Organization estimating a 10 million personnel shortage by 2030, access to quality care could be jeopardized.

AI-powered digital healthcare assistants are helping medical institutions do more with less. With LLMs trained on specialized medical corpuses, AI copilots can save physicians and nurses hours of daily work by helping with clinical note-taking, automating order-placing for prescriptions and lab tests, and following up with after-visit patient notes.

Multimodal AI that combines language and vision models can make healthcare settings safer by extracting insights and providing summaries of image data for patient monitoring. For example, such technology can alert staff of patient fall risks and other patient room hazards.

To support healthcare professionals, Hippocratic AI has trained a generative AI healthcare agent to perform low-risk, non-diagnostic routine tasks, like reminding patients of necessary appointment prep and following up after visits to make sure medication routines are being followed and no adverse side effects are being experienced.

Hippocratic AI trained its models on evidence-based medicine and completed rigorous testing with a large group of certified nurses and doctors. The constellation architecture of the solution comprises 20 models, one of which communicates with patients while the other 19 supervise its output. The complete system contains 1.7 trillion parameters.

The possibility of every doctor and patient having their own AI-powered digital healthcare assistant means reduced clinician burnout and higher-quality medical care.

Raising the Bar for Customer Experiences With AI

By integrating AI into customer service interactions, businesses can offer more personalized, efficient and prompt service, setting new standards for omnichannel support experiences across platforms. With AI virtual assistants that process vast amounts of data in seconds, enterprises can equip their support agents to deliver tailored responses to the complex needs of a diverse customer base.

To develop and deploy effective customer service AI, businesses can fine-tune AI models and deploy RAG solutions to meet diverse and specific needs.
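The retrieval step of a RAG solution can be illustrated with a toy example. The sketch below uses only the Python standard library and a simple word-count cosine similarity. It does not use NVIDIA NIM or NeMo Retriever, and the sample documents, function names and prompt wording are all hypothetical. A production system would typically use learned embeddings and a vector database instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model's answer in retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical support knowledge base, for illustration only.
DOCS = [
    "Refunds are issued to the original payment method within five business days.",
    "Store hours are 9 a.m. to 9 p.m. on weekdays.",
    "To reset a password, select 'Forgot password' on the sign-in page.",
]

if __name__ == "__main__":
    # The resulting prompt would then be sent to an LLM of the business's choice.
    print(build_prompt("How do I reset my password?", DOCS, k=1))
```

Grounding the prompt in retrieved passages is what lets a general-purpose model answer from a business's own documentation without being retrained on it.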

NVIDIA offers a suite of tools and technologies to help enterprises get started with customer service AI.

NVIDIA NIM microservices, part of the NVIDIA AI Enterprise software platform, accelerate generative AI deployment and support various optimized AI models for seamless, scalable inference. NVIDIA NIM Agent Blueprints provide developers with packaged reference examples to build innovative solutions for customer service applications.

By taking advantage of AI development tools, enterprises can build accurate and high-speed AI applications to transform employee and customer experiences.

Learn more about improving customer service with generative AI.

Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and showcases new hardware, software, tools and accelerations for RTX PC users.

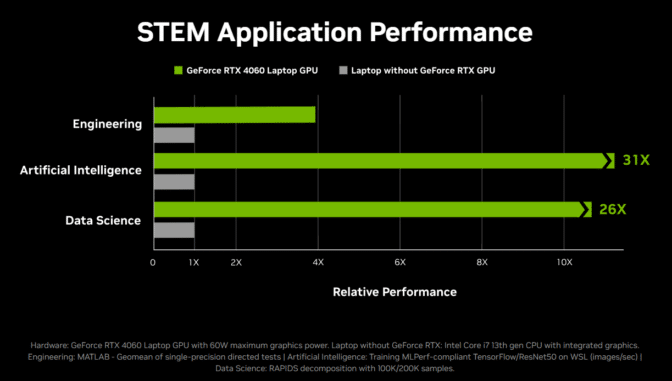

AI powered by NVIDIA GPUs is accelerating nearly every industry, creating high demand for graduates, especially from STEM fields, who are proficient in using the technology. Millions of students worldwide are participating in university STEM programs to learn skills that will set them up for career success.

To prepare students for the future job market, NVIDIA has worked with top universities to develop a GPU-accelerated AI curriculum that’s now taught in more than 5,000 schools globally. Students can get a jumpstart outside of class with NVIDIA’s AI Learning Essentials, a set of resources that equips individuals with the necessary knowledge, skills and certifications for the rapidly evolving AI workforce.

NVIDIA GPUs — whether running in university data centers, GeForce RTX laptops or NVIDIA RTX workstations — are accelerating studies, helping enhance the learning experience and enabling students to gain hands-on experience with hardware used widely in real-world applications.

Supercharged AI Studies

NVIDIA provides several tools to help students accelerate their studies.

The RTX AI Toolkit is a powerful resource for students looking to develop and customize AI models for projects in computer science, data science, and other STEM fields. It allows students to train and fine-tune the latest generative AI models, including Gemma, Llama 3 and Phi 3, up to 30x faster — enabling them to iterate and innovate more efficiently, advancing their studies and research projects.

Students studying data science and economics can use NVIDIA RAPIDS AI and data science software libraries to run traditional machine learning models up to 25x faster than conventional methods, helping them handle large datasets more efficiently, perform complex analyses in record time and gain deeper insights from data.

AI-deal for Robotics, Architecture and Design

Students studying robotics can tap the NVIDIA Isaac platform for developing, testing and deploying AI-powered robotics applications. Powered by NVIDIA GPUs, the platform consists of NVIDIA-accelerated libraries, application frameworks and AI models that supercharge the development of AI-powered robots like autonomous mobile robots, arms and manipulators, and humanoids.

While GPUs have long been used for 3D design, modeling and simulation, their role has significantly expanded with the advancement of AI. GPUs are today used to run AI models that dramatically accelerate rendering processes.

Some industry-standard design tools powered by NVIDIA GPUs and AI include:

- SOLIDWORKS Visualize: This 3D computer-aided design rendering software uses NVIDIA OptiX AI-powered denoising to produce high-quality ray-traced visuals, streamlining the design process by providing faster, more accurate visual feedback.

- Blender: This popular 3D creation suite uses NVIDIA OptiX AI-powered denoising to deliver stunning ray-traced visuals, significantly accelerating content creation workflows.

- D5 Render: Commonly used by architects, interior designers and engineers, D5 Render incorporates NVIDIA DLSS technology for real-time viewport rendering, enabling smoother, more detailed visualizations without sacrificing performance. Powered by fourth-generation Tensor Cores and the NVIDIA Optical Flow Accelerator on GeForce RTX 40 Series GPUs and NVIDIA RTX Ada Generation GPUs, DLSS uses AI to create additional frames and improve image quality.

- Enscape: Enscape makes it possible to ray trace more geometry at a higher resolution, at exactly the same frame rate. It uses DLSS to enhance real-time rendering capabilities, providing architects and designers with seamless, high-fidelity visual previews of their projects.

Beyond STEM

Students, hobbyists and aspiring artists use the NVIDIA Studio platform to supercharge their creative processes with RTX and AI. RTX GPUs power creative apps such as Adobe Creative Cloud, Autodesk, Unity and more, accelerating a variety of processes such as exporting videos and rendering art.

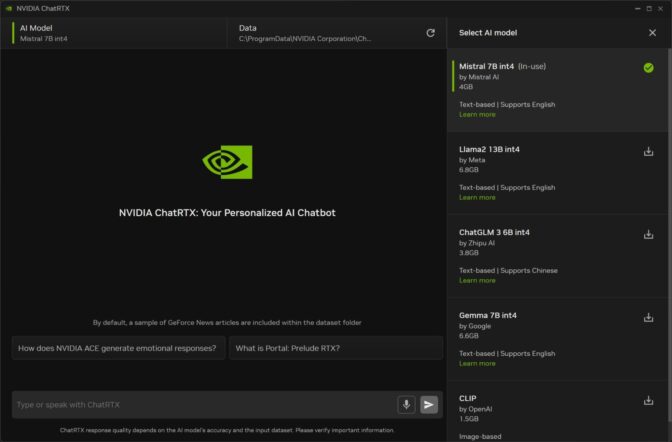

ChatRTX is a demo app that lets students create a personalized GPT large language model connected to their own content and study materials, including text, images or other data. Powered by advanced AI, ChatRTX functions like a personalized chatbot that can quickly provide students relevant answers to questions based on their connected content. The app runs locally on a Windows RTX PC or workstation, meaning students can get fast, secure results personalized to their needs.

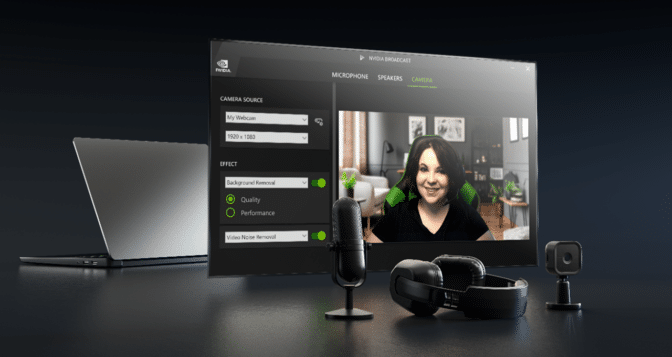

Schools are increasingly adopting remote learning as a teaching modality. NVIDIA Broadcast — a free application that delivers professional-level audio and video with AI-powered features on RTX PCs and workstations — integrates seamlessly with remote learning applications including BlueJeans, Discord, Google Meet, Microsoft Teams, Webex and Zoom. It uses AI to enhance remote learning experiences by removing background noise, improving image quality in low-light scenarios, and enabling background blur and background replacement.

From Data Centers to School Laptops

NVIDIA RTX-powered mobile workstations and GeForce RTX and Studio RTX 40 Series laptops offer supercharged development, learning, gaming and creating experiences with AI-enabled tools and apps. They also include exclusive access to the NVIDIA Studio platform of creative tools and technologies, and Max-Q technologies that optimize battery life and acoustics — giving students an ideal platform for all aspects of campus life.

Say goodbye to late nights in the computer lab — GeForce RTX laptops and NVIDIA RTX workstations share the same architecture as the NVIDIA GPUs powering many university labs and data centers. That means students can study, create and play — all on the same PC.

Learn more about GeForce RTX laptops and NVIDIA RTX workstations.

Generative AI is unlocking new ways for enterprises to engage customers through digital human avatars.

At SIGGRAPH, NVIDIA previewed “James,” an interactive digital human that can connect with people using emotions, humor and more. James is based on a customer-service workflow using NVIDIA ACE, a reference design for creating custom, hyperrealistic, interactive avatars.

Users can interact with James in real time at ai.nvidia.com.

At the computer graphics conference, NVIDIA also showcased the latest advancements to the NVIDIA Maxine AI platform, including Maxine 3D and Audio2Face-2D, for an immersive telepresence experience.